* [infer] Infer/llama demo (#4503)

* add

* add infer example

* finish

* finish

* stash

* fix

* [Kernels] add inference token attention kernel (#4505)

* add token forward

* fix tests

* fix comments

* add try import triton

* add adapted license

* add tests check

* [Kernels] add necessary kernels (llama & bloom) for attention forward and kv-cache manager (#4485)

* added _vllm_rms_norm

* change place

* added tests

* added tests

* modify

* adding kernels

* added tests:

* adding kernels

* modify

* added

* updating kernels

* adding tests

* added tests

* kernel change

* submit

* modify

* added

* edit comments

* change name

* change commnets and fix import

* add

* added

* combine codes (#4509)

* [feature] add KV cache manager for llama & bloom inference (#4495)

* add kv cache memory manager

* add stateinfo during inference

* format

* format

* rename file

* add kv cache test

* revise on BatchInferState

* file dir change

* [Bug FIx] import llama context ops fix (#4524)

* added _vllm_rms_norm

* change place

* added tests

* added tests

* modify

* adding kernels

* added tests:

* adding kernels

* modify

* added

* updating kernels

* adding tests

* added tests

* kernel change

* submit

* modify

* added

* edit comments

* change name

* change commnets and fix import

* add

* added

* fix

* add ops into init.py

* add

* [Infer] Add TPInferEngine and fix file path (#4532)

* add engine for TP inference

* move file path

* update path

* fix TPInferEngine

* remove unused file

* add engine test demo

* revise TPInferEngine

* fix TPInferEngine, add test

* fix

* Add Inference test for llama (#4508)

* add kv cache memory manager

* add stateinfo during inference

* add

* add infer example

* finish

* finish

* format

* format

* rename file

* add kv cache test

* revise on BatchInferState

* add inference test for llama

* fix conflict

* feature: add some new features for llama engine

* adapt colossalai triton interface

* Change the parent class of llama policy

* add nvtx

* move llama inference code to tensor_parallel

* fix __init__.py

* rm tensor_parallel

* fix: fix bugs in auto_policy.py

* fix:rm some unused codes

* mv colossalai/tpinference to colossalai/inference/tensor_parallel

* change __init__.py

* save change

* fix engine

* Bug fix: Fix hang

* remove llama_infer_engine.py

---------

Co-authored-by: yuanheng-zhao <jonathan.zhaoyh@gmail.com>

Co-authored-by: CjhHa1 <cjh18671720497@outlook.com>

* [infer] Add Bloom inference policy and replaced methods (#4512)

* add bloom inference methods and policy

* enable pass BatchInferState from model forward

* revise bloom infer layers/policies

* add engine for inference (draft)

* add test for bloom infer

* fix bloom infer policy and flow

* revise bloom test

* fix bloom file path

* remove unused codes

* fix bloom modeling

* fix dir typo

* fix trivial

* fix policy

* clean pr

* trivial fix

* Revert "[infer] Add Bloom inference policy and replaced methods (#4512)" (#4552)

This reverts commit

|

||

|---|---|---|

| .. | ||

| tensor_parallel | ||

| README.md | ||

| __init__.py | ||

README.md

🚀 Colossal-Inference

Table of contents

Introduction

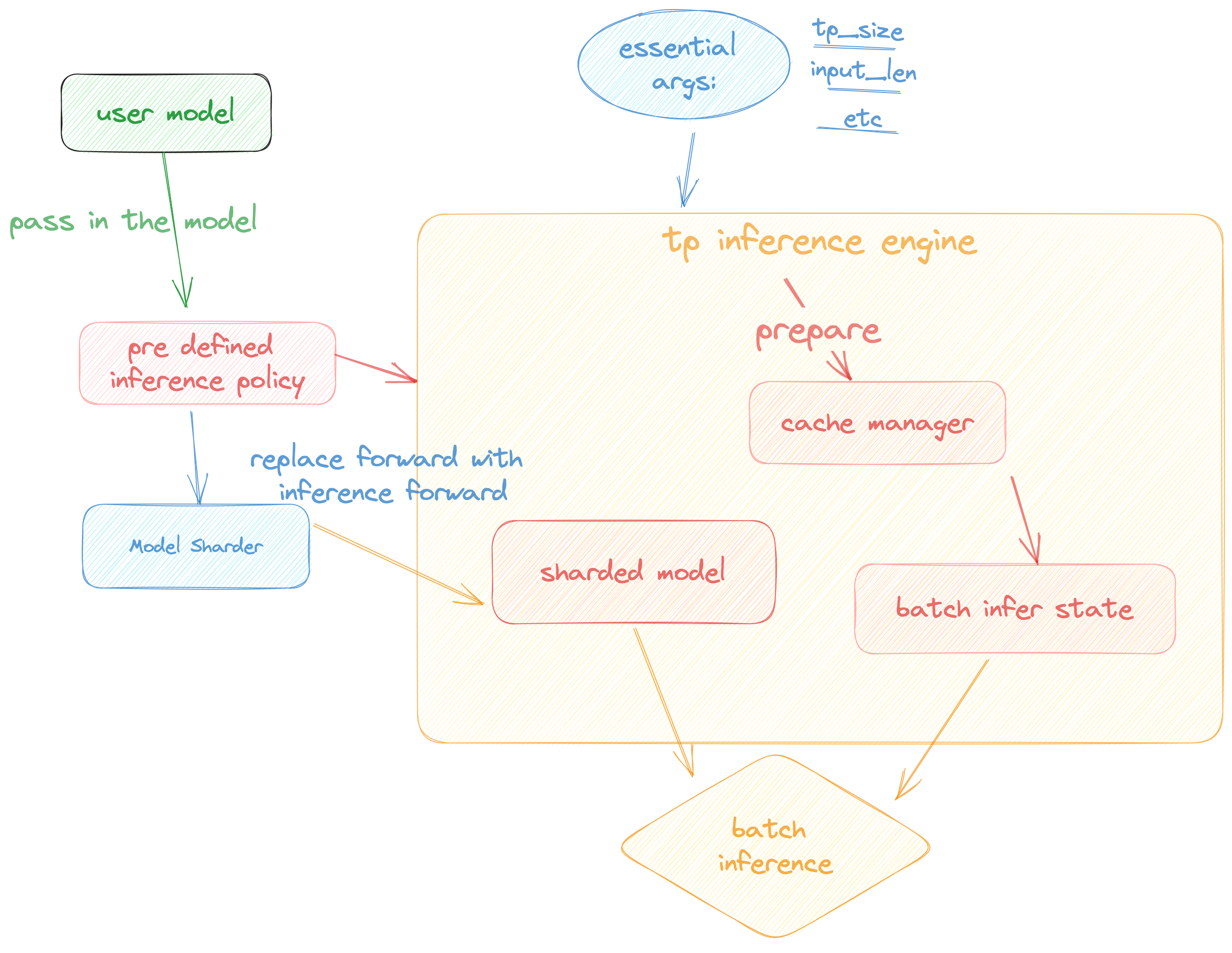

Colossal Inference is a module that contains colossal-ai designed inference framework, featuring high performance, steady and easy usability. Colossal Inference incorporated the advantages of the latest open-source inference systems, including TGI, vLLM, FasterTransformer, LightLLM and flash attention. while combining the design of Colossal AI, especially Shardformer, to reduce the learning curve for users.

Design

Colossal Inference is composed of two main components:

- High performance kernels and ops: which are inspired from existing libraries and modified correspondingly.

- Efficient memory management mechanism:which includes the key-value cache manager, allowing for zero memory waste during inference.

cache manager: serves as a memory manager to help manage the key-value cache, it integrates functions such as memory allocation, indexing and release.batch_infer_info: holds all essential elements of a batch inference, which is updated every batch.

- High-level inference engine combined with

Shardformer: it allows our inference framework to easily invoke and utilize various parallel methods.engine.TPInferEngine: it is a high level interface that integrates with shardformer, especially for multi-card (tensor parallel) inference:modeling.llama.LlamaInferenceForwards: contains theforwardmethods for llama inference. (in this case : llama)policies.llama.LlamaModelInferPolicy: contains the policies forllamamodels, which is used to callshardformerand segmentate the model forward in tensor parallelism way.

Pipeline of inference:

In this section we discuss how the colossal inference works and integrates with the Shardformer . The details can be found in our codes.

Roadmap of our implementation

- Design cache manager and batch infer state

- Design TpInference engine to integrates with

Shardformer - Register corresponding high-performance

kernelandops - Design policies and forwards (e.g.

LlamaandBloom)- policy

- context forward

- token forward

- Replace the kernels with

faster-transformerin token-forward stage - Support all models

- Llama

- Bloom

- Chatglm2

- Benchmarking for all models

Get started

Installation

pip install -e .

Requirements

dependencies

pytorch= 1.13.1 (gpu)

cuda>= 11.6

transformers= 4.30.2

triton==2.0.0.dev20221202

# for install vllm, please use this branch to install https://github.com/tiandiao123/vllm/tree/setup_branch

vllm

# for install flash-attention, please use commit hash: 67ae6fd74b4bc99c36b2ce524cf139c35663793c

flash-attention

Docker

You can use docker run to use docker container to set-up environment

# env: python==3.8, cuda 11.6, pytorch == 1.13.1 triton==2.0.0.dev20221202, vllm kernels support, flash-attention-2 kernels support

docker pull hpcaitech/colossalai-inference:v2

docker run -it --gpus all --name ANY_NAME -v $PWD:/workspace -w /workspace hpcaitech/colossalai-inference:v2 /bin/bash

Dive into fast-inference!

example files are in

cd colossalai.examples

python xx

Performance

environment:

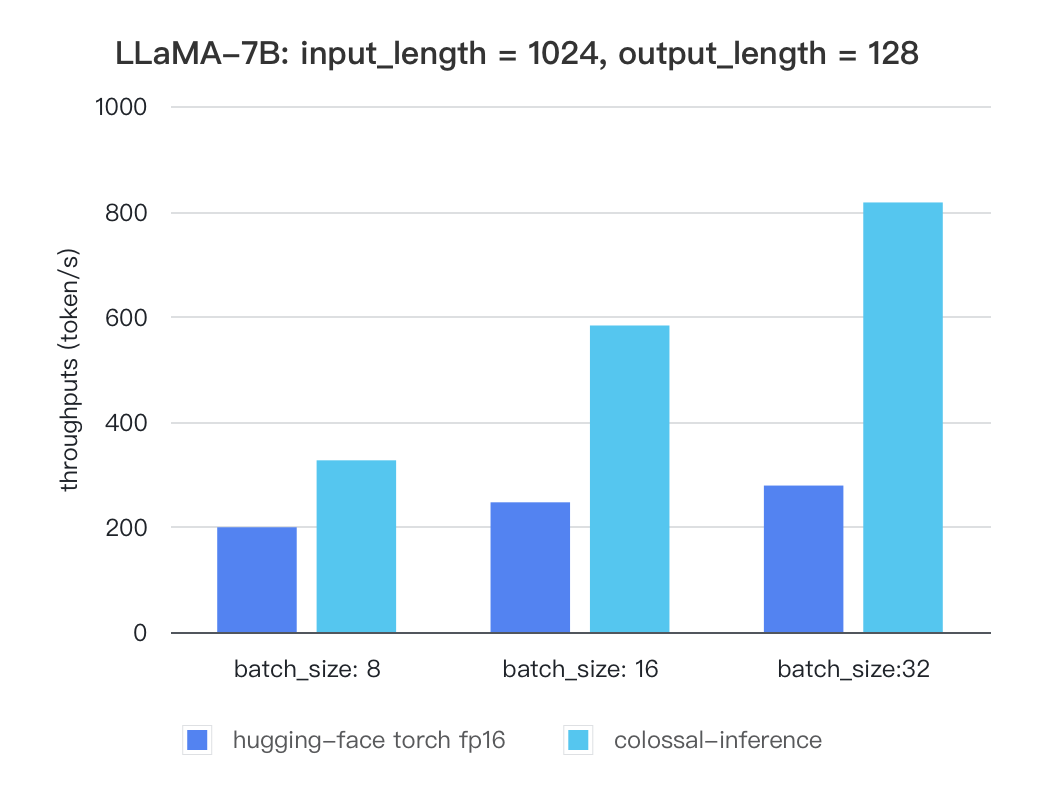

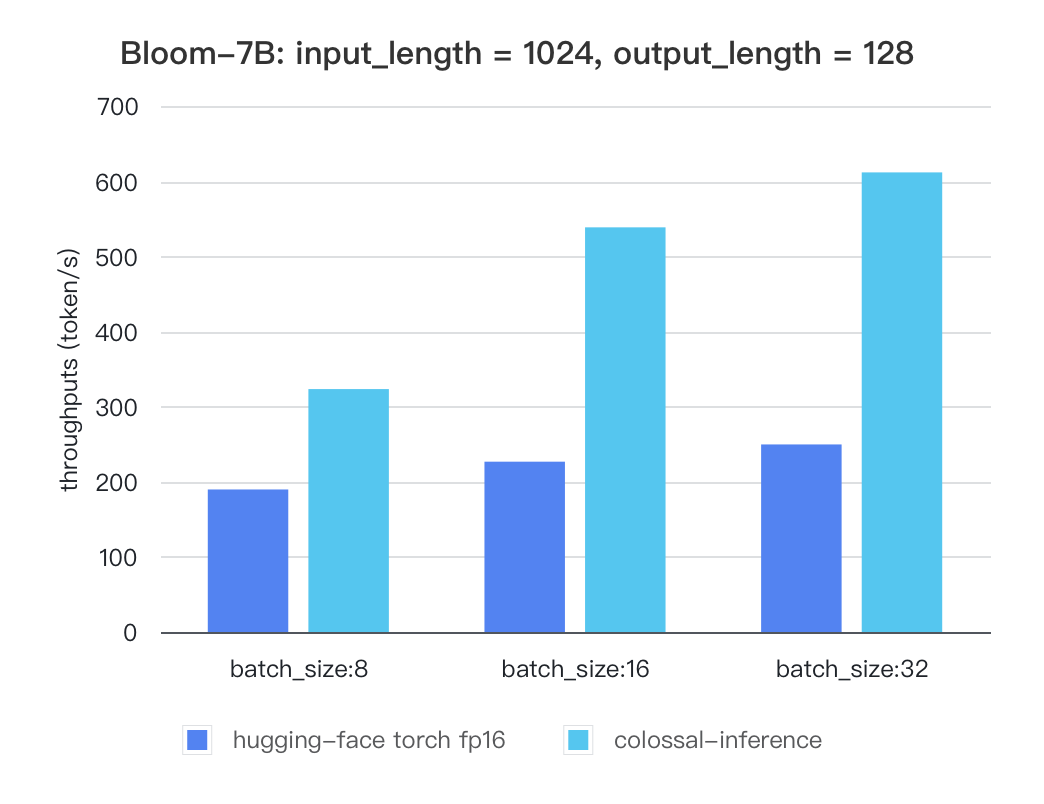

We conducted multiple benchmark tests to evaluate the performance. We compared the inference latency and throughputs between colossal-inference and original hugging-face torch fp16.

For various models, experiments were conducted using multiple batch sizes under the consistent model configuration of 7 billion(7b) parameters, 1024 input length, and 128 output length. The obtained results are as follows (due to time constraints, the evaluation has currently been performed solely on the A100 single GPU performance; multi-GPU performance will be addressed in the future):

Single GPU Performance:

Currently the stats below are calculated based on A100 (single GPU), and we calculate token latency based on average values of context-forward and decoding forward process, which means we combine both of processes to calculate token generation times. We are actively developing new features and methods to furthur optimize the performance of LLM models. Please stay tuned.

Llama

| batch_size | 8 | 16 | 32 |

|---|---|---|---|

| hugging-face torch fp16 | 199.12 | 246.56 | 278.4 |

| colossal-inference | 326.4 | 582.72 | 816.64 |

Bloom

| batch_size | 8 | 16 | 32 |

|---|---|---|---|

| hugging-face torch fp16 | 189.68 | 226.66 | 249.61 |

| colossal-inference | 323.28 | 538.52 | 611.64 |

The results of more models are coming soon!