mirror of https://github.com/hpcaitech/ColossalAI

[Feature] The first PR to Add TP inference engine, kv-cache manager and related kernels for our inference system (#4577)

* [infer] Infer/llama demo (#4503)

* add

* add infer example

* finish

* finish

* stash

* fix

* [Kernels] add inference token attention kernel (#4505)

* add token forward

* fix tests

* fix comments

* add try import triton

* add adapted license

* add tests check

* [Kernels] add necessary kernels (llama & bloom) for attention forward and kv-cache manager (#4485)

* added _vllm_rms_norm

* change place

* added tests

* added tests

* modify

* adding kernels

* added tests:

* adding kernels

* modify

* added

* updating kernels

* adding tests

* added tests

* kernel change

* submit

* modify

* added

* edit comments

* change name

* change commnets and fix import

* add

* added

* combine codes (#4509)

* [feature] add KV cache manager for llama & bloom inference (#4495)

* add kv cache memory manager

* add stateinfo during inference

* format

* format

* rename file

* add kv cache test

* revise on BatchInferState

* file dir change

* [Bug FIx] import llama context ops fix (#4524)

* added _vllm_rms_norm

* change place

* added tests

* added tests

* modify

* adding kernels

* added tests:

* adding kernels

* modify

* added

* updating kernels

* adding tests

* added tests

* kernel change

* submit

* modify

* added

* edit comments

* change name

* change commnets and fix import

* add

* added

* fix

* add ops into init.py

* add

* [Infer] Add TPInferEngine and fix file path (#4532)

* add engine for TP inference

* move file path

* update path

* fix TPInferEngine

* remove unused file

* add engine test demo

* revise TPInferEngine

* fix TPInferEngine, add test

* fix

* Add Inference test for llama (#4508)

* add kv cache memory manager

* add stateinfo during inference

* add

* add infer example

* finish

* finish

* format

* format

* rename file

* add kv cache test

* revise on BatchInferState

* add inference test for llama

* fix conflict

* feature: add some new features for llama engine

* adapt colossalai triton interface

* Change the parent class of llama policy

* add nvtx

* move llama inference code to tensor_parallel

* fix __init__.py

* rm tensor_parallel

* fix: fix bugs in auto_policy.py

* fix:rm some unused codes

* mv colossalai/tpinference to colossalai/inference/tensor_parallel

* change __init__.py

* save change

* fix engine

* Bug fix: Fix hang

* remove llama_infer_engine.py

---------

Co-authored-by: yuanheng-zhao <jonathan.zhaoyh@gmail.com>

Co-authored-by: CjhHa1 <cjh18671720497@outlook.com>

* [infer] Add Bloom inference policy and replaced methods (#4512)

* add bloom inference methods and policy

* enable pass BatchInferState from model forward

* revise bloom infer layers/policies

* add engine for inference (draft)

* add test for bloom infer

* fix bloom infer policy and flow

* revise bloom test

* fix bloom file path

* remove unused codes

* fix bloom modeling

* fix dir typo

* fix trivial

* fix policy

* clean pr

* trivial fix

* Revert "[infer] Add Bloom inference policy and replaced methods (#4512)" (#4552)

This reverts commit 17cfa57140.

* [Doc] Add colossal inference doc (#4549)

* create readme

* add readme.md

* fix typos

* [infer] Add Bloom inference policy and replaced methods (#4553)

* add bloom inference methods and policy

* enable pass BatchInferState from model forward

* revise bloom infer layers/policies

* add engine for inference (draft)

* add test for bloom infer

* fix bloom infer policy and flow

* revise bloom test

* fix bloom file path

* remove unused codes

* fix bloom modeling

* fix dir typo

* fix trivial

* fix policy

* clean pr

* trivial fix

* trivial

* Fix Bugs In Llama Model Forward (#4550)

* add kv cache memory manager

* add stateinfo during inference

* add

* add infer example

* finish

* finish

* format

* format

* rename file

* add kv cache test

* revise on BatchInferState

* add inference test for llama

* fix conflict

* feature: add some new features for llama engine

* adapt colossalai triton interface

* Change the parent class of llama policy

* add nvtx

* move llama inference code to tensor_parallel

* fix __init__.py

* rm tensor_parallel

* fix: fix bugs in auto_policy.py

* fix:rm some unused codes

* mv colossalai/tpinference to colossalai/inference/tensor_parallel

* change __init__.py

* save change

* fix engine

* Bug fix: Fix hang

* remove llama_infer_engine.py

* bug fix: fix bugs about infer_state.is_context_stage

* remove pollcies

* fix: delete unused code

* fix: delete unused code

* remove unused coda

* fix conflict

---------

Co-authored-by: yuanheng-zhao <jonathan.zhaoyh@gmail.com>

Co-authored-by: CjhHa1 <cjh18671720497@outlook.com>

* [doc] add colossal inference fig (#4554)

* create readme

* add readme.md

* fix typos

* upload fig

* [NFC] fix docstring for colossal inference (#4555)

Fix docstring and comments in kv cache manager and bloom modeling

* fix docstring in llama modeling (#4557)

* [Infer] check import vllm (#4559)

* change import vllm

* import apply_rotary_pos_emb

* change import location

* [DOC] add installation req (#4561)

* add installation req

* fix

* slight change

* remove empty

* [Feature] rms-norm transfer into inference llama.py (#4563)

* add installation req

* fix

* slight change

* remove empty

* add rmsnorm polciy

* add

* clean codes

* [infer] Fix tp inference engine (#4564)

* fix engine prepare data

* add engine test

* use bloom for testing

* revise on test

* revise on test

* reset shardformer llama (#4569)

* [infer] Fix engine - tensors on different devices (#4570)

* fix diff device in engine

* [codefactor] Feature/colossal inference (#4579)

* code factors

* remove

* change coding (#4581)

* [doc] complete README of colossal inference (#4585)

* complete fig

* Update README.md

* [doc]update readme (#4586)

* update readme

* Update README.md

* bug fix: fix bus in llama and bloom (#4588)

* [BUG FIX]Fix test engine in CI and non-vllm kernels llama forward (#4592)

* fix tests

* clean

* clean

* fix bugs

* add

* fix llama non-vllm kernels bug

* modify

* clean codes

* [Kernel]Rmsnorm fix (#4598)

* fix tests

* clean

* clean

* fix bugs

* add

* fix llama non-vllm kernels bug

* modify

* clean codes

* add triton rmsnorm

* delete vllm kernel flag

* [Bug Fix]Fix bugs in llama (#4601)

* fix tests

* clean

* clean

* fix bugs

* add

* fix llama non-vllm kernels bug

* modify

* clean codes

* bug fix: remove rotary_positions_ids

---------

Co-authored-by: cuiqing.li <lixx3527@gmail.com>

* [kernel] Add triton layer norm & replace norm for bloom (#4609)

* add layernorm for inference

* add test for layernorm kernel

* add bloom layernorm replacement policy

* trivial: path

* [Infer] Bug fix rotary embedding in llama (#4608)

* fix rotary embedding

* delete print

* fix init seq len bug

* rename pytest

* add benchmark for llama

* refactor codes

* delete useless code

* [bench] Add bloom inference benchmark (#4621)

* add bloom benchmark

* readme - update benchmark res

* trivial - uncomment for testing (#4622)

* [Infer] add check triton and cuda version for tests (#4627)

* fix rotary embedding

* delete print

* fix init seq len bug

* rename pytest

* add benchmark for llama

* refactor codes

* delete useless code

* add check triton and cuda

* Update sharder.py (#4629)

* [Inference] Hot fix some bugs and typos (#4632)

* fix

* fix test

* fix conflicts

* [typo]Comments fix (#4633)

* fallback

* fix commnets

* bug fix: fix some bugs in test_llama and test_bloom (#4635)

* [Infer] delete benchmark in tests and fix bug for llama and bloom (#4636)

* fix rotary embedding

* delete print

* fix init seq len bug

* rename pytest

* add benchmark for llama

* refactor codes

* delete useless code

* add check triton and cuda

* delete benchmark and fix infer bugs

* delete benchmark for tests

* delete useless code

* delete bechmark function in utils

* [Fix] Revise TPInferEngine, inference tests and benchmarks (#4642)

* [Fix] revise TPInferEngine methods and inference tests

* fix llama/bloom infer benchmarks

* fix infer tests

* trivial fix: benchmakrs

* trivial

* trivial: rm print

* modify utils filename for infer ops test (#4657)

* [Infer] Fix TPInferEngine init & inference tests, benchmarks (#4670)

* fix engine funcs

* TPInferEngine: receive shard config in init

* benchmarks: revise TPInferEngine init

* benchmarks: remove pytest decorator

* trivial fix

* use small model for tests

* [NFC] use args for infer benchmarks (#4674)

* revise infer default (#4683)

* [Fix] optimize/shard model in TPInferEngine init (#4684)

* remove using orig model in engine

* revise inference tests

* trivial: rename

---------

Co-authored-by: Jianghai <72591262+CjhHa1@users.noreply.github.com>

Co-authored-by: Xu Kai <xukai16@foxmail.com>

Co-authored-by: Yuanheng Zhao <54058983+yuanheng-zhao@users.noreply.github.com>

Co-authored-by: yuehuayingxueluo <867460659@qq.com>

Co-authored-by: yuanheng-zhao <jonathan.zhaoyh@gmail.com>

Co-authored-by: CjhHa1 <cjh18671720497@outlook.com>

pull/4686/head

parent

eedaa3e1ef

commit

bce0f16702

32

LICENSE

32

LICENSE

|

|

@ -396,3 +396,35 @@ Copyright 2021- HPC-AI Technology Inc. All rights reserved.

|

|||

CONTRACT, STRICT LIABILITY, OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE)

|

||||

ARISING IN ANY WAY OUT OF THE USE OF THIS SOFTWARE, EVEN IF ADVISED OF THE

|

||||

POSSIBILITY OF SUCH DAMAGE.

|

||||

|

||||

---------------- LICENSE FOR VLLM TEAM ----------------

|

||||

|

||||

from VLLM TEAM:

|

||||

|

||||

Licensed under the Apache License, Version 2.0 (the "License");

|

||||

you may not use this file except in compliance with the License.

|

||||

You may obtain a copy of the License at

|

||||

|

||||

https://github.com/vllm-project/vllm/blob/main/LICENSE

|

||||

|

||||

Unless required by applicable law or agreed to in writing, software

|

||||

distributed under the License is distributed on an "AS IS" BASIS,

|

||||

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

||||

See the License for the specific language governing permissions and

|

||||

limitations under the License.

|

||||

|

||||

---------------- LICENSE FOR LIGHTLLM TEAM ----------------

|

||||

|

||||

from LIGHTLLM TEAM:

|

||||

|

||||

Licensed under the Apache License, Version 2.0 (the "License");

|

||||

you may not use this file except in compliance with the License.

|

||||

You may obtain a copy of the License at

|

||||

|

||||

https://github.com/ModelTC/lightllm/blob/main/LICENSE

|

||||

|

||||

Unless required by applicable law or agreed to in writing, software

|

||||

distributed under the License is distributed on an "AS IS" BASIS,

|

||||

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

||||

See the License for the specific language governing permissions and

|

||||

limitations under the License.

|

||||

|

|

|

|||

|

|

@ -0,0 +1,117 @@

|

|||

# 🚀 Colossal-Inference

|

||||

|

||||

## Table of contents

|

||||

|

||||

## Introduction

|

||||

|

||||

`Colossal Inference` is a module that contains colossal-ai designed inference framework, featuring high performance, steady and easy usability. `Colossal Inference` incorporated the advantages of the latest open-source inference systems, including TGI, vLLM, FasterTransformer, LightLLM and flash attention. while combining the design of Colossal AI, especially Shardformer, to reduce the learning curve for users.

|

||||

|

||||

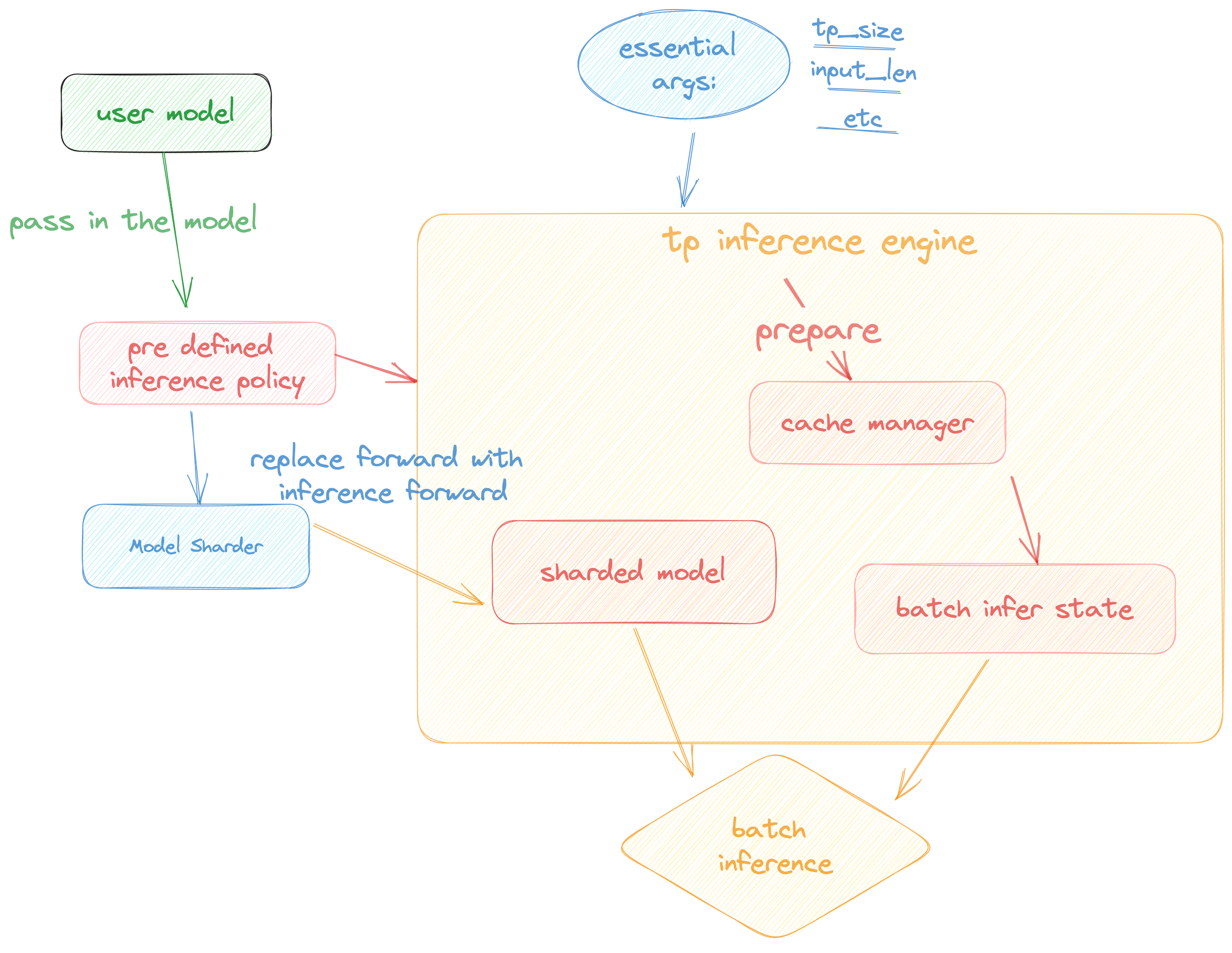

## Design

|

||||

|

||||

Colossal Inference is composed of two main components:

|

||||

|

||||

1. High performance kernels and ops: which are inspired from existing libraries and modified correspondingly.

|

||||

2. Efficient memory management mechanism:which includes the key-value cache manager, allowing for zero memory waste during inference.

|

||||

1. `cache manager`: serves as a memory manager to help manage the key-value cache, it integrates functions such as memory allocation, indexing and release.

|

||||

2. `batch_infer_info`: holds all essential elements of a batch inference, which is updated every batch.

|

||||

3. High-level inference engine combined with `Shardformer`: it allows our inference framework to easily invoke and utilize various parallel methods.

|

||||

1. `engine.TPInferEngine`: it is a high level interface that integrates with shardformer, especially for multi-card (tensor parallel) inference:

|

||||

2. `modeling.llama.LlamaInferenceForwards`: contains the `forward` methods for llama inference. (in this case : llama)

|

||||

3. `policies.llama.LlamaModelInferPolicy` : contains the policies for `llama` models, which is used to call `shardformer` and segmentate the model forward in tensor parallelism way.

|

||||

|

||||

## Pipeline of inference:

|

||||

|

||||

In this section we discuss how the colossal inference works and integrates with the `Shardformer` . The details can be found in our codes.

|

||||

|

||||

|

||||

|

||||

## Roadmap of our implementation

|

||||

|

||||

- [x] Design cache manager and batch infer state

|

||||

- [x] Design TpInference engine to integrates with `Shardformer`

|

||||

- [x] Register corresponding high-performance `kernel` and `ops`

|

||||

- [x] Design policies and forwards (e.g. `Llama` and `Bloom`)

|

||||

- [x] policy

|

||||

- [x] context forward

|

||||

- [x] token forward

|

||||

- [ ] Replace the kernels with `faster-transformer` in token-forward stage

|

||||

- [ ] Support all models

|

||||

- [x] Llama

|

||||

- [x] Bloom

|

||||

- [ ] Chatglm2

|

||||

- [ ] Benchmarking for all models

|

||||

|

||||

## Get started

|

||||

|

||||

### Installation

|

||||

|

||||

```bash

|

||||

pip install -e .

|

||||

```

|

||||

|

||||

### Requirements

|

||||

|

||||

dependencies

|

||||

|

||||

```bash

|

||||

pytorch= 1.13.1 (gpu)

|

||||

cuda>= 11.6

|

||||

transformers= 4.30.2

|

||||

triton==2.0.0.dev20221202

|

||||

# for install vllm, please use this branch to install https://github.com/tiandiao123/vllm/tree/setup_branch

|

||||

vllm

|

||||

# for install flash-attention, please use commit hash: 67ae6fd74b4bc99c36b2ce524cf139c35663793c

|

||||

flash-attention

|

||||

```

|

||||

|

||||

### Docker

|

||||

|

||||

You can use docker run to use docker container to set-up environment

|

||||

|

||||

```

|

||||

# env: python==3.8, cuda 11.6, pytorch == 1.13.1 triton==2.0.0.dev20221202, vllm kernels support, flash-attention-2 kernels support

|

||||

docker pull hpcaitech/colossalai-inference:v2

|

||||

docker run -it --gpus all --name ANY_NAME -v $PWD:/workspace -w /workspace hpcaitech/colossalai-inference:v2 /bin/bash

|

||||

|

||||

```

|

||||

|

||||

### Dive into fast-inference!

|

||||

|

||||

example files are in

|

||||

|

||||

```bash

|

||||

cd colossalai.examples

|

||||

python xx

|

||||

```

|

||||

|

||||

## Performance

|

||||

|

||||

### environment:

|

||||

|

||||

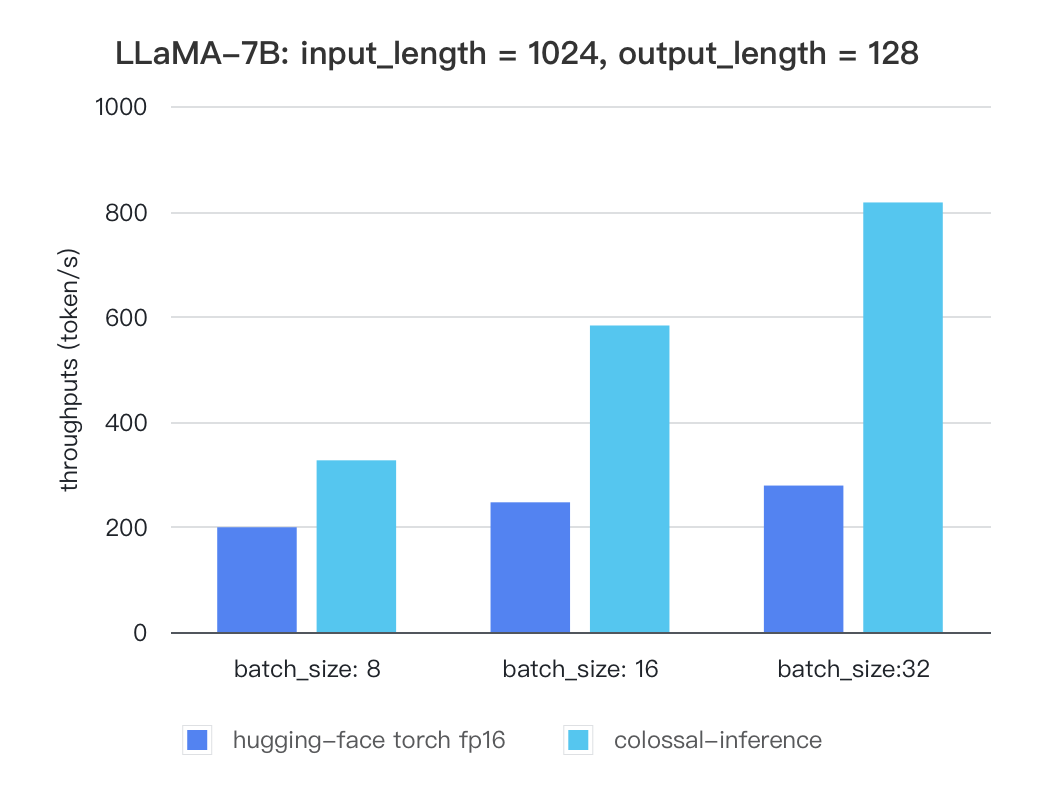

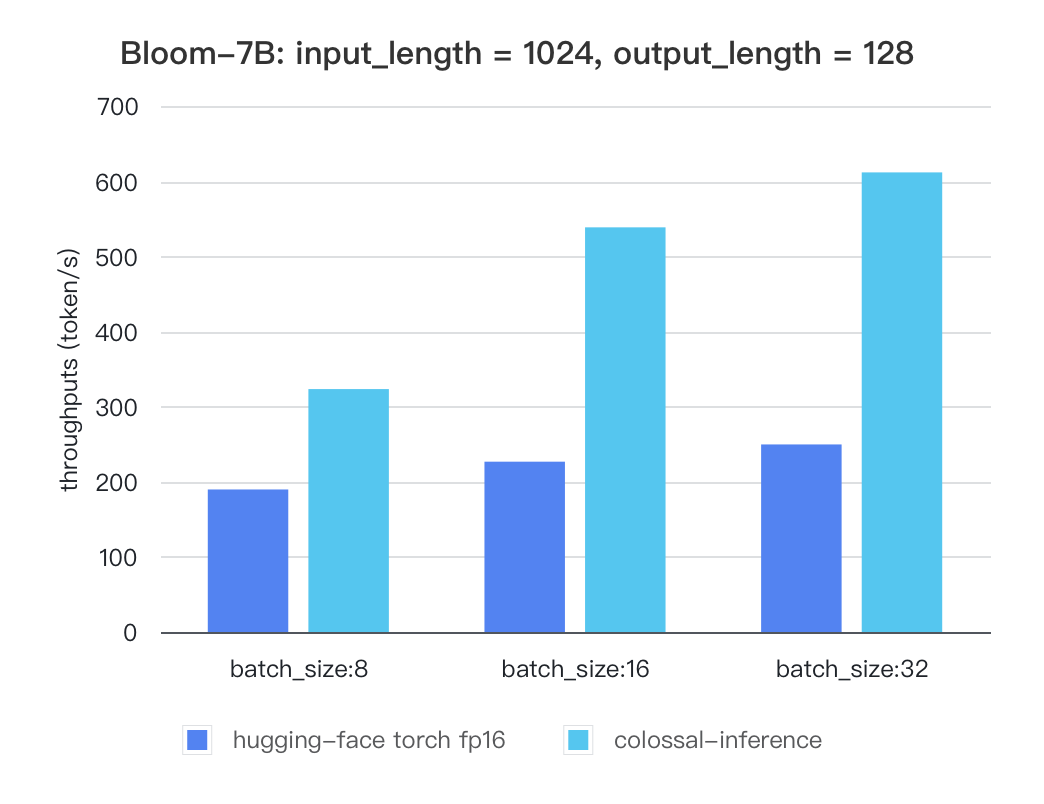

We conducted multiple benchmark tests to evaluate the performance. We compared the inference `latency` and `throughputs` between `colossal-inference` and original `hugging-face torch fp16`.

|

||||

|

||||

For various models, experiments were conducted using multiple batch sizes under the consistent model configuration of `7 billion(7b)` parameters, `1024` input length, and 128 output length. The obtained results are as follows (due to time constraints, the evaluation has currently been performed solely on the `A100` single GPU performance; multi-GPU performance will be addressed in the future):

|

||||

|

||||

### Single GPU Performance:

|

||||

|

||||

Currently the stats below are calculated based on A100 (single GPU), and we calculate token latency based on average values of context-forward and decoding forward process, which means we combine both of processes to calculate token generation times. We are actively developing new features and methods to furthur optimize the performance of LLM models. Please stay tuned.

|

||||

|

||||

#### Llama

|

||||

|

||||

| batch_size | 8 | 16 | 32 |

|

||||

| :---------------------: | :----: | :----: | :----: |

|

||||

| hugging-face torch fp16 | 199.12 | 246.56 | 278.4 |

|

||||

| colossal-inference | 326.4 | 582.72 | 816.64 |

|

||||

|

||||

|

||||

|

||||

### Bloom

|

||||

|

||||

| batch_size | 8 | 16 | 32 |

|

||||

| :---------------------: | :----: | :----: | :----: |

|

||||

| hugging-face torch fp16 | 189.68 | 226.66 | 249.61 |

|

||||

| colossal-inference | 323.28 | 538.52 | 611.64 |

|

||||

|

||||

|

||||

|

||||

The results of more models are coming soon!

|

||||

|

|

@ -0,0 +1,4 @@

|

|||

from .engine import TPInferEngine

|

||||

from .kvcache_manager import MemoryManager

|

||||

|

||||

__all__ = ['MemoryManager', 'TPInferEngine']

|

||||

|

|

@ -0,0 +1,55 @@

|

|||

# might want to consider combine with InferenceConfig in colossalai/ppinference/inference_config.py later

|

||||

from dataclasses import dataclass

|

||||

from typing import Any

|

||||

|

||||

import torch

|

||||

|

||||

from .kvcache_manager import MemoryManager

|

||||

|

||||

|

||||

@dataclass

|

||||

class BatchInferState:

|

||||

r"""

|

||||

Information to be passed and used for a batch of inputs during

|

||||

a single model forward

|

||||

"""

|

||||

batch_size: int

|

||||

max_len_in_batch: int

|

||||

|

||||

cache_manager: MemoryManager = None

|

||||

|

||||

block_loc: torch.Tensor = None

|

||||

start_loc: torch.Tensor = None

|

||||

seq_len: torch.Tensor = None

|

||||

past_key_values_len: int = None

|

||||

|

||||

is_context_stage: bool = False

|

||||

context_mem_index: torch.Tensor = None

|

||||

decode_is_contiguous: bool = None

|

||||

decode_mem_start: int = None

|

||||

decode_mem_end: int = None

|

||||

decode_mem_index: torch.Tensor = None

|

||||

decode_layer_id: int = None

|

||||

|

||||

device: torch.device = torch.device('cuda')

|

||||

|

||||

@property

|

||||

def total_token_num(self):

|

||||

# return self.batch_size * self.max_len_in_batch

|

||||

assert self.seq_len is not None and self.seq_len.size(0) > 0

|

||||

return int(torch.sum(self.seq_len))

|

||||

|

||||

def set_cache_manager(self, manager: MemoryManager):

|

||||

self.cache_manager = manager

|

||||

|

||||

@staticmethod

|

||||

def init_block_loc(b_loc: torch.Tensor, seq_len: torch.Tensor, max_len_in_batch: int,

|

||||

alloc_mem_index: torch.Tensor):

|

||||

""" in-place update block loc mapping based on the sequence length of the inputs in current bath"""

|

||||

start_index = 0

|

||||

seq_len_numpy = seq_len.cpu().numpy()

|

||||

for i, cur_seq_len in enumerate(seq_len_numpy):

|

||||

b_loc[i, max_len_in_batch - cur_seq_len:max_len_in_batch] = alloc_mem_index[start_index:start_index +

|

||||

cur_seq_len]

|

||||

start_index += cur_seq_len

|

||||

return

|

||||

|

|

@ -0,0 +1,294 @@

|

|||

from typing import Any, Callable, Dict, List, Optional, Union

|

||||

|

||||

import torch

|

||||

import torch.nn as nn

|

||||

from transformers import BloomForCausalLM, LlamaForCausalLM

|

||||

from transformers.generation import GenerationConfig

|

||||

from transformers.generation.stopping_criteria import StoppingCriteriaList

|

||||

from transformers.tokenization_utils_base import BatchEncoding

|

||||

|

||||

from colossalai.shardformer import ShardConfig, ShardFormer

|

||||

from colossalai.shardformer.policies.auto_policy import get_autopolicy

|

||||

|

||||

from .batch_infer_state import BatchInferState

|

||||

from .kvcache_manager import MemoryManager

|

||||

|

||||

DP_AXIS, PP_AXIS, TP_AXIS = 0, 1, 2

|

||||

|

||||

_supported_models = ['LlamaForCausalLM', 'LlamaModel', 'BloomForCausalLM']

|

||||

|

||||

|

||||

class TPInferEngine:

|

||||

"""Engine class for tensor parallel inference.

|

||||

|

||||

Args:

|

||||

model (Module): original model, e.g. huggingface CausalLM

|

||||

shard_config (ShardConfig): The config for sharding original model

|

||||

max_batch_size (int): maximum batch size

|

||||

max_input_len (int): maximum input length of sequence

|

||||

max_output_len (int): maximum output length of output tokens

|

||||

dtype (torch.dtype): datatype used to init KV cache space

|

||||

device (str): device the KV cache of engine to be initialized on

|

||||

|

||||

Examples:

|

||||

>>> # define model and shard config for your inference

|

||||

>>> model = ...

|

||||

>>> generate_kwargs = ...

|

||||

>>> shard_config = ShardConfig(enable_tensor_parallelism=True, inference_only=True)

|

||||

>>> infer_engine = TPInferEngine(model, shard_config, MAX_BATCH_SIZE, MAX_INPUT_LEN, MAX_OUTPUT_LEN)

|

||||

>>> outputs = infer_engine.generate(input_ids, **generate_kwargs)

|

||||

"""

|

||||

|

||||

def __init__(self,

|

||||

model: nn.Module,

|

||||

shard_config: ShardConfig,

|

||||

max_batch_size: int,

|

||||

max_input_len: int,

|

||||

max_output_len: int,

|

||||

dtype: torch.dtype = torch.float16,

|

||||

device: str = 'cuda') -> None:

|

||||

self.max_batch_size = max_batch_size

|

||||

self.max_input_len = max_input_len

|

||||

self.max_output_len = max_output_len

|

||||

self.max_total_token_num = self.max_batch_size * (self.max_input_len + self.max_output_len)

|

||||

|

||||

# Constraints relatable with specs of devices and model

|

||||

# This may change into an optional arg in the future

|

||||

assert self.max_batch_size <= 64, "Max batch size exceeds the constraint"

|

||||

assert self.max_input_len + self.max_output_len <= 4096, "Max length exceeds the constraint"

|

||||

|

||||

self.dtype = dtype

|

||||

|

||||

self.head_dim = model.config.hidden_size // model.config.num_attention_heads

|

||||

self.head_num = model.config.num_attention_heads

|

||||

self.layer_num = model.config.num_hidden_layers

|

||||

|

||||

self.tp_size = -1 # to be set with given shard config in self.prepare_shard_config

|

||||

self.cache_manager = None

|

||||

|

||||

self.shard_config = shard_config

|

||||

self.model = None

|

||||

# optimize the original model by sharding with ShardFormer

|

||||

self._optimize_model(model=model.to(device))

|

||||

|

||||

def _init_manager(self) -> None:

|

||||

assert self.tp_size >= 1, "TP size not initialized without providing a valid ShardConfig"

|

||||

assert self.head_num % self.tp_size == 0, f"Cannot shard {self.head_num} heads with tp size {self.tp_size}"

|

||||

self.head_num //= self.tp_size # update sharded number of heads

|

||||

self.cache_manager = MemoryManager(self.max_total_token_num, self.dtype, self.head_num, self.head_dim,

|

||||

self.layer_num)

|

||||

|

||||

def _optimize_model(self, model: nn.Module) -> None:

|

||||

"""

|

||||

Optimize the original model by sharding with ShardFormer.

|

||||

In further generation, use the sharded model instead of original model.

|

||||

"""

|

||||

# NOTE we will change to use an inference config later with additional attrs we want

|

||||

assert self.shard_config.inference_only is True

|

||||

shardformer = ShardFormer(shard_config=self.shard_config)

|

||||

self._prepare_with_shard_config(shard_config=self.shard_config)

|

||||

self._shard_model_by(shardformer, model)

|

||||

|

||||

def _prepare_with_shard_config(self, shard_config: Optional[ShardConfig] = None) -> ShardConfig:

|

||||

""" Prepare the engine with a given ShardConfig.

|

||||

|

||||

Args:

|

||||

shard_config (ShardConfig): shard config given to specify settings of the engine.

|

||||

If not provided, a default ShardConfig with tp size 1 will be created.

|

||||

"""

|

||||

self.tp_size = 1

|

||||

if shard_config is None:

|

||||

shard_config = ShardConfig(

|

||||

tensor_parallel_process_group=None,

|

||||

pipeline_stage_manager=None,

|

||||

enable_tensor_parallelism=False,

|

||||

enable_fused_normalization=False,

|

||||

enable_all_optimization=False,

|

||||

enable_flash_attention=False,

|

||||

enable_jit_fused=False,

|

||||

inference_only=True,

|

||||

)

|

||||

else:

|

||||

shard_config.inference_only = True

|

||||

shard_config.pipeline_stage_manager = None

|

||||

if shard_config.enable_tensor_parallelism:

|

||||

self.tp_size = shard_config.tensor_parallel_size

|

||||

self._init_manager()

|

||||

|

||||

return shard_config

|

||||

|

||||

def _shard_model_by(self, shardformer: ShardFormer, model: nn.Module) -> None:

|

||||

""" Shard original model by the given ShardFormer and store the sharded model. """

|

||||

assert self.tp_size == shardformer.shard_config.tensor_parallel_size, \

|

||||

"Discrepancy between the tp size of TPInferEngine and the tp size of shard config"

|

||||

model_name = model.__class__.__name__

|

||||

assert model_name in self.supported_models, f"Unsupported model cls {model_name} for TP inference."

|

||||

policy = get_autopolicy(model, inference_only=True)

|

||||

self.model, _ = shardformer.optimize(model, policy)

|

||||

self.model = self.model.cuda()

|

||||

|

||||

@property

|

||||

def supported_models(self) -> List[str]:

|

||||

return _supported_models

|

||||

|

||||

def generate(self, input_tokens: Union[BatchEncoding, dict, list, torch.Tensor], **generate_kwargs) -> torch.Tensor:

|

||||

"""Generate token sequence.

|

||||

|

||||

Args:

|

||||

input_tokens: could be one of the following types

|

||||

1. BatchEncoding or dict (e.g. tokenizer batch_encode)

|

||||

2. list of input token ids (e.g. appended result of tokenizer encode)

|

||||

3. torch.Tensor (e.g. tokenizer encode with return_tensors='pt')

|

||||

Returns:

|

||||

torch.Tensor: The returned sequence is given inputs + generated_tokens.

|

||||

"""

|

||||

if isinstance(input_tokens, torch.Tensor):

|

||||

input_tokens = dict(input_ids=input_tokens, attention_mask=torch.ones_like(input_tokens, dtype=torch.bool))

|

||||

for t in input_tokens:

|

||||

if torch.is_tensor(input_tokens[t]):

|

||||

input_tokens[t] = input_tokens[t].cuda()

|

||||

if 'max_new_tokens' not in generate_kwargs:

|

||||

generate_kwargs.update(max_new_tokens=self.max_output_len)

|

||||

|

||||

return self._generate_by_set_infer_state(input_tokens, **generate_kwargs)

|

||||

|

||||

def prepare_batch_state(self, inputs) -> BatchInferState:

|

||||

"""

|

||||

Create and prepare BatchInferState used for inference during model forwrad,

|

||||

by processing each sequence of the given inputs.

|

||||

|

||||

Args:

|

||||

inputs: should be one of the following types

|

||||

1. BatchEncoding or dict (e.g. tokenizer batch_encode)

|

||||

2. list of input token ids (e.g. appended result of tokenizer encode)

|

||||

3. torch.Tensor (e.g. tokenizer encode with return_tensors='pt')

|

||||

NOTE For torch.Tensor inputs representing a batch of inputs, we are unable to retrieve

|

||||

the actual length (e.g. number of tokens) of each input without attention mask

|

||||

Hence, for torch.Tensor with shape [bs, l] where bs > 1, we will assume

|

||||

all the inputs in the batch has the maximum length l

|

||||

Returns:

|

||||

BatchInferState: the states for the current batch during inference

|

||||

"""

|

||||

if not isinstance(inputs, (BatchEncoding, dict, list, torch.Tensor)):

|

||||

raise TypeError(f"inputs type {type(inputs)} is not supported in prepare_batch_state")

|

||||

|

||||

input_ids_list = None

|

||||

attention_mask = None

|

||||

|

||||

if isinstance(inputs, (BatchEncoding, dict)):

|

||||

input_ids_list = inputs['input_ids']

|

||||

attention_mask = inputs['attention_mask']

|

||||

else:

|

||||

input_ids_list = inputs

|

||||

if isinstance(input_ids_list[0], int): # for a single input

|

||||

input_ids_list = [input_ids_list]

|

||||

attention_mask = [attention_mask] if attention_mask is not None else attention_mask

|

||||

|

||||

batch_size = len(input_ids_list)

|

||||

|

||||

seq_start_indexes = torch.zeros(batch_size, dtype=torch.int32, device='cuda')

|

||||

seq_lengths = torch.zeros(batch_size, dtype=torch.int32, device='cuda')

|

||||

start_index = 0

|

||||

|

||||

max_len_in_batch = -1

|

||||

if isinstance(inputs, (BatchEncoding, dict)):

|

||||

for i, attn_mask in enumerate(attention_mask):

|

||||

curr_seq_len = len(attn_mask)

|

||||

# if isinstance(attn_mask, torch.Tensor):

|

||||

# curr_seq_len = int(torch.sum(attn_mask))

|

||||

# else:

|

||||

# curr_seq_len = int(sum(attn_mask))

|

||||

seq_lengths[i] = curr_seq_len

|

||||

seq_start_indexes[i] = start_index

|

||||

start_index += curr_seq_len

|

||||

max_len_in_batch = curr_seq_len if curr_seq_len > max_len_in_batch else max_len_in_batch

|

||||

else:

|

||||

length = max(len(input_id) for input_id in input_ids_list)

|

||||

for i, input_ids in enumerate(input_ids_list):

|

||||

curr_seq_len = length

|

||||

seq_lengths[i] = curr_seq_len

|

||||

seq_start_indexes[i] = start_index

|

||||

start_index += curr_seq_len

|

||||

max_len_in_batch = curr_seq_len if curr_seq_len > max_len_in_batch else max_len_in_batch

|

||||

block_loc = torch.empty((batch_size, self.max_input_len + self.max_output_len), dtype=torch.long, device='cuda')

|

||||

batch_infer_state = BatchInferState(batch_size, max_len_in_batch)

|

||||

batch_infer_state.seq_len = seq_lengths.to('cuda')

|

||||

batch_infer_state.start_loc = seq_start_indexes.to('cuda')

|

||||

batch_infer_state.block_loc = block_loc

|

||||

batch_infer_state.decode_layer_id = 0

|

||||

batch_infer_state.past_key_values_len = 0

|

||||

batch_infer_state.is_context_stage = True

|

||||

batch_infer_state.set_cache_manager(self.cache_manager)

|

||||

return batch_infer_state

|

||||

|

||||

@torch.no_grad()

|

||||

def _generate_by_set_infer_state(self, input_tokens, **generate_kwargs) -> torch.Tensor:

|

||||

"""

|

||||

Generate output tokens by setting BatchInferState as an attribute to the model and calling model.generate

|

||||

|

||||

Args:

|

||||

inputs: should be one of the following types

|

||||

1. BatchEncoding or dict (e.g. tokenizer batch_encode)

|

||||

2. list of input token ids (e.g. appended result of tokenizer encode)

|

||||

3. torch.Tensor (e.g. tokenizer encode with return_tensors='pt')

|

||||

"""

|

||||

|

||||

# for testing, always use sharded model

|

||||

assert self.model is not None, "sharded model does not exist"

|

||||

|

||||

batch_infer_state = self.prepare_batch_state(input_tokens)

|

||||

assert batch_infer_state.max_len_in_batch <= self.max_input_len, "max length in batch exceeds limit"

|

||||

|

||||

# set BatchInferState for the current batch as attr to model

|

||||

# NOTE this is not a preferable way to pass BatchInferState during inference

|

||||

# we might want to rewrite generate function (e.g. _generate_by_pass_infer_state)

|

||||

# and pass BatchInferState via model forward

|

||||

model = self.model

|

||||

if isinstance(model, LlamaForCausalLM):

|

||||

model = self.model.model

|

||||

elif isinstance(model, BloomForCausalLM):

|

||||

model = self.model.transformer

|

||||

setattr(model, 'infer_state', batch_infer_state)

|

||||

|

||||

outputs = self.model.generate(**input_tokens, **generate_kwargs, early_stopping=False)

|

||||

|

||||

# NOTE In future development, we're going to let the scheduler to handle the cache,

|

||||

# instead of freeing space explicitly at the end of generation

|

||||

self.cache_manager.free_all()

|

||||

|

||||

return outputs

|

||||

|

||||

# TODO might want to implement the func that generates output tokens by passing BatchInferState

|

||||

# as an arg into model.forward.

|

||||

# It requires rewriting model generate and replacing model forward.

|

||||

@torch.no_grad()

|

||||

def _generate_by_pass_infer_state(self,

|

||||

input_tokens,

|

||||

max_out_length: int,

|

||||

generation_config: Optional[GenerationConfig] = None,

|

||||

stopping_criteria: Optional[StoppingCriteriaList] = None,

|

||||

prepare_inputs_fn: Optional[Callable[[torch.Tensor, Any], dict]] = None,

|

||||

**model_kwargs) -> torch.Tensor:

|

||||

|

||||

raise NotImplementedError("generate by passing BatchInferState is not implemented.")

|

||||

|

||||

# might want to use in rewritten generate method: use after model.forward

|

||||

# BatchInferState is created and kept during generation

|

||||

# after each iter of model forward, we should update BatchInferState

|

||||

def _update_batch_state(self, infer_state: Optional[BatchInferState]) -> None:

|

||||

batch_size = infer_state.batch_size

|

||||

device = infer_state.start_loc.device

|

||||

infer_state.start_loc = infer_state.start_loc + torch.arange(0, batch_size, dtype=torch.int32, device=device)

|

||||

infer_state.seq_len += 1

|

||||

|

||||

# might want to create a sequence pool

|

||||

# add a single request/sequence/input text at a time and record its length

|

||||

# In other words, store the actual length of input tokens representing a single input text

|

||||

# E.g. "Introduce landmarks in Beijing"

|

||||

# => add request

|

||||

# => record token length and other necessary information to be used

|

||||

# => engine hold all these necessary information until `generate` (or other name) is called,

|

||||

# => put information already recorded in batchinferstate and pass it to model forward

|

||||

# => clear records in engine

|

||||

def add_request():

|

||||

raise NotImplementedError()

|

||||

|

|

@ -0,0 +1,101 @@

|

|||

# Adapted from lightllm/common/mem_manager.py

|

||||

# of the ModelTC/lightllm GitHub repository

|

||||

# https://github.com/ModelTC/lightllm/blob/050af3ce65edca617e2f30ec2479397d5bb248c9/lightllm/common/mem_manager.py

|

||||

|

||||

import torch

|

||||

from transformers.utils import logging

|

||||

|

||||

|

||||

class MemoryManager:

|

||||

r"""

|

||||

Manage token block indexes and allocate physical memory for key and value cache

|

||||

|

||||

Args:

|

||||

size: maximum token number used as the size of key and value buffer

|

||||

dtype: data type of cached key and value

|

||||

head_num: number of heads the memory manager is responsible for

|

||||

head_dim: embedded size per head

|

||||

layer_num: the number of layers in the model

|

||||

device: device used to store the key and value cache

|

||||

"""

|

||||

|

||||

def __init__(self,

|

||||

size: int,

|

||||

dtype: torch.dtype,

|

||||

head_num: int,

|

||||

head_dim: int,

|

||||

layer_num: int,

|

||||

device: torch.device = torch.device('cuda')):

|

||||

self.logger = logging.get_logger(__name__)

|

||||

self.available_size = size

|

||||

self.past_key_values_length = 0

|

||||

self._init_mem_states(size, device)

|

||||

self._init_kv_buffers(size, device, dtype, head_num, head_dim, layer_num)

|

||||

|

||||

def _init_mem_states(self, size, device):

|

||||

""" Initialize tensors used to manage memory states """

|

||||

self.mem_state = torch.ones((size,), dtype=torch.bool, device=device)

|

||||

self.mem_cum_sum = torch.empty((size,), dtype=torch.int32, device=device)

|

||||

self.indexes = torch.arange(0, size, dtype=torch.long, device=device)

|

||||

|

||||

def _init_kv_buffers(self, size, device, dtype, head_num, head_dim, layer_num):

|

||||

""" Initialize key buffer and value buffer on specified device """

|

||||

self.key_buffer = [

|

||||

torch.empty((size, head_num, head_dim), dtype=dtype, device=device) for _ in range(layer_num)

|

||||

]

|

||||

self.value_buffer = [

|

||||

torch.empty((size, head_num, head_dim), dtype=dtype, device=device) for _ in range(layer_num)

|

||||

]

|

||||

|

||||

@torch.no_grad()

|

||||

def alloc(self, required_size):

|

||||

""" allocate space of required_size by providing indexes representing available physical spaces """

|

||||

if required_size > self.available_size:

|

||||

self.logger.warning(f"No enough cache: required_size {required_size} "

|

||||

f"left_size {self.available_size}")

|

||||

return None

|

||||

torch.cumsum(self.mem_state, dim=0, dtype=torch.int32, out=self.mem_cum_sum)

|

||||

select_index = torch.logical_and(self.mem_cum_sum <= required_size, self.mem_state == 1)

|

||||

select_index = self.indexes[select_index]

|

||||

self.mem_state[select_index] = 0

|

||||

self.available_size -= len(select_index)

|

||||

return select_index

|

||||

|

||||

@torch.no_grad()

|

||||

def alloc_contiguous(self, required_size):

|

||||

""" allocate contiguous space of required_size """

|

||||

if required_size > self.available_size:

|

||||

self.logger.warning(f"No enough cache: required_size {required_size} "

|

||||

f"left_size {self.available_size}")

|

||||

return None

|

||||

torch.cumsum(self.mem_state, dim=0, dtype=torch.int32, out=self.mem_cum_sum)

|

||||

sum_size = len(self.mem_cum_sum)

|

||||

loc_sums = self.mem_cum_sum[required_size - 1:] - self.mem_cum_sum[0:sum_size - required_size +

|

||||

1] + self.mem_state[0:sum_size -

|

||||

required_size + 1]

|

||||

can_used_loc = self.indexes[0:sum_size - required_size + 1][loc_sums == required_size]

|

||||

if can_used_loc.shape[0] == 0:

|

||||

self.logger.info(f"No enough contiguous cache: required_size {required_size} "

|

||||

f"left_size {self.available_size}")

|

||||

return None

|

||||

start_loc = can_used_loc[0]

|

||||

select_index = self.indexes[start_loc:start_loc + required_size]

|

||||

self.mem_state[select_index] = 0

|

||||

self.available_size -= len(select_index)

|

||||

start = start_loc.item()

|

||||

end = start + required_size

|

||||

return select_index, start, end

|

||||

|

||||

@torch.no_grad()

|

||||

def free(self, free_index):

|

||||

""" free memory by updating memory states based on given indexes """

|

||||

self.available_size += free_index.shape[0]

|

||||

self.mem_state[free_index] = 1

|

||||

|

||||

@torch.no_grad()

|

||||

def free_all(self):

|

||||

""" free all memory by updating memory states """

|

||||

self.available_size = len(self.mem_state)

|

||||

self.mem_state[:] = 1

|

||||

self.past_key_values_length = 0

|

||||

self.logger.info("freed all space of memory manager")

|

||||

|

|

@ -0,0 +1,4 @@

|

|||

from .bloom import BloomInferenceForwards

|

||||

from .llama import LlamaInferenceForwards

|

||||

|

||||

__all__ = ['BloomInferenceForwards', 'LlamaInferenceForwards']

|

||||

|

|

@ -0,0 +1,521 @@

|

|||

import math

|

||||

import warnings

|

||||

from typing import List, Optional, Tuple, Union

|

||||

|

||||

import torch

|

||||

import torch.distributed as dist

|

||||

from torch.nn import CrossEntropyLoss

|

||||

from torch.nn import functional as F

|

||||

from transformers.models.bloom.modeling_bloom import (

|

||||

BaseModelOutputWithPastAndCrossAttentions,

|

||||

BloomAttention,

|

||||

BloomBlock,

|

||||

BloomForCausalLM,

|

||||

BloomModel,

|

||||

CausalLMOutputWithCrossAttentions,

|

||||

)

|

||||

from transformers.utils import logging

|

||||

|

||||

from colossalai.inference.tensor_parallel.batch_infer_state import BatchInferState

|

||||

from colossalai.kernel.triton.context_attention import bloom_context_attn_fwd

|

||||

from colossalai.kernel.triton.copy_kv_cache_dest import copy_kv_cache_to_dest

|

||||

from colossalai.kernel.triton.token_attention_kernel import token_attention_fwd

|

||||

|

||||

|

||||

def generate_alibi(n_head, dtype=torch.float16):

|

||||

"""

|

||||

This method is adapted from `_generate_alibi` function

|

||||

in `lightllm/models/bloom/layer_weights/transformer_layer_weight.py`

|

||||

of the ModelTC/lightllm GitHub repository.

|

||||

This method is originally the `build_alibi_tensor` function

|

||||

in `transformers/models/bloom/modeling_bloom.py`

|

||||

of the huggingface/transformers GitHub repository.

|

||||

"""

|

||||

|

||||

def get_slopes_power_of_2(n):

|

||||

start = 2**(-(2**-(math.log2(n) - 3)))

|

||||

return [start * start**i for i in range(n)]

|

||||

|

||||

def get_slopes(n):

|

||||

if math.log2(n).is_integer():

|

||||

return get_slopes_power_of_2(n)

|

||||

else:

|

||||

closest_power_of_2 = 2**math.floor(math.log2(n))

|

||||

slopes_power_of_2 = get_slopes_power_of_2(closest_power_of_2)

|

||||

slopes_double = get_slopes(2 * closest_power_of_2)

|

||||

slopes_combined = slopes_power_of_2 + slopes_double[0::2][:n - closest_power_of_2]

|

||||

return slopes_combined

|

||||

|

||||

slopes = get_slopes(n_head)

|

||||

return torch.tensor(slopes, dtype=dtype)

|

||||

|

||||

|

||||

class BloomInferenceForwards:

|

||||

"""

|

||||

This class serves a micro library for bloom inference forwards.

|

||||

We intend to replace the forward methods for BloomForCausalLM, BloomModel, BloomBlock, and BloomAttention,

|

||||

as well as prepare_inputs_for_generation method for BloomForCausalLM.

|

||||

For future improvement, we might want to skip replacing methods for BloomForCausalLM,

|

||||

and call BloomModel.forward iteratively in TpInferEngine

|

||||

"""

|

||||

|

||||

@staticmethod

|

||||

def bloom_model_forward(

|

||||

self: BloomModel,

|

||||

input_ids: Optional[torch.LongTensor] = None,

|

||||

past_key_values: Optional[Tuple[Tuple[torch.Tensor, torch.Tensor], ...]] = None,

|

||||

attention_mask: Optional[torch.Tensor] = None,

|

||||

head_mask: Optional[torch.LongTensor] = None,

|

||||

inputs_embeds: Optional[torch.LongTensor] = None,

|

||||

use_cache: Optional[bool] = None,

|

||||

output_attentions: Optional[bool] = None,

|

||||

output_hidden_states: Optional[bool] = None,

|

||||

return_dict: Optional[bool] = None,

|

||||

infer_state: Optional[BatchInferState] = None,

|

||||

**deprecated_arguments,

|

||||

) -> Union[Tuple[torch.Tensor, ...], BaseModelOutputWithPastAndCrossAttentions]:

|

||||

|

||||

logger = logging.get_logger(__name__)

|

||||

|

||||

if deprecated_arguments.pop("position_ids", False) is not False:

|

||||

# `position_ids` could have been `torch.Tensor` or `None` so defaulting pop to `False` allows to detect if users were passing explicitly `None`

|

||||

warnings.warn(

|

||||

"`position_ids` have no functionality in BLOOM and will be removed in v5.0.0. You can safely ignore"

|

||||

" passing `position_ids`.",

|

||||

FutureWarning,

|

||||

)

|

||||

if len(deprecated_arguments) > 0:

|

||||

raise ValueError(f"Got unexpected arguments: {deprecated_arguments}")

|

||||

|

||||

output_attentions = output_attentions if output_attentions is not None else self.config.output_attentions

|

||||

output_hidden_states = (output_hidden_states

|

||||

if output_hidden_states is not None else self.config.output_hidden_states)

|

||||

use_cache = use_cache if use_cache is not None else self.config.use_cache

|

||||

return_dict = return_dict if return_dict is not None else self.config.use_return_dict

|

||||

|

||||

if input_ids is not None and inputs_embeds is not None:

|

||||

raise ValueError("You cannot specify both input_ids and inputs_embeds at the same time")

|

||||

elif input_ids is not None:

|

||||

batch_size, seq_length = input_ids.shape

|

||||

elif inputs_embeds is not None:

|

||||

batch_size, seq_length, _ = inputs_embeds.shape

|

||||

else:

|

||||

raise ValueError("You have to specify either input_ids or inputs_embeds")

|

||||

|

||||

# still need to keep past_key_values to fit original forward flow

|

||||

if past_key_values is None:

|

||||

past_key_values = tuple([None] * len(self.h))

|

||||

|

||||

# Prepare head mask if needed

|

||||

# 1.0 in head_mask indicate we keep the head

|

||||

# attention_probs has shape batch_size x num_heads x N x N

|

||||

# head_mask has shape n_layer x batch x num_heads x N x N

|

||||

head_mask = self.get_head_mask(head_mask, self.config.n_layer)

|

||||

|

||||

if inputs_embeds is None:

|

||||

inputs_embeds = self.word_embeddings(input_ids)

|

||||

|

||||

hidden_states = self.word_embeddings_layernorm(inputs_embeds)

|

||||

|

||||

presents = () if use_cache else None

|

||||

all_self_attentions = () if output_attentions else None

|

||||

all_hidden_states = () if output_hidden_states else None

|

||||

|

||||

if self.gradient_checkpointing and self.training:

|

||||

if use_cache:

|

||||

logger.warning_once(

|

||||

"`use_cache=True` is incompatible with gradient checkpointing. Setting `use_cache=False`...")

|

||||

use_cache = False

|

||||

|

||||

# NOTE determine if BatchInferState is passed in via arg

|

||||

# if not, get the attr binded to the model

|

||||

# We might wantto remove setattr later

|

||||

if infer_state is None:

|

||||

assert hasattr(self, 'infer_state')

|

||||

infer_state = self.infer_state

|

||||

|

||||

# Compute alibi tensor: check build_alibi_tensor documentation

|

||||

seq_length_with_past = seq_length

|

||||

past_key_values_length = 0

|

||||

# if self.cache_manager.past_key_values_length > 0:

|

||||

if infer_state.cache_manager.past_key_values_length > 0:

|

||||

# update the past key values length in cache manager,

|

||||

# NOTE use BatchInferState.past_key_values_length instead the one in cache manager

|

||||

past_key_values_length = infer_state.cache_manager.past_key_values_length

|

||||

seq_length_with_past = seq_length_with_past + past_key_values_length

|

||||

|

||||

# infer_state.cache_manager = self.cache_manager

|

||||

|

||||

if use_cache and seq_length != 1:

|

||||

# prefill stage

|

||||

infer_state.is_context_stage = True # set prefill stage, notify attention layer

|

||||

infer_state.context_mem_index = infer_state.cache_manager.alloc(infer_state.total_token_num)

|

||||

BatchInferState.init_block_loc(infer_state.block_loc, infer_state.seq_len, seq_length,

|

||||

infer_state.context_mem_index)

|

||||

else:

|

||||

infer_state.is_context_stage = False

|

||||

alloc_mem = infer_state.cache_manager.alloc_contiguous(batch_size)

|

||||

if alloc_mem is not None:

|

||||

infer_state.decode_is_contiguous = True

|

||||

infer_state.decode_mem_index = alloc_mem[0]

|

||||

infer_state.decode_mem_start = alloc_mem[1]

|

||||

infer_state.decode_mem_end = alloc_mem[2]

|

||||

infer_state.block_loc[:, seq_length_with_past - 1] = infer_state.decode_mem_index

|

||||

else:

|

||||

print(f" *** Encountered allocation non-contiguous")

|

||||

print(

|

||||

f" infer_state.cache_manager.past_key_values_length: {infer_state.cache_manager.past_key_values_length}"

|

||||

)

|

||||

infer_state.decode_is_contiguous = False

|

||||

alloc_mem = infer_state.cache_manager.alloc(batch_size)

|

||||

infer_state.decode_mem_index = alloc_mem

|

||||

# infer_state.decode_key_buffer = torch.empty((batch_size, self.tp_head_num_, self.head_dim_), dtype=torch.float16, device="cuda")

|

||||

# infer_state.decode_value_buffer = torch.empty((batch_size, self.tp_head_num_, self.head_dim_), dtype=torch.float16, device="cuda")

|

||||

infer_state.block_loc[:, seq_length_with_past - 1] = infer_state.decode_mem_index

|

||||

|

||||

if attention_mask is None:

|

||||

attention_mask = torch.ones((batch_size, seq_length_with_past), device=hidden_states.device)

|

||||

else:

|

||||

attention_mask = attention_mask.to(hidden_states.device)

|

||||

|

||||

# NOTE revise: we might want to store a single 1D alibi(length is #heads) in model,

|

||||

# or store to BatchInferState to prevent re-calculating

|

||||

# When we have multiple process group (e.g. dp together with tp), we need to pass the pg to here

|

||||

# alibi = generate_alibi(self.num_heads).contiguous().cuda()

|

||||

tp_size = dist.get_world_size()

|

||||

curr_tp_rank = dist.get_rank()

|

||||

alibi = generate_alibi(self.num_heads * tp_size).contiguous()[curr_tp_rank * self.num_heads:(curr_tp_rank + 1) *

|

||||

self.num_heads].cuda()

|

||||

causal_mask = self._prepare_attn_mask(

|

||||

attention_mask,

|

||||

input_shape=(batch_size, seq_length),

|

||||

past_key_values_length=past_key_values_length,

|

||||

)

|

||||

|

||||

for i, (block, layer_past) in enumerate(zip(self.h, past_key_values)):

|

||||

if output_hidden_states:

|

||||

all_hidden_states = all_hidden_states + (hidden_states,)

|

||||

|

||||

if self.gradient_checkpointing and self.training:

|

||||

# NOTE: currently our KV cache manager does not handle this condition

|

||||

def create_custom_forward(module):

|

||||

|

||||

def custom_forward(*inputs):

|

||||

# None for past_key_value

|

||||

return module(*inputs, use_cache=use_cache, output_attentions=output_attentions)

|

||||

|

||||

return custom_forward

|

||||

|

||||

outputs = torch.utils.checkpoint.checkpoint(

|

||||

create_custom_forward(block),

|

||||

hidden_states,

|

||||

alibi,

|

||||

causal_mask,

|

||||

layer_past,

|

||||

head_mask[i],

|

||||

)

|

||||

else:

|

||||

outputs = block(

|

||||

hidden_states,

|

||||

layer_past=layer_past,

|

||||

attention_mask=causal_mask,

|

||||

head_mask=head_mask[i],

|

||||

use_cache=use_cache,

|

||||

output_attentions=output_attentions,

|

||||

alibi=alibi,

|

||||

infer_state=infer_state,

|

||||

)

|

||||

|

||||

hidden_states = outputs[0]

|

||||

if use_cache is True:

|

||||

presents = presents + (outputs[1],)

|

||||

|

||||

if output_attentions:

|

||||

all_self_attentions = all_self_attentions + (outputs[2 if use_cache else 1],)

|

||||

|

||||

# Add last hidden state

|

||||

hidden_states = self.ln_f(hidden_states)

|

||||

|

||||

if output_hidden_states:

|

||||

all_hidden_states = all_hidden_states + (hidden_states,)

|

||||

|

||||

# update indices of kv cache block

|

||||

# NOT READY FOR PRIME TIME

|

||||

# might want to remove this part, instead, better to pass the BatchInferState from model forward,

|

||||

# and update these information in engine.generate after model foward called

|

||||

infer_state.start_loc = infer_state.start_loc + torch.arange(0, batch_size, dtype=torch.int32, device="cuda")

|

||||

infer_state.seq_len += 1

|

||||

infer_state.decode_layer_id = 0

|

||||

|

||||

if not return_dict:

|

||||

return tuple(v for v in [hidden_states, presents, all_hidden_states, all_self_attentions] if v is not None)

|

||||

|

||||

return BaseModelOutputWithPastAndCrossAttentions(

|

||||

last_hidden_state=hidden_states,

|

||||

past_key_values=presents, # should always be (None, None, ..., None)

|

||||

hidden_states=all_hidden_states,

|

||||

attentions=all_self_attentions,

|

||||

)

|

||||

|

||||

@staticmethod

|

||||

def bloom_for_causal_lm_forward(self: BloomForCausalLM,

|

||||

input_ids: Optional[torch.LongTensor] = None,

|

||||

past_key_values: Optional[Tuple[Tuple[torch.Tensor, torch.Tensor], ...]] = None,

|

||||

attention_mask: Optional[torch.Tensor] = None,

|

||||

head_mask: Optional[torch.Tensor] = None,

|

||||

inputs_embeds: Optional[torch.Tensor] = None,

|

||||

labels: Optional[torch.Tensor] = None,

|

||||

use_cache: Optional[bool] = None,

|

||||

output_attentions: Optional[bool] = None,

|

||||

output_hidden_states: Optional[bool] = None,

|

||||

return_dict: Optional[bool] = None,

|

||||

infer_state: Optional[BatchInferState] = None,

|

||||

**deprecated_arguments):

|

||||

r"""

|

||||

labels (`torch.LongTensor` of shape `(batch_size, sequence_length)`, *optional*):

|

||||

Labels for language modeling. Note that the labels **are shifted** inside the model, i.e. you can set

|

||||

`labels = input_ids` Indices are selected in `[-100, 0, ..., config.vocab_size]` All labels set to `-100`

|

||||

are ignored (masked), the loss is only computed for labels in `[0, ..., config.vocab_size]`

|

||||

"""

|

||||

logger = logging.get_logger(__name__)

|

||||

|

||||

if deprecated_arguments.pop("position_ids", False) is not False:

|

||||

# `position_ids` could have been `torch.Tensor` or `None` so defaulting pop to `False` allows to detect if users were passing explicitly `None`

|

||||

warnings.warn(

|

||||

"`position_ids` have no functionality in BLOOM and will be removed in v5.0.0. You can safely ignore"

|

||||

" passing `position_ids`.",

|

||||

FutureWarning,

|

||||

)

|

||||

if len(deprecated_arguments) > 0:

|

||||

raise ValueError(f"Got unexpected arguments: {deprecated_arguments}")

|

||||

|

||||

return_dict = return_dict if return_dict is not None else self.config.use_return_dict

|

||||

|

||||

transformer_outputs = BloomInferenceForwards.bloom_model_forward(self.transformer,

|

||||

input_ids,

|

||||

past_key_values=past_key_values,

|

||||

attention_mask=attention_mask,

|

||||

head_mask=head_mask,

|

||||

inputs_embeds=inputs_embeds,

|

||||

use_cache=use_cache,

|

||||

output_attentions=output_attentions,

|

||||

output_hidden_states=output_hidden_states,

|

||||

return_dict=return_dict,

|

||||

infer_state=infer_state)

|

||||

hidden_states = transformer_outputs[0]

|

||||

|

||||

lm_logits = self.lm_head(hidden_states)

|

||||

|

||||

loss = None

|

||||

if labels is not None:

|

||||

# move labels to correct device to enable model parallelism

|

||||

labels = labels.to(lm_logits.device)

|

||||

# Shift so that tokens < n predict n

|

||||

shift_logits = lm_logits[..., :-1, :].contiguous()

|

||||

shift_labels = labels[..., 1:].contiguous()

|

||||

batch_size, seq_length, vocab_size = shift_logits.shape

|

||||

# Flatten the tokens

|

||||

loss_fct = CrossEntropyLoss()

|

||||

loss = loss_fct(shift_logits.view(batch_size * seq_length, vocab_size),

|

||||

shift_labels.view(batch_size * seq_length))

|

||||

|

||||

if not return_dict:

|

||||

output = (lm_logits,) + transformer_outputs[1:]

|

||||

return ((loss,) + output) if loss is not None else output

|

||||

|

||||

return CausalLMOutputWithCrossAttentions(

|

||||

loss=loss,

|

||||

logits=lm_logits,

|

||||

past_key_values=transformer_outputs.past_key_values,

|

||||

hidden_states=transformer_outputs.hidden_states,

|

||||

attentions=transformer_outputs.attentions,

|

||||

)

|

||||

|

||||

@staticmethod

|

||||

def bloom_for_causal_lm_prepare_inputs_for_generation(

|

||||

self: BloomForCausalLM,

|

||||

input_ids: torch.LongTensor,

|

||||

past_key_values: Optional[torch.Tensor] = None,

|

||||

attention_mask: Optional[torch.Tensor] = None,

|

||||

inputs_embeds: Optional[torch.Tensor] = None,

|

||||

**kwargs,

|

||||

) -> dict:

|

||||

# only last token for input_ids if past is not None

|

||||

if past_key_values:

|

||||

input_ids = input_ids[:, -1].unsqueeze(-1)

|

||||

|

||||

# NOTE we won't use past key values here

|

||||

# the cache may be in the stardard format (e.g. in contrastive search), convert to bloom's format if needed

|

||||

# if past_key_values[0][0].shape[0] == input_ids.shape[0]:

|

||||

# past_key_values = self._convert_to_bloom_cache(past_key_values)

|

||||

|

||||

# if `inputs_embeds` are passed, we only want to use them in the 1st generation step

|

||||

if inputs_embeds is not None and past_key_values is None:

|

||||

model_inputs = {"inputs_embeds": inputs_embeds}

|

||||

else:

|

||||

model_inputs = {"input_ids": input_ids}

|

||||

|

||||

model_inputs.update({

|

||||

"past_key_values": past_key_values,

|

||||

"use_cache": kwargs.get("use_cache"),

|

||||

"attention_mask": attention_mask,

|

||||

})

|

||||

return model_inputs

|

||||

|

||||

@staticmethod

|

||||

def bloom_block_forward(

|

||||

self: BloomBlock,

|

||||

hidden_states: torch.Tensor,

|

||||

alibi: torch.Tensor,

|

||||

attention_mask: torch.Tensor,

|

||||

layer_past: Optional[Tuple[torch.Tensor, torch.Tensor]] = None,

|

||||

head_mask: Optional[torch.Tensor] = None,

|

||||

use_cache: bool = False,

|

||||

output_attentions: bool = False,

|

||||

infer_state: Optional[BatchInferState] = None,

|

||||

):

|

||||

# hidden_states: [batch_size, seq_length, hidden_size]

|

||||

|

||||

# Layer norm at the beginning of the transformer layer.

|

||||

layernorm_output = self.input_layernorm(hidden_states)

|

||||

|

||||

# Layer norm post the self attention.

|

||||

if self.apply_residual_connection_post_layernorm:

|

||||

residual = layernorm_output

|

||||

else:

|

||||

residual = hidden_states

|

||||

|

||||

# Self attention.

|

||||

attn_outputs = self.self_attention(

|

||||

layernorm_output,

|

||||

residual,

|

||||

layer_past=layer_past,

|

||||

attention_mask=attention_mask,

|

||||

alibi=alibi,

|

||||

head_mask=head_mask,

|

||||

use_cache=use_cache,

|

||||

output_attentions=output_attentions,

|

||||

infer_state=infer_state,

|

||||

)

|

||||

|

||||

attention_output = attn_outputs[0]

|

||||

|

||||

outputs = attn_outputs[1:]

|

||||

|

||||

layernorm_output = self.post_attention_layernorm(attention_output)

|

||||

|

||||

# Get residual

|

||||

if self.apply_residual_connection_post_layernorm:

|

||||

residual = layernorm_output

|

||||

else:

|

||||

residual = attention_output

|

||||

|

||||

# MLP.

|

||||

output = self.mlp(layernorm_output, residual)

|

||||

|

||||

if use_cache:

|

||||

outputs = (output,) + outputs

|

||||

else:

|

||||

outputs = (output,) + outputs[1:]

|

||||

|

||||

return outputs # hidden_states, present, attentions

|

||||

|

||||

@staticmethod

|

||||

def bloom_attention_forward(

|

||||

self: BloomAttention,

|

||||

hidden_states: torch.Tensor,

|

||||

residual: torch.Tensor,

|

||||

alibi: torch.Tensor,

|

||||

attention_mask: torch.Tensor,

|

||||

layer_past: Optional[Tuple[torch.Tensor, torch.Tensor]] = None,

|

||||

head_mask: Optional[torch.Tensor] = None,

|

||||

use_cache: bool = False,

|

||||

output_attentions: bool = False,

|

||||

infer_state: Optional[BatchInferState] = None,

|

||||

):

|

||||

|

||||

fused_qkv = self.query_key_value(hidden_states) # [batch_size, seq_length, 3 x hidden_size]

|

||||

|

||||

# 3 x [batch_size, seq_length, num_heads, head_dim]

|

||||

(query_layer, key_layer, value_layer) = self._split_heads(fused_qkv)

|

||||

batch_size, q_length, H, D_HEAD = query_layer.shape

|

||||

k = key_layer.reshape(-1, H, D_HEAD) # batch_size * q_length, H, D_HEAD, q_lenth == 1

|

||||

v = value_layer.reshape(-1, H, D_HEAD) # batch_size * q_length, H, D_HEAD, q_lenth == 1

|

||||

|

||||

mem_manager = infer_state.cache_manager

|

||||

layer_id = infer_state.decode_layer_id

|

||||

|

||||

if layer_id == 0: # once per model.forward

|

||||

infer_state.cache_manager.past_key_values_length += q_length # += 1

|

||||

|

||||

if infer_state.is_context_stage:

|

||||

# context process

|

||||

max_input_len = q_length

|

||||

b_start_loc = infer_state.start_loc

|

||||

b_seq_len = infer_state.seq_len[:batch_size]

|

||||

q = query_layer.reshape(-1, H, D_HEAD)

|

||||

|

||||

copy_kv_cache_to_dest(k, infer_state.context_mem_index, mem_manager.key_buffer[layer_id])

|

||||

copy_kv_cache_to_dest(v, infer_state.context_mem_index, mem_manager.value_buffer[layer_id])

|

||||

|

||||

# output = self.output[:batch_size*q_length, :, :]

|

||||

output = torch.empty_like(q)

|

||||

|

||||

bloom_context_attn_fwd(q, k, v, output, b_start_loc, b_seq_len, max_input_len, alibi)

|

||||

|

||||