mirror of https://github.com/InternLM/InternLM

[Update] InternLM2.5 (#752)

Co-authored-by: zhangwenwei <zhangwenwei@pjlab.org.cn> Co-authored-by: ZwwWayne <wayne.zw@outlook.com> Co-authored-by: 张硕 <zhangshuo@pjlab.org.cn> Co-authored-by: zhangsongyang <zhangsongyang@pjlab.org.cn> Co-authored-by: 王子奕 <wangziyi@pjlab.org.cn> Co-authored-by: 曹巍瀚 <caoweihan@pjlab.org.cn> Co-authored-by: tonysy <sy.zhangbuaa@gmail.com> Co-authored-by: 李博文 <libowen@pjlab.org.cn>pull/753/head

parent

9943444614

commit

3b086d7914

143

README.md

143

README.md

|

|

@ -36,18 +36,18 @@

|

|||

|

||||

## Introduction

|

||||

|

||||

InternLM2 series are released with the following features:

|

||||

InternLM2.5 series are released with the following features:

|

||||

|

||||

- **200K Context window**: Nearly perfect at finding needles in the haystack with 200K-long context, with leading performance on long-context tasks like LongBench and L-Eval. Try it with [LMDeploy](./chat/lmdeploy.md) for 200K-context inference.

|

||||

- **Outstanding reasoning capability**: State-of-the-art performance on Math reasoning, surpassing models like Llama3 and Gemma2-9B.

|

||||

|

||||

- **Outstanding comprehensive performance**: Significantly better than the last generation in all dimensions, especially in reasoning, math, code, chat experience, instruction following, and creative writing, with leading performance among open-source models in similar sizes. In some evaluations, InternLM2-Chat-20B may match or even surpass ChatGPT (GPT-3.5).

|

||||

- **1M Context window**: Nearly perfect at finding needles in the haystack with 1M-long context, with leading performance on long-context tasks like LongBench. Try it with [LMDeploy](./chat/lmdeploy.md) for 1M-context inference. More details and a file chat demo are found [here](./long_context/README.md).

|

||||

|

||||

- **Code interpreter & Data analysis**: With code interpreter, InternLM2-Chat-20B obtains compatible performance with GPT-4 on GSM8K and MATH. InternLM2-Chat also provides data analysis capability.

|

||||

|

||||

- **Stronger tool use**: Based on better tool utilization-related capabilities in instruction following, tool selection and reflection, InternLM2 can support more kinds of agents and multi-step tool calling for complex tasks. See [examples](./agent/).

|

||||

- **Stronger tool use**: InternLM2.5 supports gathering information from more than 100 web pages, corresponding implementation will be released in [Lagent](https://github.com/InternLM/lagent/tree/main) soon. InternLM2.5 has better tool utilization-related capabilities in instruction following, tool selection and reflection. See [examples](./agent/).

|

||||

|

||||

## News

|

||||

|

||||

\[2024.06.30\] We release InternLM2.5-7B, InternLM2.5-7B-Chat and InternLM2.5-7B-Chat-1M. See [model zoo below](#model-zoo) for download or [model cards](./model_cards/) for more details.

|

||||

|

||||

\[2024.03.26\] We release InternLM2 technical report. See [arXiv](https://arxiv.org/abs/2403.17297) for details.

|

||||

|

||||

\[2024.01.31\] We release InternLM2-1.8B, along with the associated chat model. They provide a cheaper deployment option while maintaining leading performance.

|

||||

|

|

@ -62,6 +62,33 @@ InternLM2 series are released with the following features:

|

|||

|

||||

## Model Zoo

|

||||

|

||||

### InternLM2.5

|

||||

|

||||

| Model | Transformers(HF) | ModelScope(HF) | OpenXLab(HF) | OpenXLab(Origin) | Release Date |

|

||||

| -------------------------- | ------------------------------------------ | ---------------------------------------- | -------------------------------------- | ------------------------------------------ | ------------ |

|

||||

| **InternLM2.5-7B** | [🤗internlm2_5-7b](https://huggingface.co/internlm/internlm2_5-7b) | [<img src="./assets/modelscope_logo.png" width="20px" /> internlm2_5-7b](https://modelscope.cn/models/Shanghai_AI_Laboratory/internlm2_5-7b/summary) | [](https://openxlab.org.cn/models/detail/OpenLMLab/internlm2_5-7b) | [](https://openxlab.org.cn/models/detail/OpenLMLab/internlm2_5-7b-original) | 2024-06-30 |

|

||||

| **InternLM2.5-7B-Chat** | [🤗internlm2_5-7b-chat](https://huggingface.co/internlm/internlm2_5-7b-chat) | [<img src="./assets/modelscope_logo.png" width="20px" /> internlm2_5-7b-chat](https://modelscope.cn/models/Shanghai_AI_Laboratory/internlm2_5-7b-chat/summary) | [](https://openxlab.org.cn/models/detail/OpenLMLab/internlm2_5-7b-chat) | [](https://openxlab.org.cn/models/detail/OpenLMLab/internlm2_5-7b-chat-original) | 2024-06-30 |

|

||||

| **InternLM2.5-7B-Chat-1M** | [🤗internlm2_5-7b-chat-1m](https://huggingface.co/internlm/internlm2_5-7b-chat-1m) | [<img src="./assets/modelscope_logo.png" width="20px" /> internlm2_5-7b-chat-1m](https://modelscope.cn/models/Shanghai_AI_Laboratory/internlm2_5-7b-chat-1m/summary) | [](https://openxlab.org.cn/models/detail/OpenLMLab/internlm2_5-7b-chat-1m) | [](https://openxlab.org.cn/models/detail/OpenLMLab/internlm2_5-7b-chat-1m-original) | 2024-06-30 |

|

||||

|

||||

**Notes:**

|

||||

|

||||

The release of InternLM2.5 series contains 7B model size for now and we are going to release the 1.8B and 20B versions soon. 7B models are efficient for research and application and 20B models are more powerful and can support more complex scenarios. The relation of these models are shown as follows.

|

||||

|

||||

1. **InternLM2.5**: Foundation models pre-trained on large-scale corpus. InternLM2.5 models are recommended for consideration in most applications.

|

||||

2. **InternLM2.5-Chat**: The Chat model that undergoes supervised fine-tuning (SFT) and reinforcement learning from human feedback (RLHF), based on the InternLM2.5 model. InternLM2.5-Chat is optimized for instruction following, chat experience, and function call, which is recommended for downstream applications.

|

||||

3. **InternLM2.5-Chat-1M**: InternLM2.5-Chat-1M supports 1M long-context with compatible performance as InternLM2.5-Chat.

|

||||

|

||||

**Limitations:** Although we have made efforts to ensure the safety of the model during the training process and to encourage the model to generate text that complies with ethical and legal requirements, the model may still produce unexpected outputs due to its size and probabilistic generation paradigm. For example, the generated responses may contain biases, discrimination, or other harmful content. Please do not propagate such content. We are not responsible for any consequences resulting from the dissemination of harmful information.

|

||||

|

||||

**Supplements:** `HF` refers to the format used by HuggingFace in [transformers](https://github.com/huggingface/transformers), whereas `Origin` denotes the format adopted by the InternLM team in [InternEvo](https://github.com/InternLM/InternEvo).

|

||||

|

||||

### InternLM2

|

||||

|

||||

<details>

|

||||

<summary>(click to expand)</summary>

|

||||

|

||||

Our previous generation models with advanced capabilities in long-context processing, reasoning, and coding. See [model cards](./model_cards/) for more details.

|

||||

|

||||

| Model | Transformers(HF) | ModelScope(HF) | OpenXLab(HF) | OpenXLab(Origin) | Release Date |

|

||||

| --------------------------- | ----------------------------------------- | ---------------------------------------- | -------------------------------------- | ------------------------------------------ | ------------ |

|

||||

| **InternLM2-1.8B** | [🤗internlm2-1.8b](https://huggingface.co/internlm/internlm2-1_8b) | [<img src="./assets/modelscope_logo.png" width="20px" /> internlm2-1.8b](https://www.modelscope.cn/models/Shanghai_AI_Laboratory/internlm2-1_8b/summary) | [](https://openxlab.org.cn/models/detail/OpenLMLab/internlm2-base-1.8b) | [](https://openxlab.org.cn/models/detail/OpenLMLab/internlm2-base-1.8b-original) | 2024-01-31 |

|

||||

|

|

@ -76,63 +103,38 @@ InternLM2 series are released with the following features:

|

|||

| **InternLM2-Chat-20B-SFT** | [🤗internlm2-chat-20b-sft](https://huggingface.co/internlm/internlm2-chat-20b-sft) | [<img src="./assets/modelscope_logo.png" width="20px" /> internlm2-chat-20b-sft](https://modelscope.cn/models/Shanghai_AI_Laboratory/internlm2-chat-20b-sft/summary) | [](https://openxlab.org.cn/models/detail/OpenLMLab/internlm2-chat-20b-sft) | [](https://openxlab.org.cn/models/detail/OpenLMLab/internlm2-chat-20b-sft-original) | 2024-01-17 |

|

||||

| **InternLM2-Chat-20B** | [🤗internlm2-chat-20b](https://huggingface.co/internlm/internlm2-chat-20b) | [<img src="./assets/modelscope_logo.png" width="20px" /> internlm2-chat-20b](https://modelscope.cn/models/Shanghai_AI_Laboratory/internlm2-chat-20b/summary) | [](https://openxlab.org.cn/models/detail/OpenLMLab/internlm2-chat-20b) | [](https://openxlab.org.cn/models/detail/OpenLMLab/internlm2-chat-20b-original) | 2024-01-17 |

|

||||

|

||||

**Notes:**

|

||||

|

||||

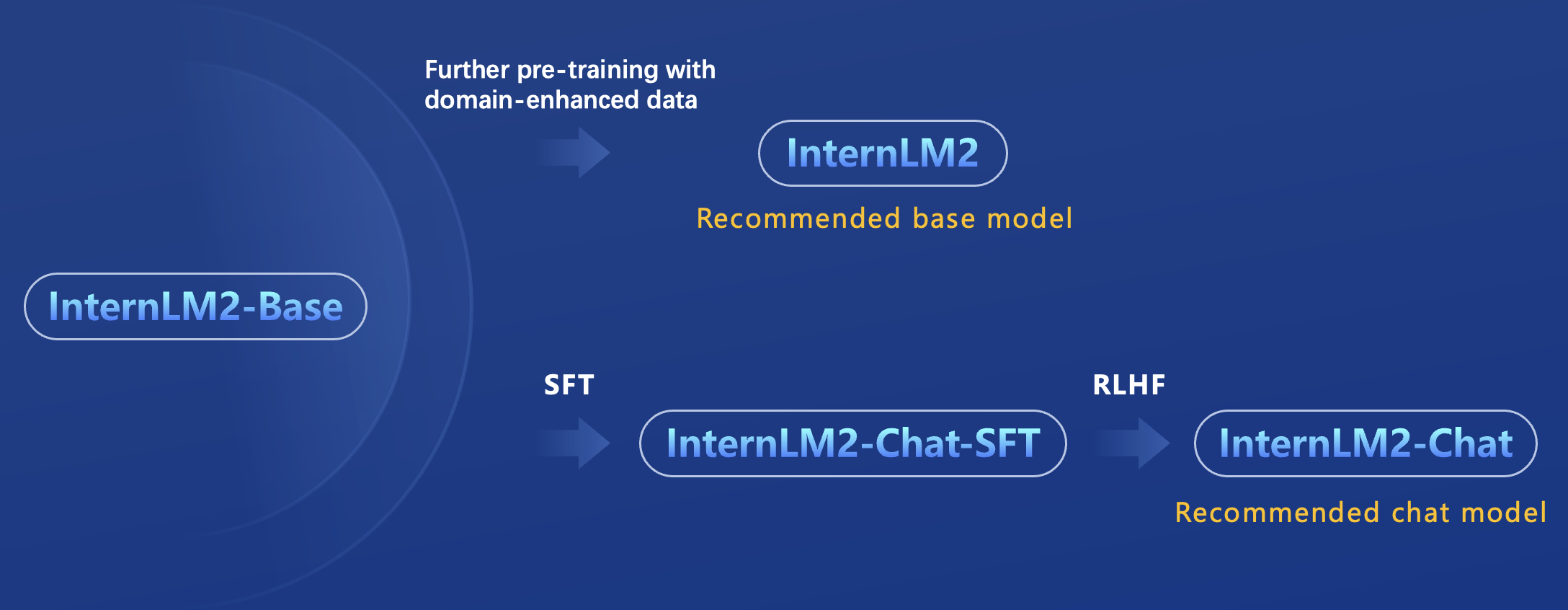

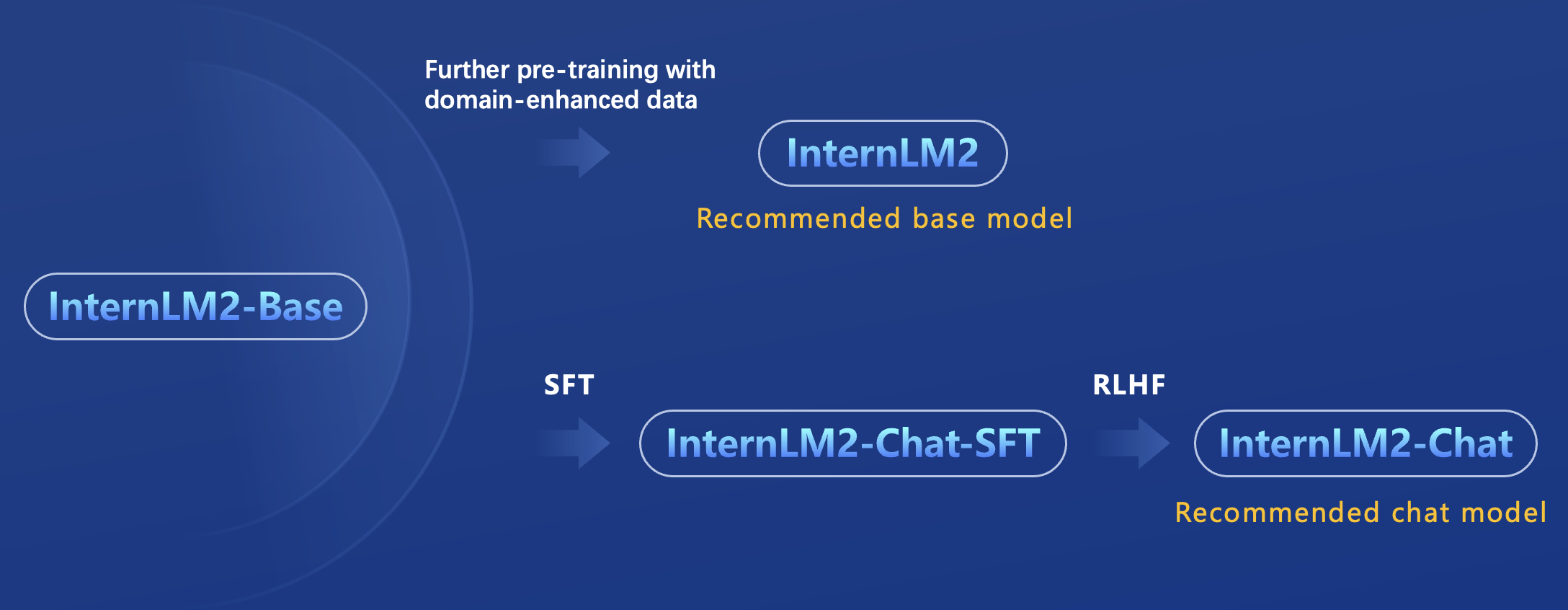

The release of InternLM2 series contains two model sizes: 7B and 20B. 7B models are efficient for research and application and 20B models are more powerful and can support more complex scenarios. The relation of these models are shown as follows.

|

||||

|

||||

|

||||

|

||||

1. **InternLM2-Base**: Foundation models with high quality and high adaptation flexibility, which serve as a good starting point for downstream deep adaptations.

|

||||

2. **InternLM2**: Further pretrain with general domain data and domain-enhanced corpus, obtaining state-of-the-art performance in evaluation with good language capability. InternLM2 models are recommended for consideration in most applications.

|

||||

3. **InternLM2-Chat-SFT**: Intermediate version of InternLM2-Chat that only undergoes supervised fine-tuning (SFT), based on the InternLM2-Base model. We release them to benefit research on alignment.

|

||||

4. **InternLM2-Chat**: Further aligned on top of InternLM2-Chat-SFT through online RLHF. InternLM2-Chat exhibits better instruction following, chat experience, and function call, which is recommended for downstream applications.

|

||||

|

||||

**Limitations:** Although we have made efforts to ensure the safety of the model during the training process and to encourage the model to generate text that complies with ethical and legal requirements, the model may still produce unexpected outputs due to its size and probabilistic generation paradigm. For example, the generated responses may contain biases, discrimination, or other harmful content. Please do not propagate such content. We are not responsible for any consequences resulting from the dissemination of harmful information.

|

||||

|

||||

**Supplements:** `HF` refers to the format used by HuggingFace in [transformers](https://github.com/huggingface/transformers), whereas `Origin` denotes the format adopted by the InternLM team in [InternEvo](https://github.com/InternLM/InternEvo).

|

||||

</details>

|

||||

|

||||

## Performance

|

||||

|

||||

### Objective Evaluation

|

||||

We have evaluated InternLM2.5 on several important benchmarks using the open-source evaluation tool [OpenCompass](https://github.com/open-compass/opencompass). Some of the evaluation results are shown in the table below. You are welcome to visit the [OpenCompass Leaderboard](https://rank.opencompass.org.cn) for more evaluation results.

|

||||

|

||||

| Dataset | Baichuan2-7B-Chat | Mistral-7B-Instruct-v0.2 | Qwen-7B-Chat | InternLM2-Chat-7B | ChatGLM3-6B | Baichuan2-13B-Chat | Mixtral-8x7B-Instruct-v0.1 | Qwen-14B-Chat | InternLM2-Chat-20B |

|

||||

| ---------------- | ----------------- | ------------------------ | ------------ | ----------------- | ----------- | ------------------ | -------------------------- | ------------- | ------------------ |

|

||||

| MMLU | 50.1 | 59.2 | 57.1 | 63.7 | 58.0 | 56.6 | 70.3 | 66.7 | 66.5 |

|

||||

| CMMLU | 53.4 | 42.0 | 57.9 | 63.0 | 57.8 | 54.8 | 50.6 | 68.1 | 65.1 |

|

||||

| AGIEval | 35.3 | 34.5 | 39.7 | 47.2 | 44.2 | 40.0 | 41.7 | 46.5 | 50.3 |

|

||||

| C-Eval | 53.9 | 42.4 | 59.8 | 60.8 | 59.1 | 56.3 | 54.0 | 71.5 | 63.0 |

|

||||

| TrivialQA | 37.6 | 35.0 | 46.1 | 50.8 | 38.1 | 40.3 | 57.7 | 54.5 | 53.9 |

|

||||

| NaturalQuestions | 12.8 | 8.1 | 18.6 | 24.1 | 14.0 | 12.7 | 22.5 | 22.9 | 25.9 |

|

||||

| C3 | 78.5 | 66.9 | 84.4 | 91.5 | 79.3 | 84.4 | 82.1 | 91.5 | 93.5 |

|

||||

| CMRC | 8.1 | 5.6 | 14.6 | 63.8 | 43.2 | 27.8 | 5.3 | 13.0 | 50.4 |

|

||||

| WinoGrande | 49.9 | 50.8 | 54.2 | 65.8 | 61.7 | 50.9 | 60.9 | 55.7 | 74.8 |

|

||||

| BBH | 35.9 | 46.5 | 45.5 | 61.2 | 56.0 | 42.5 | 57.3 | 55.8 | 68.3 |

|

||||

| GSM-8K | 32.4 | 48.3 | 44.1 | 70.7 | 53.8 | 56.0 | 71.7 | 57.7 | 79.6 |

|

||||

| Math | 5.7 | 8.6 | 12.0 | 23.0 | 20.4 | 4.3 | 22.5 | 27.6 | 31.9 |

|

||||

| HumanEval | 17.7 | 35.4 | 36.0 | 59.8 | 52.4 | 19.5 | 37.8 | 40.9 | 67.1 |

|

||||

| MBPP | 37.7 | 25.7 | 33.9 | 51.4 | 55.6 | 40.9 | 40.9 | 30.0 | 65.8 |

|

||||

### Base Model

|

||||

|

||||

- Performance of MBPP is reported with MBPP(Sanitized)

|

||||

| Benchmark | InternLM2-7B | LLaMA-3-8B | Yi-1.5-9B | InternLM2.5-7B |

|

||||

| ------------- | ------------ | ---------- | --------- | -------------- |

|

||||

| MMLU(5-shot) | 65.8 | 66.4 | 71.6 | 71.6 |

|

||||

| CMMLU(5-shot) | 66.2 | 51.0 | 74.1 | 79.1 |

|

||||

| BBH(3-shot) | 65.0 | 59.7 | 71.1 | 70.1 |

|

||||

| MATH(4-shot) | 20.2 | 16.4 | 31.9 | 34.0 |

|

||||

| GSM8K(4-shot) | 70.8 | 54.3 | 74.5 | 74.8 |

|

||||

| GPQA(0-shot) | 28.3 | 31.3 | 27.8 | 31.3 |

|

||||

|

||||

### Alignment Evaluation

|

||||

### Chat Model

|

||||

|

||||

- We have evaluated our model on [AlpacaEval 2.0](https://tatsu-lab.github.io/alpaca_eval/) and InternLM2-Chat-20B surpass Claude 2, GPT-4(0613) and Gemini Pro.

|

||||

| Benchmark | InternLM2-Chat-7B | LLaMA-3-8B-Instruct | Yi-1.5-9B-Chat | GLM-4-9B-Chat | Qwen2-7B-Instruct | Gemma2-9B-IT | InternLM2.5-7B-Chat | Llama-3-70B-Instruct |

|

||||

| ----------------- | ----------------- | ------------------- | -------------- | ------------- | ----------------- | ------------ | ------------------- | -------------------- |

|

||||

| MMLU(5-shot) | 62.3 | 68.4 | 71.0 | 71.4 | 70.8 | 70.9 | 72.8 | 80.5 |

|

||||

| CMMLU(5-shot) | 62.4 | 53.3 | 74.5 | 74.5 | 80.9 | 60.3 | 78.0 | 70.1 |

|

||||

| BBH(3-shot CoT) | 59.0 | 54.4 | 69.6 | 69.6 | 65.0 | 68.2\* | 71.6 | 80.5 |

|

||||

| MATH(0-shot CoT) | 27.6 | 27.9 | 51.1 | 51.1 | 48.6 | 46.9 | 60.1 | 47.1 |

|

||||

| GSM8K(0-shot CoT) | 72.5 | 72.9 | 80.1 | 85.3 | 82.9 | 88.9 | 86.0 | 92.8 |

|

||||

| GPQA(0-shot) | 29.8 | 26.1 | 37.9 | 36.9 | 38.4 | 33.8 | 38.4 | 38.9 |

|

||||

|

||||

| Model Name | Win Rate | Length |

|

||||

| ------------------ | -------- | ------ |

|

||||

| GPT-4 Turbo | 50.00% | 2049 |

|

||||

| GPT-4 | 23.58% | 1365 |

|

||||

| GPT-4 0314 | 22.07% | 1371 |

|

||||

| Mistral Medium | 21.86% | 1500 |

|

||||

| XwinLM 70b V0.1 | 21.81% | 1775 |

|

||||

| InternLM2 Chat 20B | 21.75% | 2373 |

|

||||

| Mixtral 8x7B v0.1 | 18.26% | 1465 |

|

||||

| Claude 2 | 17.19% | 1069 |

|

||||

| Gemini Pro | 16.85% | 1315 |

|

||||

| GPT-4 0613 | 15.76% | 1140 |

|

||||

| Claude 2.1 | 15.73% | 1096 |

|

||||

|

||||

- According to the released performance of 2024-01-17.

|

||||

- We use `ppl` for the MCQ evaluation on base model.

|

||||

- The evaluation results were obtained from [OpenCompass](https://github.com/open-compass/opencompass) , and evaluation configuration can be found in the configuration files provided by [OpenCompass](https://github.com/open-compass/opencompass).

|

||||

- The evaluation data may have numerical differences due to the version iteration of [OpenCompass](https://github.com/open-compass/opencompass), so please refer to the latest evaluation results of [OpenCompass](https://github.com/open-compass/opencompass).

|

||||

- \* means the result is copied from the original paper.

|

||||

|

||||

## Requirements

|

||||

|

||||

|

|

@ -152,14 +154,14 @@ transformers >= 4.38

|

|||

|

||||

### Import from Transformers

|

||||

|

||||

To load the InternLM2-7B-Chat model using Transformers, use the following code:

|

||||

To load the InternLM2.5-7B-Chat model using Transformers, use the following code:

|

||||

|

||||

```python

|

||||

import torch

|

||||

from transformers import AutoTokenizer, AutoModelForCausalLM

|

||||

tokenizer = AutoTokenizer.from_pretrained("internlm/internlm2-chat-7b", trust_remote_code=True)

|

||||

tokenizer = AutoTokenizer.from_pretrained("internlm/internlm2_5-7b-chat", trust_remote_code=True)

|

||||

# Set `torch_dtype=torch.float16` to load model in float16, otherwise it will be loaded as float32 and might cause OOM Error.

|

||||

model = AutoModelForCausalLM.from_pretrained("internlm/internlm2-chat-7b", device_map="auto", trust_remote_code=True, torch_dtype=torch.float16)

|

||||

model = AutoModelForCausalLM.from_pretrained("internlm/internlm2_5-7b-chat", device_map="auto", trust_remote_code=True, torch_dtype=torch.float16)

|

||||

# (Optional) If on low resource devices, you can load model in 4-bit or 8-bit to further save GPU memory via bitsandbytes.

|

||||

# InternLM 7B in 4bit will cost nearly 8GB GPU memory.

|

||||

# pip install -U bitsandbytes

|

||||

|

|

@ -175,12 +177,12 @@ print(response)

|

|||

|

||||

### Import from ModelScope

|

||||

|

||||

To load the InternLM2-7B-Chat model using ModelScope, use the following code:

|

||||

To load the InternLM2.5-7B-Chat model using ModelScope, use the following code:

|

||||

|

||||

```python

|

||||

import torch

|

||||

from modelscope import snapshot_download, AutoTokenizer, AutoModelForCausalLM

|

||||

model_dir = snapshot_download('Shanghai_AI_Laboratory/internlm2-chat-7b')

|

||||

model_dir = snapshot_download('Shanghai_AI_Laboratory/internlm2_5-7b-chat')

|

||||

tokenizer = AutoTokenizer.from_pretrained(model_dir, device_map="auto", trust_remote_code=True)

|

||||

# Set `torch_dtype=torch.float16` to load model in float16, otherwise it will be loaded as float32 and might cause OOM Error.

|

||||

model = AutoModelForCausalLM.from_pretrained(model_dir, device_map="auto", trust_remote_code=True, torch_dtype=torch.float16)

|

||||

|

|

@ -210,26 +212,33 @@ streamlit run ./chat/web_demo.py

|

|||

|

||||

We use [LMDeploy](https://github.com/InternLM/LMDeploy) for fast deployment of InternLM.

|

||||

|

||||

With only 4 lines of codes, you can perform `internlm2-chat-7b` inference after `pip install lmdeploy>=0.2.1`.

|

||||

With only 4 lines of codes, you can perform `internlm2_5-7b-chat` inference after `pip install lmdeploy>=0.2.1`.

|

||||

|

||||

```python

|

||||

from lmdeploy import pipeline

|

||||

pipe = pipeline("internlm/internlm2-chat-7b")

|

||||

pipe = pipeline("internlm/internlm2_5-7b-chat")

|

||||

response = pipe(["Hi, pls intro yourself", "Shanghai is"])

|

||||

print(response)

|

||||

```

|

||||

|

||||

Please refer to the [guidance](./chat/lmdeploy.md) for more usages about model deployment. For additional deployment tutorials, feel free to explore [here](https://github.com/InternLM/LMDeploy).

|

||||

|

||||

### 200K-long-context Inference

|

||||

### 1M-long-context Inference

|

||||

|

||||

By enabling the Dynamic NTK feature of LMDeploy, you can acquire the long-context inference power.

|

||||

|

||||

Note: 1M context length requires 4xA100-80G.

|

||||

|

||||

```python

|

||||

from lmdeploy import pipeline, GenerationConfig, TurbomindEngineConfig

|

||||

|

||||

backend_config = TurbomindEngineConfig(rope_scaling_factor=2.0, session_len=200000)

|

||||

pipe = pipeline('internlm/internlm2-chat-7b', backend_config=backend_config)

|

||||

backend_config = TurbomindEngineConfig(

|

||||

rope_scaling_factor=2.5,

|

||||

session_len=1048576, # 1M context length

|

||||

max_batch_size=1,

|

||||

cache_max_entry_count=0.7,

|

||||

tp=4) # 4xA100-80G.

|

||||

pipe = pipeline('internlm/internlm2_5-7b-chat-1m', backend_config=backend_config)

|

||||

prompt = 'Use a long prompt to replace this sentence'

|

||||

response = pipe(prompt)

|

||||

print(response)

|

||||

|

|

@ -237,7 +246,7 @@ print(response)

|

|||

|

||||

## Agent

|

||||

|

||||

InternLM2-Chat models have excellent tool utilization capabilities and can work with function calls in a zero-shot manner. See more examples in [agent session](./agent/).

|

||||

InternLM2.5-Chat models have excellent tool utilization capabilities and can work with function calls in a zero-shot manner. It also supports to conduct analysis by collecting information from more than 100 web pages. See more examples in [agent section](./agent/).

|

||||

|

||||

## Fine-tuning

|

||||

|

||||

|

|

@ -247,7 +256,7 @@ Please refer to [finetune docs](./finetune/) for fine-tuning with InternLM.

|

|||

|

||||

## Evaluation

|

||||

|

||||

We utilize [OpenCompass](https://github.com/open-compass/opencompass) for model evaluation. In InternLM-2, we primarily focus on standard objective evaluation, long-context evaluation (needle in a haystack), data contamination assessment, agent evaluation, and subjective evaluation.

|

||||

We utilize [OpenCompass](https://github.com/open-compass/opencompass) for model evaluation. In InternLM2.5, we primarily focus on standard objective evaluation, long-context evaluation (needle in a haystack), data contamination assessment, agent evaluation, and subjective evaluation.

|

||||

|

||||

### Objective Evaluation

|

||||

|

||||

|

|

|

|||

144

README_zh-CN.md

144

README_zh-CN.md

|

|

@ -36,15 +36,16 @@

|

|||

|

||||

## 简介

|

||||

|

||||

InternLM2 系列模型在本仓库正式发布,具有如下特性:

|

||||

InternLM2.5 系列模型在本仓库正式发布,具有如下特性:

|

||||

|

||||

- 有效支持20万字超长上下文:模型在 20 万字长输入中几乎完美地实现长文“大海捞针”,而且在 LongBench 和 L-Eval 等长文任务中的表现也达到开源模型中的领先水平。 可以通过 [LMDeploy](./chat/lmdeploy_zh_cn.md) 尝试20万字超长上下文推理。

|

||||

- 综合性能全面提升:各能力维度相比上一代模型全面进步,在推理、数学、代码、对话体验、指令遵循和创意写作等方面的能力提升尤为显著,综合性能达到同量级开源模型的领先水平,在重点能力评测上 InternLM2-Chat-20B 能比肩甚至超越 ChatGPT (GPT-3.5)。

|

||||

- 代码解释器与数据分析:在配合代码解释器(code-interpreter)的条件下,InternLM2-Chat-20B 在 GSM8K 和 MATH 上可以达到和 GPT-4 相仿的水平。基于在数理和工具方面强大的基础能力,InternLM2-Chat 提供了实用的数据分析能力。

|

||||

- 工具调用能力整体升级:基于更强和更具有泛化性的指令理解、工具筛选与结果反思等能力,新版模型可以更可靠地支持复杂智能体的搭建,支持对工具进行有效的多轮调用,完成较复杂的任务。可以查看更多[样例](./agent/)。

|

||||

- 卓越的推理性能:在数学推理方面取得了同量级模型最优精度,超越了 Llama3 和 Gemma2-9B。

|

||||

- 有效支持百万字超长上下文:模型在 1 百万字长输入中几乎完美地实现长文“大海捞针”,而且在 LongBench 等长文任务中的表现也达到开源模型中的领先水平。 可以通过 [LMDeploy](./chat/lmdeploy_zh_cn.md) 尝试百万字超长上下文推理。更多内容和文档对话 demo 请查看[这里](./long_context/README_zh-CN.md)。

|

||||

- 工具调用能力整体升级:InternLM2.5 支持从上百个网页搜集有效信息进行分析推理,相关实现将于近期开源到 [Lagent](https://github.com/InternLM/lagent/tree/main)。InternLM2.5 具有更强和更具有泛化性的指令理解、工具筛选与结果反思等能力,新版模型可以更可靠地支持复杂智能体的搭建,支持对工具进行有效的多轮调用,完成较复杂的任务。可以查看更多[样例](./agent/)。

|

||||

|

||||

## 更新

|

||||

|

||||

\[2024.06.30\] 我们发布了 InternLM2.5-7B、InternLM2.5-7B-Chat 和 InternLM2.5-7B-Chat-1M。可以在下方的 [模型库](#model-zoo) 进行下载,或者在 [model cards](./model_cards/) 中了解更多细节。

|

||||

|

||||

\[2024.03.26\] 我们发布了 InternLM2 的技术报告。 可以点击 [arXiv链接](https://arxiv.org/abs/2403.17297) 来了解更多细节。

|

||||

|

||||

\[2024.01.31\] 我们发布了 InternLM2-1.8B,以及相关的对话模型。该模型在保持领先性能的情况下,提供了更低廉的部署方案。

|

||||

|

|

@ -59,6 +60,33 @@ InternLM2 系列模型在本仓库正式发布,具有如下特性:

|

|||

|

||||

## Model Zoo

|

||||

|

||||

### InternLM2.5

|

||||

|

||||

| Model | Transformers(HF) | ModelScope(HF) | OpenXLab(HF) | OpenXLab(Origin) | Release Date |

|

||||

| -------------------------- | ------------------------------------------ | ---------------------------------------- | -------------------------------------- | ------------------------------------------ | ------------ |

|

||||

| **InternLM2.5-7B** | [🤗internlm2_5-7b](https://huggingface.co/internlm/internlm2_5-7b) | [<img src="./assets/modelscope_logo.png" width="20px" /> internlm2_5-7b](https://modelscope.cn/models/Shanghai_AI_Laboratory/internlm2_5-7b/summary) | [](https://openxlab.org.cn/models/detail/OpenLMLab/internlm2_5-7b) | [](https://openxlab.org.cn/models/detail/OpenLMLab/internlm2_5-7b-original) | 2024-06-30 |

|

||||

| **InternLM2.5-7B-Chat** | [🤗internlm2_5-7b-chat](https://huggingface.co/internlm/internlm2_5-7b-chat) | [<img src="./assets/modelscope_logo.png" width="20px" /> internlm2_5-7b-chat](https://modelscope.cn/models/Shanghai_AI_Laboratory/internlm2_5-7b-chat/summary) | [](https://openxlab.org.cn/models/detail/OpenLMLab/internlm2_5-7b-chat) | [](https://openxlab.org.cn/models/detail/OpenLMLab/internlm2_5-7b-chat-original) | 2024-06-30 |

|

||||

| **InternLM2.5-7B-Chat-1M** | [🤗internlm2_5-7b-chat-1m](https://huggingface.co/internlm/internlm2_5-7b-chat-1m) | [<img src="./assets/modelscope_logo.png" width="20px" /> internlm2_5-7b-chat-1m](https://modelscope.cn/models/Shanghai_AI_Laboratory/internlm2_5-7b-chat-1m/summary) | [](https://openxlab.org.cn/models/detail/OpenLMLab/internlm2_5-7b-chat-1m) | [](https://openxlab.org.cn/models/detail/OpenLMLab/internlm2_5-7b-chat-1m-original) | 2024-06-30 |

|

||||

|

||||

**模型说明:**

|

||||

|

||||

目前 InternLM 2.5 系列只发布了 7B 大小的模型,我们接下来将开源 1.8B 和 20B 的版本。7B 为轻量级的研究和应用提供了一个轻便但性能不俗的模型,20B 模型的综合性能更为强劲,可以有效支持更加复杂的实用场景。每个规格不同模型关系如下所示:

|

||||

|

||||

1. **InternLM2.5**:经历了大规模预训练的基座模型,是我们推荐的在大部分应用中考虑选用的优秀基座。

|

||||

2. **InternLM2.5-Chat**: 对话模型,在 InternLM2.5 基座上经历了有监督微调和 online RLHF。InternLM2.5-Chat 面向对话交互进行了优化,具有较好的指令遵循、共情聊天和调用工具等的能力,是我们推荐直接用于下游应用的模型。

|

||||

3. **InternLM2.5-Chat-1M**: InternLM2.5-Chat-1M 支持一百万字超长上下文,并具有和 InternLM2.5-Chat 相当的综合性能表现。

|

||||

|

||||

**局限性:** 尽管在训练过程中我们非常注重模型的安全性,尽力促使模型输出符合伦理和法律要求的文本,但受限于模型大小以及概率生成范式,模型可能会产生各种不符合预期的输出,例如回复内容包含偏见、歧视等有害内容,请勿传播这些内容。由于传播不良信息导致的任何后果,本项目不承担责任。

|

||||

|

||||

**补充说明:** 上表中的 `HF` 表示对应模型为 HuggingFace 平台提供的 [transformers](https://github.com/huggingface/transformers) 框架格式;`Origin` 则表示对应模型为我们 InternLM 团队的 [InternEvo](https://github.com/InternLM/InternEvo) 框架格式。

|

||||

|

||||

### InternLM2

|

||||

|

||||

<details>

|

||||

<summary>(click to expand)</summary>

|

||||

|

||||

我们上一代的模型,在长上下文处理、推理和编码方面具有优秀的性能。请参考 [model cards](./model_cards/) 了解更多细节。

|

||||

|

||||

| Model | Transformers(HF) | ModelScope(HF) | OpenXLab(HF) | OpenXLab(Origin) | Release Date |

|

||||

| --------------------------- | ----------------------------------------- | ---------------------------------------- | -------------------------------------- | ------------------------------------------ | ------------ |

|

||||

| **InternLM2-1.8B** | [🤗internlm2-1.8b](https://huggingface.co/internlm/internlm2-1_8b) | [<img src="./assets/modelscope_logo.png" width="20px" /> internlm2-1.8b](https://www.modelscope.cn/models/Shanghai_AI_Laboratory/internlm2-1_8b/summary) | [](https://openxlab.org.cn/models/detail/OpenLMLab/internlm2-base-1.8b) | [](https://openxlab.org.cn/models/detail/OpenLMLab/internlm2-base-1.8b-original) | 2024-01-31 |

|

||||

|

|

@ -73,63 +101,38 @@ InternLM2 系列模型在本仓库正式发布,具有如下特性:

|

|||

| **InternLM2-Chat-20B-SFT** | [🤗internlm2-chat-20b-sft](https://huggingface.co/internlm/internlm2-chat-20b-sft) | [<img src="./assets/modelscope_logo.png" width="20px" /> internlm2-chat-20b-sft](https://modelscope.cn/models/Shanghai_AI_Laboratory/internlm2-chat-20b-sft/summary) | [](https://openxlab.org.cn/models/detail/OpenLMLab/internlm2-chat-20b-sft) | [](https://openxlab.org.cn/models/detail/OpenLMLab/internlm2-chat-20b-sft-original) | 2024-01-17 |

|

||||

| **InternLM2-Chat-20B** | [🤗internlm2-chat-20b](https://huggingface.co/internlm/internlm2-chat-20b) | [<img src="./assets/modelscope_logo.png" width="20px" /> internlm2-chat-20b](https://modelscope.cn/models/Shanghai_AI_Laboratory/internlm2-chat-20b/summary) | [](https://openxlab.org.cn/models/detail/OpenLMLab/internlm2-chat-20b) | [](https://openxlab.org.cn/models/detail/OpenLMLab/internlm2-chat-20b-original) | 2024-01-17 |

|

||||

|

||||

**模型说明:**

|

||||

|

||||

在此次发布中,InternLM2 包含两种模型规格:7B 和 20B。7B 为轻量级的研究和应用提供了一个轻便但性能不俗的模型,20B 模型的综合性能更为强劲,可以有效支持更加复杂的实用场景。每个规格不同模型关系如下图所示:

|

||||

|

||||

|

||||

|

||||

1. **InternLM2-Base**:高质量和具有很强可塑性的模型基座,是模型进行深度领域适配的高质量起点。

|

||||

2. **InternLM2**:进一步在大规模无标签数据上进行预训练,并结合特定领域的增强语料库进行训练,在评测中成绩优异,同时保持了很好的通用语言能力,是我们推荐的在大部分应用中考虑选用的优秀基座。

|

||||

3. **InternLM2-Chat-SFT**: 基于 InternLM2-Base 模型进行了有监督微调,是 InternLM2-Chat 模型的中间版本。我们将它们开源以助力社区在对齐方面的研究。

|

||||

4. **InternLM2-Chat**: 在 InternLM2-Chat-SFT 的基础上进行了 online RLHF 以进一步对齐. InternLM2-Chat 面向对话交互进行了优化,具有较好的指令遵循、共情聊天和调用工具等的能力,是我们推荐直接用于下游应用的模型。

|

||||

|

||||

**局限性:** 尽管在训练过程中我们非常注重模型的安全性,尽力促使模型输出符合伦理和法律要求的文本,但受限于模型大小以及概率生成范式,模型可能会产生各种不符合预期的输出,例如回复内容包含偏见、歧视等有害内容,请勿传播这些内容。由于传播不良信息导致的任何后果,本项目不承担责任。

|

||||

|

||||

**补充说明:** 上表中的 `HF` 表示对应模型为 HuggingFace 平台提供的 [transformers](https://github.com/huggingface/transformers) 框架格式;`Origin` 则表示对应模型为我们 InternLM 团队的 [InternEvo](https://github.com/InternLM/InternEvo) 框架格式。

|

||||

</details>

|

||||

|

||||

## 性能

|

||||

|

||||

### 客观评测

|

||||

我们使用开源评测工具 [OpenCompass](https://github.com/open-compass/opencompass) 在几个重要的基准测试中对 InternLM2.5 进行了评测。部分评测结果如下表所示。欢迎访问 [OpenCompass 排行榜](https://rank.opencompass.org.cn) 获取更多评测结果。

|

||||

|

||||

| Dataset | Baichuan2-7B-Chat | Mistral-7B-Instruct-v0.2 | Qwen-7B-Chat | InternLM2-Chat-7B | ChatGLM3-6B | Baichuan2-13B-Chat | Mixtral-8x7B-Instruct-v0.1 | Qwen-14B-Chat | InternLM2-Chat-20B |

|

||||

| ---------------- | ----------------- | ------------------------ | ------------ | ----------------- | ----------- | ------------------ | -------------------------- | ------------- | ------------------ |

|

||||

| MMLU | 50.1 | 59.2 | 57.1 | 63.7 | 58.0 | 56.6 | 70.3 | 66.7 | 66.5 |

|

||||

| CMMLU | 53.4 | 42.0 | 57.9 | 63.0 | 57.8 | 54.8 | 50.6 | 68.1 | 65.1 |

|

||||

| AGIEval | 35.3 | 34.5 | 39.7 | 47.2 | 44.2 | 40.0 | 41.7 | 46.5 | 50.3 |

|

||||

| C-Eval | 53.9 | 42.4 | 59.8 | 60.8 | 59.1 | 56.3 | 54.0 | 71.5 | 63.0 |

|

||||

| TrivialQA | 37.6 | 35.0 | 46.1 | 50.8 | 38.1 | 40.3 | 57.7 | 54.5 | 53.9 |

|

||||

| NaturalQuestions | 12.8 | 8.1 | 18.6 | 24.1 | 14.0 | 12.7 | 22.5 | 22.9 | 25.9 |

|

||||

| C3 | 78.5 | 66.9 | 84.4 | 91.5 | 79.3 | 84.4 | 82.1 | 91.5 | 93.5 |

|

||||

| CMRC | 8.1 | 5.6 | 14.6 | 63.8 | 43.2 | 27.8 | 5.3 | 13.0 | 50.4 |

|

||||

| WinoGrande | 49.9 | 50.8 | 54.2 | 65.8 | 61.7 | 50.9 | 60.9 | 55.7 | 74.8 |

|

||||

| BBH | 35.9 | 46.5 | 45.5 | 61.2 | 56.0 | 42.5 | 57.3 | 55.8 | 68.3 |

|

||||

| GSM-8K | 32.4 | 48.3 | 44.1 | 70.7 | 53.8 | 56.0 | 71.7 | 57.7 | 79.6 |

|

||||

| Math | 5.7 | 8.6 | 12.0 | 23.0 | 20.4 | 4.3 | 22.5 | 27.6 | 31.9 |

|

||||

| HumanEval | 17.7 | 35.4 | 36.0 | 59.8 | 52.4 | 19.5 | 37.8 | 40.9 | 67.1 |

|

||||

| MBPP | 37.7 | 25.7 | 33.9 | 51.4 | 55.6 | 40.9 | 40.9 | 30.0 | 65.8 |

|

||||

### 基座模型

|

||||

|

||||

- MBPP性能使用的是MBPP(Sanitized)版本数据集

|

||||

| Benchmark | InternLM2-7B | LLaMA-3-8B | Yi-1.5-9B | InternLM2.5-7B |

|

||||

| ------------- | ------------ | ---------- | --------- | -------------- |

|

||||

| MMLU(5-shot) | 65.8 | 66.4 | 71.6 | 71.6 |

|

||||

| CMMLU(5-shot) | 66.2 | 51.0 | 74.1 | 79.1 |

|

||||

| BBH(3-shot) | 65.0 | 59.7 | 71.1 | 70.1 |

|

||||

| MATH(4-shot) | 20.2 | 16.4 | 31.9 | 34.0 |

|

||||

| GSM8K(4-shot) | 70.8 | 54.3 | 74.5 | 74.8 |

|

||||

| GPQA(0-shot) | 28.3 | 31.3 | 27.8 | 31.3 |

|

||||

|

||||

### 主观评测

|

||||

### 对话模型

|

||||

|

||||

- 我们评测了InternLM2-Chat在[AlpacaEval 2.0](https://tatsu-lab.github.io/alpaca_eval/) 上的性能,结果表明InternLM2-Chat在AlpacaEval上已经超过了 Claude 2, GPT-4(0613) 和 Gemini Pro.

|

||||

| Benchmark | InternLM2-Chat-7B | LLaMA-3-8B-Instruct | Yi-1.5-9B-Chat | GLM-4-9B-Chat | Qwen2-7B-Instruct | Gemma2-9B-IT | InternLM2.5-7B-Chat | Llama-3-70B-Instruct |

|

||||

| ----------------- | ----------------- | ------------------- | -------------- | ------------- | ----------------- | ------------ | ------------------- | -------------------- |

|

||||

| MMLU(5-shot) | 62.3 | 68.4 | 71.0 | 71.4 | 70.8 | 70.9 | 72.8 | 80.5 |

|

||||

| CMMLU(5-shot) | 62.4 | 53.3 | 74.5 | 74.5 | 80.9 | 60.3 | 78.0 | 70.1 |

|

||||

| BBH(3-shot CoT) | 59.0 | 54.4 | 69.6 | 69.6 | 65.0 | 68.2\* | 71.6 | 80.5 |

|

||||

| MATH(0-shot CoT) | 27.6 | 27.9 | 51.1 | 51.1 | 48.6 | 46.9 | 60.1 | 47.1 |

|

||||

| GSM8K(0-shot CoT) | 72.5 | 72.9 | 80.1 | 85.3 | 82.9 | 88.9 | 86.0 | 92.8 |

|

||||

| GPQA(0-shot) | 29.8 | 26.1 | 37.9 | 36.9 | 38.4 | 33.8 | 38.4 | 38.9 |

|

||||

|

||||

| Model Name | Win Rate | Length |

|

||||

| ------------------ | -------- | ------ |

|

||||

| GPT-4 Turbo | 50.00% | 2049 |

|

||||

| GPT-4 | 23.58% | 1365 |

|

||||

| GPT-4 0314 | 22.07% | 1371 |

|

||||

| Mistral Medium | 21.86% | 1500 |

|

||||

| XwinLM 70b V0.1 | 21.81% | 1775 |

|

||||

| InternLM2 Chat 20B | 21.75% | 2373 |

|

||||

| Mixtral 8x7B v0.1 | 18.26% | 1465 |

|

||||

| Claude 2 | 17.19% | 1069 |

|

||||

| Gemini Pro | 16.85% | 1315 |

|

||||

| GPT-4 0613 | 15.76% | 1140 |

|

||||

| Claude 2.1 | 15.73% | 1096 |

|

||||

|

||||

- 性能数据截止2024-01-17

|

||||

- 我们使用 `ppl` 对基座模型进行 MCQ 指标的评测。

|

||||

- 评测结果来自 [OpenCompass](https://github.com/open-compass/opencompass) ,评测配置可以在 [OpenCompass](https://github.com/open-compass/opencompass) 提供的配置文件中找到。

|

||||

- 由于 [OpenCompass](https://github.com/open-compass/opencompass) 的版本迭代,评测数据可能存在数值差异,因此请参考 [OpenCompass](https://github.com/open-compass/opencompass) 的最新评测结果。

|

||||

- \* 表示从原论文中复制而来。

|

||||

|

||||

## 依赖

|

||||

|

||||

|

|

@ -149,14 +152,14 @@ transformers >= 4.38

|

|||

|

||||

### 通过 Transformers 加载

|

||||

|

||||

通过以下的代码从 Transformers 加载 InternLM2-7B-Chat 模型 (可修改模型名称替换不同的模型)

|

||||

通过以下的代码从 Transformers 加载 InternLM2.5-7B-Chat 模型 (可修改模型名称替换不同的模型)

|

||||

|

||||

```python

|

||||

import torch

|

||||

from transformers import AutoTokenizer, AutoModelForCausalLM

|

||||

tokenizer = AutoTokenizer.from_pretrained("internlm/internlm2-chat-7b", trust_remote_code=True)

|

||||

tokenizer = AutoTokenizer.from_pretrained("internlm/internlm2_5-7b-chat", trust_remote_code=True)

|

||||

# 设置`torch_dtype=torch.float16`来将模型精度指定为torch.float16,否则可能会因为您的硬件原因造成显存不足的问题。

|

||||

model = AutoModelForCausalLM.from_pretrained("internlm/internlm2-chat-7b", device_map="auto",trust_remote_code=True, torch_dtype=torch.float16)

|

||||

model = AutoModelForCausalLM.from_pretrained("internlm/internlm2_5-7b-chat", device_map="auto",trust_remote_code=True, torch_dtype=torch.float16)

|

||||

# (可选) 如果在低资源设备上,可以通过bitsandbytes加载4-bit或8-bit量化的模型,进一步节省GPU显存.

|

||||

# 4-bit 量化的 InternLM 7B 大约会消耗 8GB 显存.

|

||||

# pip install -U bitsandbytes

|

||||

|

|

@ -172,12 +175,12 @@ print(response)

|

|||

|

||||

### 通过 ModelScope 加载

|

||||

|

||||

通过以下的代码从 ModelScope 加载 InternLM 模型 (可修改模型名称替换不同的模型)

|

||||

通过以下的代码从 ModelScope 加载 InternLM2.5-7B-Chat 模型 (可修改模型名称替换不同的模型)

|

||||

|

||||

```python

|

||||

import torch

|

||||

from modelscope import snapshot_download, AutoTokenizer, AutoModelForCausalLM

|

||||

model_dir = snapshot_download('Shanghai_AI_Laboratory/internlm2-chat-7b')

|

||||

model_dir = snapshot_download('Shanghai_AI_Laboratory/internlm2_5-7b-chat')

|

||||

tokenizer = AutoTokenizer.from_pretrained(model_dir, device_map="auto", trust_remote_code=True)

|

||||

model = AutoModelForCausalLM.from_pretrained(model_dir, device_map="auto", trust_remote_code=True, torch_dtype=torch.float16)

|

||||

# (可选) 如果在低资源设备上,可以通过bitsandbytes加载4-bit或8-bit量化的模型,进一步节省GPU显存.

|

||||

|

|

@ -210,27 +213,38 @@ streamlit run ./chat/web_demo.py

|

|||

|

||||

```python

|

||||

from lmdeploy import pipeline

|

||||

pipe = pipeline("internlm/internlm2-chat-7b")

|

||||

pipe = pipeline("internlm/internlm2_5-7b-chat")

|

||||

response = pipe(["Hi, pls intro yourself", "Shanghai is"])

|

||||

print(response)

|

||||

```

|

||||

|

||||

请参考[部署指南](./chat/lmdeploy.md)了解更多使用案例,更多部署教程则可在[这里](https://github.com/InternLM/LMDeploy)找到。

|

||||

|

||||

### 20万字超长上下文推理

|

||||

### 1百万字超长上下文推理

|

||||

|

||||

激活 LMDeploy 的 Dynamic NTK 能力,可以轻松把 internlm2-chat-7b 外推到 200K 上下文

|

||||

激活 LMDeploy 的 Dynamic NTK 能力,可以轻松把 internlm2_5-7b-chat 外推到 200K 上下文。

|

||||

|

||||

注意: 1M 上下文需要 4xA100-80G。

|

||||

|

||||

```python

|

||||

from lmdeploy import pipeline, GenerationConfig, TurbomindEngineConfig

|

||||

|

||||

backend_config = TurbomindEngineConfig(rope_scaling_factor=2.0, session_len=160000)

|

||||

pipe = pipeline('internlm/internlm2-chat-7b', backend_config=backend_config)

|

||||

backend_config = TurbomindEngineConfig(

|

||||

rope_scaling_factor=2.5,

|

||||

session_len=1048576, # 1M context length

|

||||

max_batch_size=1,

|

||||

cache_max_entry_count=0.7,

|

||||

tp=4) # 4xA100-80G.

|

||||

pipe = pipeline('internlm/internlm2_5-7b-chat-1m', backend_config=backend_config)

|

||||

prompt = 'Use a long prompt to replace this sentence'

|

||||

response = pipe(prompt)

|

||||

print(response)

|

||||

```

|

||||

|

||||

## 智能体

|

||||

|

||||

InternLM-2.5-Chat 模型有出色的工具调用性能并具有一定的零样本泛化能力。它支持从上百个网页中搜集信息并进行分析。更多样例可以参考 [agent 目录](./agent/).

|

||||

|

||||

## 微调&训练

|

||||

|

||||

请参考[微调教程](./finetune/)尝试续训或微调 InternLM2。

|

||||

|

|

@ -239,7 +253,7 @@ print(response)

|

|||

|

||||

## 评测

|

||||

|

||||

我们使用 [OpenCompass](https://github.com/open-compass/opencompass) 进行模型评估。在 InternLM-2 中,我们主要标准客观评估、长文评估(大海捞针)、数据污染评估、智能体评估和主观评估。

|

||||

我们使用 [OpenCompass](https://github.com/open-compass/opencompass) 进行模型评估。在 InternLM2.5 中,我们主要标准客观评估、长文评估(大海捞针)、数据污染评估、智能体评估和主观评估。

|

||||

|

||||

### 标准客观评测

|

||||

|

||||

|

|

|

|||

|

|

@ -4,77 +4,80 @@ English | [简体中文](README_zh-CN.md)

|

|||

|

||||

## Introduction

|

||||

|

||||

InternLM-Chat-7B v1.1 has been released as the first open-source model with code interpreter capabilities, supporting external tools such as Python code interpreter and search engine.

|

||||

InternLM2.5-Chat, open sourced on June 30, 2024, further enhances its capabilities in code interpreter and general tool utilization. With improved and more generalized instruction understanding, tool selection, and reflection abilities, InternLM2.5-Chat can more reliably support complex agents and multi-step tool calling for more intricate tasks. When combined with a code interpreter, InternLM2.5-Chat obtains comparable results to GPT-4 on MATH. Leveraging strong foundational capabilities in mathematics and tools, InternLM2.5-Chat provides practical data analysis capabilities.

|

||||

|

||||

InternLM2-Chat, open sourced on January 17, 2024, further enhances its capabilities in code interpreter and general tool utilization. With improved and more generalized instruction understanding, tool selection, and reflection abilities, InternLM2-Chat can more reliably support complex agents and multi-step tool calling for more intricate tasks. InternLM2-Chat exhibits decent computational and reasoning abilities even without external tools, surpassing ChatGPT in mathematical performance. When combined with a code interpreter, InternLM2-Chat-20B obtains comparable results to GPT-4 on GSM8K and MATH. Leveraging strong foundational capabilities in mathematics and tools, InternLM2-Chat provides practical data analysis capabilities.

|

||||

The results of InternLM2.5-Chat on math code interpreter is as below:

|

||||

|

||||

The results of InternLM2-Chat-20B on math code interpreter is as below:

|

||||

|

||||

| | GSM8K | MATH |

|

||||

| :--------------------------------------: | :---: | :---: |

|

||||

| InternLM2-Chat-20B | 79.6 | 32.5 |

|

||||

| InternLM2-Chat-20B with Code Interpreter | 84.5 | 51.2 |

|

||||

| ChatGPT (GPT-3.5) | 78.2 | 28.0 |

|

||||

| GPT-4 | 91.4 | 45.8 |

|

||||

| Models | Tool-Integrated | MATH |

|

||||

| :-----------------: | :-------------: | :--: |

|

||||

| InternLM2-Chat-7B | w/ | 45.1 |

|

||||

| InternLM2-Chat-20B | w/ | 51.2 |

|

||||

| InternLM2.5-7B-Chat | w/ | 63.0 |

|

||||

| gpt-4-0125-preview | w/o | 64.2 |

|

||||

|

||||

## Usages

|

||||

|

||||

We offer an example using [Lagent](lagent.md) to build agents based on InternLM2-Chat to call the code interpreter. Firstly install the extra dependencies:

|

||||

We offer an example using [Lagent](lagent.md) to build agents based on InternLM2.5-Chat to call the code interpreter. Firstly install the extra dependencies:

|

||||

|

||||

```bash

|

||||

pip install -r requirements.txt

|

||||

```

|

||||

|

||||

Run the following script to perform inference and evaluation on GSM8K and MATH test.

|

||||

Run the following script to perform inference and evaluation on MATH test.

|

||||

|

||||

```bash

|

||||

python streaming_inference.py \

|

||||

--backend=lmdeploy \ # For HuggingFace models: hf

|

||||

--model_path=internlm/internlm2-chat-20b \

|

||||

--tp=2 \

|

||||

--model_path=internlm/internlm2_5-7b-chat \

|

||||

--tp=1 \

|

||||

--temperature=1.0 \

|

||||

--top_k=1 \

|

||||

--dataset=math \

|

||||

--output_path=math_lmdeploy.jsonl \

|

||||

--do_eval

|

||||

```

|

||||

|

||||

`output_path` is a jsonl format file to save the inference results. Each line is like

|

||||

|

||||

```json

|

||||

````json

|

||||

{

|

||||

"idx": 41,

|

||||

"query": "The point $(a, b)$ lies on the line with the equation $3x + 2y = 12.$ When $a = 4$, what is the value of $b$?",

|

||||

"gt": "0",

|

||||

"pred": ["0"],

|

||||

"problem": "The midpoint of the line segment between $(x,y)$ and $(2,4)$ is $(-7,0)$. Find $(x,y)$.",

|

||||

"level": "Level 3",

|

||||

"type": "Algebra",

|

||||

"solution": "Applying the midpoint formula gives $$\\left(\\frac{2+x}{2},\\frac{4+y}{2}\\right)=(-7,0).$$Solving $\\frac{2+x}{2}=-7$ for $x$ and $\\frac{4+y}{2} = 0$ for $y$ we find $(x,y)$ to be $\\boxed{(-16,-4)}$.",

|

||||

"idx": 306,

|

||||

"query": "The midpoint of the line segment between $(x,y)$ and $(2,4)$ is $(-7,0)$. Find $(x,y)$.",

|

||||

"gt": "(-16,-4)",

|

||||

"pred": [

|

||||

"(-16,-4)"

|

||||

],

|

||||

"steps": [

|

||||

{

|

||||

"role": "language",

|

||||

"content": ""

|

||||

"content": "To find the coordinates of the point $(x,y)$, we can use the midpoint formula. The midpoint $M$ of a line segment with endpoints $(x_1, y_1)$ and $(x_2, y_2)$ is given by:\n\n\\[M = \\left(\\frac{x_1 + x_2}{2}, \\frac{y_1 + y_2}{2}\\right)\\]\n\nGiven that the midpoint is $(-7,0)$ and one endpoint is $(2,4)$, we can set up equations to solve for $x$ and $y$.\n\nLet's start by setting up the equations based on the midpoint formula:\n\n\\[\\frac{x + 2}{2} = -7\\]\n\\[\\frac{y + 4}{2} = 0\\]\n\nNow, let's solve these equations using Python to find the values of $x$ and $y$."

|

||||

},

|

||||

{

|

||||

"role": "tool",

|

||||

"content": {

|

||||

"name": "IPythonInteractive",

|

||||

"name": "AsyncIPythonInteractiveManager",

|

||||

"parameters": {

|

||||

"command": "```python\nfrom sympy import symbols, solve\n\ndef find_b():\n x, y = symbols('x y')\n equation = 3*x + 2*y - 12\n b = solve(equation.subs(x, 4), y)[0]\n\n return b\n\nresult = find_b()\nprint(result)\n```"

|

||||

"command": "```python\nfrom sympy import symbols, Eq, solve\n\n# Define symbols\nx, y = symbols('x y')\n\n# Define equations based on the midpoint formula\neq1 = Eq((x + 2)/2, -7)\neq2 = Eq((y + 4)/2, 0)\n\n# Solve equations\nsolution = solve((eq1, eq2), (x, y))\n\nsolution\n```"

|

||||

}

|

||||

},

|

||||

"name": "interpreter"

|

||||

},

|

||||

{

|

||||

"role": "environment",

|

||||

"content": "0",

|

||||

"content": "{x: -16, y: -4}",

|

||||

"name": "interpreter"

|

||||

},

|

||||

{

|

||||

"role": "language",

|

||||

"content": "The value of $b$ when $a = 4$ is $\\boxed{0}$."

|

||||

"content": "After solving the equations, we find that the coordinates of the point $(x,y)$ are $(-16, -4)$. Therefore, the solution to the problem is:\n\n\\[\\boxed{(-16, -4)}\\]"

|

||||

}

|

||||

],

|

||||

"error": null

|

||||

}

|

||||

```

|

||||

````

|

||||

|

||||

Once it is prepared, just skip the inference stage as follows.

|

||||

|

||||

|

|

|

|||

|

|

@ -4,77 +4,80 @@

|

|||

|

||||

## 简介

|

||||

|

||||

InternLM-Chat-7B v1.1 是首个具有代码解释能力的开源对话模型,支持 Python 解释器和搜索引擎等外部工具。

|

||||

InternLM2.5-Chat 在代码解释和通用工具调用方面的能力得到进一步提升。基于更强和更具有泛化性的指令理解、工具筛选与结果反思等能力,新版模型可以更可靠地支持复杂智能体的搭建,支持对工具进行有效的多轮调用,完成较复杂的任务。在配合代码解释器(code-interpreter)的条件下,InternLM2.5-Chat 在 MATH 上可以达到和 GPT-4 相仿的水平。基于在数理和工具方面强大的基础能力,InternLM2.5-Chat 提供了实用的数据分析能力。

|

||||

|

||||

InternLM2-Chat 进一步提高了它在代码解释和通用工具调用方面的能力。基于更强和更具有泛化性的指令理解、工具筛选与结果反思等能力,新版模型可以更可靠地支持复杂智能体的搭建,支持对工具进行有效的多轮调用,完成较复杂的任务。模型在不使用外部工具的条件下已具备不错的计算能力和推理能力,数理表现超过 ChatGPT;在配合代码解释器(code-interpreter)的条件下,InternLM2-Chat-20B 在 GSM8K 和 MATH 上可以达到和 GPT-4 相仿的水平。基于在数理和工具方面强大的基础能力,InternLM2-Chat 提供了实用的数据分析能力。

|

||||

以下是 InternLM2.5-Chat 在数学代码解释器上的结果。

|

||||

|

||||

以下是 InternLM2-Chat-20B 在数学代码解释器上的结果。

|

||||

|

||||

| | GSM8K | MATH |

|

||||

| :---------------------------------: | :---: | :---: |

|

||||

| InternLM2-Chat-20B 单纯依靠内在能力 | 79.6 | 32.5 |

|

||||

| InternLM2-Chat-20B 配合代码解释器 | 84.5 | 51.2 |

|

||||

| ChatGPT (GPT-3.5) | 78.2 | 28.0 |

|

||||

| GPT-4 | 91.4 | 45.8 |

|

||||

| 模型 | 是否集成工具 | MATH |

|

||||

| :-----------------: | :----------: | :--: |

|

||||

| InternLM2-Chat-7B | w/ | 45.1 |

|

||||

| InternLM2-Chat-20B | w/ | 51.2 |

|

||||

| InternLM2.5-7B-Chat | w/ | 63.0 |

|

||||

| gpt-4-0125-preview | w/o | 64.2 |

|

||||

|

||||

## 体验

|

||||

|

||||

我们提供了使用 [Lagent](lagent_zh-CN.md) 来基于 InternLM2-Chat 构建智能体调用代码解释器的例子。首先安装额外依赖:

|

||||

我们提供了使用 [Lagent](lagent_zh-CN.md) 来基于 InternLM2.5-Chat 构建智能体调用代码解释器的例子。首先安装额外依赖:

|

||||

|

||||

```bash

|

||||

pip install -r requirements.txt

|

||||

```

|

||||

|

||||

运行以下脚本在 GSM8K 和 MATH 测试集上进行推理和评估:

|

||||

运行以下脚本在 MATH 测试集上进行推理和评估:

|

||||

|

||||

```bash

|

||||

python streaming_inference.py \

|

||||

--backend=lmdeploy \ # For HuggingFace models: hf

|

||||

--model_path=internlm/internlm2-chat-20b \

|

||||

--tp=2 \

|

||||

--model_path=internlm/internlm2_5-7b-chat \

|

||||

--tp=1 \

|

||||

--temperature=1.0 \

|

||||

--top_k=1 \

|

||||

--dataset=math \

|

||||

--output_path=math_lmdeploy.jsonl \

|

||||

--do_eval

|

||||

```

|

||||

|

||||

`output_path` 是一个存储推理结果的 jsonl 格式文件,每行形如:

|

||||

|

||||

```json

|

||||

````json

|

||||

{

|

||||

"idx": 41,

|

||||

"query": "The point $(a, b)$ lies on the line with the equation $3x + 2y = 12.$ When $a = 4$, what is the value of $b$?",

|

||||

"gt": "0",

|

||||

"pred": ["0"],

|

||||

"problem": "The midpoint of the line segment between $(x,y)$ and $(2,4)$ is $(-7,0)$. Find $(x,y)$.",

|

||||

"level": "Level 3",

|

||||

"type": "Algebra",

|

||||

"solution": "Applying the midpoint formula gives $$\\left(\\frac{2+x}{2},\\frac{4+y}{2}\\right)=(-7,0).$$Solving $\\frac{2+x}{2}=-7$ for $x$ and $\\frac{4+y}{2} = 0$ for $y$ we find $(x,y)$ to be $\\boxed{(-16,-4)}$.",

|

||||

"idx": 306,

|

||||

"query": "The midpoint of the line segment between $(x,y)$ and $(2,4)$ is $(-7,0)$. Find $(x,y)$.",

|

||||

"gt": "(-16,-4)",

|

||||

"pred": [

|

||||

"(-16,-4)"

|

||||

],

|

||||

"steps": [

|

||||

{

|

||||

"role": "language",

|

||||

"content": ""

|

||||

"content": "To find the coordinates of the point $(x,y)$, we can use the midpoint formula. The midpoint $M$ of a line segment with endpoints $(x_1, y_1)$ and $(x_2, y_2)$ is given by:\n\n\\[M = \\left(\\frac{x_1 + x_2}{2}, \\frac{y_1 + y_2}{2}\\right)\\]\n\nGiven that the midpoint is $(-7,0)$ and one endpoint is $(2,4)$, we can set up equations to solve for $x$ and $y$.\n\nLet's start by setting up the equations based on the midpoint formula:\n\n\\[\\frac{x + 2}{2} = -7\\]\n\\[\\frac{y + 4}{2} = 0\\]\n\nNow, let's solve these equations using Python to find the values of $x$ and $y$."

|

||||

},

|

||||

{

|

||||

"role": "tool",

|

||||

"content": {

|

||||

"name": "IPythonInteractive",

|

||||

"name": "AsyncIPythonInteractiveManager",

|

||||

"parameters": {

|

||||

"command": "```python\nfrom sympy import symbols, solve\n\ndef find_b():\n x, y = symbols('x y')\n equation = 3*x + 2*y - 12\n b = solve(equation.subs(x, 4), y)[0]\n\n return b\n\nresult = find_b()\nprint(result)\n```"

|

||||

"command": "```python\nfrom sympy import symbols, Eq, solve\n\n# Define symbols\nx, y = symbols('x y')\n\n# Define equations based on the midpoint formula\neq1 = Eq((x + 2)/2, -7)\neq2 = Eq((y + 4)/2, 0)\n\n# Solve equations\nsolution = solve((eq1, eq2), (x, y))\n\nsolution\n```"

|

||||

}

|

||||

},

|

||||

"name": "interpreter"

|

||||

},

|

||||

{

|

||||

"role": "environment",

|

||||

"content": "0",

|

||||

"content": "{x: -16, y: -4}",

|

||||

"name": "interpreter"

|

||||

},

|

||||

{

|

||||

"role": "language",

|

||||

"content": "The value of $b$ when $a = 4$ is $\\boxed{0}$."

|

||||

"content": "After solving the equations, we find that the coordinates of the point $(x,y)$ are $(-16, -4)$. Therefore, the solution to the problem is:\n\n\\[\\boxed{(-16, -4)}\\]"

|

||||

}

|

||||

],

|

||||

"error": null

|

||||

}

|

||||

```

|

||||

````

|

||||

|

||||

如果已经准备好了该文件,可直接跳过推理阶段进行评估:

|

||||

|

||||

|

|

|

|||

|

|

@ -38,7 +38,7 @@ Then you can chat through the UI shown as below

|

|||

|

||||

|

||||

|

||||

## Run a ReAct agent with InternLM2-Chat

|

||||

## Run a ReAct agent with InternLM2.5-Chat

|

||||

|

||||

**NOTE:** If you want to run a HuggingFace model, please run `pip install -e .[all]` first.

|

||||

|

||||

|

|

@ -49,7 +49,7 @@ from lagent.actions import ActionExecutor, GoogleSearch, PythonInterpreter

|

|||

from lagent.llms import HFTransformer

|

||||

|

||||

# Initialize the HFTransformer-based Language Model (llm) and provide the model name.

|

||||

llm = HFTransformer('internlm/internlm2-chat-7b')

|

||||

llm = HFTransformer('internlm/internlm2_5-7b-chat')

|

||||

|

||||

# Initialize the Google Search tool and provide your API key.

|

||||

search_tool = GoogleSearch(api_key='Your SERPER_API_KEY')

|

||||

|

|

|

|||

|

|

@ -38,7 +38,7 @@ streamlit run examples/react_web_demo.py

|

|||

|

||||

|

||||

|

||||

## 用 InternLM-Chat 构建一个 ReAct 智能体

|

||||

## 用 InternLM2.5-Chat 构建一个 ReAct 智能体

|

||||

|

||||

\*\*注意:\*\*如果你想要启动一个 HuggingFace 的模型,请先运行 pip install -e .\[all\]。

|

||||

|

||||

|

|

@ -49,7 +49,7 @@ from lagent.actions import ActionExecutor, GoogleSearch, PythonInterpreter

|

|||

from lagent.llms import HFTransformer

|

||||

|

||||

# Initialize the HFTransformer-based Language Model (llm) and provide the model name.

|

||||

llm = HFTransformer('internlm/internlm-chat-7b-v1_1')

|

||||

llm = HFTransformer('internlm/internlm2_5-7b-chat')

|

||||

|

||||

# Initialize the Google Search tool and provide your API key.

|

||||

search_tool = GoogleSearch(api_key='Your SERPER_API_KEY')

|

||||

|

|

|

|||

|

|

@ -189,8 +189,8 @@ def generate_interactive(

|

|||

generation_config.max_length = generation_config.max_new_tokens + input_ids_seq_length

|

||||

if not has_default_max_length:

|

||||

logger.warn( # pylint: disable=W4902

|

||||

f"Both `max_new_tokens` (={generation_config.max_new_tokens}) and `max_length`(="

|

||||

f"{generation_config.max_length}) seem to have been set. `max_new_tokens` will take precedence. "

|

||||

f'Both `max_new_tokens` (={generation_config.max_new_tokens}) and `max_length`(='

|

||||

f'{generation_config.max_length}) seem to have been set. `max_new_tokens` will take precedence. '

|

||||

'Please refer to the documentation for more information. '

|

||||

'(https://huggingface.co/docs/transformers/main/en/main_classes/text_generation)',

|

||||

UserWarning,

|

||||

|

|

@ -199,8 +199,8 @@ def generate_interactive(

|

|||

if input_ids_seq_length >= generation_config.max_length:

|

||||

input_ids_string = 'input_ids'

|

||||

logger.warning(

|

||||

f"Input length of {input_ids_string} is {input_ids_seq_length}, but `max_length` is set to"

|

||||

f" {generation_config.max_length}. This can lead to unexpected behavior. You should consider"

|

||||

f'Input length of {input_ids_string} is {input_ids_seq_length}, but `max_length` is set to'

|

||||

f' {generation_config.max_length}. This can lead to unexpected behavior. You should consider'

|

||||

' increasing `max_new_tokens`.')

|

||||

|

||||

# 2. Set generation parameters if not already defined

|

||||

|

|

@ -510,7 +510,7 @@ def main():

|

|||

interface.clear_history()

|

||||

f.flush()

|

||||

|

||||

print(f"{args.model}: Accuracy - {sum(scores) / len(scores)}")

|

||||

print(f'{args.model}: Accuracy - {sum(scores) / len(scores)}')

|

||||

torch.cuda.empty_cache()

|

||||

|

||||

|

||||

|

|

|

|||

|

|

@ -1,10 +1,10 @@

|

|||

lmdeploy>=0.2.2

|

||||

antlr4-python3-runtime==4.11.0

|

||||

datasets

|

||||

tqdm

|

||||

einops

|

||||

jsonlines

|

||||

lagent @ git+https://github.com/InternLM/lagent@main

|

||||

lmdeploy>=0.2.2

|

||||

numpy

|

||||

pebble

|

||||

jsonlines

|

||||

sympy==1.12

|

||||

antlr4-python3-runtime==4.11.0

|

||||

lagent

|

||||

einops

|

||||

tqdm

|

||||

|

|

|

|||

|

|

@ -46,13 +46,6 @@ from sympy.parsing.sympy_parser import parse_expr

|

|||

from tqdm import tqdm

|

||||

|

||||

# --------------------- modify the system prompt as needed ---------------------

|

||||

# DEFAULT_PROMPT = (

|

||||

# 'Integrate step-by-step reasoning and Python code to solve math problems '

|

||||

# 'using the following guidelines:\n'

|

||||

# '- Just write jupyter code to solve the problem without giving your thought;\n'

|

||||

# r"- Present the final result in LaTeX using a '\boxed{{}}' without any "

|

||||

# 'units. \n')

|

||||

|

||||

DEFAULT_PROMPT = (

|

||||

'Integrate step-by-step reasoning and Python code to solve math problems '

|

||||

'using the following guidelines:\n'

|

||||

|

|

@ -64,12 +57,11 @@ DEFAULT_PROMPT = (

|

|||

|

||||

def parse_args():

|

||||

parser = argparse.ArgumentParser(description='Math Code Interpreter')

|

||||

parser.add_argument(

|

||||

'--backend',

|

||||

type=str,

|

||||

default='lmdeploy',

|

||||

help='Which inference framework to use.',

|

||||

choices=['lmdeploy', 'hf'])

|

||||

parser.add_argument('--backend',

|

||||

type=str,

|

||||

default='lmdeploy',

|

||||

help='Which inference framework to use.',

|

||||

choices=['lmdeploy', 'hf'])

|

||||

parser.add_argument(

|

||||

'--model_path',

|

||||

type=str,

|

||||

|

|

@ -81,21 +73,14 @@ def parse_args():

|

|||

type=str,

|

||||

required=True,

|

||||

help='Path to save inference results to, should be a `jsonl` file')

|

||||

parser.add_argument(

|

||||

'--dataset',

|

||||

type=str,

|

||||

default='math',

|

||||

choices=['gsm8k', 'math'],

|

||||

help='Dataset for inference')

|

||||

parser.add_argument(

|

||||

'--batch_size',

|

||||

type=int,

|

||||

default=100,

|

||||

help='Agent inference batch size')

|

||||

parser.add_argument('--batch_size',

|

||||

type=int,

|

||||

default=100,

|

||||

help='Agent inference batch size')

|

||||

parser.add_argument(

|

||||

'--max_turn',

|

||||

type=int,

|

||||

default=3,

|

||||

default=5,

|

||||

help=

|

||||

'Maximum number of interaction rounds between the agent and environment'

|

||||

)

|

||||

|

|

@ -104,29 +89,27 @@ def parse_args():

|

|||

type=int,

|

||||

default=1,

|

||||

help='Number of tensor parallelism. It may be required in LMDelpoy.')

|

||||

parser.add_argument(

|

||||

'--temperature',

|

||||

type=float,

|

||||

default=0.1,

|

||||

help='Temperature in next token prediction')

|

||||

parser.add_argument(

|

||||

'--top_p',

|

||||

type=float,

|

||||

default=0.8,

|

||||

help='Parameter for Top-P Sampling.')

|

||||

parser.add_argument(

|

||||

'--top_k', type=int, default=40, help='Parameter for Top-K Sampling.')

|

||||

parser.add_argument(

|

||||

'--stop_words',

|

||||

type=str,

|

||||

default=['<|action_end|>', '<|im_end|>'],

|

||||

action='append',

|

||||

help='Stop words')

|

||||

parser.add_argument(

|

||||

'--max_new_tokens',

|

||||

type=int,

|

||||

default=512,

|

||||

help='Number of maximum generated tokens.')

|

||||

parser.add_argument('--temperature',

|

||||

type=float,

|

||||

default=0.1,

|

||||

help='Temperature in next token prediction')

|

||||

parser.add_argument('--top_p',

|

||||

type=float,

|

||||

default=0.8,

|

||||

help='Parameter for Top-P Sampling.')

|

||||

parser.add_argument('--top_k',

|

||||

type=int,

|

||||

default=40,

|

||||

help='Parameter for Top-K Sampling.')

|

||||

parser.add_argument('--stop_words',

|

||||

type=str,

|

||||

default=['<|action_end|>', '<|im_end|>'],

|

||||

action='append',

|

||||

help='Stop words')

|

||||

parser.add_argument('--max_new_tokens',

|

||||

type=int,

|

||||

default=512,

|

||||

help='Number of maximum generated tokens.')

|

||||

parser.add_argument(

|

||||

'--do_infer',

|

||||

default=True,

|

||||

|

|

@ -138,21 +121,14 @@ def parse_args():

|

|||

# action='store_false',

|

||||

# help='Disable the inference.'

|

||||

# )

|

||||

parser.add_argument(

|

||||

'--do_eval',

|

||||

default=False,

|

||||

action='store_true',

|

||||

help='Whether to evaluate the inference results.')

|

||||

parser.add_argument(

|

||||

'--overwrite',

|

||||

default=False,

|

||||

action='store_true',

|

||||

help='Whether to overwrite the existing result file')

|

||||

# parser.add_argument(

|

||||

# '--debug',

|

||||

# default=False,

|

||||

# action='store_true',

|

||||

# help='Only infer the first 50 samples')

|

||||

parser.add_argument('--do_eval',

|

||||

default=False,

|

||||

action='store_true',

|

||||

help='Whether to evaluate the inference results.')

|

||||

parser.add_argument('--overwrite',

|

||||

default=False,

|

||||

action='store_true',

|

||||

help='Whether to overwrite the existing result file')

|

||||

return parser.parse_args()

|

||||

|

||||

|

||||

|

|

@ -339,28 +315,41 @@ def last_boxed_only_string(string):

|

|||

return retval

|

||||

|

||||

|

||||

def extract_answer(pred_str):

|

||||

if 'boxed' not in pred_str:

|

||||

return ''

|

||||

answer = pred_str.split('boxed')[-1]

|

||||

if len(answer) == 0:

|

||||

return ''

|

||||

elif (answer[0] == '{'):

|

||||

stack = 1

|

||||

a = ''

|

||||

for c in answer[1:]:

|

||||

if (c == '{'):

|

||||

stack += 1

|

||||

a += c

|

||||

elif (c == '}'):

|

||||

stack -= 1

|

||||

if (stack == 0): break

|

||||

a += c

|

||||

else:

|

||||

a += c

|

||||

else:

|

||||

a = answer.split('$')[0].strip()

|

||||

def extract_answer(pred_str: str, execute: bool = False) -> str:

|

||||

if re.search('\boxed|boxed', pred_str):

|

||||

answer = re.split('\boxed|boxed', pred_str)[-1]

|

||||

if len(answer) == 0:

|

||||

return ''

|

||||

elif (answer[0] == '{'):

|

||||

stack = 1

|

||||

a = ''

|

||||

for c in answer[1:]:

|

||||

if (c == '{'):

|

||||

stack += 1

|

||||

a += c

|

||||

elif (c == '}'):

|

||||

stack -= 1

|

||||

if (stack == 0): break

|

||||

a += c

|

||||

else:

|

||||

a += c

|

||||

else:

|

||||

a = answer.split('$')[0].strip()

|

||||

elif re.search('[Tt]he (final )?answer is:?', pred_str):

|

||||

a = re.split('[Tt]he (final )?answer is:?',

|

||||

pred_str)[-1].strip().rstrip('.')

|

||||

elif pred_str.startswith('```python') and execute:

|

||||

# fall back to program

|

||||

from lagent import get_tool

|

||||

|

||||

a = get_tool('IPythonInteractive').exec(pred_str).value or ''

|

||||

else: # use the last number

|

||||

pred = re.findall(r'-?\d*\.?\d+', pred_str.replace(',', ''))

|

||||

if len(pred) >= 1:

|

||||

a = pred[-1]

|

||||

else:

|

||||

a = ''

|

||||

# multiple lines

|

||||

pred = a.split('\n')[0]

|

||||

if pred != '' and pred[0] == ':':

|

||||

pred = pred[1:]

|

||||

|

|

@ -501,8 +490,9 @@ def symbolic_equal_process(a, b, output_queue):

|

|||

def call_with_timeout(func, *args, timeout=1, **kwargs):

|

||||

output_queue = multiprocessing.Queue()

|

||||

process_args = args + (output_queue, )

|

||||

process = multiprocessing.Process(

|

||||

target=func, args=process_args, kwargs=kwargs)

|

||||

process = multiprocessing.Process(target=func,

|

||||

args=process_args,

|

||||

kwargs=kwargs)

|

||||

process.start()

|

||||

process.join(timeout)

|

||||

|

||||

|

|

@ -525,65 +515,45 @@ def init_agent(backend: str, max_turn: int, model_path: str, tp: int,

|

|||

pipeline_cfg=dict(backend_config=TurbomindEngineConfig(tp=tp)),

|

||||

**kwargs)

|

||||

elif backend == 'hf':

|

||||

model = HFTransformer(

|

||||

path=model_path, meta_template=INTERNLM2_META, **kwargs)

|

||||

model = HFTransformer(path=model_path,

|

||||

meta_template=INTERNLM2_META,

|

||||

**kwargs)

|

||||

else:

|

||||

raise NotImplementedError

|

||||

|

||||

agent = Internlm2Agent(

|

||||

llm=model,

|

||||

protocol=Internlm2Protocol(

|

||||

meta_prompt=None, interpreter_prompt=DEFAULT_PROMPT),

|

||||

protocol=Internlm2Protocol(meta_prompt=None,

|

||||

interpreter_prompt=DEFAULT_PROMPT),

|

||||

interpreter_executor=ActionExecutor(actions=[

|

||||

IPythonInteractiveManager(

|

||||

max_workers=200,

|

||||

ci_lock=os.path.join(

|

||||

os.path.dirname(__file__), '.ipython.lock'))

|

||||

IPythonInteractiveManager(max_workers=200,

|

||||

ci_lock=os.path.join(

|

||||

os.path.dirname(__file__),

|

||||

'.ipython.lock'))

|

||||

]),

|

||||

max_turn=max_turn)

|

||||

return agent

|

||||

|

||||

|

||||

def predict(args):

|

||||

if args.dataset == 'gsm8k':

|

||||

|

||||

def process(d, k):

|

||||

d['answer'] = re.sub(r'#### (.+)', r'The answer is \1',

|

||||

re.sub(r'<<.*?>>', '',

|

||||

d['answer'])).replace('$', '')

|

||||

d['idx'] = k

|

||||

d['query'] = d['question'].replace('$', '')

|

||||

d['gt'] = re.search('The answer is (.+)', d['answer'])[1]

|

||||

d['pred'], d['steps'], d['error'] = [], [], None

|

||||

return d

|

||||

|

||||

dataset = load_dataset(

|

||||

'gsm8k', 'main', split='test').map(process, True)

|

||||

|

||||

elif args.dataset == 'math':

|

||||

|