mirror of https://github.com/InternLM/InternLM

Merge branch 'main' into tools/convert2llama

commit

1758aff49c

|

|

@ -1,128 +0,0 @@

|

|||

name: demo-in-readme

|

||||

on:

|

||||

pull_request:

|

||||

branches:

|

||||

- "main"

|

||||

- "develop"

|

||||

paths-ignore:

|

||||

- "docs/**"

|

||||

- "**.md"

|

||||

env:

|

||||

WORKSPACE_PREFIX: $(echo $GITHUB_WORKSPACE |cut -d '/' -f 1-4)

|

||||

SLURM_PARTITION: llm_s

|

||||

|

||||

jobs:

|

||||

check-requirements:

|

||||

runs-on: [t_cluster]

|

||||

steps:

|

||||

- name: mask env

|

||||

run: |

|

||||

echo "::add-mask::${{env.WORKSPACE_PREFIX}}"

|

||||

- uses: actions/checkout@v3

|

||||

with:

|

||||

fetch-depth: 2

|

||||

- name: check-requirements

|

||||

run: |

|

||||

source activate internlm-env-test

|

||||

changed_files=$(git diff --name-only -r HEAD^1 HEAD)

|

||||

echo $changed_files

|

||||

if [[ $changed_files =~ "runtime.txt" ]]; then

|

||||

pip install -r requirements/runtime.txt

|

||||

fi

|

||||

|

||||

if [[ $changed_files =~ "torch.txt" ]]; then

|

||||

pip install -r requirements/torch.txt

|

||||

fi

|

||||

|

||||

dataset-preparation:

|

||||

if: ${{ always() }}

|

||||

needs: check-requirements

|

||||

runs-on: [t_cluster]

|

||||

steps:

|

||||

- name: mask env

|

||||

run: |

|

||||

echo "::add-mask::${{env.WORKSPACE_PREFIX}}"

|

||||

- uses: actions/checkout@v3

|

||||

|

||||

- name: raw-chinese-data

|

||||

run: |

|

||||

source activate internlm-env-test

|

||||

sh ./ci_scripts/data/tokenizer_chinese.sh ${GITHUB_RUN_ID}-${GITHUB_JOB}

|

||||

|

||||

- name: alpaca-data

|

||||

run: |

|

||||

source activate internlm-env-test

|

||||

sh ./ci_scripts/data/tokenizer_alpaca.sh

|

||||

|

||||

train:

|

||||

if: ${{ always() }}

|

||||

needs: check-requirements

|

||||

runs-on: [t_cluster]

|

||||

timeout-minutes: 30

|

||||

steps:

|

||||

- name: mask env

|

||||

run: |

|

||||

echo "::add-mask::${{env.WORKSPACE_PREFIX}}"

|

||||

- uses: actions/checkout@v3

|

||||

|

||||

- name: slurm-train

|

||||

id: basic_train

|

||||

run: |

|

||||

source activate internlm-env-test

|

||||

sh ./ci_scripts/train/slurm_train.sh ${GITHUB_RUN_ID}-${GITHUB_JOB}

|

||||

|

||||

- name: load_preset_ckpt

|

||||

if: ${{ failure() && steps.basic_train.conclusion == 'failure' }}

|

||||

run: |

|

||||

source activate internlm-env-test

|

||||

export PYTHONPATH=$PWD:$PYTHONPATH

|

||||

sh ./ci_scripts/train/load_ckpt.sh 7B_load_preset_ckpt ${GITHUB_RUN_ID}-${GITHUB_JOB}

|

||||

|

||||

- name: load_new_ckpt

|

||||

run: |

|

||||

source activate internlm-env-test

|

||||

export PYTHONPATH=$PWD:$PYTHONPATH

|

||||

sh ./ci_scripts/train/load_ckpt.sh 7B_load_new_ckpt ${GITHUB_RUN_ID}-${GITHUB_JOB}

|

||||

rsync -av --remove-source-files $GITHUB_WORKSPACE/llm_ckpts ${{env.WORKSPACE_PREFIX}}/ci_clean_bak

|

||||

|

||||

- name: torchrun-train

|

||||

run: |

|

||||

source activate internlm-env-test

|

||||

sh ./ci_scripts/train/torchrun.sh ${GITHUB_RUN_ID}-${GITHUB_JOB}

|

||||

rsync -av --remove-source-files $GITHUB_WORKSPACE/llm_ckpts ${{env.WORKSPACE_PREFIX}}/ci_clean_bak

|

||||

|

||||

convert-model-then-load:

|

||||

if: ${{ always() }}

|

||||

needs: check-requirements

|

||||

runs-on: [t_cluster]

|

||||

timeout-minutes: 15

|

||||

steps:

|

||||

- name: mask env

|

||||

run: |

|

||||

echo "::add-mask::${{env.WORKSPACE_PREFIX}}"

|

||||

- uses: actions/checkout@v3

|

||||

|

||||

- name: convert-model-then-load

|

||||

run: |

|

||||

source activate internlm-env-test

|

||||

export PYTHONPATH=$PWD:$PYTHONPATH

|

||||

sh ./ci_scripts/model/convert_to_hf.sh

|

||||

cd ./hf_ckpt

|

||||

srun -p ${SLURM_PARTITION} --quotatype=spot --job-name=${GITHUB_RUN_ID}-${GITHUB_JOB} --gpus-per-task=2 python ../ci_scripts/model/loaded_as_transformer.py

|

||||

cd ..

|

||||

rsync -av --remove-source-files $GITHUB_WORKSPACE/hf_ckpt ${{env.WORKSPACE_PREFIX}}/ci_clean_bak

|

||||

load-chat-model-in-hf:

|

||||

if: ${{ always() }}

|

||||

needs: check-requirements

|

||||

runs-on: [t_cluster]

|

||||

timeout-minutes: 15

|

||||

steps:

|

||||

- name: mask env

|

||||

run: |

|

||||

echo "::add-mask::${{env.WORKSPACE_PREFIX}}"

|

||||

- uses: actions/checkout@v3

|

||||

|

||||

- name: chat-model-in-hf

|

||||

run: |

|

||||

source activate internlm-env-test

|

||||

srun -p ${SLURM_PARTITION} --quotatype=spot --job-name=${GITHUB_RUN_ID}-${GITHUB_JOB} --gpus-per-task=2 python ./ci_scripts/model/demo_load_7B_chat_model.py

|

||||

17

.owners.yml

17

.owners.yml

|

|

@ -1,17 +0,0 @@

|

|||

assign:

|

||||

issues: disabled

|

||||

pull_requests: disabled

|

||||

|

||||

strategy:

|

||||

# random

|

||||

daily-shift-based

|

||||

schedule:

|

||||

'*/1 * * * *'

|

||||

assignees:

|

||||

- yhcc

|

||||

- yhcc

|

||||

- sunpengsdu

|

||||

- sunpengsdu

|

||||

- ZwwWayne

|

||||

- ZwwWayne

|

||||

- yhcc

|

||||

18

README.md

18

README.md

|

|

@ -18,7 +18,7 @@

|

|||

[](https://github.com/internLM/OpenCompass/)

|

||||

<!-- [](https://internlm.readthedocs.io/zh_CN/latest/?badge=latest) -->

|

||||

[📘Commercial Application](#license) |

|

||||

[🤗HuggingFace](https://huggingface.co/spaces/internlm/internlm2-Chat-7B) |

|

||||

[🤗HuggingFace](https://huggingface.co/internlm) |

|

||||

[🆕Update News](#news) |

|

||||

[🤔Reporting Issues](https://github.com/InternLM/InternLM/issues/new)

|

||||

|

||||

|

|

@ -64,14 +64,16 @@ InternLM2 series are released with the following features:

|

|||

| **InternLM2-Chat-20B-SFT** | [🤗internlm/internlm2-chat-20b-sft](https://huggingface.co/internlm/internlm2-chat-20b-sft) | [<img src="./assets/modelscope_logo.png" width="20px" /> internlm2-chat-20b-sft](https://modelscope.cn/models/Shanghai_AI_Laboratory/internlm2-chat-20b-sft/summary) | [](https://openxlab.org.cn/models/detail/OpenLMLab/internlm2-chat-20b-sft) | 2024-01-17 |

|

||||

| **InternLM2-Chat-20B** | [🤗internlm/internlm2-chat-20b](https://huggingface.co/internlm/internlm2-chat-20b) | [<img src="./assets/modelscope_logo.png" width="20px" /> internlm2-chat-20b](https://modelscope.cn/models/Shanghai_AI_Laboratory/internlm2-chat-20b/summary) | [](https://openxlab.org.cn/models/detail/OpenLMLab/internlm2-chat-20b) | 2024-01-17 |

|

||||

|

||||

**Note of Models:**

|

||||

**Notes:**

|

||||

|

||||

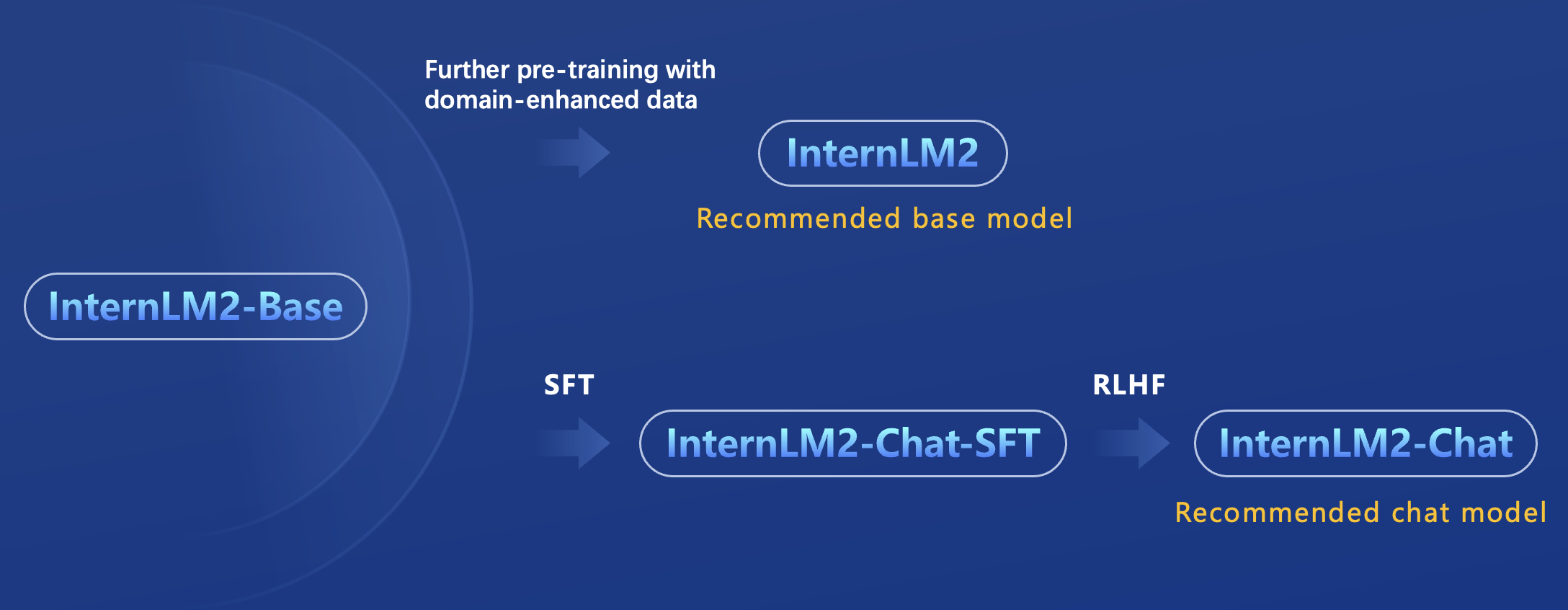

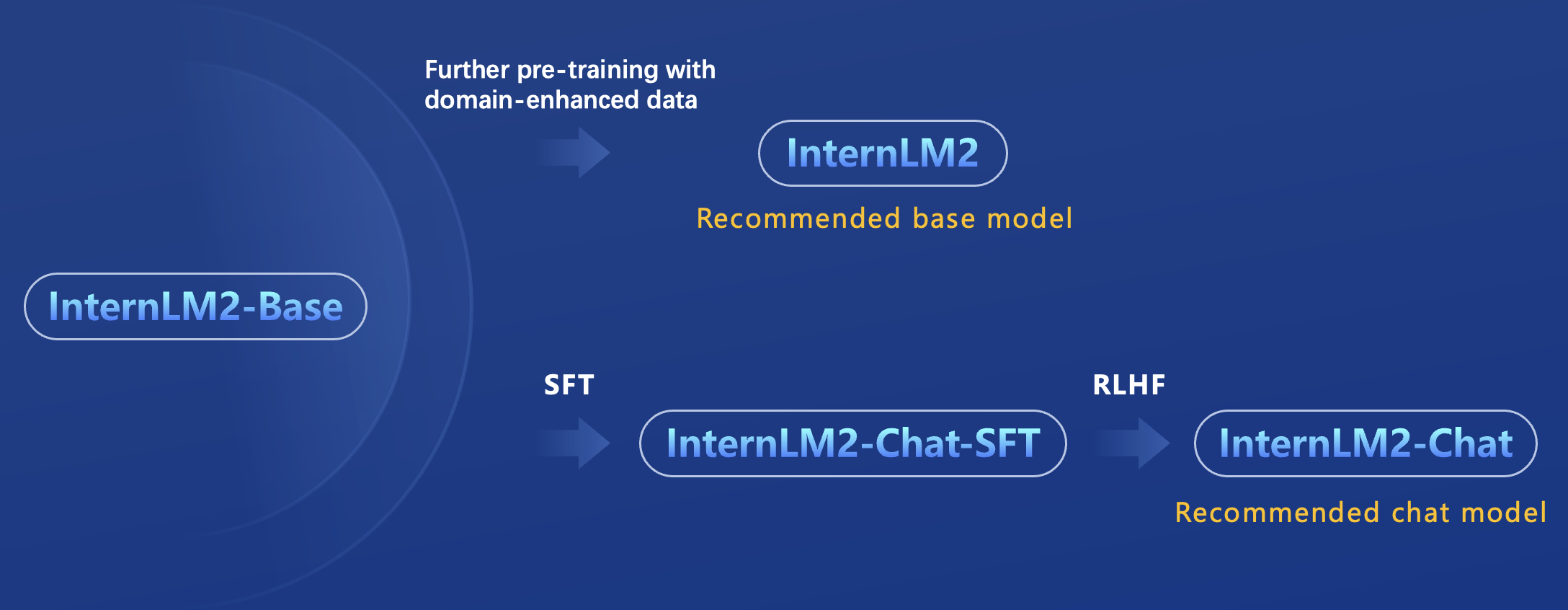

The release of InternLM2 series contains two model sizes: 7B and 20B. 7B models are efficient for research and application and 20B models are more powerful and can support more complex scenarios. For each model size, there are four types of models for different user requirements

|

||||

The release of InternLM2 series contains two model sizes: 7B and 20B. 7B models are efficient for research and application and 20B models are more powerful and can support more complex scenarios. The relation of these models are shown as follows.

|

||||

|

||||

1. InternLM2-Base: Foundation models with high quality and high adaptation flexibility, which serve as a good starting point for downstream deep adaptations.

|

||||

2. InternLM2: Optimized in multiple dimensions based on InternLM2-Base, obtaining state-of-the-art performance in evaluation with good language capability. InternLM2 models are recommended for consideration in most applications.

|

||||

3. InternLM2-Chat-SFT: Intermediate version of InternLM2-Chat that only undergoes supervised fine-tuning (SFT), based on the InternLM2-Base model. We release them to benefit research on alignment.

|

||||

4. InternLM2-Chat: Further aligned on top of InternLM2-Chat-SFT through online RLHF. InternLM2-Chat exhibits better instruction following, chat experience, and function call, which is recommended for downstream applications.

|

||||

|

||||

|

||||

1. **InternLM2-Base**: Foundation models with high quality and high adaptation flexibility, which serve as a good starting point for downstream deep adaptations.

|

||||

2. **InternLM2**: Further pretrain with general domain data and domain-enhanced corpus, obtaining state-of-the-art performance in evaluation with good language capability. InternLM2 models are recommended for consideration in most applications.

|

||||

3. **InternLM2-Chat-SFT**: Intermediate version of InternLM2-Chat that only undergoes supervised fine-tuning (SFT), based on the InternLM2-Base model. We release them to benefit research on alignment.

|

||||

4. **InternLM2-Chat**: Further aligned on top of InternLM2-Chat-SFT through online RLHF. InternLM2-Chat exhibits better instruction following, chat experience, and function call, which is recommended for downstream applications.

|

||||

|

||||

**Limitations:** Although we have made efforts to ensure the safety of the model during the training process and to encourage the model to generate text that complies with ethical and legal requirements, the model may still produce unexpected outputs due to its size and probabilistic generation paradigm. For example, the generated responses may contain biases, discrimination, or other harmful content. Please do not propagate such content. We are not responsible for any consequences resulting from the dissemination of harmful information.

|

||||

|

||||

|

|

@ -81,7 +83,7 @@ The release of InternLM2 series contains two model sizes: 7B and 20B. 7B models

|

|||

|

||||

| Dataset | Baichuan2-7B-Chat | Mistral-7B-Instruct-v0.2 | Qwen-7B-Chat | InternLM2-Chat-7B | ChatGLM3-6B | Baichuan2-13B-Chat | Mixtral-8x7B-Instruct-v0.1 | Qwen-14B-Chat | InternLM2-Chat-20B |

|

||||

|-----------------------|-------------------|--------------------------|--------------|-------------------|-------------|---------------------|--------------------------------|---------------|---------------------|

|

||||

| MMLU | 50.1 | 59.2 | 57.1 | 63.7 | 58.0 | 56.6 | 70.3 | 66.7 | 65.1 |

|

||||

| MMLU | 50.1 | 59.2 | 57.1 | 63.7 | 58.0 | 56.6 | 70.3 | 66.7 | 66.5 |

|

||||

| CMMLU | 53.4 | 42.0 | 57.9 | 63.0 | 57.8 | 54.8 | 50.6 | 68.1 | 65.1 |

|

||||

| AGIEval | 35.3 | 34.5 | 39.7 | 47.2 | 44.2 | 40.0 | 41.7 | 46.5 | 50.3 |

|

||||

| C-Eval | 53.9 | 42.4 | 59.8 | 60.8 | 59.1 | 56.3 | 54.0 | 71.5 | 63.0 |

|

||||

|

|

|

|||

|

|

@ -19,7 +19,7 @@

|

|||

<!-- [](https://internlm.readthedocs.io/zh_CN/latest/?badge=latest) -->

|

||||

|

||||

[📘商业授权](#开源许可证) |

|

||||

[🤗HuggingFace](https://huggingface.co/spaces/internlm/internlm2-Chat-7B) |

|

||||

[🤗HuggingFace](https://huggingface.co/internlm) |

|

||||

[🆕最新消息](#更新) |

|

||||

[🤔提交反馈](https://github.com/InternLM/InternLM/issues/new)

|

||||

|

||||

|

|

@ -62,14 +62,16 @@ InternLM2 系列模型在本仓库正式发布,具有如下特性:

|

|||

| **InternLM2-Chat-20B-SFT** | [🤗internlm/internlm2-chat-20b-sft](https://huggingface.co/internlm/internlm2-chat-20b-sft) | [<img src="./assets/modelscope_logo.png" width="20px" /> internlm2-chat-20b-sft](https://modelscope.cn/models/Shanghai_AI_Laboratory/internlm2-chat-20b-sft/summary) | [](https://openxlab.org.cn/models/detail/OpenLMLab/internlm2-chat-20b-sft) | 2024-01-17 |

|

||||

| **InternLM2-Chat-20B** | [🤗internlm/internlm2-chat-20b](https://huggingface.co/internlm/internlm2-chat-20b) | [<img src="./assets/modelscope_logo.png" width="20px" /> internlm2-chat-20b](https://modelscope.cn/models/Shanghai_AI_Laboratory/internlm2-chat-20b/summary) | [](https://openxlab.org.cn/models/detail/OpenLMLab/internlm2-chat-20b) | 2024-01-17 |

|

||||

|

||||

**关于模型说明:**

|

||||

**模型说明:**

|

||||

|

||||

在此次发布中,InternLM2 包含两种模型规格:7B 和 20B。7B 为轻量级的研究和应用提供了一个轻便但性能不俗的模型,20B 模型的综合性能更为强劲,可以有效支持更加复杂的实用场景。面向不同的使用需求,每个规格包含四个模型版本:

|

||||

在此次发布中,InternLM2 包含两种模型规格:7B 和 20B。7B 为轻量级的研究和应用提供了一个轻便但性能不俗的模型,20B 模型的综合性能更为强劲,可以有效支持更加复杂的实用场景。每个规格不同模型关系如下图所示:

|

||||

|

||||

1. InternLM2-Base:高质量和具有很强可塑性的模型基座,是模型进行深度领域适配的高质量起点。

|

||||

2. InternLM2:在 Base 模型基础上,在多个能力方向进行了强化,在评测中成绩优异,同时保持了很好的通用语言能力,是我们推荐的在大部分应用中考虑选用的优秀基座。

|

||||

3. InternLM2-Chat-SFT: 基于 InternLM2-Base 模型进行了有监督微调,是 InternLM2-Chat 模型的中间版本。我们将它们开源以助力社区在对齐方面的研究。

|

||||

4. InternLM2-Chat: 在 InternLM2-Chat-SFT 的基础上进行了 online RLHF 以进一步对齐. InternLM2-Chat 面向对话交互进行了优化,具有较好的指令遵循、共情聊天和调用工具等的能力,是我们推荐直接用于下游应用的模型。

|

||||

|

||||

|

||||

1. **InternLM2-Base**:高质量和具有很强可塑性的模型基座,是模型进行深度领域适配的高质量起点。

|

||||

2. **InternLM2**:进一步在大规模无标签数据上进行预训练,并结合特定领域的增强语料库进行训练,在评测中成绩优异,同时保持了很好的通用语言能力,是我们推荐的在大部分应用中考虑选用的优秀基座。

|

||||

3. **InternLM2-Chat-SFT**: 基于 InternLM2-Base 模型进行了有监督微调,是 InternLM2-Chat 模型的中间版本。我们将它们开源以助力社区在对齐方面的研究。

|

||||

4. **InternLM2-Chat**: 在 InternLM2-Chat-SFT 的基础上进行了 online RLHF 以进一步对齐. InternLM2-Chat 面向对话交互进行了优化,具有较好的指令遵循、共情聊天和调用工具等的能力,是我们推荐直接用于下游应用的模型。

|

||||

|

||||

**局限性:** 尽管在训练过程中我们非常注重模型的安全性,尽力促使模型输出符合伦理和法律要求的文本,但受限于模型大小以及概率生成范式,模型可能会产生各种不符合预期的输出,例如回复内容包含偏见、歧视等有害内容,请勿传播这些内容。由于传播不良信息导致的任何后果,本项目不承担责任。

|

||||

|

||||

|

|

@ -79,7 +81,7 @@ InternLM2 系列模型在本仓库正式发布,具有如下特性:

|

|||

|

||||

| Dataset | Baichuan2-7B-Chat | Mistral-7B-Instruct-v0.2 | Qwen-7B-Chat | InternLM2-Chat-7B | ChatGLM3-6B | Baichuan2-13B-Chat | Mixtral-8x7B-Instruct-v0.1 | Qwen-14B-Chat | InternLM2-Chat-20B |

|

||||

|-----------------------|-------------------|--------------------------|--------------|-------------------|-------------|---------------------|--------------------------------|---------------|---------------------|

|

||||

| MMLU | 50.1 | 59.2 | 57.1 | 63.7 | 58.0 | 56.6 | 70.3 | 66.7 | 65.1 |

|

||||

| MMLU | 50.1 | 59.2 | 57.1 | 63.7 | 58.0 | 56.6 | 70.3 | 66.7 | 66.5 |

|

||||

| CMMLU | 53.4 | 42.0 | 57.9 | 63.0 | 57.8 | 54.8 | 50.6 | 68.1 | 65.1 |

|

||||

| AGIEval | 35.3 | 34.5 | 39.7 | 47.2 | 44.2 | 40.0 | 41.7 | 46.5 | 50.3 |

|

||||

| C-Eval | 53.9 | 42.4 | 59.8 | 60.8 | 59.1 | 56.3 | 54.0 | 71.5 | 63.0 |

|

||||

|

|

|

|||

|

|

@ -39,7 +39,7 @@ Parameter explanation:

|

|||

A simple usage example is as follows:

|

||||

|

||||

```bash

|

||||

python tools/pal_inference.py internlm/internlm-chat-7k ./output -v

|

||||

python tools/pal_inference.py internlm/internlm-chat-7b ./output -v

|

||||

```

|

||||

|

||||

Each line in the output file includes the input question, correct answer, executed answer, score, and the Python code block generated by the model:

|

||||

|

|

|

|||

|

|

@ -0,0 +1,477 @@

|

|||

# flake8: noqa

|

||||

|

||||

# This file is modified from:

|

||||

# hhttps://github.com/reasoning-machines/pal/blob/main/pal/core/interface.py

|

||||

#

|

||||

# Copyright 2022 PAL Authors. All rights reserved.

|

||||

#

|

||||

# Licensed under the Apache License, Version 2.0 (the "License");

|

||||

# you may not use this file except in compliance with the License.

|

||||

# You may obtain a copy of the License at

|

||||

#

|

||||

# http://www.apache.org/licenses/LICENSE-2.0

|

||||

#

|

||||

# Unless required by applicable law or agreed to in writing, software

|

||||

# distributed under the License is distributed on an "AS IS" BASIS,

|

||||

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

||||

# See the License for the specific language governing permissions and

|

||||

# limitations under the License.

|

||||

|

||||

import argparse

|

||||

import copy

|

||||

import json

|

||||

import os

|

||||

import signal

|

||||

import warnings

|

||||

from dataclasses import asdict, dataclass

|

||||

from typing import Any, Callable, Dict, List, Optional

|

||||

|

||||

import torch

|

||||

import tqdm

|

||||

from datasets import load_dataset

|

||||

from torch import nn

|

||||

from transformers import AutoModelForCausalLM, AutoTokenizer

|

||||

from transformers.generation.utils import LogitsProcessorList, StoppingCriteriaList

|

||||

from transformers.utils import logging

|

||||

|

||||

logger = logging.get_logger(__name__)

|

||||

|

||||

|

||||

def parse_args():

|

||||

parser = argparse.ArgumentParser(description="PAL Inference")

|

||||

parser.add_argument("model", type=str, help="Path to the pre-trained LLM used for inference.")

|

||||

parser.add_argument(

|

||||

"out_dir", type=str, help="Name of the output folder where generated code snippets will be saved."

|

||||

)

|

||||

parser.add_argument("--dataset", default="gsm8k", type=str, help="Name of the dataset used for code generation.")

|

||||

parser.add_argument(

|

||||

"--max_length",

|

||||

default=2048,

|

||||

type=int,

|

||||

help="Maximum input token length for the natural language description.",

|

||||

)

|

||||

parser.add_argument(

|

||||

"--top_p",

|

||||

default=0.8,

|

||||

type=float,

|

||||

help="Probability threshold to choose sample tokens during generation.",

|

||||

)

|

||||

parser.add_argument(

|

||||

"--eoh",

|

||||

default="",

|

||||

type=str,

|

||||

help="End of human (user) token.",

|

||||

)

|

||||

parser.add_argument(

|

||||

"--eoa",

|

||||

default="",

|

||||

type=str,

|

||||

help="End of assistant (bot) token.",

|

||||

)

|

||||

parser.add_argument(

|

||||

"--eos",

|

||||

default="",

|

||||

type=str,

|

||||

help="End of system token.",

|

||||

)

|

||||

parser.add_argument(

|

||||

"--temperature", "-t", default=1.0, type=float, help="Temperature of token sampling during generation."

|

||||

)

|

||||

parser.add_argument(

|

||||

"--time_out", default=100, type=float, help="Maximum time allowed for executing generated code."

|

||||

)

|

||||

parser.add_argument(

|

||||

"--verbose",

|

||||

"-v",

|

||||

action="store_true",

|

||||

help="Print code error information when executing generated code (optional).",

|

||||

)

|

||||

parser.add_argument("--append", "-a", action="store_true", help="Append output to the history results (optional).")

|

||||

args = parser.parse_args()

|

||||

return args

|

||||

|

||||

|

||||

class Timeout:

|

||||

"""Timer to execute code

|

||||

|

||||

Adapted from https://github.com/reasoning-machines/pal

|

||||

|

||||

Args:

|

||||

seconds (float): The maximum seconds to execute code

|

||||

error_message (str)

|

||||

"""

|

||||

|

||||

def __init__(self, seconds=1, error_message="Timeout"):

|

||||

self.seconds = seconds

|

||||

self.error_message = error_message

|

||||

|

||||

def timeout_handler(self, signum, frame):

|

||||

raise TimeoutError(self.error_message)

|

||||

|

||||

def __enter__(self):

|

||||

signal.signal(signal.SIGALRM, self.timeout_handler)

|

||||

signal.alarm(self.seconds)

|

||||

|

||||

def __exit__(self, error_type, value, traceback):

|

||||

signal.alarm(0)

|

||||

|

||||

|

||||

@dataclass

|

||||

class GenerationConfig:

|

||||

max_length: int = 64

|

||||

top_p: float = 0.8

|

||||

temperature: float = 0.8

|

||||

do_sample: bool = True

|

||||

repetition_penalty: float = 1.0

|

||||

|

||||

|

||||

@torch.inference_mode()

|

||||

def generate_interactive(

|

||||

model,

|

||||

tokenizer,

|

||||

prompt,

|

||||

generation_config: Optional[GenerationConfig] = None,

|

||||

logits_processor: Optional[LogitsProcessorList] = None,

|

||||

stopping_criteria: Optional[StoppingCriteriaList] = None,

|

||||

prefix_allowed_tokens_fn: Optional[Callable[[int, torch.Tensor], List[int]]] = None,

|

||||

additional_eos_token_id: Optional[int] = None,

|

||||

**kwargs,

|

||||

):

|

||||

inputs = tokenizer([prompt], padding=True, return_tensors="pt")

|

||||

input_length = len(inputs["input_ids"][0])

|

||||

for k, v in inputs.items():

|

||||

inputs[k] = v.cuda()

|

||||

input_ids = inputs["input_ids"]

|

||||

batch_size, input_ids_seq_length = input_ids.shape[0], input_ids.shape[-1] # noqa: F841 # pylint: disable=W0612

|

||||

if generation_config is None:

|

||||

generation_config = model.generation_config

|

||||

generation_config = copy.deepcopy(generation_config)

|

||||

model_kwargs = generation_config.update(**kwargs)

|

||||

bos_token_id, eos_token_id = ( # noqa: F841 # pylint: disable=W0612

|

||||

generation_config.bos_token_id,

|

||||

generation_config.eos_token_id,

|

||||

)

|

||||

if isinstance(eos_token_id, int):

|

||||

eos_token_id = [eos_token_id]

|

||||

if additional_eos_token_id is not None:

|

||||

eos_token_id.append(additional_eos_token_id)

|

||||

has_default_max_length = kwargs.get("max_length") is None and generation_config.max_length is not None

|

||||

if has_default_max_length and generation_config.max_new_tokens is None:

|

||||

warnings.warn(

|

||||

f"Using `max_length`'s default ({generation_config.max_length}) to control the generation length. "

|

||||

"This behaviour is deprecated and will be removed from the config in v5 of Transformers -- we"

|

||||

" recommend using `max_new_tokens` to control the maximum length of the generation.",

|

||||

UserWarning,

|

||||

)

|

||||

elif generation_config.max_new_tokens is not None:

|

||||

generation_config.max_length = generation_config.max_new_tokens + input_ids_seq_length

|

||||

if not has_default_max_length:

|

||||

logger.warn( # pylint: disable=W4902

|

||||

f"Both `max_new_tokens` (={generation_config.max_new_tokens}) and `max_length`(="

|

||||

f"{generation_config.max_length}) seem to have been set. `max_new_tokens` will take precedence. "

|

||||

"Please refer to the documentation for more information. "

|

||||

"(https://huggingface.co/docs/transformers/main/en/main_classes/text_generation)",

|

||||

UserWarning,

|

||||

)

|

||||

|

||||

if input_ids_seq_length >= generation_config.max_length:

|

||||

input_ids_string = "input_ids"

|

||||

logger.warning(

|

||||

f"Input length of {input_ids_string} is {input_ids_seq_length}, but `max_length` is set to"

|

||||

f" {generation_config.max_length}. This can lead to unexpected behavior. You should consider"

|

||||

" increasing `max_new_tokens`."

|

||||

)

|

||||

|

||||

# 2. Set generation parameters if not already defined

|

||||

logits_processor = logits_processor if logits_processor is not None else LogitsProcessorList()

|

||||

stopping_criteria = stopping_criteria if stopping_criteria is not None else StoppingCriteriaList()

|

||||

|

||||

logits_processor = model._get_logits_processor(

|

||||

generation_config=generation_config,

|

||||

input_ids_seq_length=input_ids_seq_length,

|

||||

encoder_input_ids=input_ids,

|

||||

prefix_allowed_tokens_fn=prefix_allowed_tokens_fn,

|

||||

logits_processor=logits_processor,

|

||||

)

|

||||

|

||||

stopping_criteria = model._get_stopping_criteria(

|

||||

generation_config=generation_config, stopping_criteria=stopping_criteria

|

||||

)

|

||||

logits_warper = model._get_logits_warper(generation_config)

|

||||

|

||||

unfinished_sequences = input_ids.new(input_ids.shape[0]).fill_(1)

|

||||

scores = None

|

||||

while True:

|

||||

model_inputs = model.prepare_inputs_for_generation(input_ids, **model_kwargs)

|

||||

# forward pass to get next token

|

||||

outputs = model(

|

||||

**model_inputs,

|

||||

return_dict=True,

|

||||

output_attentions=False,

|

||||

output_hidden_states=False,

|

||||

)

|

||||

|

||||

next_token_logits = outputs.logits[:, -1, :]

|

||||

|

||||

# pre-process distribution

|

||||

next_token_scores = logits_processor(input_ids, next_token_logits)

|

||||

next_token_scores = logits_warper(input_ids, next_token_scores)

|

||||

|

||||

# sample

|

||||

probs = nn.functional.softmax(next_token_scores, dim=-1)

|

||||

if generation_config.do_sample:

|

||||

next_tokens = torch.multinomial(probs, num_samples=1).squeeze(1)

|

||||

else:

|

||||

next_tokens = torch.argmax(probs, dim=-1)

|

||||

|

||||

# update generated ids, model inputs, and length for next step

|

||||

input_ids = torch.cat([input_ids, next_tokens[:, None]], dim=-1)

|

||||

model_kwargs = model._update_model_kwargs_for_generation(outputs, model_kwargs, is_encoder_decoder=False)

|

||||

unfinished_sequences = unfinished_sequences.mul((min(next_tokens != i for i in eos_token_id)).long())

|

||||

|

||||

output_token_ids = input_ids[0].cpu().tolist()

|

||||

output_token_ids = output_token_ids[input_length:]

|

||||

for each_eos_token_id in eos_token_id:

|

||||

if output_token_ids[-1] == each_eos_token_id:

|

||||

output_token_ids = output_token_ids[:-1]

|

||||

response = tokenizer.decode(output_token_ids)

|

||||

|

||||

yield response

|

||||

# stop when each sentence is finished, or if we exceed the maximum length

|

||||

if unfinished_sequences.max() == 0 or stopping_criteria(input_ids, scores):

|

||||

break

|

||||

|

||||

|

||||

class GenericRuntime:

|

||||

"""Adapted from https://github.com/reasoning-machines/pal"""

|

||||

|

||||

GLOBAL_DICT: dict = {}

|

||||

LOCAL_DICT = None

|

||||

HEADERS: List = []

|

||||

|

||||

def __init__(self):

|

||||

self._global_vars = copy.copy(self.GLOBAL_DICT)

|

||||

self._local_vars = copy.copy(self.LOCAL_DICT) if self.LOCAL_DICT else None

|

||||

|

||||

for c in self.HEADERS:

|

||||

self.exec_code(c)

|

||||

|

||||

def exec_code(self, code_piece: str) -> None:

|

||||

exec(code_piece, self._global_vars)

|

||||

|

||||

def eval_code(self, expr: str) -> Any:

|

||||

return eval(expr, self._global_vars)

|

||||

|

||||

def inject(self, var_dict: Dict[str, Any]) -> None:

|

||||

for k, v in var_dict.items():

|

||||

self._global_vars[k] = v

|

||||

|

||||

@property

|

||||

def answer(self):

|

||||

return self._global_vars["answer"]

|

||||

|

||||

|

||||

class PALInterface:

|

||||

"""PAL interface wrap fun:`generate_interactive` to extract and execute

|

||||

generated code.

|

||||

|

||||

Adapted from https://github.com/reasoning-machines/pal

|

||||

|

||||

Args:

|

||||

model (AutoModelForCausalLM)

|

||||

tokenizer (AutoTokenizer)

|

||||

generation_config (GenerationConfig): Decode strategies

|

||||

additional_eos_token_id (int): End of sentence token id, default: 103028

|

||||

get_answer_expr (str): The function name of generated code, default: "solution()"

|

||||

verbose (bool): Print error information

|

||||

"""

|

||||

|

||||

def __init__(

|

||||

self,

|

||||

model: AutoModelForCausalLM,

|

||||

tokenizer: AutoTokenizer,

|

||||

generation_config: GenerationConfig,

|

||||

additional_eos_token_id: int = 103028,

|

||||

get_answer_expr: str = "solution()",

|

||||

verbose: bool = False,

|

||||

):

|

||||

self.runtime = GenericRuntime()

|

||||

self.history: List = []

|

||||

self.model = model

|

||||

self.tokenizer = tokenizer

|

||||

self.generation_config = generation_config

|

||||

self.additional_eos_token_id = additional_eos_token_id

|

||||

self.answer_expr = get_answer_expr

|

||||

self.verbose = verbose

|

||||

|

||||

def generate(self, prompt):

|

||||

# The api will generate response word by word

|

||||

# we only need the last generation as the final results

|

||||

for cur_gen in generate_interactive(

|

||||

model=self.model,

|

||||

tokenizer=self.tokenizer,

|

||||

prompt=prompt,

|

||||

additional_eos_token_id=self.additional_eos_token_id,

|

||||

**asdict(self.generation_config),

|

||||

):

|

||||

continue

|

||||

# Get final response

|

||||

self.history.append(cur_gen)

|

||||

# Extract code block

|

||||

code = self.process_generation_to_code(cur_gen)

|

||||

return code

|

||||

|

||||

def process_generation_to_code(self, gens: str):

|

||||

if "```python" in gens:

|

||||

gens = gens.split("```python")[1].split("```")[0]

|

||||

elif "```" in gens:

|

||||

gens = gens.split("```")[1].split("```")[0]

|

||||

code = gens.split("\n")

|

||||

return code

|

||||

|

||||

def run(self, prompt, time_out: float = 100):

|

||||

code = self.generate(prompt)

|

||||

with Timeout(time_out):

|

||||

try:

|

||||

exec_result = self.execute(code)

|

||||

except Exception as e:

|

||||

if self.verbose:

|

||||

print(e)

|

||||

return exec_result

|

||||

|

||||

def execute(self, code: List[str]):

|

||||

self.runtime.exec_code("\n".join(code))

|

||||

return self.runtime.eval_code(self.answer_expr)

|

||||

|

||||

def clear_history(self):

|

||||

self.history = []

|

||||

|

||||

|

||||

def load_model(args):

|

||||

model = AutoModelForCausalLM.from_pretrained(args.model, trust_remote_code=True).to(torch.bfloat16).cuda()

|

||||

tokenizer = AutoTokenizer.from_pretrained(args.model, trust_remote_code=True)

|

||||

return model, tokenizer

|

||||

|

||||

|

||||

def load_data(args):

|

||||

# Load data from huggingface dataset

|

||||

if args.dataset == "gsm8k":

|

||||

gsm8k = load_dataset(path=args.dataset, name="main")

|

||||

test_set = gsm8k["test"]

|

||||

input_data = []

|

||||

for data in test_set:

|

||||

question = data["question"]

|

||||

target = float(data["answer"].split("#")[-1].replace(",", ""))

|

||||

input_data.append({"question": question, "target": target})

|

||||

else:

|

||||

raise NotImplementedError

|

||||

return input_data

|

||||

|

||||

|

||||

PROMPT = """<|System|>:You are a helpful assistant which use tools to solve mathematical reasoning questions. The tools you can use are:

|

||||

PythonExecutor: It can execute Python code. The code must be a function, and the function name must be 'solution'. The example format is as follows:

|

||||

```python

|

||||

def solution():

|

||||

variable_names_with_real_meaning = func(variable)

|

||||

return variable_names_with_real_meaning

|

||||

```{eos}

|

||||

<|User|>:Olivia has $23. She bought five bagels for $3 each. How much money does she have left?{eoh}

|

||||

<|Bot|>:

|

||||

```python

|

||||

def solution():

|

||||

money_initial = 23

|

||||

bagels = 5

|

||||

bagel_cost = 3

|

||||

money_spent = bagels * bagel_cost

|

||||

money_left = money_initial - money_spent

|

||||

result = money_left

|

||||

return result

|

||||

```{eoa}

|

||||

<|User|>:Michael had 58 golf balls. On tuesday, he lost 23 golf balls. On wednesday, he lost 2 more. How many golf balls did he have at the end of wednesday?{eoh}

|

||||

<|Bot|>:

|

||||

```python

|

||||

def solution():

|

||||

golf_balls_initial = 58

|

||||

golf_balls_lost_tuesday = 23

|

||||

golf_balls_lost_wednesday = 2

|

||||

golf_balls_left = golf_balls_initial - golf_balls_lost_tuesday - golf_balls_lost_wednesday

|

||||

result = golf_balls_left

|

||||

return result

|

||||

```{eoa}

|

||||

<|User|>:There were nine computers in the server room. Five more computers were installed each day, from monday to thursday. How many computers are now in the server room?{eoh}

|

||||

<|Bot|>:

|

||||

```python

|

||||

def solution():

|

||||

computers_initial = 9

|

||||

computers_per_day = 5

|

||||

num_days = 4 # 4 days between monday and thursday

|

||||

computers_added = computers_per_day * num_days

|

||||

computers_total = computers_initial + computers_added

|

||||

result = computers_total

|

||||

return result

|

||||

```{eoa}

|

||||

<|System|>:How about this question?{eos}

|

||||

<|User|>:{question}{eoh}

|

||||

<|Bot|>:""".strip()

|

||||

|

||||

|

||||

def main():

|

||||

|

||||

args = parse_args()

|

||||

|

||||

print("load model begin.")

|

||||

model, tokenizer = load_model(args)

|

||||

print("load model end.")

|

||||

|

||||

generation_config = GenerationConfig(max_length=args.max_length, top_p=args.top_p, temperature=args.temperature)

|

||||

|

||||

verbose = args.verbose

|

||||

interface = PALInterface(model=model, tokenizer=tokenizer, generation_config=generation_config, verbose=verbose)

|

||||

|

||||

if not os.path.exists(args.out_dir):

|

||||

os.makedirs(args.out_dir)

|

||||

savepath = os.path.join(args.out_dir, args.dataset + ".json")

|

||||

|

||||

# Load from history results

|

||||

if args.append and os.path.exists(savepath):

|

||||

lines = open(savepath).readlines()

|

||||

num_skip_exps = len(lines)

|

||||

scores = [x["score"] for x in map(json.loads, lines)]

|

||||

else:

|

||||

num_skip_exps = 0

|

||||

scores = []

|

||||

|

||||

examples = load_data(args)

|

||||

with open(savepath, "a" if args.append else "w") as f:

|

||||

pbar = tqdm.tqdm(examples[num_skip_exps:], initial=num_skip_exps, total=len(examples))

|

||||

for x in pbar:

|

||||

question = x["question"]

|

||||

result = copy.copy(x)

|

||||

|

||||

try:

|

||||

answer = interface.run(

|

||||

prompt=PROMPT.format(question=question, eoh=args.eoh, eoa=args.eoa, eos=args.eos),

|

||||

time_out=args.time_out,

|

||||

)

|

||||

answer = float(answer)

|

||||

score = 1 if abs(answer - x["target"]) < 1e-3 else 0

|

||||

except Exception as e:

|

||||

if verbose:

|

||||

print(e)

|

||||

answer = ""

|

||||

score = 0

|

||||

scores.append(score)

|

||||

result["answer"] = answer

|

||||

result["score"] = score

|

||||

result["generation"] = interface.history

|

||||

f.write(json.dumps(result) + "\n")

|

||||

|

||||

interface.clear_history()

|

||||

f.flush()

|

||||

|

||||

print(f"{args.model}: Accuracy - {sum(scores) / len(scores)}")

|

||||

torch.cuda.empty_cache()

|

||||

|

||||

|

||||

if __name__ == "__main__":

|

||||

main()

|

||||

|

|

@ -39,7 +39,7 @@ python pal_inference.py \

|

|||

简单的使用示例如下:

|

||||

|

||||

```bash

|

||||

python tools/pal_inference.py internlm/internlm-chat-7k ./output -v

|

||||

python tools/pal_inference.py internlm/internlm-chat-7b ./output -v

|

||||

```

|

||||

|

||||

其输出文件每一行包括输入的问题,正确答案,执行答案,得分,以及模型生成的 Python 代码块:

|

||||

|

|

|

|||

150

chat/web_demo.py

150

chat/web_demo.py

|

|

@ -7,18 +7,148 @@ Please refer to these links below for more information:

|

|||

3. transformers: https://github.com/huggingface/transformers

|

||||

"""

|

||||

|

||||

from dataclasses import asdict

|

||||

import copy

|

||||

import warnings

|

||||

from dataclasses import asdict, dataclass

|

||||

from typing import Callable, List, Optional

|

||||

|

||||

import streamlit as st

|

||||

import torch

|

||||

from torch import nn

|

||||

from transformers import AutoModelForCausalLM, AutoTokenizer

|

||||

from transformers.generation.utils import LogitsProcessorList, StoppingCriteriaList

|

||||

from transformers.utils import logging

|

||||

|

||||

from tools.transformers.interface import GenerationConfig, generate_interactive

|

||||

|

||||

logger = logging.get_logger(__name__)

|

||||

|

||||

|

||||

@dataclass

|

||||

class GenerationConfig:

|

||||

# this config is used for chat to provide more diversity

|

||||

max_length: int = 32768

|

||||

top_p: float = 0.8

|

||||

temperature: float = 0.8

|

||||

do_sample: bool = True

|

||||

repetition_penalty: float = 1.005

|

||||

|

||||

|

||||

@torch.inference_mode()

|

||||

def generate_interactive(

|

||||

model,

|

||||

tokenizer,

|

||||

prompt,

|

||||

generation_config: Optional[GenerationConfig] = None,

|

||||

logits_processor: Optional[LogitsProcessorList] = None,

|

||||

stopping_criteria: Optional[StoppingCriteriaList] = None,

|

||||

prefix_allowed_tokens_fn: Optional[Callable[[int, torch.Tensor], List[int]]] = None,

|

||||

additional_eos_token_id: Optional[int] = None,

|

||||

**kwargs,

|

||||

):

|

||||

inputs = tokenizer([prompt], padding=True, return_tensors="pt")

|

||||

input_length = len(inputs["input_ids"][0])

|

||||

for k, v in inputs.items():

|

||||

inputs[k] = v.cuda()

|

||||

input_ids = inputs["input_ids"]

|

||||

batch_size, input_ids_seq_length = input_ids.shape[0], input_ids.shape[-1] # noqa: F841 # pylint: disable=W0612

|

||||

if generation_config is None:

|

||||

generation_config = model.generation_config

|

||||

generation_config = copy.deepcopy(generation_config)

|

||||

model_kwargs = generation_config.update(**kwargs)

|

||||

bos_token_id, eos_token_id = ( # noqa: F841 # pylint: disable=W0612

|

||||

generation_config.bos_token_id,

|

||||

generation_config.eos_token_id,

|

||||

)

|

||||

if isinstance(eos_token_id, int):

|

||||

eos_token_id = [eos_token_id]

|

||||

if additional_eos_token_id is not None:

|

||||

eos_token_id.append(additional_eos_token_id)

|

||||

has_default_max_length = kwargs.get("max_length") is None and generation_config.max_length is not None

|

||||

if has_default_max_length and generation_config.max_new_tokens is None:

|

||||

warnings.warn(

|

||||

f"Using `max_length`'s default ({generation_config.max_length}) to control the generation length. "

|

||||

"This behaviour is deprecated and will be removed from the config in v5 of Transformers -- we"

|

||||

" recommend using `max_new_tokens` to control the maximum length of the generation.",

|

||||

UserWarning,

|

||||

)

|

||||

elif generation_config.max_new_tokens is not None:

|

||||

generation_config.max_length = generation_config.max_new_tokens + input_ids_seq_length

|

||||

if not has_default_max_length:

|

||||

logger.warn( # pylint: disable=W4902

|

||||

f"Both `max_new_tokens` (={generation_config.max_new_tokens}) and `max_length`(="

|

||||

f"{generation_config.max_length}) seem to have been set. `max_new_tokens` will take precedence. "

|

||||

"Please refer to the documentation for more information. "

|

||||

"(https://huggingface.co/docs/transformers/main/en/main_classes/text_generation)",

|

||||

UserWarning,

|

||||

)

|

||||

|

||||

if input_ids_seq_length >= generation_config.max_length:

|

||||

input_ids_string = "input_ids"

|

||||

logger.warning(

|

||||

f"Input length of {input_ids_string} is {input_ids_seq_length}, but `max_length` is set to"

|

||||

f" {generation_config.max_length}. This can lead to unexpected behavior. You should consider"

|

||||

" increasing `max_new_tokens`."

|

||||

)

|

||||

|

||||

# 2. Set generation parameters if not already defined

|

||||

logits_processor = logits_processor if logits_processor is not None else LogitsProcessorList()

|

||||

stopping_criteria = stopping_criteria if stopping_criteria is not None else StoppingCriteriaList()

|

||||

|

||||

logits_processor = model._get_logits_processor(

|

||||

generation_config=generation_config,

|

||||

input_ids_seq_length=input_ids_seq_length,

|

||||

encoder_input_ids=input_ids,

|

||||

prefix_allowed_tokens_fn=prefix_allowed_tokens_fn,

|

||||

logits_processor=logits_processor,

|

||||

)

|

||||

|

||||

stopping_criteria = model._get_stopping_criteria(

|

||||

generation_config=generation_config, stopping_criteria=stopping_criteria

|

||||

)

|

||||

logits_warper = model._get_logits_warper(generation_config)

|

||||

|

||||

unfinished_sequences = input_ids.new(input_ids.shape[0]).fill_(1)

|

||||

scores = None

|

||||

while True:

|

||||

model_inputs = model.prepare_inputs_for_generation(input_ids, **model_kwargs)

|

||||

# forward pass to get next token

|

||||

outputs = model(

|

||||

**model_inputs,

|

||||

return_dict=True,

|

||||

output_attentions=False,

|

||||

output_hidden_states=False,

|

||||

)

|

||||

|

||||

next_token_logits = outputs.logits[:, -1, :]

|

||||

|

||||

# pre-process distribution

|

||||

next_token_scores = logits_processor(input_ids, next_token_logits)

|

||||

next_token_scores = logits_warper(input_ids, next_token_scores)

|

||||

|

||||

# sample

|

||||

probs = nn.functional.softmax(next_token_scores, dim=-1)

|

||||

if generation_config.do_sample:

|

||||

next_tokens = torch.multinomial(probs, num_samples=1).squeeze(1)

|

||||

else:

|

||||

next_tokens = torch.argmax(probs, dim=-1)

|

||||

|

||||

# update generated ids, model inputs, and length for next step

|

||||

input_ids = torch.cat([input_ids, next_tokens[:, None]], dim=-1)

|

||||

model_kwargs = model._update_model_kwargs_for_generation(outputs, model_kwargs, is_encoder_decoder=False)

|

||||

unfinished_sequences = unfinished_sequences.mul((min(next_tokens != i for i in eos_token_id)).long())

|

||||

|

||||

output_token_ids = input_ids[0].cpu().tolist()

|

||||

output_token_ids = output_token_ids[input_length:]

|

||||

for each_eos_token_id in eos_token_id:

|

||||

if output_token_ids[-1] == each_eos_token_id:

|

||||

output_token_ids = output_token_ids[:-1]

|

||||

response = tokenizer.decode(output_token_ids)

|

||||

|

||||

yield response

|

||||

# stop when each sentence is finished, or if we exceed the maximum length

|

||||

if unfinished_sequences.max() == 0 or stopping_criteria(input_ids, scores):

|

||||

break

|

||||

|

||||

|

||||

def on_btn_click():

|

||||

del st.session_state.messages

|

||||

|

||||

|

|

@ -36,7 +166,7 @@ def load_model():

|

|||

|

||||

def prepare_generation_config():

|

||||

with st.sidebar:

|

||||

max_length = st.slider("Max Length", min_value=32, max_value=2048, value=2048)

|

||||

max_length = st.slider("Max Length", min_value=8, max_value=32768, value=32768)

|

||||

top_p = st.slider("Top P", 0.0, 1.0, 0.8, step=0.01)

|

||||

temperature = st.slider("Temperature", 0.0, 1.0, 0.7, step=0.01)

|

||||

st.button("Clear Chat History", on_click=on_btn_click)

|

||||

|

|

@ -53,17 +183,21 @@ cur_query_prompt = "[UNUSED_TOKEN_146]user\n{user}[UNUSED_TOKEN_145]\n[UNUSED_TO

|

|||

|

||||

def combine_history(prompt):

|

||||

messages = st.session_state.messages

|

||||

total_prompt = ""

|

||||

meta_instruction = (

|

||||

"You are InternLM (书生·浦语), a helpful, honest, and harmless AI assistant developed by Shanghai "

|

||||

"AI Laboratory (上海人工智能实验室)."

|

||||

)

|

||||

total_prompt = f"<s>[UNUSED_TOKEN_146]system\n{meta_instruction}[UNUSED_TOKEN_145]\n"

|

||||

for message in messages:

|

||||

cur_content = message["content"]

|

||||

if message["role"] == "user":

|

||||

cur_prompt = user_prompt.replace("{user}", cur_content)

|

||||

cur_prompt = user_prompt.format(user=cur_content)

|

||||

elif message["role"] == "robot":

|

||||

cur_prompt = robot_prompt.replace("{robot}", cur_content)

|

||||

cur_prompt = robot_prompt.format(robot=cur_content)

|

||||

else:

|

||||

raise RuntimeError

|

||||

total_prompt += cur_prompt

|

||||

total_prompt = total_prompt + cur_query_prompt.replace("{user}", prompt)

|

||||

total_prompt = total_prompt + cur_query_prompt.format(user=prompt)

|

||||

return total_prompt

|

||||

|

||||

|

||||

|

|

|

|||

|

|

@ -6,8 +6,7 @@ We recommend two projects to fine-tune InternLM.

|

|||

|

||||

1. [XTuner](https://github.com/InternLM/xtuner) is an efficient, flexible and full-featured toolkit for fine-tuning large models.

|

||||

|

||||

2. [InternLM-Train](): brief introduction

|

||||

|

||||

2. [InternEvo](https://github.com/InternLM/InternEvo/) is a powerful training framework that supports large-scale pre-training and finetuning.

|

||||

|

||||

## XTuner

|

||||

|

||||

|

|

@ -95,3 +94,7 @@ LLaVA-InternLM2-7B:

|

|||

```shell

|

||||

xtuner chat internlm/internlm2-chat-7b --visual-encoder openai/clip-vit-large-patch14-336 --llava xtuner/llava-internlm2-7b --prompt-template internlm2_chat --image $IMAGE_PATH

|

||||

```

|

||||

|

||||

## InternEvo

|

||||

|

||||

[TODO]

|

||||

|

|

|

|||

|

|

@ -2,12 +2,11 @@

|

|||

|

||||

[English](./README.md) | 简体中文

|

||||

|

||||

我们推荐以下两种框架微调 InternLM

|

||||

我们推荐以下两种框架微调 InternLM:

|

||||

|

||||

1. [XTuner](https://github.com/InternLM/xtuner) 是一个高效、灵活、全能的轻量化大模型微调工具库。

|

||||

|

||||

2. [InternLM-Train](): brief introduction

|

||||

|

||||

2. [InternEvo](https://github.com/InternLM/InternEvo/) 是一个支持大规模预训练和微调的训练框架。

|

||||

|

||||

## XTuner

|

||||

|

||||

|

|

@ -18,7 +17,6 @@

|

|||

3. 兼容 [DeepSpeed](https://github.com/microsoft/DeepSpeed) 🚀,轻松应用各种 ZeRO 训练优化策略。

|

||||

4. 训练所得模型可无缝接入部署工具库 [LMDeploy](https://github.com/InternLM/lmdeploy)、大规模评测工具库 [OpenCompass](https://github.com/open-compass/opencompass) 及 [VLMEvalKit](https://github.com/open-compass/VLMEvalKit)。

|

||||

|

||||

|

||||

### 安装

|

||||

|

||||

- 借助 conda 准备虚拟环境

|

||||

|

|

@ -36,7 +34,6 @@

|

|||

|

||||

### 微调

|

||||

|

||||

|

||||

- **步骤 0**,准备配置文件。XTuner 提供多个开箱即用的配置文件,用户可以通过下列命令查看所有 InternLM2 的预置配置文件:

|

||||

|

||||

```shell

|

||||

|

|

@ -91,6 +88,11 @@ xtuner chat internlm/internlm2-chat-7b --adapter xtuner/internlm2-chat-7b-qlora-

|

|||

```

|

||||

|

||||

与 LLaVA-InternLM2-7B 对话:

|

||||

|

||||

```shell

|

||||

xtuner chat internlm/internlm2-chat-7b --visual-encoder openai/clip-vit-large-patch14-336 --llava xtuner/llava-internlm2-7b --prompt-template internlm2_chat --image $IMAGE_PATH

|

||||

```

|

||||

|

||||

## InternEvo

|

||||

|

||||

[TODO]

|

||||

|

|

|

|||

|

|

@ -5,7 +5,7 @@

|

|||

The second generation of the InternLM model, InternLM2, includes models at two scales: 7B and 20B. For the convenience of users and researchers, we have open-sourced four versions of each scale of the model, which are:

|

||||

|

||||

- internlm2-base-20b: Foundation models with high quality and high adaptation flexibility, which serve as a good starting point for downstream deep adaptations.

|

||||

- internlm2-20b (**recommended**): Optimized in multiple dimensions based on InternLM2-Base, obtaining state-of-the-art performance in evaluation with good language capability. InternLM2 models are recommended for consideration in most applications.

|

||||

- internlm2-20b (**recommended**): Further pretrain with general domain data and domain-enhanced corpus, obtaining state-of-the-art performance in evaluation with good language capability. InternLM2 models are recommended for consideration in most applications.

|

||||

- internlm2-chat-20b-sft: Intermediate version of InternLM2-Chat that only undergoes supervised fine-tuning (SFT), based on the InternLM2-Base model. We release them to benefit research on alignment.

|

||||

- internlm2-chat-20b (**recommended**): Further aligned on top of InternLM2-Chat-SFT through online RLHF. InternLM2-Chat exhibits better instruction following, chat experience, and function calling, which is recommended for downstream applications.

|

||||

|

||||

|

|

|

|||

|

|

@ -5,7 +5,7 @@

|

|||

The second generation of the InternLM model, InternLM2, includes models at two scales: 7B and 20B. For the convenience of users and researchers, we have open-sourced four versions of each scale of the model, which are:

|

||||

|

||||

- internlm2-base-7b: Foundation models with high quality and high adaptation flexibility, which serve as a good starting point for downstream deep adaptations.

|

||||

- internlm2-7b (**recommended**): Optimized in multiple dimensions based on InternLM2-Base, obtaining state-of-the-art performance in evaluation with good language capability. InternLM2 models are recommended for consideration in most applications.

|

||||

- internlm2-7b (**recommended**): Further pretrain with general domain data and domain-enhanced corpus, obtaining state-of-the-art performance in evaluation with good language capability. InternLM2 models are recommended for consideration in most applications.

|

||||

- internlm2-chat-7b-sft: Intermediate version of InternLM2-Chat that only undergoes supervised fine-tuning (SFT), based on the InternLM2-Base model. We release them to benefit research on alignment.

|

||||

- internlm2-chat-7b (**recommended**): Further aligned on top of InternLM2-Chat-SFT through online RLHF. InternLM2-Chat exhibits better instruction following, chat experience, and function calling, which is recommended for downstream applications.

|

||||

|

||||

|

|

|

|||

Loading…

Reference in New Issue