
OpenMoE

OpenMoE is the open-source community's first decoder-only MoE transformer. OpenMoE is implemented in JAX, and Colossal-AI has pioneered efficient open-source support for this model in PyTorch, enabling a broader range of users to participate in and use this model. The following Colossal-AI example demonstrates fine-tuning and inference.

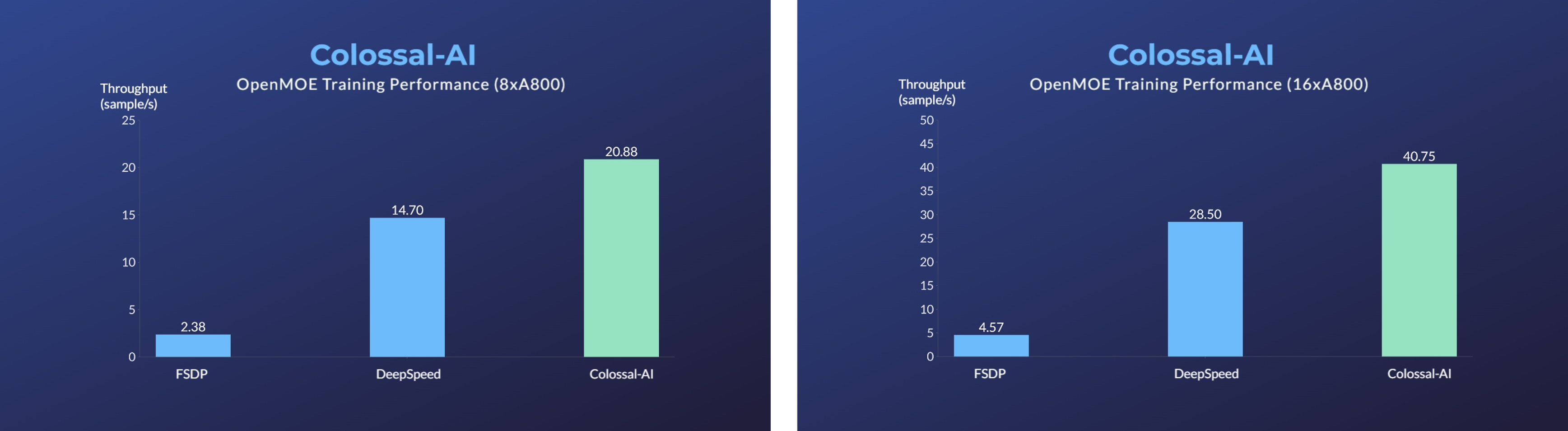

- [2023/11] Enhanced MoE Parallelism, Open-source MoE Model Training Can Be 9 Times More Efficient [code] [blog]

Usage

1. Installation

Please install the latest ColossalAI from source.

BUILD_EXT=1 pip install -U git+https://github.com/hpcaitech/ColossalAI

Then install dependencies.

cd ColossalAI/examples/language/openmoe

pip install -r requirements.txt

Additionally, we recommend using torch 1.13.1. We have tested torch 1.13.1 and found it compatible with our code and flash attention.

2. Install kernels (Optional)

We use Triton, FlashAttention and Apex kernels for better performance. They are optional, but we recommend installing them to make full use of your hardware.

# install triton via pip

pip install triton

# install flash attention via pip

pip install flash-attn==2.0.5

# install apex from source

git clone https://github.com/NVIDIA/apex.git

cd apex

git checkout 741bdf50825a97664db08574981962d66436d16a

pip install -v --disable-pip-version-check --no-cache-dir --no-build-isolation --config-settings "--build-option=--cpp_ext" --config-settings "--build-option=--cuda_ext" ./ --global-option="--cuda_ext"
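Since these kernels are optional, it can help to check which of them are visible to your Python environment before enabling the kernel flags. The following is a minimal sketch that uses only the standard library; the helper name `detect_optional_kernels` is hypothetical and is not part of Colossal-AI.

```python
import importlib.util

# Maps the package names used above to their Python import names.
# "flash_attn" is the import name of the flash-attn pip package.
OPTIONAL_KERNELS = {
    "triton": "triton",
    "flash-attn": "flash_attn",
    "apex": "apex",
}


def detect_optional_kernels():
    """Return {package name: True/False} without importing the packages."""
    return {
        name: importlib.util.find_spec(module) is not None
        for name, module in OPTIONAL_KERNELS.items()
    }


if __name__ == "__main__":
    for name, found in detect_optional_kernels().items():
        print(f"{name:<10} {'installed' if found else 'missing'}")
```

A package reported as installed can still fail at import time (for example, because of a CUDA mismatch), so treat this as a quick first check only.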

3. Train

You can use colossalai run to launch single-node training:

colossalai run --standalone --nproc_per_node YOUR_GPU_PER_NODE train.py --OTHER_CONFIGURATIONS

You can also use colossalai run to launch multi-node training:

colossalai run --nproc_per_node YOUR_GPU_PER_NODE --hostfile YOUR_HOST_FILE train.py --OTHER_CONFIGURATIONS

Here is a sample hostfile:

hostname1

hostname2

hostname3

hostname4

Each hostname refers to the IP address of one of your nodes. Make sure the master node can access all nodes (including itself) via SSH without a password.
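One way to verify the passwordless SSH requirement before launching is to try a non-interactive login to every host in the hostfile. The sketch below assumes the one-hostname-per-line format shown above and an OpenSSH client on the master node; the function names are hypothetical helpers, not part of the colossalai CLI.

```python
import subprocess


def read_hostfile(text):
    """Return the host names listed one per line, skipping blank lines."""
    return [line.strip() for line in text.splitlines() if line.strip()]


def ssh_check_command(host):
    # BatchMode=yes makes ssh fail instead of prompting for a password,
    # so a non-zero exit code means passwordless login is not working.
    return ["ssh", "-o", "BatchMode=yes", "-o", "ConnectTimeout=5", host, "true"]


def check_hosts(hosts):
    """Run the check for each host and return {host: reachable}."""
    results = {}
    for host in hosts:
        proc = subprocess.run(ssh_check_command(host), capture_output=True)
        results[host] = proc.returncode == 0
    return results


if __name__ == "__main__":
    sample = "hostname1\nhostname2\nhostname3\nhostname4\n"
    hosts = read_hostfile(sample)
    print(hosts)
    # Uncomment on the master node to actually try each connection:
    # print(check_hosts(hosts))
```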

Here are the details of the CLI arguments:

- Model configuration: --model_name. base and 8b are supported for OpenMoE.
- Booster plugin: --plugin. ep, ep_zero and hybrid are supported. ep_zero is recommended for general cases, ep provides the lowest memory consumption, and hybrid suits large-scale training.
- Output path: --output_path. The path to save your model. The default value is ./outputs.
- Number of epochs: --num_epochs. The default value is 1.
- Local batch size: --batch_size. Batch size per GPU. The default value is 1.
- Save interval: -i, --save_interval. The interval (in steps) between checkpoint saves. The default value is 1000.
- Mixed precision: --precision. The default value is "bf16". "fp16", "bf16" and "fp32" are supported.
- Max length: --max_length. Max sequence length. The default value is 2048.
- Dataset: -d, --dataset. The default dataset is yizhongw/self_instruct. Any dataset from datasets with the same data format is supported.
- Task name: --task_name. The task of the corresponding dataset. The default value is super_natural_instructions.
- Learning rate: --lr. The default value is 1e-5.
- Weight decay: --weight_decay. The default value is 0.
- Zero stage: --zero_stage. The ZeRO stage. We recommend 2 for ep and 1 for ep_zero.
- Extra dp size: --extra_dp_size. Extra MoE parameter data-parallel size for the ep_zero plugin. Recommended to be 2 or 4.
- Use kernel: --use_kernel. Use kernel optimizations. Flash attention and Triton must be installed to enable all kernel optimizations; any that are not installed are skipped.
- Use layernorm kernel: --use_layernorm_kernel. Use the layernorm kernel. Requires Apex; an error is raised if it is not installed.
- Router aux loss factor: --router_aux_loss_factor. MoE router aux loss factor. You can refer to STMoE for details.
- Router z loss factor: --router_z_loss_factor. MoE router z loss factor. You can refer to STMoE for details.
- Label smoothing: --label_smoothing. Label smoothing.
- Z loss factor: --z_loss_factor. The z loss factor for the classification of the final outputs.
- Load balance: --load_balance. Expert load balance. Defaults to False. We recommend enabling it.
- Load balance interval: --load_balance_interval. Expert load balance interval.
- Communication overlap: --comm_overlap. Use communication overlap for MoE. We recommend enabling it for multi-node training.
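To make the documented defaults easier to see at a glance, here is a minimal argparse sketch covering a subset of the flags listed above. It illustrates the documented interface and is not the actual parser in train.py. The defaults for --model_name and --plugin are assumptions, since the list above does not state them.

```python
import argparse


def build_parser():
    """Sketch of a parser mirroring a subset of the documented flags."""
    parser = argparse.ArgumentParser(description="Sketch of the documented OpenMoE training flags")
    # Defaults for these two flags are assumptions, not documented values.
    parser.add_argument("--model_name", choices=["base", "8b"], default="base")
    parser.add_argument("--plugin", choices=["ep", "ep_zero", "hybrid"], default="ep_zero")
    # The remaining defaults are taken from the argument list above.
    parser.add_argument("--output_path", default="./outputs")
    parser.add_argument("--num_epochs", type=int, default=1)
    parser.add_argument("--batch_size", type=int, default=1, help="batch size per GPU")
    parser.add_argument("-i", "--save_interval", type=int, default=1000)
    parser.add_argument("--precision", choices=["fp16", "bf16", "fp32"], default="bf16")
    parser.add_argument("--max_length", type=int, default=2048)
    parser.add_argument("-d", "--dataset", default="yizhongw/self_instruct")
    parser.add_argument("--task_name", default="super_natural_instructions")
    parser.add_argument("--lr", type=float, default=1e-5)
    parser.add_argument("--weight_decay", type=float, default=0.0)
    parser.add_argument("--load_balance", action="store_true")  # documented default: False
    return parser


if __name__ == "__main__":
    args = build_parser().parse_args(["--plugin", "ep", "--batch_size", "4", "-i", "500"])
    print(args.plugin, args.batch_size, args.save_interval, args.precision)
```

Because --batch_size is per GPU, the number of samples processed per step grows with the number of GPUs you launch.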

4. Shell Script Examples

For your convenience, we provide some shell scripts to train with various configurations. Here we show an example of how to train OpenMoE.

a. Running environment

This experiment was performed on a single compute node with 8 A800 80GB GPUs in total for OpenMoE-8B. The GPUs are fully connected with NVLink.

b. Running command

We demonstrate how to run the three plugins in train.sh. You can choose any one of them and use your own arguments.

bash train.sh

c. Multi-Node Training

To run on multiple nodes, you can modify the script as:

colossalai run --nproc_per_node YOUR_GPU_PER_NODE --hostfile YOUR_HOST_FILE \
    train.py --OTHER_CONFIGURATIONS
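If you generate launch commands from another script, the single-node and multi-node forms shown in this README can be assembled as follows. This is a sketch under stated assumptions: `build_launch_command` is a hypothetical helper, `hostfile.txt` is a placeholder path, and only the colossalai run flags that appear in this README are used.

```python
import shlex


def build_launch_command(nproc_per_node, script="train.py", hostfile=None, script_args=()):
    """Assemble a colossalai run command as an argument list."""
    cmd = ["colossalai", "run"]
    if hostfile is None:
        # Single-node form, as in the Train section.
        cmd.append("--standalone")
    cmd += ["--nproc_per_node", str(nproc_per_node)]
    if hostfile is not None:
        # Multi-node form: pass the hostfile listing all nodes.
        cmd += ["--hostfile", hostfile]
    cmd.append(script)
    cmd += list(script_args)
    return cmd


if __name__ == "__main__":
    single = build_launch_command(8, script_args=["--plugin", "ep_zero", "--zero_stage", "1"])
    multi = build_launch_command(8, hostfile="hostfile.txt", script_args=["--comm_overlap"])
    print(shlex.join(single))
    print(shlex.join(multi))
```

The list form can be passed directly to subprocess.run, which avoids shell quoting issues; shlex.join is used here only to print a copy-pasteable command.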

Reference

@article{bian2021colossal,

title={Colossal-AI: A Unified Deep Learning System For Large-Scale Parallel Training},

author={Bian, Zhengda and Liu, Hongxin and Wang, Boxiang and Huang, Haichen and Li, Yongbin and Wang, Chuanrui and Cui, Fan and You, Yang},

journal={arXiv preprint arXiv:2110.14883},

year={2021}

}

@misc{openmoe2023,

author = {Fuzhao Xue, Zian Zheng, Yao Fu, Jinjie Ni, Zangwei Zheng, Wangchunshu Zhou and Yang You},

title = {OpenMoE: Open Mixture-of-Experts Language Models},

year = {2023},

publisher = {GitHub},

journal = {GitHub repository},

howpublished = {\url{https://github.com/XueFuzhao/OpenMoE}},

}