
Colossal-AI Tutorial Hands-on

This directory contains an abbreviated tutorial prepared for specific events, and it may not be actively maintained. For general use of Colossal-AI, please refer to the other examples and the documentation.

Introduction

Welcome to the Colossal-AI tutorial, which has been accepted as an official tutorial by top conferences such as SC, AAAI, PPoPP, CVPR, and ISC.

Colossal-AI, a unified deep learning system for the big model era, integrates many advanced technologies such as multi-dimensional tensor parallelism, sequence parallelism, heterogeneous memory management, large-scale optimization, and adaptive task scheduling. With Colossal-AI, users can deploy large AI model training and inference efficiently and quickly, reducing both training budgets and the labor cost of learning and deployment.

🚀 Quick Links

Colossal-AI | Paper | Documentation | Issue | Slack

Table of Contents

- Multi-dimensional Parallelism [code] [video]

- Sequence Parallelism [code] [video]

- Large Batch Training Optimization [code] [video]

- Automatic Parallelism [code] [video]

- Fine-tuning and Inference for OPT [code] [video]

- Optimized AlphaFold [code] [video]

- Optimized Stable Diffusion [code] [video]

Discussion

Discussion about the Colossal-AI project is always welcome! We would love to exchange ideas with the community to help this project grow. If there is anything you would like to discuss, join us on Slack.

If you encounter any problems while running these tutorials, please raise an issue in this repository.

🛠️ Setup environment

[video] We recommend using conda to create a virtual environment with Python 3.8, e.g. `conda create -n colossal python=3.8`. The installation commands assume CUDA 11.3; if you have a different CUDA version, please download the matching builds of PyTorch and Colossal-AI.
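Choosing the right PyTorch build mostly comes down to picking the wheel index that matches your CUDA version. As a minimal sketch, assuming PyTorch's public wheel index layout `https://download.pytorch.org/whl/cu<major><minor>` (e.g. `cu113` for CUDA 11.3), the hypothetical helper `torch_index_url` below builds that URL from a version string. It is not part of Colossal-AI or PyTorch, and only some CUDA versions have a published index, so check the PyTorch installation page for yours.

```shell
# Hypothetical helper, not part of Colossal-AI or PyTorch.
# Maps a CUDA version string such as "11.3" to PyTorch's wheel index URL,
# assuming the index layout https://download.pytorch.org/whl/cu<digits>.
torch_index_url() {
  if [ -z "$1" ]; then
    echo "usage: torch_index_url <cuda-version>  (e.g. 11.3)" >&2
    return 1
  fi
  # Remove the dot from the version: "11.3" -> "113", giving ".../whl/cu113"
  printf 'https://download.pytorch.org/whl/cu%s\n' "$(printf '%s' "$1" | tr -d '.')"
}
```

For CUDA 11.3 this could be used as `pip install torch==1.12.1+cu113 --extra-index-url "$(torch_index_url 11.3)"`. The version pin matters: with `--extra-index-url`, pip picks the newest `torch` across all indexes, which may be a PyPI build for a different CUDA version. Treat `1.12.1` as an example only and match it to the Colossal-AI release you install.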

You can refer to the Installation guide to set up your environment.

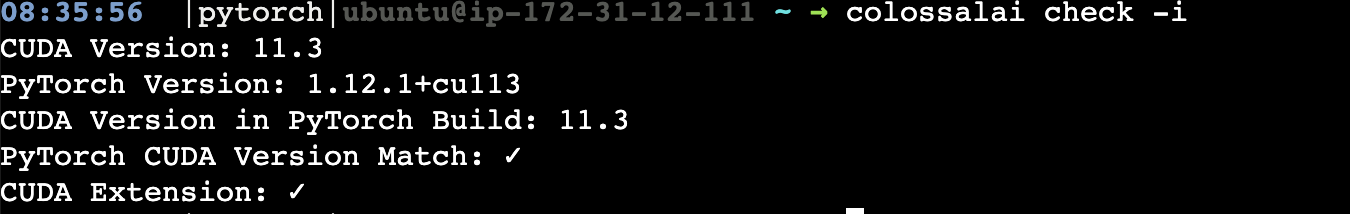

You can run `colossalai check -i` to verify that your environment is set up correctly 🕹️.
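A common reason for the check to fail outright is that the `colossalai` CLI is not on `PATH`, typically because the conda environment is not activated. The guard below is a hypothetical convenience wrapper, not part of Colossal-AI; it assumes the environment is named `colossal` as in the example above, and it only calls `colossalai check -i` once the CLI is found.

```shell
# Hypothetical wrapper, not part of Colossal-AI.
# Print a hint and fail if a command is missing from PATH.
require_cmd() {
  if ! command -v "$1" >/dev/null 2>&1; then
    echo "error: '$1' not found on PATH; is the 'colossal' conda environment activated?" >&2
    return 1
  fi
}

# Run the Colossal-AI installation check only when its CLI is available.
check_colossalai_env() {
  require_cmd colossalai || return 1
  colossalai check -i
}
```

After activating the environment, calling `check_colossalai_env` forwards to `colossalai check -i`; otherwise it prints the hint and returns a non-zero status instead of a bare "command not found".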

If you encounter a message such as `please install with cuda_ext`, do let me know, as it could be a problem with the distribution wheel. 😥

Then clone the Colossal-AI repository from GitHub.

git clone https://github.com/hpcaitech/ColossalAI.git

cd ColossalAI/examples/tutorial