fix image (#3288)

@@ -1,6 +1,6 @@
 <h1 align="center">
 <span>Coati - ColossalAI Talking Intelligence</span>
-<img width="auto" height="50px", src="assets/logo_coati.png"/>
+<img width="auto" height="50px", src="https://raw.githubusercontent.com/hpcaitech/public_assets/main/applications/chat/logo_coati.png"/>
 </h1>

@@ -60,7 +60,7 @@ You can experience the performance of Coati7B on this page.
 ### Install the environment
 
 ```shell
-conda creat -n coati
+conda create -n coati
 conda activate coati
 pip install .
 ```

@@ -83,7 +83,7 @@ we colllected 104K bilingual dataset of Chinese and English, and you can find th
 
 Here is how we collected the data
 <p align="center">
-<img src="assets/data-collect.png" width=500/>
+<img src="https://raw.githubusercontent.com/hpcaitech/public_assets/main/applications/chat/data-collect.png" width=500/>
 </p>
 
 ### Stage1 - Supervised instructs tuning

@@ -127,7 +127,7 @@ torchrun --standalone --nproc_per_node=4 train_reward_model.py
 Stage3 uses reinforcement learning algorithm, which is the most complex part of the training process:
 
 <p align="center">
-<img src="assets/stage-3.jpeg" width=500/>
+<img src="https://raw.githubusercontent.com/hpcaitech/public_assets/main/applications/chat/stage-3.jpeg" width=500/>
 </p>
 
 you can run the `examples/train_prompts.sh` to start training PPO with human feedback

@ -150,67 +150,67 @@ We also support training reward model with true-world data. See `examples/train_

|

||||||

|

|

||||||

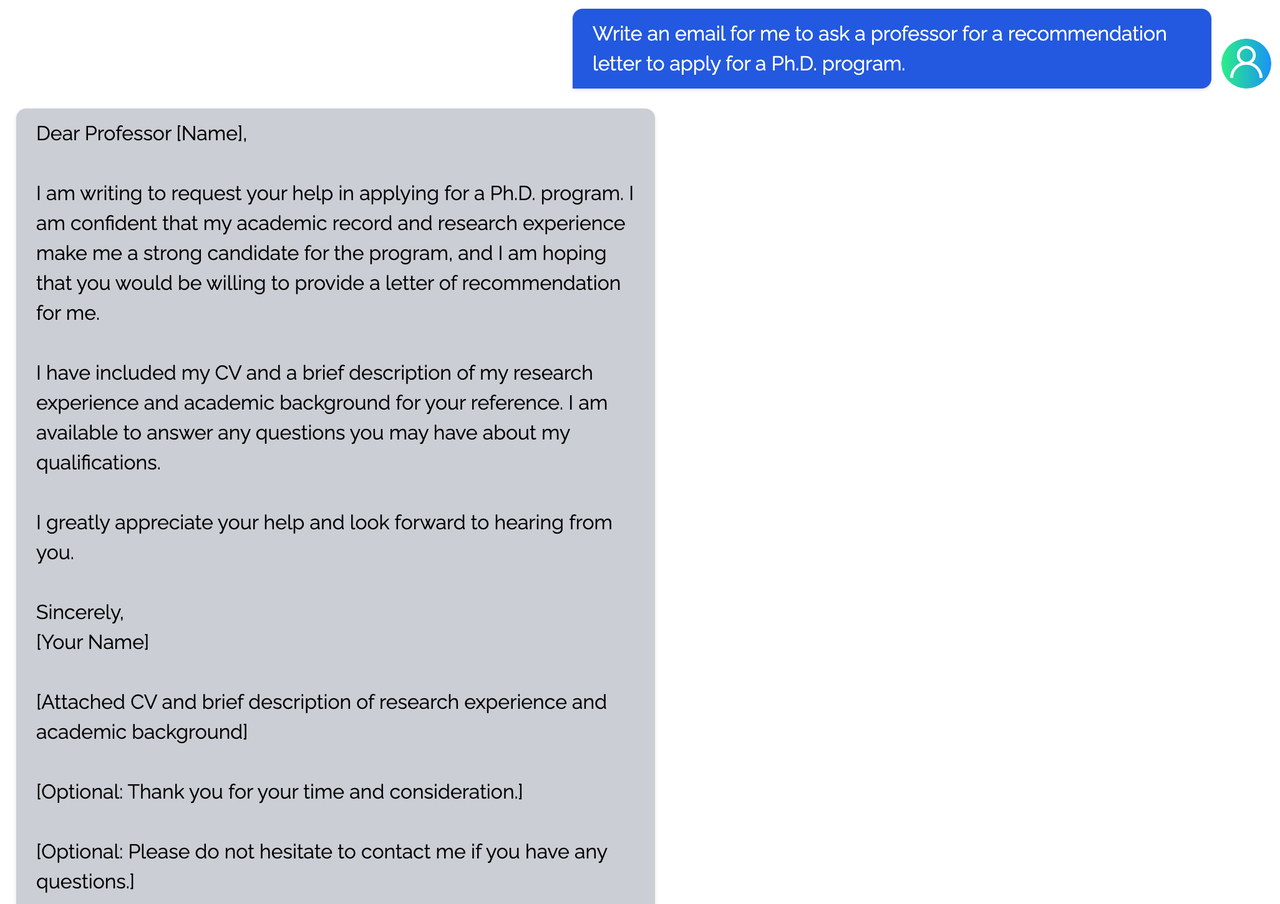

<details><summary><b>E-mail</b></summary>

|

<details><summary><b>E-mail</b></summary>

|

||||||

|

|

||||||

|

|

||||||

</details>

|

</details>

|

||||||

|

|

||||||

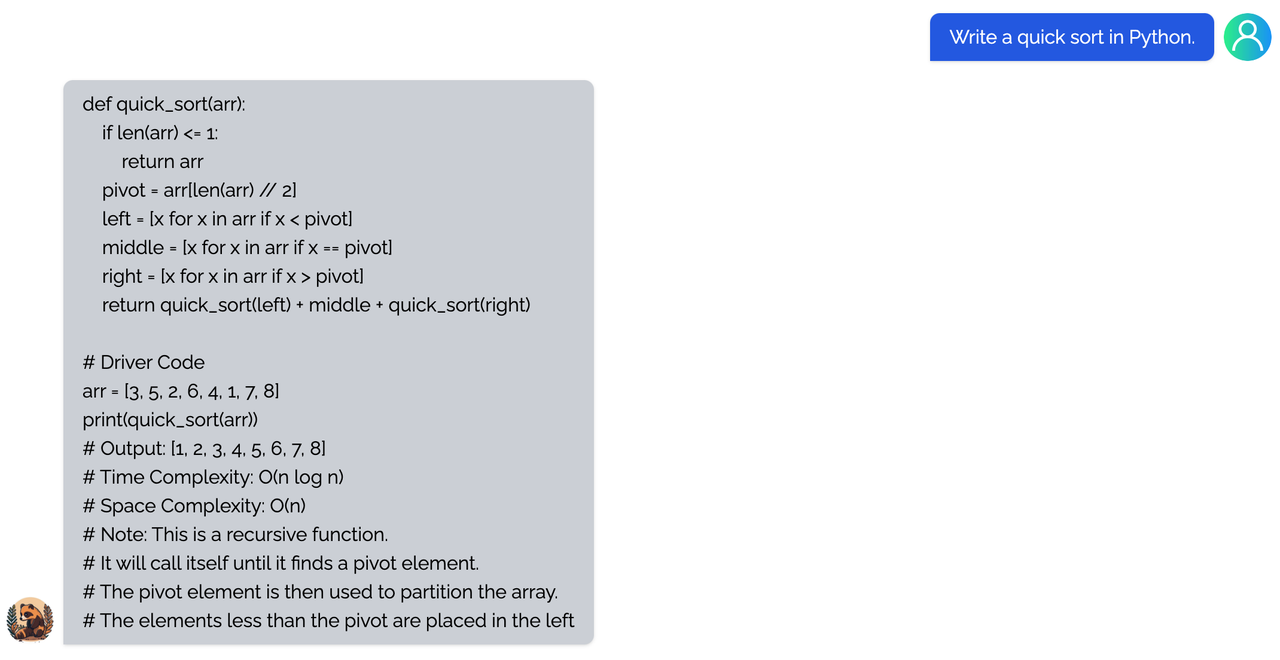

<details><summary><b>coding</b></summary>

|

<details><summary><b>coding</b></summary>

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

</details>

|

</details>

|

||||||

|

|

||||||

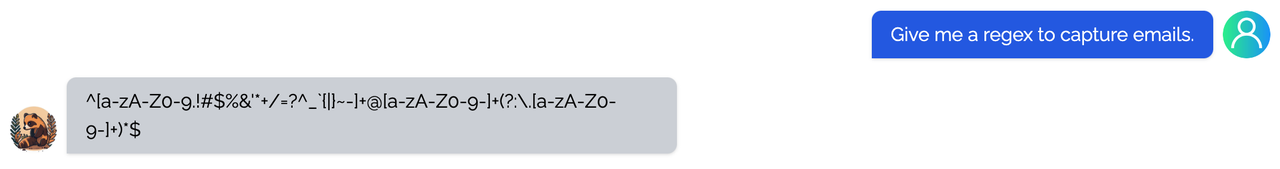

<details><summary><b>regex</b></summary>

|

<details><summary><b>regex</b></summary>

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

</details>

|

</details>

|

||||||

|

|

||||||

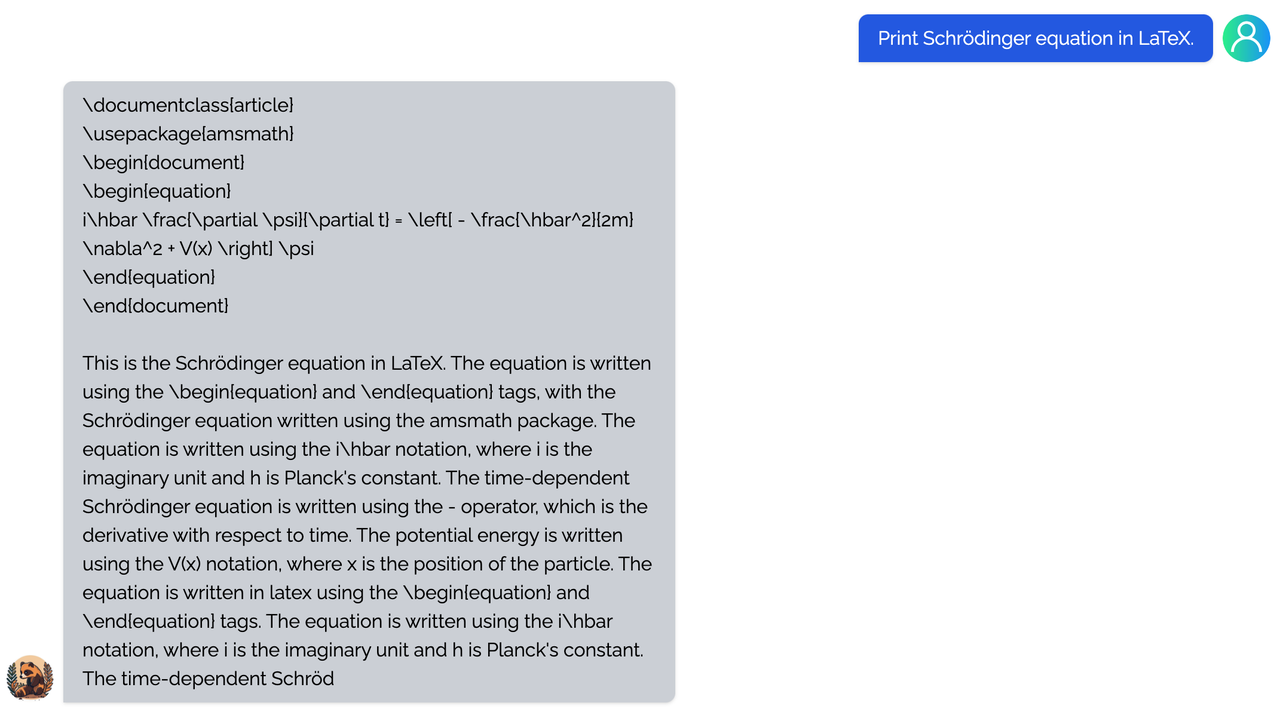

<details><summary><b>Tex</b></summary>

|

<details><summary><b>Tex</b></summary>

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

</details>

|

</details>

|

||||||

|

|

||||||

<details><summary><b>writing</b></summary>

|

<details><summary><b>writing</b></summary>

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

</details>

|

</details>

|

||||||

|

|

||||||

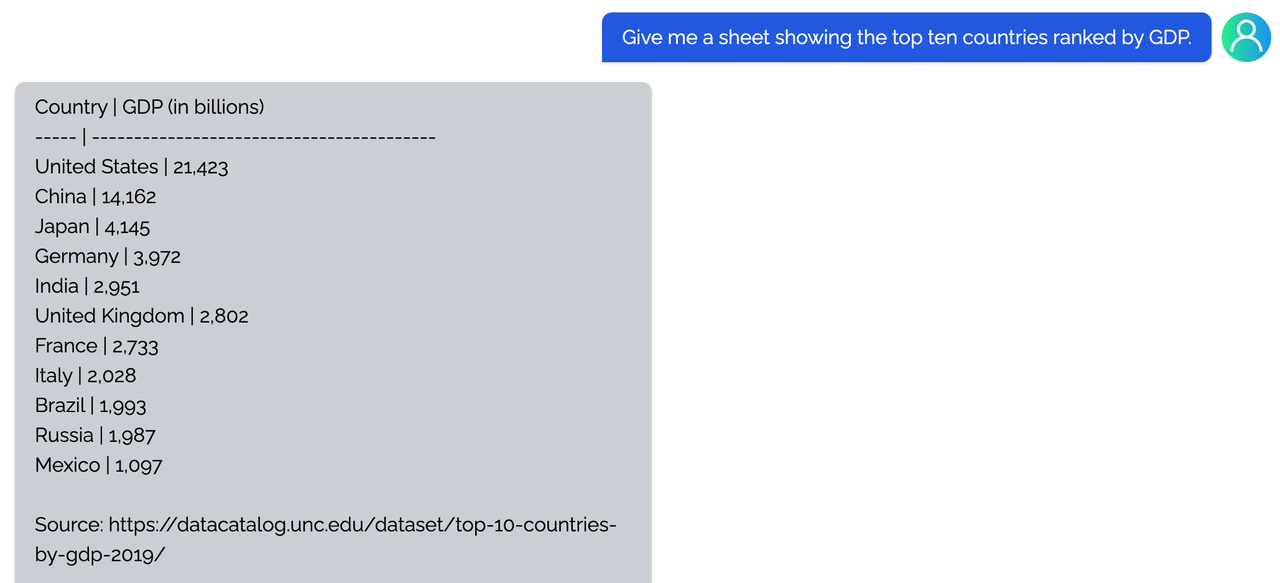

<details><summary><b>Table</b></summary>

|

<details><summary><b>Table</b></summary>

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

</details>

|

</details>

|

||||||

|

|

||||||

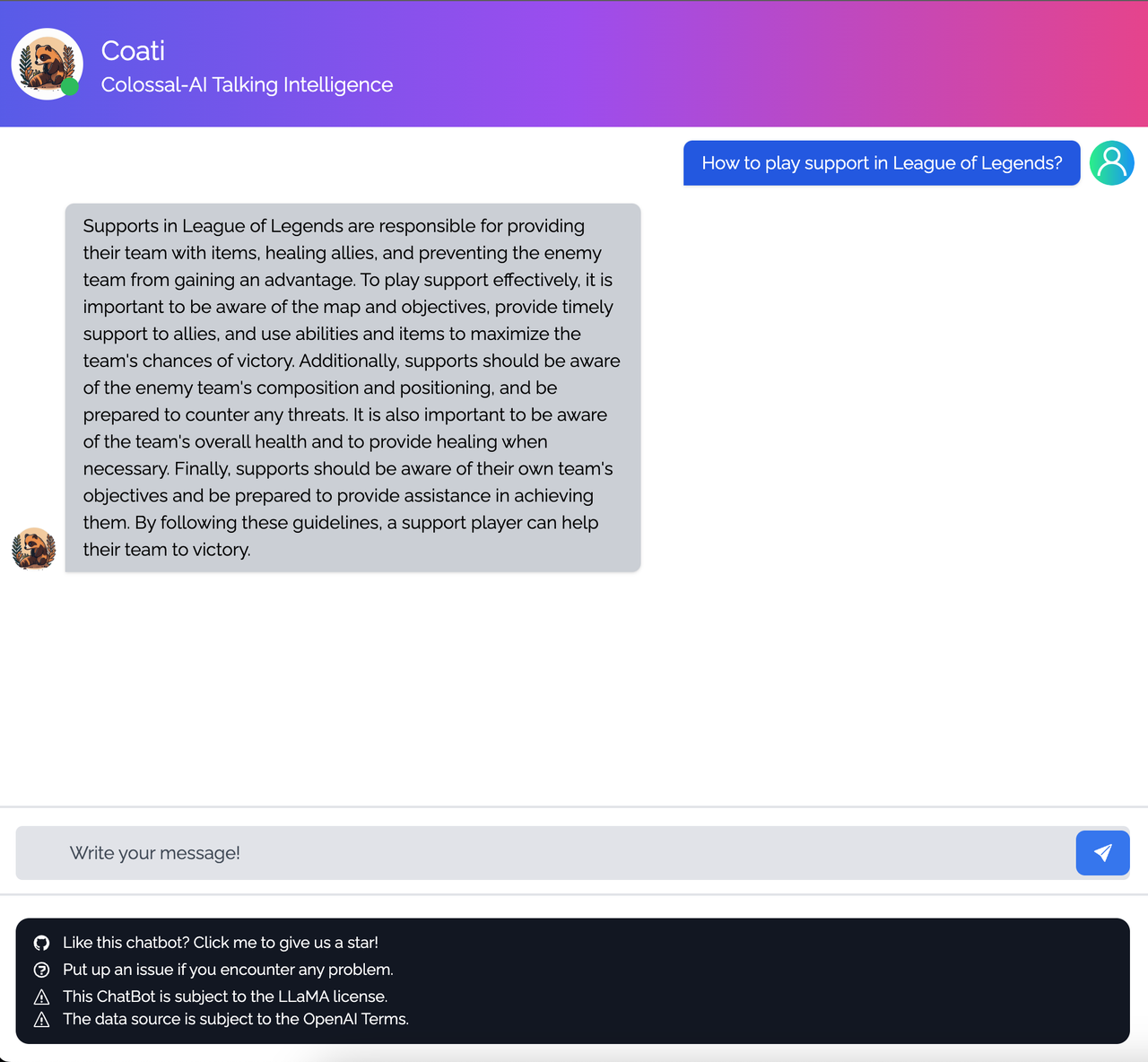

### Open QA

|

### Open QA

|

||||||

<details><summary><b>Game</b></summary>

|

<details><summary><b>Game</b></summary>

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

</details>

|

</details>

|

||||||

|

|

||||||

<details><summary><b>Travel</b></summary>

|

<details><summary><b>Travel</b></summary>

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

</details>

|

</details>

|

||||||

|

|

||||||

<details><summary><b>Physical</b></summary>

|

<details><summary><b>Physical</b></summary>

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

</details>

|

</details>

|

||||||

|

|

||||||

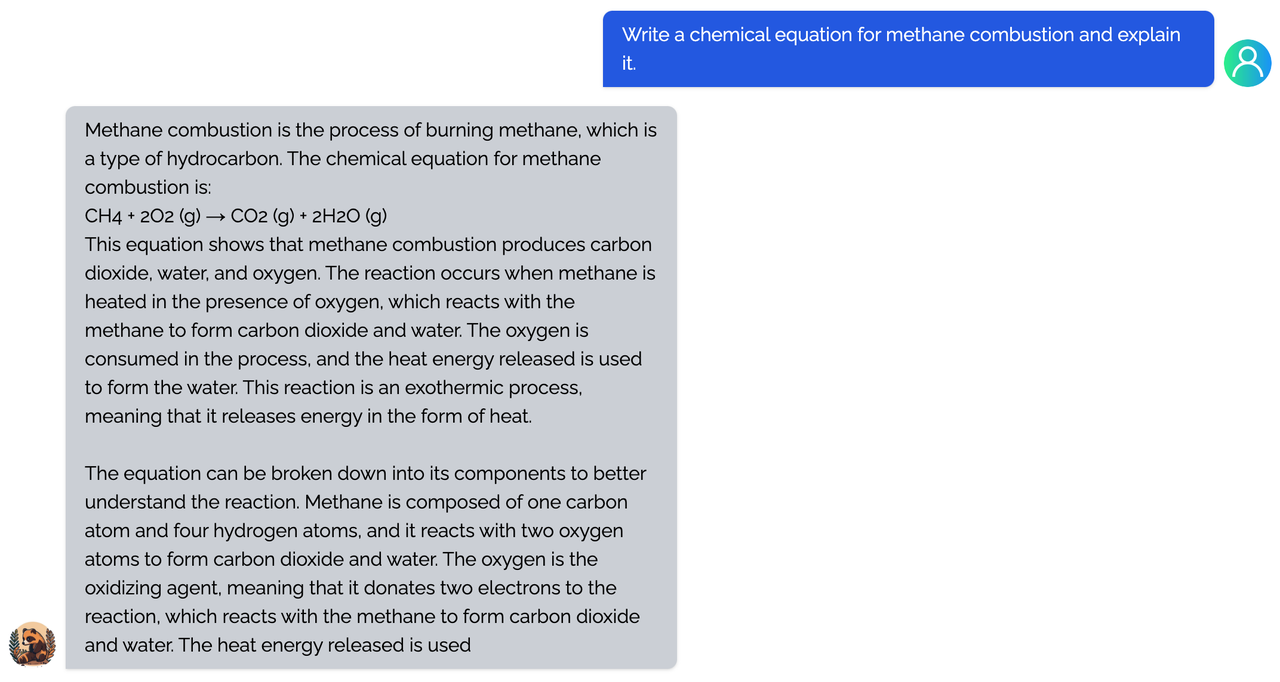

<details><summary><b>Chemical</b></summary>

|

<details><summary><b>Chemical</b></summary>

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

</details>

|

</details>

|

||||||

|

|

||||||

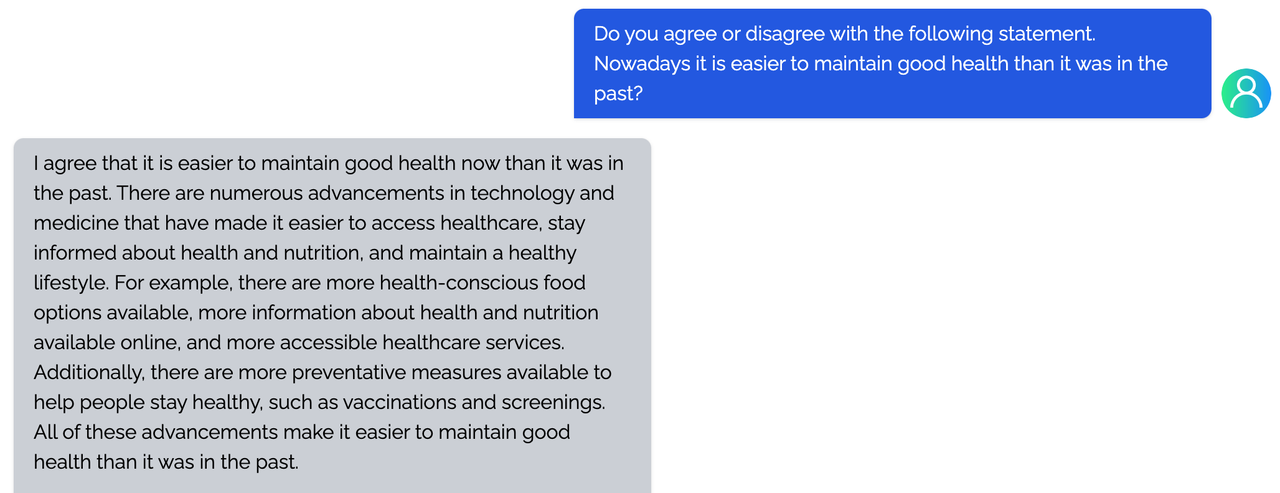

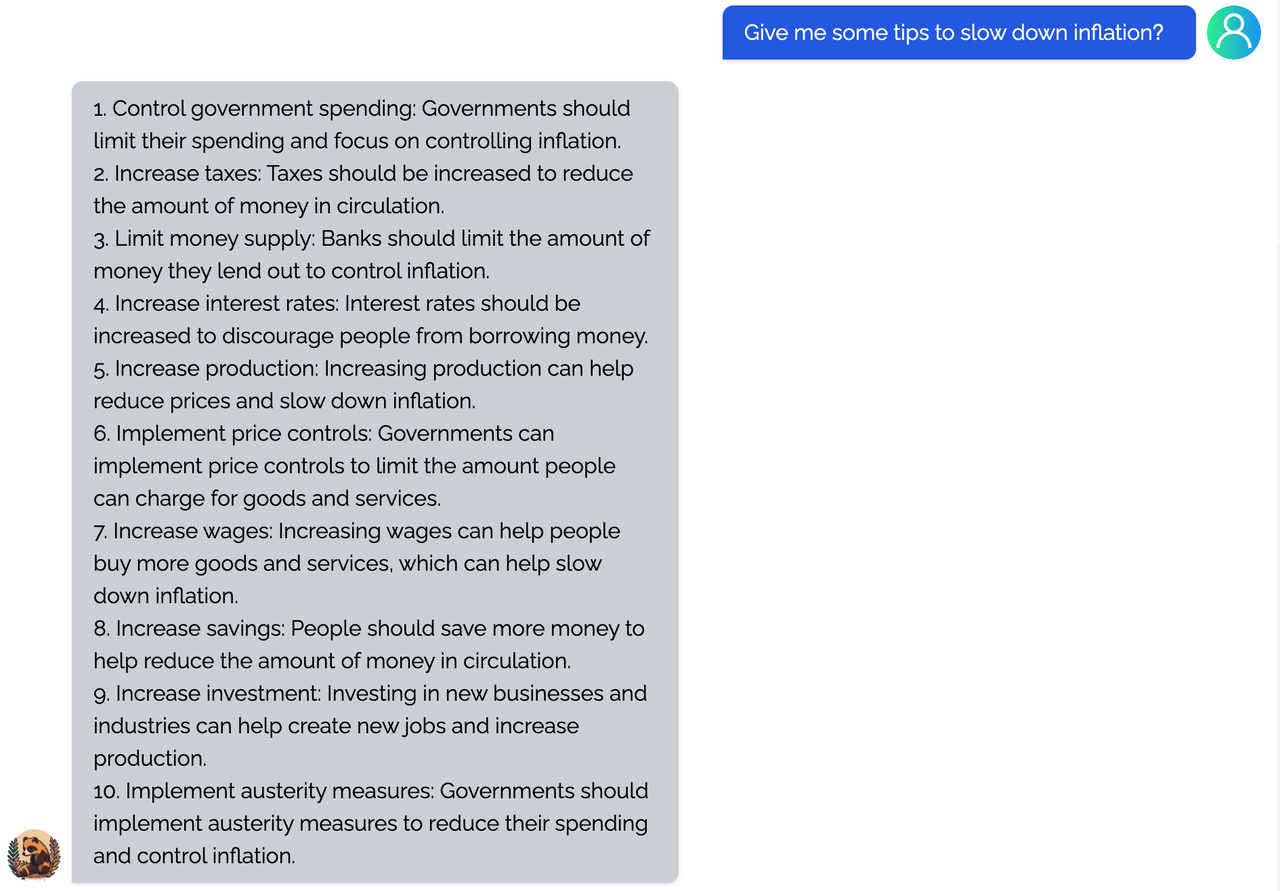

<details><summary><b>Economy</b></summary>

|

<details><summary><b>Economy</b></summary>

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

</details>

|

</details>

|

||||||

|

|

||||||

|

|

|

||||||

Binary image files in this commit (size before the change): 273 KiB, 307 KiB, 401 KiB, 390 KiB, 403 KiB, 640 KiB, 171 KiB, 173 KiB, 40 KiB, 370 KiB, 116 KiB, 284 KiB, 230 KiB, 229 KiB.
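In every hunk where the image lines survive, the change follows one pattern: a relative `src="assets/<name>"` becomes an absolute URL under `https://raw.githubusercontent.com/hpcaitech/public_assets/main/applications/chat/`, with `<name>` unchanged. The sketch below applies that rewrite with `sed -E`. The helper name `rewrite_image_paths` is hypothetical, and this only illustrates the pattern; it is not necessarily how the commit was produced.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Base URL copied from the "+" lines of the diff.
BASE_URL="https://raw.githubusercontent.com/hpcaitech/public_assets/main/applications/chat"

# Hypothetical helper: reads text on stdin and replaces every
# src="assets/<name>" with src="$BASE_URL/<name>", keeping <name> as-is.
# '#' is used as the sed delimiter because the URL contains '/'.
rewrite_image_paths() {
  sed -E "s#src=\"assets/([^\"]+)\"#src=\"${BASE_URL}/\1\"#g"
}
```

For example, `rewrite_image_paths < README.md > README.new.md` rewrites the file, and `grep -n 'src="assets/' README.new.md` can then be used to check that no relative image paths remain. The expression only matches double-quoted `src` attributes, which is the form used in the hunks above; Markdown `![alt](assets/...)` image syntax would need a second expression.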