diff --git a/.github/ISSUE_TEMPLATE/bug-report.yml b/.github/ISSUE_TEMPLATE/bug-report.yml

index 845f7af06..c09c10308 100644

--- a/.github/ISSUE_TEMPLATE/bug-report.yml

+++ b/.github/ISSUE_TEMPLATE/bug-report.yml

@@ -20,6 +20,8 @@ body:

A clear and concise description of what you expected to happen.

**Screenshots**

If applicable, add screenshots to help explain your problem.

+ **Optional: Affiliation**

+ Institution/email information helps better analyze and evaluate users to improve the project. Welcome to establish in-depth cooperation.

placeholder: |

A clear and concise description of what the bug is.

validations:

diff --git a/.github/ISSUE_TEMPLATE/documentation.yml b/.github/ISSUE_TEMPLATE/documentation.yml

index e31193ba8..511997e2e 100644

--- a/.github/ISSUE_TEMPLATE/documentation.yml

+++ b/.github/ISSUE_TEMPLATE/documentation.yml

@@ -17,6 +17,7 @@ body:

**Expectation** What is your expected content about it?

**Screenshots** If applicable, add screenshots to help explain your problem.

**Suggestions** Tell us how we could improve the documentation.

+ **Optional: Affiliation** Institution/email information helps better analyze and evaluate users to improve the project. Welcome to establish in-depth cooperation.

placeholder: |

A clear and concise description of the issue.

validations:

diff --git a/.github/ISSUE_TEMPLATE/feature_request.yml b/.github/ISSUE_TEMPLATE/feature_request.yml

index 8dcc51ea8..d05bc25f6 100644

--- a/.github/ISSUE_TEMPLATE/feature_request.yml

+++ b/.github/ISSUE_TEMPLATE/feature_request.yml

@@ -22,6 +22,8 @@ body:

If applicable, add screenshots to help explain your problem.

**Suggest a potential alternative/fix**

Tell us how we could improve this project.

+ **Optional: Affiliation**

+ Institution/email information helps better analyze and evaluate users to improve the project. Welcome to establish in-depth cooperation.

placeholder: |

A clear and concise description of your idea.

validations:

diff --git a/.github/ISSUE_TEMPLATE/proposal.yml b/.github/ISSUE_TEMPLATE/proposal.yml

index 6ca7bd1a0..614ef7775 100644

--- a/.github/ISSUE_TEMPLATE/proposal.yml

+++ b/.github/ISSUE_TEMPLATE/proposal.yml

@@ -13,6 +13,7 @@ body:

- Bumping a critical dependency's major version;

- A significant improvement in user-friendliness;

- Significant refactor;

+ - Optional: Affiliation/email information helps better analyze and evaluate users to improve the project. Welcome to establish in-depth cooperation.

- ...

Please note this is not for feature request or bug template; such action could make us identify the issue wrongly and close it without doing anything.

@@ -43,4 +44,4 @@ body:

- type: markdown

attributes:

value: >

- Thanks for contributing 🎉!

\ No newline at end of file

+ Thanks for contributing 🎉!

diff --git a/.github/reviewer_list.yml b/.github/reviewer_list.yml

deleted file mode 100644

index ce1d4849f..000000000

--- a/.github/reviewer_list.yml

+++ /dev/null

@@ -1,9 +0,0 @@

-addReviewers: true

-

-addAssignees: author

-

-numberOfReviewers: 1

-

-reviewers:

- - frankleeeee

- - kurisusnowdeng

diff --git a/.github/workflows/assign_reviewer.yml b/.github/workflows/assign_reviewer.yml

deleted file mode 100644

index 6ebb33982..000000000

--- a/.github/workflows/assign_reviewer.yml

+++ /dev/null

@@ -1,18 +0,0 @@

-name: Assign Reviewers for Team

-

-on:

- pull_request:

- types: [opened]

-

-jobs:

- assign_reviewer:

- name: Assign Reviewer for PR

- runs-on: ubuntu-latest

- if: |

- github.event.pull_request.draft == false && github.base_ref == 'main'

- && github.event.pull_request.base.repo.full_name == 'hpcaitech/ColossalAI'

- && toJson(github.event.pull_request.requested_reviewers) == '[]'

- steps:

- - uses: kentaro-m/auto-assign-action@v1.2.1

- with:

- configuration-path: '.github/reviewer_list.yml'

diff --git a/.github/workflows/auto_example_check.yml b/.github/workflows/auto_example_check.yml

new file mode 100644

index 000000000..7f1e357e3

--- /dev/null

+++ b/.github/workflows/auto_example_check.yml

@@ -0,0 +1,130 @@

+name: Test Example

+on:

+ pull_request:

+ # any change in the examples folder will trigger check for the corresponding example.

+ paths:

+ - 'examples/**'

+ # run at 00:00 of every Sunday(singapore time) so here is UTC time Saturday 16:00

+ schedule:

+ - cron: '0 16 * * 6'

+

+jobs:

+ # This is for changed example files detect and output a matrix containing all the corresponding directory name.

+ detect-changed-example:

+ if: |

+ github.event.pull_request.draft == false &&

+ github.base_ref == 'main' &&

+ github.event.pull_request.base.repo.full_name == 'hpcaitech/ColossalAI' && github.event_name == 'pull_request'

+ runs-on: ubuntu-latest

+ outputs:

+ matrix: ${{ steps.setup-matrix.outputs.matrix }}

+ anyChanged: ${{ steps.setup-matrix.outputs.anyChanged }}

+ name: Detect changed example files

+ steps:

+ - uses: actions/checkout@v3

+ with:

+ fetch-depth: 0

+ ref: ${{ github.event.pull_request.head.sha }}

+ - name: Get all changed example files

+ id: changed-files

+ uses: tj-actions/changed-files@v35

+ # Using this can trigger action each time a PR is submitted.

+ with:

+ since_last_remote_commit: true

+ - name: setup matrix

+ id: setup-matrix

+ run: |

+ changedFileName=""

+ for file in ${{ steps.changed-files.outputs.all_changed_files }}; do

+ changedFileName="${file}:${changedFileName}"

+ done

+ echo "$changedFileName was changed"

+ res=`python .github/workflows/scripts/example_checks/detect_changed_example.py --fileNameList $changedFileName`

+ echo "All changed examples are $res"

+

+ if [ "$x" = "[]" ]; then

+ echo "anyChanged=false" >> $GITHUB_OUTPUT

+ echo "matrix=null" >> $GITHUB_OUTPUT

+ else

+ dirs=$( IFS=',' ; echo "${res[*]}" )

+ echo "anyChanged=true" >> $GITHUB_OUTPUT

+ echo "matrix={\"directory\":$(echo "$dirs")}" >> $GITHUB_OUTPUT

+ fi

+

+ # If no file is changed, it will prompt an error and shows the matrix do not have value.

+ check-changed-example:

+ # Add this condition to avoid executing this job if the trigger event is workflow_dispatch.

+ if: |

+ github.event.pull_request.draft == false &&

+ github.base_ref == 'main' &&

+ github.event.pull_request.base.repo.full_name == 'hpcaitech/ColossalAI' && github.event_name == 'pull_request'

+ name: Test the changed example

+ needs: detect-changed-example

+ runs-on: [self-hosted, gpu]

+ strategy:

+ matrix: ${{fromJson(needs.detect-changed-example.outputs.matrix)}}

+ container:

+ image: hpcaitech/pytorch-cuda:1.12.0-11.3.0

+ options: --gpus all --rm -v /data/scratch/examples-data:/data/

+ timeout-minutes: 10

+ steps:

+ - uses: actions/checkout@v3

+ - name: Install Colossal-AI

+ run: |

+ pip install -v .

+ - name: Test the example

+ run: |

+ example_dir=${{ matrix.directory }}

+ cd "${PWD}/examples/${example_dir}"

+ bash test_ci.sh

+ env:

+ NCCL_SHM_DISABLE: 1

+

+ # This is for all files' weekly check. Specifically, this job is to find all the directories.

+ matrix_preparation:

+ if: |

+ github.event.pull_request.draft == false &&

+ github.base_ref == 'main' &&

+ github.event.pull_request.base.repo.full_name == 'hpcaitech/ColossalAI' && github.event_name == 'schedule'

+ name: Prepare matrix for weekly check

+ runs-on: ubuntu-latest

+ outputs:

+ matrix: ${{ steps.setup-matrix.outputs.matrix }}

+ steps:

+ - name: 📚 Checkout

+ uses: actions/checkout@v3

+ - name: setup matrix

+ id: setup-matrix

+ run: |

+ res=`python .github/workflows/scripts/example_checks/check_example_weekly.py`

+ all_loc=$( IFS=',' ; echo "${res[*]}" )

+ echo "Found the examples: $all_loc"

+ echo "matrix={\"directory\":$(echo "$all_loc")}" >> $GITHUB_OUTPUT

+

+ weekly_check:

+ if: |

+ github.event.pull_request.draft == false &&

+ github.base_ref == 'main' &&

+ github.event.pull_request.base.repo.full_name == 'hpcaitech/ColossalAI' && github.event_name == 'schedule'

+ name: Weekly check all examples

+ needs: matrix_preparation

+ runs-on: [self-hosted, gpu]

+ strategy:

+ matrix: ${{fromJson(needs.matrix_preparation.outputs.matrix)}}

+ container:

+ image: hpcaitech/pytorch-cuda:1.12.0-11.3.0

+ timeout-minutes: 10

+ steps:

+ - name: 📚 Checkout

+ uses: actions/checkout@v3

+ - name: Install Colossal-AI

+ run: |

+ pip install -v .

+ - name: Traverse all files

+ run: |

+ example_dir=${{ matrix.diretory }}

+ echo "Testing ${example_dir} now"

+ cd "${PWD}/examples/${example_dir}"

+ bash test_ci.sh

+ env:

+ NCCL_SHM_DISABLE: 1

diff --git a/.github/workflows/build.yml b/.github/workflows/build.yml

index b7023098f..62d6350d6 100644

--- a/.github/workflows/build.yml

+++ b/.github/workflows/build.yml

@@ -1,17 +1,45 @@

name: Build

-on:

+on:

pull_request:

types: [synchronize, labeled]

jobs:

- build:

- name: Build and Test Colossal-AI

+ detect:

+ name: Detect kernel-related file change

if: |

github.event.pull_request.draft == false &&

github.base_ref == 'main' &&

github.event.pull_request.base.repo.full_name == 'hpcaitech/ColossalAI' &&

contains( github.event.pull_request.labels.*.name, 'Run Build and Test')

+ outputs:

+ changedFiles: ${{ steps.find-changed-files.outputs.changedFiles }}

+ anyChanged: ${{ steps.find-changed-files.outputs.any_changed }}

+ runs-on: ubuntu-latest

+ steps:

+ - uses: actions/checkout@v2

+ with:

+ fetch-depth: 0

+ ref: ${{ github.event.pull_request.head.sha }}

+ - name: Find the changed files

+ id: find-changed-files

+ uses: tj-actions/changed-files@v35

+ with:

+ since_last_remote_commit: true

+ files: |

+ op_builder/**

+ colossalai/kernel/**

+ setup.py

+ - name: List changed files

+ run: |

+ for file in ${{ steps.find-changed-files.outputs.all_changed_files }}; do

+ echo "$file was changed"

+ done

+

+

+ build:

+ name: Build and Test Colossal-AI

+ needs: detect

runs-on: [self-hosted, gpu]

container:

image: hpcaitech/pytorch-cuda:1.11.0-11.3.0

@@ -23,27 +51,38 @@ jobs:

repository: hpcaitech/TensorNVMe

ssh-key: ${{ secrets.SSH_KEY_FOR_CI }}

path: TensorNVMe

+

- name: Install tensornvme

run: |

cd TensorNVMe

conda install cmake

pip install -r requirements.txt

pip install -v .

+

- uses: actions/checkout@v2

with:

ssh-key: ${{ secrets.SSH_KEY_FOR_CI }}

- - name: Install Colossal-AI

+

+ - name: Restore cache

+ if: needs.detect.outputs.anyChanged != 'true'

run: |

- [ ! -z "$(ls -A /github/home/cuda_ext_cache/)" ] && cp -r /github/home/cuda_ext_cache/* /__w/ColossalAI/ColossalAI/

- pip install -r requirements/requirements.txt

- pip install -v -e .

- cp -r /__w/ColossalAI/ColossalAI/build /github/home/cuda_ext_cache/

- cp /__w/ColossalAI/ColossalAI/*.so /github/home/cuda_ext_cache/

+ # -p flag is required to preserve the file timestamp to avoid ninja rebuild

+ [ ! -z "$(ls -A /github/home/cuda_ext_cache/)" ] && cp -p -r /github/home/cuda_ext_cache/* /__w/ColossalAI/ColossalAI/

+

+ - name: Install Colossal-AI

+ run: |

+ CUDA_EXT=1 pip install -v -e .

pip install -r requirements/requirements-test.txt

+

- name: Unit Testing

run: |

- PYTHONPATH=$PWD pytest tests

+ PYTHONPATH=$PWD pytest --cov=. --cov-report lcov tests

env:

DATA: /data/scratch/cifar-10

NCCL_SHM_DISABLE: 1

LD_LIBRARY_PATH: /github/home/.tensornvme/lib:/usr/local/nvidia/lib:/usr/local/nvidia/lib64

+

+ - name: Store Cache

+ run: |

+ # -p flag is required to preserve the file timestamp to avoid ninja rebuild

+ cp -p -r /__w/ColossalAI/ColossalAI/build /github/home/cuda_ext_cache/

diff --git a/.github/workflows/build_gpu_8.yml b/.github/workflows/build_gpu_8.yml

index 4d96390f2..be8337dd0 100644

--- a/.github/workflows/build_gpu_8.yml

+++ b/.github/workflows/build_gpu_8.yml

@@ -2,7 +2,7 @@ name: Build on 8 GPUs

on:

schedule:

- # run at 00:00 of every Sunday

+ # run at 00:00 of every Sunday

- cron: '0 0 * * *'

workflow_dispatch:

@@ -30,13 +30,11 @@ jobs:

- uses: actions/checkout@v2

with:

ssh-key: ${{ secrets.SSH_KEY_FOR_CI }}

- - name: Install Colossal-AI

+ - name: Install Colossal-AI

run: |

[ ! -z "$(ls -A /github/home/cuda_ext_cache/)" ] && cp -r /github/home/cuda_ext_cache/* /__w/ColossalAI/ColossalAI/

- pip install -r requirements/requirements.txt

- pip install -v -e .

+ CUDA_EXT=1 pip install -v -e .

cp -r /__w/ColossalAI/ColossalAI/build /github/home/cuda_ext_cache/

- cp /__w/ColossalAI/ColossalAI/*.so /github/home/cuda_ext_cache/

pip install -r requirements/requirements-test.txt

- name: Unit Testing

run: |

@@ -45,4 +43,3 @@ jobs:

env:

DATA: /data/scratch/cifar-10

LD_LIBRARY_PATH: /github/home/.tensornvme/lib:/usr/local/nvidia/lib:/usr/local/nvidia/lib64

-

\ No newline at end of file

diff --git a/.github/workflows/compatibility_test.yml b/.github/workflows/compatibility_test.yml

index 7948eb20c..eadd07886 100644

--- a/.github/workflows/compatibility_test.yml

+++ b/.github/workflows/compatibility_test.yml

@@ -70,7 +70,7 @@ jobs:

- uses: actions/checkout@v2

with:

ssh-key: ${{ secrets.SSH_KEY_FOR_CI }}

- - name: Install Colossal-AI

+ - name: Install Colossal-AI

run: |

pip install -r requirements/requirements.txt

pip install -v --no-cache-dir .

diff --git a/.github/workflows/dispatch_example_check.yml b/.github/workflows/dispatch_example_check.yml

new file mode 100644

index 000000000..e0333422f

--- /dev/null

+++ b/.github/workflows/dispatch_example_check.yml

@@ -0,0 +1,63 @@

+name: Manual Test Example

+on:

+ workflow_dispatch:

+ inputs:

+ example_directory:

+ type: string

+ description: example directory, separated by space. For example, language/gpt, images/vit. Simply input language or simply gpt does not work.

+ required: true

+

+jobs:

+ matrix_preparation:

+ if: |

+ github.event.pull_request.draft == false &&

+ github.base_ref == 'main' &&

+ github.event.pull_request.base.repo.full_name == 'hpcaitech/ColossalAI'

+ name: Check the examples user want

+ runs-on: ubuntu-latest

+ outputs:

+ matrix: ${{ steps.set-matrix.outputs.matrix }}

+ steps:

+ - name: 📚 Checkout

+ uses: actions/checkout@v3

+ - name: Set up matrix

+ id: set-matrix

+ env:

+ check_dir: ${{ inputs.example_directory }}

+ run: |

+ res=`python .github/workflows/scripts/example_checks/check_dispatch_inputs.py --fileNameList $check_dir`

+ if [ res == "failure" ];then

+ exit -1

+ fi

+ dirs="[${check_dir}]"

+ echo "Testing examples in $dirs"

+ echo "matrix={\"directory\":$(echo "$dirs")}" >> $GITHUB_OUTPUT

+

+ test_example:

+ if: |

+ github.event.pull_request.draft == false &&

+ github.base_ref == 'main' &&

+ github.event.pull_request.base.repo.full_name == 'hpcaitech/ColossalAI'

+ name: Manually check example files

+ needs: manual_check_matrix_preparation

+ runs-on: [self-hosted, gpu]

+ strategy:

+ matrix: ${{fromJson(needs.manual_check_matrix_preparation.outputs.matrix)}}

+ container:

+ image: hpcaitech/pytorch-cuda:1.12.0-11.3.0

+ options: --gpus all --rm -v /data/scratch/examples-data:/data/

+ timeout-minutes: 10

+ steps:

+ - name: 📚 Checkout

+ uses: actions/checkout@v3

+ - name: Install Colossal-AI

+ run: |

+ pip install -v .

+ - name: Test the example

+ run: |

+ dir=${{ matrix.directory }}

+ echo "Testing ${dir} now"

+ cd "${PWD}/examples/${dir}"

+ bash test_ci.sh

+ env:

+ NCCL_SHM_DISABLE: 1

diff --git a/.github/workflows/draft_github_release_post.yml b/.github/workflows/draft_github_release_post.yml

index f970a9091..413714daf 100644

--- a/.github/workflows/draft_github_release_post.yml

+++ b/.github/workflows/draft_github_release_post.yml

@@ -20,7 +20,7 @@ jobs:

fetch-depth: 0

- uses: actions/setup-python@v2

with:

- python-version: '3.7.12'

+ python-version: '3.8.14'

- name: generate draft

id: generate_draft

run: |

@@ -42,4 +42,3 @@ jobs:

body_path: ${{ steps.generate_draft.outputs.path }}

draft: True

prerelease: false

-

\ No newline at end of file

diff --git a/.github/workflows/release_bdist.yml b/.github/workflows/release_bdist.yml

index aeac3e327..c9c51df8d 100644

--- a/.github/workflows/release_bdist.yml

+++ b/.github/workflows/release_bdist.yml

@@ -64,9 +64,21 @@ jobs:

- name: Copy scripts and checkout

run: |

cp -r ./.github/workflows/scripts/* ./

+

+ # link the cache diretories to current path

+ ln -s /github/home/conda_pkgs ./conda_pkgs

ln -s /github/home/pip_wheels ./pip_wheels

+

+ # set the conda package path

+ echo "pkgs_dirs:\n - $PWD/conda_pkgs" > ~/.condarc

+

+ # set safe directory

git config --global --add safe.directory /__w/ColossalAI/ColossalAI

+

+ # check out

git checkout $git_ref

+

+ # get cub package for cuda 10.2

wget https://github.com/NVIDIA/cub/archive/refs/tags/1.8.0.zip

unzip 1.8.0.zip

env:

diff --git a/.github/workflows/release_docker.yml b/.github/workflows/release_docker.yml

index 8e88ea311..c72d3fb33 100644

--- a/.github/workflows/release_docker.yml

+++ b/.github/workflows/release_docker.yml

@@ -18,23 +18,17 @@ jobs:

with:

fetch-depth: 0

- name: Build Docker

+ id: build

run: |

version=$(cat version.txt)

- docker build --build-arg http_proxy=http://172.17.0.1:7890 --build-arg https_proxy=http://172.17.0.1:7890 -t hpcaitech/colossalai:$version ./docker

+ tag=hpcaitech/colossalai:$version

+ docker build --build-arg http_proxy=http://172.17.0.1:7890 --build-arg https_proxy=http://172.17.0.1:7890 -t $tag ./docker

+ echo "tag=${tag}" >> $GITHUB_OUTPUT

- name: Log in to Docker Hub

uses: docker/login-action@f054a8b539a109f9f41c372932f1ae047eff08c9

with:

username: ${{ secrets.DOCKER_USERNAME }}

password: ${{ secrets.DOCKER_PASSWORD }}

- - name: Extract metadata (tags, labels) for Docker

- id: meta

- uses: docker/metadata-action@98669ae865ea3cffbcbaa878cf57c20bbf1c6c38

- with:

- images: hpcaitech/colossalai

- - name: Build and push Docker image

- uses: docker/build-push-action@ad44023a93711e3deb337508980b4b5e9bcdc5dc

- with:

- context: .

- push: true

- tags: ${{ steps.meta.outputs.tags }}

- labels: ${{ steps.meta.outputs.labels }}

\ No newline at end of file

+ - name: Push Docker image

+ run: |

+ docker push ${{ steps.build.outputs.tag }}

diff --git a/.github/workflows/release_nightly.yml b/.github/workflows/release_nightly.yml

index 0ef942841..8aa48b8ed 100644

--- a/.github/workflows/release_nightly.yml

+++ b/.github/workflows/release_nightly.yml

@@ -1,74 +1,29 @@

-name: Release bdist wheel for Nightly versions

+name: Publish Nightly Version to PyPI

on:

- schedule:

- # run at 00:00 of every Sunday

- - cron: '0 0 * * 6'

workflow_dispatch:

-

-jobs:

- matrix_preparation:

- name: Prepare Container List

- runs-on: ubuntu-latest

- outputs:

- matrix: ${{ steps.set-matrix.outputs.matrix }}

- steps:

- - id: set-matrix

- run: |

- matrix="[\"hpcaitech/cuda-conda:11.3\", \"hpcaitech/cuda-conda:10.2\"]"

- echo $matrix

- echo "::set-output name=matrix::{\"container\":$(echo $matrix)}"

+ schedule:

+ - cron: '0 0 * * 6' # release on every Sunday 00:00 UTC time

- build:

- name: Release bdist wheels

- needs: matrix_preparation

- if: github.repository == 'hpcaitech/ColossalAI' && contains(fromJson('["FrankLeeeee", "ver217", "feifeibear", "kurisusnowdeng"]'), github.actor)

- runs-on: [self-hosted, gpu]

- strategy:

- fail-fast: false

- matrix: ${{fromJson(needs.matrix_preparation.outputs.matrix)}}

- container:

- image: ${{ matrix.container }}

- options: --gpus all --rm

- steps:

- - uses: actions/checkout@v2

- with:

- fetch-depth: 0

- # cub is for cuda 10.2

- - name: Copy scripts and checkout

- run: |

- cp -r ./.github/workflows/scripts/* ./

- ln -s /github/home/pip_wheels ./pip_wheels

- wget https://github.com/NVIDIA/cub/archive/refs/tags/1.8.0.zip

- unzip 1.8.0.zip

- - name: Build bdist wheel

- run: |

- pip install beautifulsoup4 requests packaging

- python ./build_colossalai_wheel.py --nightly

- - name: 🚀 Deploy

- uses: garygrossgarten/github-action-scp@release

- with:

- local: all_dist

- remote: ${{ secrets.PRIVATE_PYPI_NIGHTLY_DIR }}

- host: ${{ secrets.PRIVATE_PYPI_HOST }}

- username: ${{ secrets.PRIVATE_PYPI_USER }}

- password: ${{ secrets.PRIVATE_PYPI_PASSWD }}

- remove_old_build:

- name: Remove old nightly build

+jobs:

+ build-n-publish:

+ if: github.event_name == 'workflow_dispatch' || github.repository == 'hpcaitech/ColossalAI'

+ name: Build and publish Python 🐍 distributions 📦 to PyPI

runs-on: ubuntu-latest

- needs: build

+ timeout-minutes: 20

steps:

- - name: executing remote ssh commands using password

- uses: appleboy/ssh-action@master

- env:

- BUILD_DIR: ${{ secrets.PRIVATE_PYPI_NIGHTLY_DIR }}

- with:

- host: ${{ secrets.PRIVATE_PYPI_HOST }}

- username: ${{ secrets.PRIVATE_PYPI_USER }}

- password: ${{ secrets.PRIVATE_PYPI_PASSWD }}

- envs: BUILD_DIR

- script: |

- cd $BUILD_DIR

- find . -type f -mtime +0 -exec rm -f {} +

- script_stop: true

-

+ - uses: actions/checkout@v2

+

+ - uses: actions/setup-python@v2

+ with:

+ python-version: '3.8.14'

+

+ - run: NIGHTLY=1 python setup.py sdist build

+

+ # publish to PyPI if executed on the main branch

+ - name: Publish package to PyPI

+ uses: pypa/gh-action-pypi-publish@release/v1

+ with:

+ user: __token__

+ password: ${{ secrets.PYPI_API_TOKEN }}

+ verbose: true

diff --git a/.github/workflows/release.yml b/.github/workflows/release_pypi.yml

similarity index 63%

rename from .github/workflows/release.yml

rename to .github/workflows/release_pypi.yml

index ab83c7a43..7f3f63cf3 100644

--- a/.github/workflows/release.yml

+++ b/.github/workflows/release_pypi.yml

@@ -1,21 +1,29 @@

name: Publish to PyPI

-on: workflow_dispatch

+on:

+ workflow_dispatch:

+ pull_request:

+ paths:

+ - 'version.txt'

+ types:

+ - closed

jobs:

build-n-publish:

- if: github.ref_name == 'main' && github.repository == 'hpcaitech/ColossalAI' && contains(fromJson('["FrankLeeeee", "ver217", "feifeibear", "kurisusnowdeng"]'), github.actor)

+ if: github.event_name == 'workflow_dispatch' || github.repository == 'hpcaitech/ColossalAI' && github.event.pull_request.merged == true && github.base_ref == 'main'

name: Build and publish Python 🐍 distributions 📦 to PyPI

runs-on: ubuntu-latest

timeout-minutes: 20

steps:

- uses: actions/checkout@v2

+

- uses: actions/setup-python@v2

with:

- python-version: '3.7.12'

+ python-version: '3.8.14'

+

- run: python setup.py sdist build

+

# publish to PyPI if executed on the main branch

- # publish to Test PyPI if executed on the develop branch

- name: Publish package to PyPI

uses: pypa/gh-action-pypi-publish@release/v1

with:

diff --git a/.github/workflows/release_test.yml b/.github/workflows/release_test.yml

deleted file mode 100644

index cae88edaa..000000000

--- a/.github/workflows/release_test.yml

+++ /dev/null

@@ -1,25 +0,0 @@

-name: Publish to Test PyPI

-

-on: workflow_dispatch

-

-jobs:

- build-n-publish:

- if: github.repository == 'hpcaitech/ColossalAI' && contains(fromJson('["FrankLeeeee", "ver217", "feifeibear", "kurisusnowdeng"]'), github.actor)

- name: Build and publish Python 🐍 distributions 📦 to Test PyPI

- runs-on: ubuntu-latest

- timeout-minutes: 20

- steps:

- - uses: actions/checkout@v2

- - uses: actions/setup-python@v2

- with:

- python-version: '3.7.12'

- - run: python setup.py sdist build

- # publish to PyPI if executed on the main branch

- # publish to Test PyPI if executed on the develop branch

- - name: Publish package to Test PyPI

- uses: pypa/gh-action-pypi-publish@release/v1

- with:

- user: __token__

- password: ${{ secrets.TEST_PYPI_API_TOKEN }}

- repository_url: https://test.pypi.org/legacy/

- verbose: true

diff --git a/.github/workflows/scripts/build_colossalai_wheel.py b/.github/workflows/scripts/build_colossalai_wheel.py

index 5a2db0c87..a9ac16fbc 100644

--- a/.github/workflows/scripts/build_colossalai_wheel.py

+++ b/.github/workflows/scripts/build_colossalai_wheel.py

@@ -1,12 +1,13 @@

-from filecmp import cmp

-import requests

-from bs4 import BeautifulSoup

import argparse

import os

import subprocess

-from packaging import version

+from filecmp import cmp

from functools import cmp_to_key

+import requests

+from bs4 import BeautifulSoup

+from packaging import version

+

WHEEL_TEXT_ROOT_URL = 'https://github.com/hpcaitech/public_assets/tree/main/colossalai/torch_build/torch_wheels'

RAW_TEXT_FILE_PREFIX = 'https://raw.githubusercontent.com/hpcaitech/public_assets/main/colossalai/torch_build/torch_wheels'

CUDA_HOME = os.environ['CUDA_HOME']

diff --git a/.github/workflows/scripts/build_colossalai_wheel.sh b/.github/workflows/scripts/build_colossalai_wheel.sh

index 55a87d956..c0d40fd2c 100644

--- a/.github/workflows/scripts/build_colossalai_wheel.sh

+++ b/.github/workflows/scripts/build_colossalai_wheel.sh

@@ -18,7 +18,7 @@ if [ $1 == "pip" ]

then

wget -nc -q -O ./pip_wheels/$filename $url

pip install ./pip_wheels/$filename

-

+

elif [ $1 == 'conda' ]

then

conda install pytorch==$torch_version cudatoolkit=$cuda_version $flags

@@ -34,8 +34,9 @@ fi

python setup.py bdist_wheel

mv ./dist/* ./all_dist

+# must remove build to enable compilation for

+# cuda extension in the next build

+rm -rf ./build

python setup.py clean

conda deactivate

conda env remove -n $python_version

-

-

diff --git a/.github/workflows/scripts/example_checks/check_dispatch_inputs.py b/.github/workflows/scripts/example_checks/check_dispatch_inputs.py

new file mode 100644

index 000000000..04d2063ec

--- /dev/null

+++ b/.github/workflows/scripts/example_checks/check_dispatch_inputs.py

@@ -0,0 +1,27 @@

+import argparse

+import os

+

+

+def check_inputs(input_list):

+ for path in input_list:

+ real_path = os.path.join('examples', path)

+ if not os.path.exists(real_path):

+ return False

+ return True

+

+

+def main():

+ parser = argparse.ArgumentParser()

+ parser.add_argument('-f', '--fileNameList', type=str, help="List of file names")

+ args = parser.parse_args()

+ name_list = args.fileNameList.split(",")

+ is_correct = check_inputs(name_list)

+

+ if is_correct:

+ print('success')

+ else:

+ print('failure')

+

+

+if __name__ == '__main__':

+ main()

diff --git a/.github/workflows/scripts/example_checks/check_example_weekly.py b/.github/workflows/scripts/example_checks/check_example_weekly.py

new file mode 100644

index 000000000..941e90901

--- /dev/null

+++ b/.github/workflows/scripts/example_checks/check_example_weekly.py

@@ -0,0 +1,37 @@

+import os

+

+

+def show_files(path, all_files):

+ # Traverse all the folder/file in current directory

+ file_list = os.listdir(path)

+ # Determine the element is folder or file. If file, pass it into list, if folder, recurse.

+ for file_name in file_list:

+ # Get the abs directory using os.path.join() and store into cur_path.

+ cur_path = os.path.join(path, file_name)

+ # Determine whether folder

+ if os.path.isdir(cur_path):

+ show_files(cur_path, all_files)

+ else:

+ all_files.append(cur_path)

+ return all_files

+

+

+def join(input_list, sep=None):

+ return (sep or ' ').join(input_list)

+

+

+def main():

+ contents = show_files('examples/', [])

+ all_loc = []

+ for file_loc in contents:

+ split_loc = file_loc.split('/')

+ # must have two sub-folder levels after examples folder, such as examples/images/vit is acceptable, examples/images/README.md is not, examples/requirements.txt is not.

+ if len(split_loc) >= 4:

+ re_loc = '/'.join(split_loc[1:3])

+ if re_loc not in all_loc:

+ all_loc.append(re_loc)

+ print(all_loc)

+

+

+if __name__ == '__main__':

+ main()

diff --git a/.github/workflows/scripts/example_checks/detect_changed_example.py b/.github/workflows/scripts/example_checks/detect_changed_example.py

new file mode 100644

index 000000000..df4fd6736

--- /dev/null

+++ b/.github/workflows/scripts/example_checks/detect_changed_example.py

@@ -0,0 +1,24 @@

+import argparse

+

+

+def main():

+ parser = argparse.ArgumentParser()

+ parser.add_argument('-f', '--fileNameList', type=str, help="The list of changed files")

+ args = parser.parse_args()

+ name_list = args.fileNameList.split(":")

+ folder_need_check = set()

+ for loc in name_list:

+ # Find only the sub-sub-folder of 'example' folder

+ # the examples folder structure is like

+ # - examples

+ # - area

+ # - application

+ # - file

+ if loc.split("/")[0] == "examples" and len(loc.split("/")) >= 4:

+ folder_need_check.add('/'.join(loc.split("/")[1:3]))

+ # Output the result using print. Then the shell can get the values.

+ print(list(folder_need_check))

+

+

+if __name__ == '__main__':

+ main()

diff --git a/.github/workflows/scripts/generate_release_draft.py b/.github/workflows/scripts/generate_release_draft.py

index fdcd667ae..1c407cf14 100644

--- a/.github/workflows/scripts/generate_release_draft.py

+++ b/.github/workflows/scripts/generate_release_draft.py

@@ -2,9 +2,10 @@

# coding: utf-8

import argparse

-import requests

-import re

import os

+import re

+

+import requests

COMMIT_API = 'https://api.github.com/repos/hpcaitech/ColossalAI/commits'

TAGS_API = 'https://api.github.com/repos/hpcaitech/ColossalAI/tags'

diff --git a/.github/workflows/submodule.yml b/.github/workflows/submodule.yml

index ac01f85db..4ffb26118 100644

--- a/.github/workflows/submodule.yml

+++ b/.github/workflows/submodule.yml

@@ -1,6 +1,6 @@

name: Synchronize Submodule

-on:

+on:

workflow_dispatch:

schedule:

- cron: "0 0 * * *"

@@ -27,11 +27,11 @@ jobs:

- name: Commit update

run: |

- git config --global user.name 'github-actions'

- git config --global user.email 'github-actions@github.com'

+ git config --global user.name 'github-actions'

+ git config --global user.email 'github-actions@github.com'

git remote set-url origin https://x-access-token:${{ secrets.GITHUB_TOKEN }}@github.com/${{ github.repository }}

git commit -am "Automated submodule synchronization"

-

+

- name: Create Pull Request

uses: peter-evans/create-pull-request@v3

with:

@@ -43,4 +43,3 @@ jobs:

assignees: ${{ github.actor }}

delete-branch: true

branch: create-pull-request/patch-sync-submodule

-

\ No newline at end of file

diff --git a/.gitignore b/.gitignore

index 458f37553..8e345eeb8 100644

--- a/.gitignore

+++ b/.gitignore

@@ -134,10 +134,23 @@ dmypy.json

.vscode/

# macos

-.DS_Store

+*.DS_Store

#data/

docs/.build

# pytorch checkpoint

-*.pt

\ No newline at end of file

+*.pt

+

+# ignore version.py generated by setup.py

+colossalai/version.py

+

+# ignore any kernel build files

+.o

+.so

+

+# ignore python interface defition file

+.pyi

+

+# ignore coverage test file

+converage.lcov

diff --git a/.readthedocs.yaml b/.readthedocs.yaml

index ce22f43c1..98dd0cc4e 100644

--- a/.readthedocs.yaml

+++ b/.readthedocs.yaml

@@ -27,4 +27,4 @@ sphinx:

python:

install:

- requirements: requirements/requirements.txt

- - requirements: docs/requirements.txt

\ No newline at end of file

+ - requirements: docs/requirements.txt

diff --git a/LICENSE b/LICENSE

index 9ca515ca7..0528c89ea 100644

--- a/LICENSE

+++ b/LICENSE

@@ -1,4 +1,4 @@

-Copyright 2021- The Colossal-ai Authors. All rights reserved.

+Copyright 2021- HPC-AI Technology Inc. All rights reserved.

Apache License

Version 2.0, January 2004

http://www.apache.org/licenses/

@@ -187,7 +187,7 @@ Copyright 2021- The Colossal-ai Authors. All rights reserved.

same "printed page" as the copyright notice for easier

identification within third-party archives.

- Copyright [yyyy] [name of copyright owner]

+ Copyright 2021- HPC-AI Technology Inc.

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

diff --git a/MANIFEST.in b/MANIFEST.in

index 48a44e0b4..ad26b634a 100644

--- a/MANIFEST.in

+++ b/MANIFEST.in

@@ -1,3 +1,4 @@

include *.txt README.md

recursive-include requirements *.txt

-recursive-include colossalai *.cpp *.h *.cu *.tr *.cuh *.cc

\ No newline at end of file

+recursive-include colossalai *.cpp *.h *.cu *.tr *.cuh *.cc *.pyi

+recursive-include op_builder *.py

diff --git a/README-zh-Hans.md b/README-zh-Hans.md

index b678af55d..b97b02f5a 100644

--- a/README-zh-Hans.md

+++ b/README-zh-Hans.md

@@ -1,14 +1,14 @@

# Colossal-AI

- [](https://www.colossalai.org/)

+ [](https://www.colossalai.org/)

Colossal-AI: 一个面向大模型时代的通用深度学习系统

-

论文 |

- 文档 |

- 例程 |

- 论坛 |

+

[](https://github.com/hpcaitech/ColossalAI/actions/workflows/build.yml)

@@ -22,41 +22,50 @@

(返回顶端)

## 并行训练样例展示

-### ViT

-

- -

-

-- 14倍批大小和5倍训练速度(张量并行=64)

### GPT-3

@@ -131,7 +131,7 @@ Colossal-AI 为您提供了一系列并行组件。我们的目标是让您的

GPT-2.png) - 用相同的硬件训练24倍大的模型

-- 超3倍的吞吐量

+- 超3倍的吞吐量

### BERT

- 用相同的硬件训练24倍大的模型

-- 超3倍的吞吐量

+- 超3倍的吞吐量

### BERT

@@ -145,10 +145,16 @@ Colossal-AI 为您提供了一系列并行组件。我们的目标是让您的

@@ -145,10 +145,16 @@ Colossal-AI 为您提供了一系列并行组件。我们的目标是让您的

- [Open Pretrained Transformer (OPT)](https://github.com/facebookresearch/metaseq), 由Meta发布的1750亿语言模型,由于完全公开了预训练参数权重,因此促进了下游任务和应用部署的发展。

-- 加速45%,仅用几行代码以低成本微调OPT。[[样例]](https://github.com/hpcaitech/ColossalAI-Examples/tree/main/language/opt) [[在线推理]](https://service.colossalai.org/opt)

+- 加速45%,仅用几行代码以低成本微调OPT。[[样例]](https://github.com/hpcaitech/ColossalAI-Examples/tree/main/language/opt) [[在线推理]](https://service.colossalai.org/opt)

请访问我们的 [文档](https://www.colossalai.org/) 和 [例程](https://github.com/hpcaitech/ColossalAI-Examples) 以了解详情。

+### ViT

+

- [Open Pretrained Transformer (OPT)](https://github.com/facebookresearch/metaseq), 由Meta发布的1750亿语言模型,由于完全公开了预训练参数权重,因此促进了下游任务和应用部署的发展。

-- 加速45%,仅用几行代码以低成本微调OPT。[[样例]](https://github.com/hpcaitech/ColossalAI-Examples/tree/main/language/opt) [[在线推理]](https://service.colossalai.org/opt)

+- 加速45%,仅用几行代码以低成本微调OPT。[[样例]](https://github.com/hpcaitech/ColossalAI-Examples/tree/main/language/opt) [[在线推理]](https://service.colossalai.org/opt)

请访问我们的 [文档](https://www.colossalai.org/) 和 [例程](https://github.com/hpcaitech/ColossalAI-Examples) 以了解详情。

+### ViT

+

+ +

+

+

+- 14倍批大小和5倍训练速度(张量并行=64)

### 推荐系统模型

- [Cached Embedding](https://github.com/hpcaitech/CachedEmbedding), 使用软件Cache实现Embeddings,用更少GPU显存训练更大的模型。

@@ -178,7 +184,7 @@ Colossal-AI 为您提供了一系列并行组件。我们的目标是让您的

- 用相同的硬件训练34倍大的模型

-(back to top)

+(返回顶端)

## 推理 (Energon-AI) 样例展示

@@ -195,23 +201,82 @@ Colossal-AI 为您提供了一系列并行组件。我们的目标是让您的

- [OPT推理服务](https://service.colossalai.org/opt): 无需注册,免费体验1750亿参数OPT在线推理服务

+

+ +

+

-(back to top)

+- [BLOOM](https://github.com/hpcaitech/EnergonAI/tree/main/examples/bloom): 降低1750亿参数BLOOM模型部署推理成本超10倍

+

+(返回顶端)

## Colossal-AI 成功案例

-### xTrimoMultimer: 蛋白质单体与复合物结构预测

+### AIGC

+加速AIGC(AI内容生成)模型,如[Stable Diffusion v1](https://github.com/CompVis/stable-diffusion) 和 [Stable Diffusion v2](https://github.com/Stability-AI/stablediffusion)

+

+

+ +

+

+

+- [训练](https://github.com/hpcaitech/ColossalAI/tree/main/examples/images/diffusion): 减少5.6倍显存消耗,硬件成本最高降低46倍(从A100到RTX3060)

+

+

+ +

+

+

+- [DreamBooth微调](https://github.com/hpcaitech/ColossalAI/tree/main/examples/images/dreambooth): 仅需3-5张目标主题图像个性化微调

+

+

+ +

+

+

+- [推理](https://github.com/hpcaitech/ColossalAI/tree/main/examples/images/diffusion): GPU推理显存消耗降低2.5倍

+

+

+(返回顶端)

+

+### 生物医药

+

+加速 [AlphaFold](https://alphafold.ebi.ac.uk/) 蛋白质结构预测

+

+

+ +

+

+

+- [FastFold](https://github.com/hpcaitech/FastFold): 加速AlphaFold训练与推理、数据前处理、推理序列长度超过10000残基

+

- -

-

- [xTrimoMultimer](https://github.com/biomap-research/xTrimoMultimer): 11倍加速蛋白质单体与复合物结构预测

+

- [xTrimoMultimer](https://github.com/biomap-research/xTrimoMultimer): 11倍加速蛋白质单体与复合物结构预测

+(返回顶端)

## 安装

+### 从PyPI安装

+

+您可以用下面的命令直接从PyPI上下载并安装Colossal-AI。我们默认不会安装PyTorch扩展包

+

+```bash

+pip install colossalai

+```

+

+但是,如果你想在安装时就直接构建PyTorch扩展,您可以设置环境变量`CUDA_EXT=1`.

+

+```bash

+CUDA_EXT=1 pip install colossalai

+```

+

+**否则,PyTorch扩展只会在你实际需要使用他们时在运行时里被构建。**

+

+与此同时,我们也每周定时发布Nightly版本,这能让你提前体验到新的feature和bug fix。你可以通过以下命令安装Nightly版本。

+

+```bash

+pip install colossalai-nightly

+```

+

### 从官方安装

您可以访问我们[下载](https://www.colossalai.org/download)页面来安装Colossal-AI,在这个页面上发布的版本都预编译了CUDA扩展。

@@ -231,10 +296,10 @@ pip install -r requirements/requirements.txt

pip install .

```

-如果您不想安装和启用 CUDA 内核融合(使用融合优化器时强制安装):

+我们默认在`pip install`时不安装PyTorch扩展,而是在运行时临时编译,如果你想要提前安装这些扩展的话(在使用融合优化器时会用到),可以使用一下命令。

```shell

-NO_CUDA_EXT=1 pip install .

+CUDA_EXT=1 pip install .

```

(返回顶端)

@@ -283,31 +348,6 @@ docker run -ti --gpus all --rm --ipc=host colossalai bash

(返回顶端)

-## 快速预览

-

-### 几行代码开启分布式训练

-

-```python

-parallel = dict(

- pipeline=2,

- tensor=dict(mode='2.5d', depth = 1, size=4)

-)

-```

-

-### 几行代码开启异构训练

-

-```python

-zero = dict(

- model_config=dict(

- tensor_placement_policy='auto',

- shard_strategy=TensorShardStrategy(),

- reuse_fp16_shard=True

- ),

- optimizer_config=dict(initial_scale=2**5, gpu_margin_mem_ratio=0.2)

-)

-```

-

-(返回顶端)

## 引用我们

@@ -320,4 +360,4 @@ zero = dict(

}

```

-(返回顶端)

\ No newline at end of file

+(返回顶端)

diff --git a/README.md b/README.md

index c5a798a0e..7aba907e0 100644

--- a/README.md

+++ b/README.md

@@ -1,14 +1,14 @@

# Colossal-AI

- [](https://www.colossalai.org/)

+ [](https://www.colossalai.org/)

Colossal-AI: A Unified Deep Learning System for Big Model Era

-

Paper |

- Documentation |

- Examples |

- Forum |

+

[](https://github.com/hpcaitech/ColossalAI/actions/workflows/build.yml)

@@ -17,46 +17,55 @@

[](https://huggingface.co/hpcai-tech)

[](https://join.slack.com/t/colossalaiworkspace/shared_invite/zt-z7b26eeb-CBp7jouvu~r0~lcFzX832w)

[](https://raw.githubusercontent.com/hpcaitech/public_assets/main/colossalai/img/WeChat.png)

-

+

| [English](README.md) | [中文](README-zh-Hans.md) |

(back to top)

## Parallel Training Demo

-### ViT

-

- -

-

-

-- 14x larger batch size, and 5x faster training for Tensor Parallelism = 64

### GPT-3

@@ -150,10 +149,17 @@ distributed training and inference in a few lines.

- [Open Pretrained Transformer (OPT)](https://github.com/facebookresearch/metaseq), a 175-Billion parameter AI language model released by Meta, which stimulates AI programmers to perform various downstream tasks and application deployments because public pretrained model weights.

-- 45% speedup fine-tuning OPT at low cost in lines. [[Example]](https://github.com/hpcaitech/ColossalAI-Examples/tree/main/language/opt) [[Online Serving]](https://service.colossalai.org/opt)

+- 45% speedup fine-tuning OPT at low cost in lines. [[Example]](https://github.com/hpcaitech/ColossalAI-Examples/tree/main/language/opt) [[Online Serving]](https://service.colossalai.org/opt)

Please visit our [documentation](https://www.colossalai.org/) and [examples](https://github.com/hpcaitech/ColossalAI-Examples) for more details.

+### ViT

+

- [Open Pretrained Transformer (OPT)](https://github.com/facebookresearch/metaseq), a 175-Billion parameter AI language model released by Meta, which stimulates AI programmers to perform various downstream tasks and application deployments because public pretrained model weights.

-- 45% speedup fine-tuning OPT at low cost in lines. [[Example]](https://github.com/hpcaitech/ColossalAI-Examples/tree/main/language/opt) [[Online Serving]](https://service.colossalai.org/opt)

+- 45% speedup fine-tuning OPT at low cost in lines. [[Example]](https://github.com/hpcaitech/ColossalAI-Examples/tree/main/language/opt) [[Online Serving]](https://service.colossalai.org/opt)

Please visit our [documentation](https://www.colossalai.org/) and [examples](https://github.com/hpcaitech/ColossalAI-Examples) for more details.

+### ViT

+

+ +

+

+

+- 14x larger batch size, and 5x faster training for Tensor Parallelism = 64

+

### Recommendation System Models

- [Cached Embedding](https://github.com/hpcaitech/CachedEmbedding), utilize software cache to train larger embedding tables with a smaller GPU memory budget.

@@ -198,26 +204,85 @@ Please visit our [documentation](https://www.colossalai.org/) and [examples](htt

- [OPT Serving](https://service.colossalai.org/opt): Try 175-billion-parameter OPT online services for free, without any registration whatsoever.

+

+ +

+

+

+- [BLOOM](https://github.com/hpcaitech/EnergonAI/tree/main/examples/bloom): Reduce hardware deployment costs of 175-billion-parameter BLOOM by more than 10 times.

+

(back to top)

## Colossal-AI in the Real World

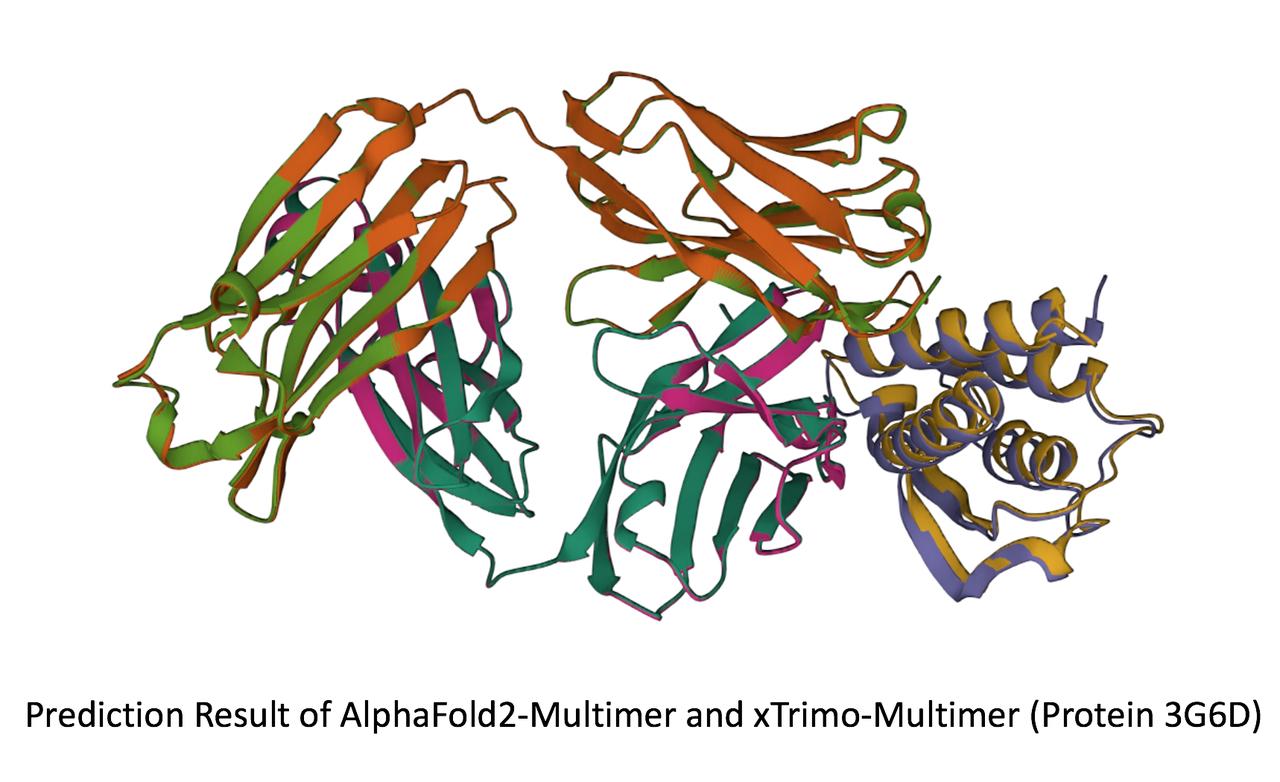

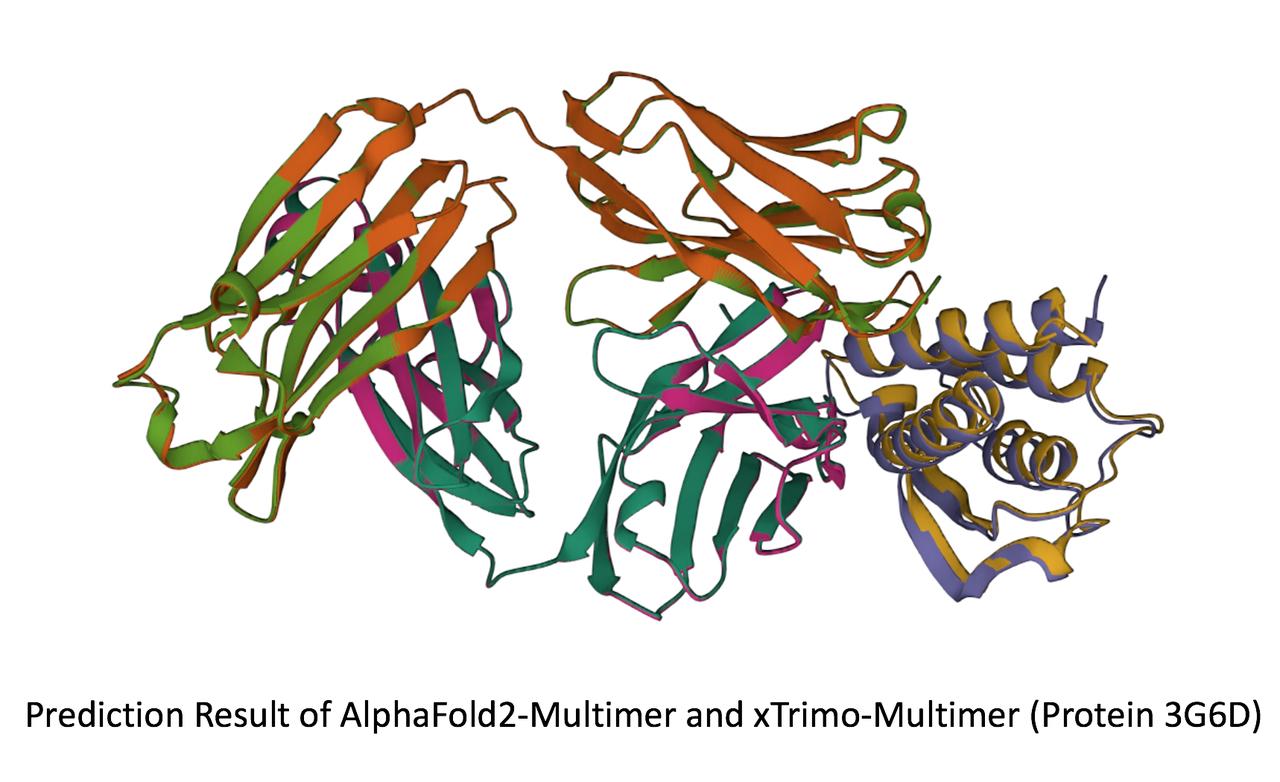

-### xTrimoMultimer: Accelerating Protein Monomer and Multimer Structure Prediction

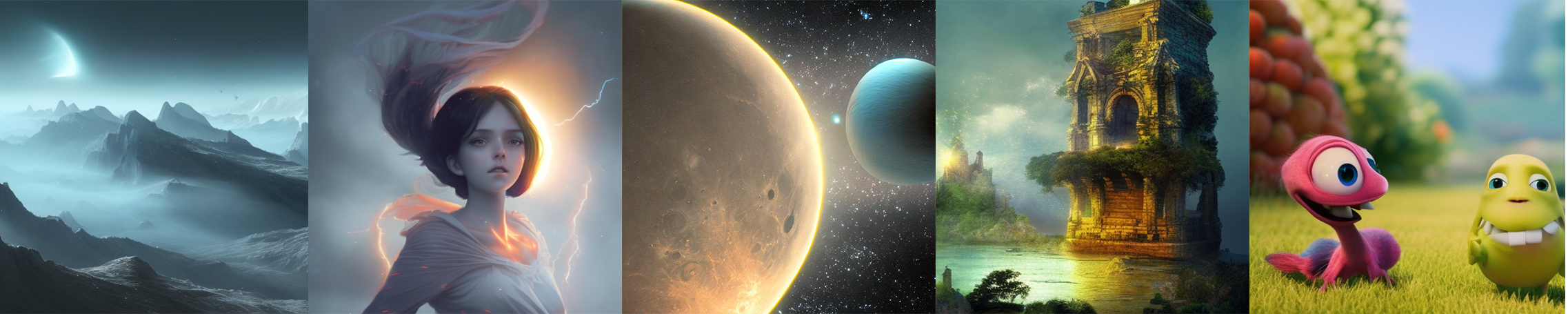

+### AIGC

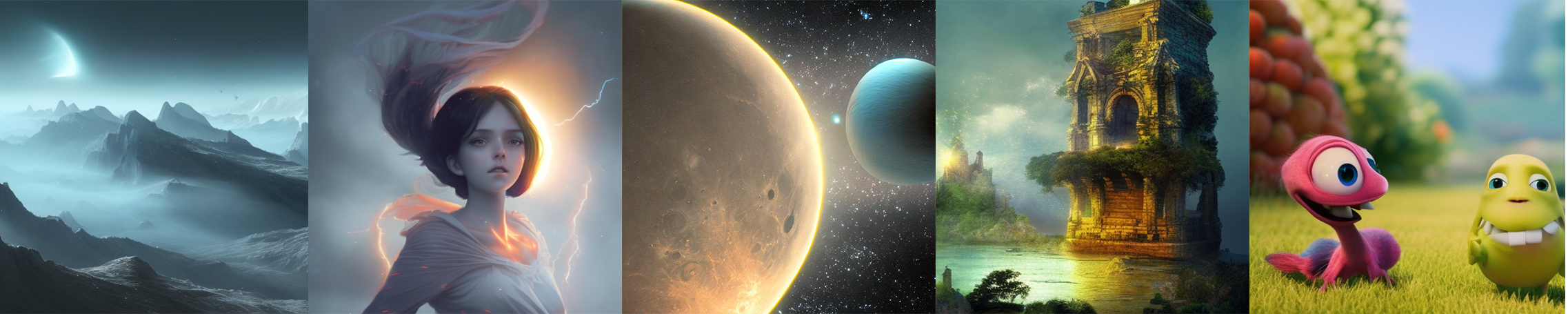

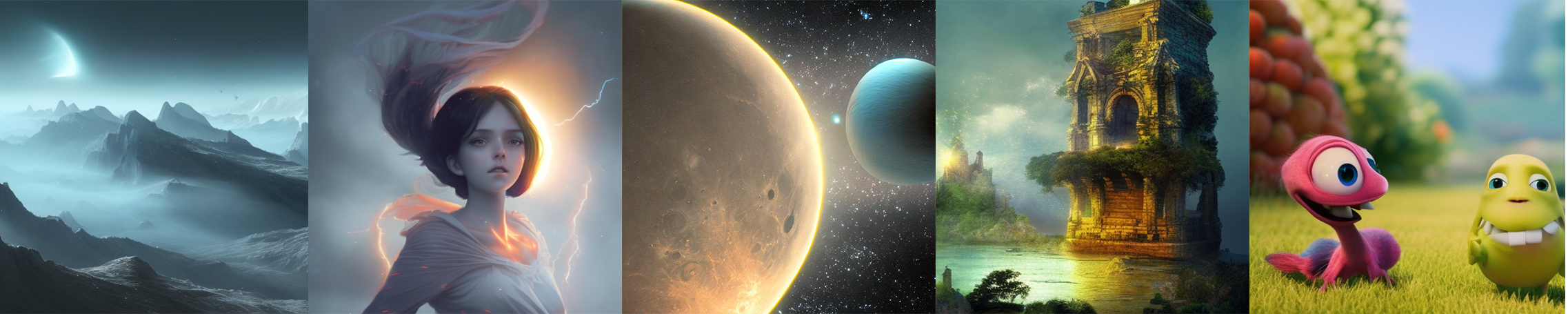

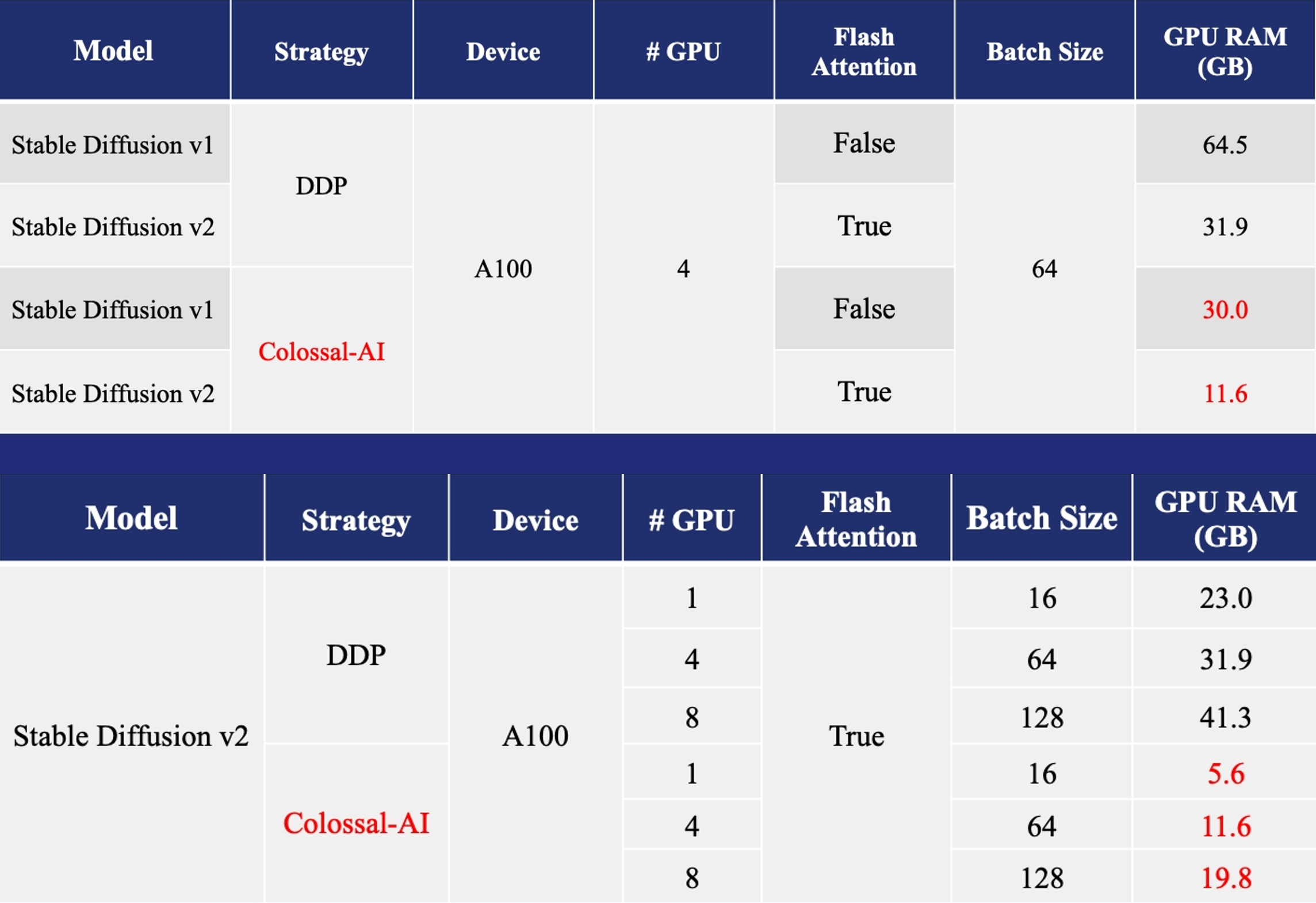

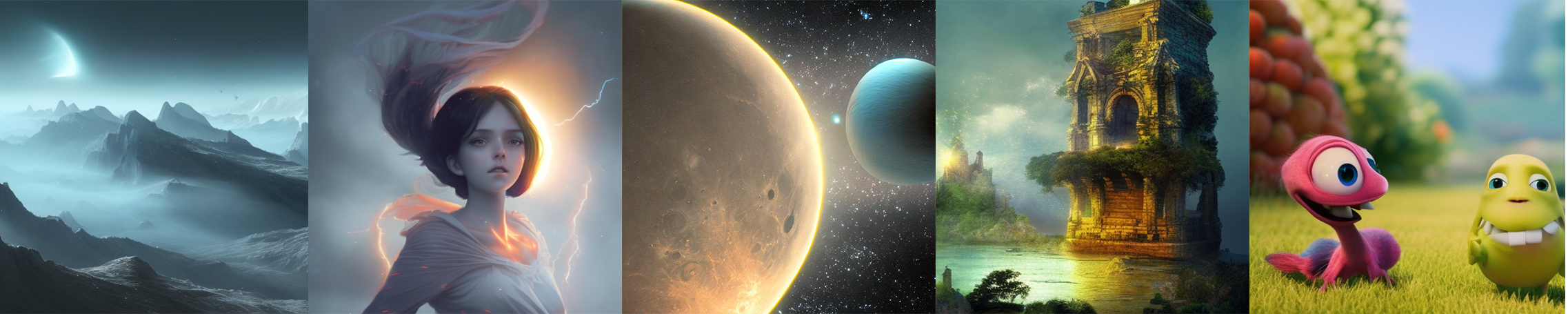

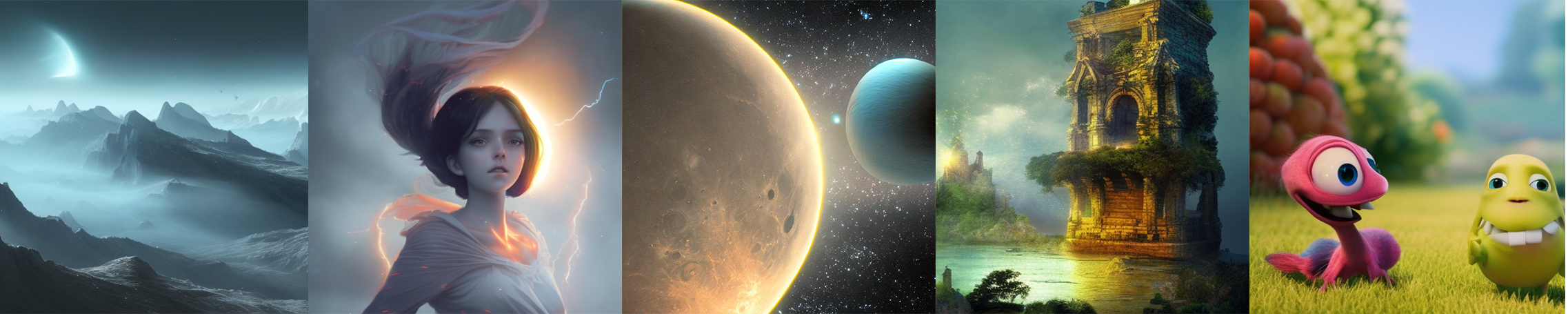

+Acceleration of AIGC (AI-Generated Content) models such as [Stable Diffusion v1](https://github.com/CompVis/stable-diffusion) and [Stable Diffusion v2](https://github.com/Stability-AI/stablediffusion).

+

+ +

+

+

+- [Training](https://github.com/hpcaitech/ColossalAI/tree/main/examples/images/diffusion): Reduce Stable Diffusion memory consumption by up to 5.6x and hardware cost by up to 46x (from A100 to RTX3060).

+

+

+ +

+

+

+- [DreamBooth Fine-tuning](https://github.com/hpcaitech/ColossalAI/tree/main/examples/images/dreambooth): Personalize your model using just 3-5 images of the desired subject.

+

+

+ +

+

+

+- [Inference](https://github.com/hpcaitech/ColossalAI/tree/main/examples/images/diffusion): Reduce inference GPU memory consumption by 2.5x.

+

+

+(back to top)

+

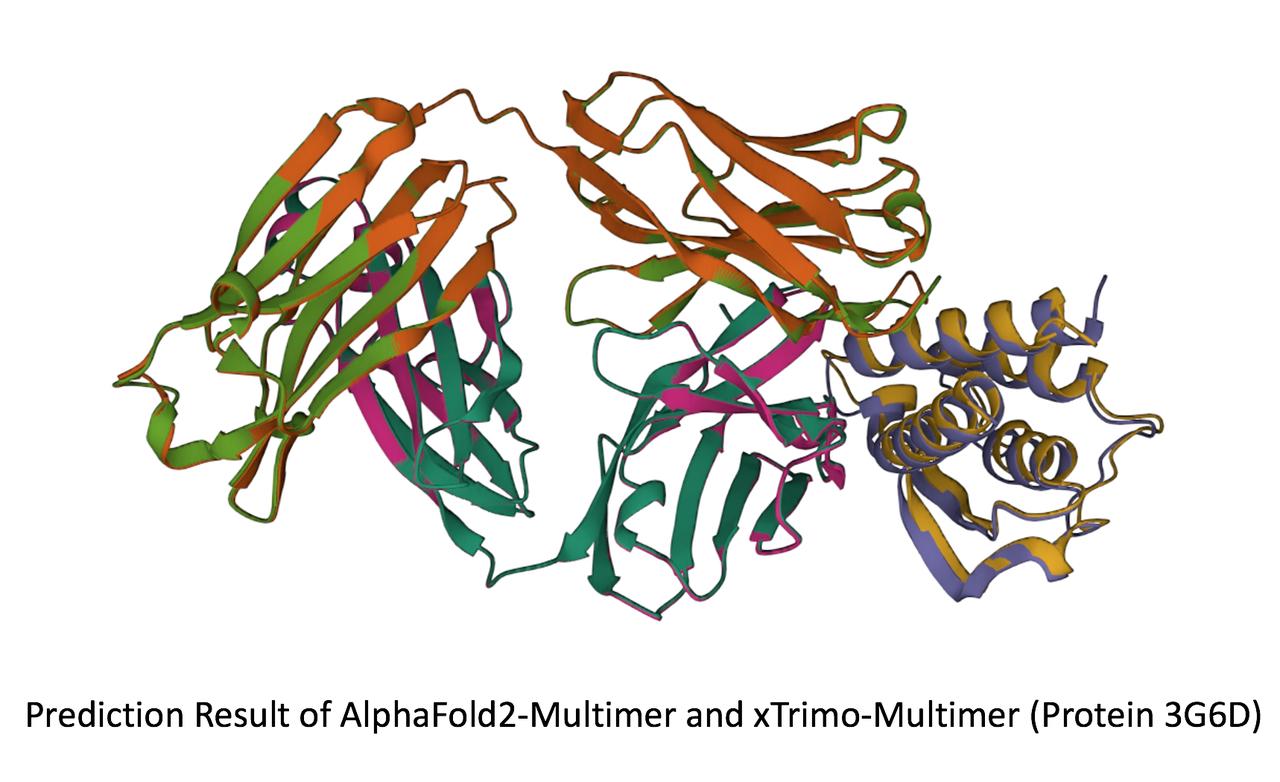

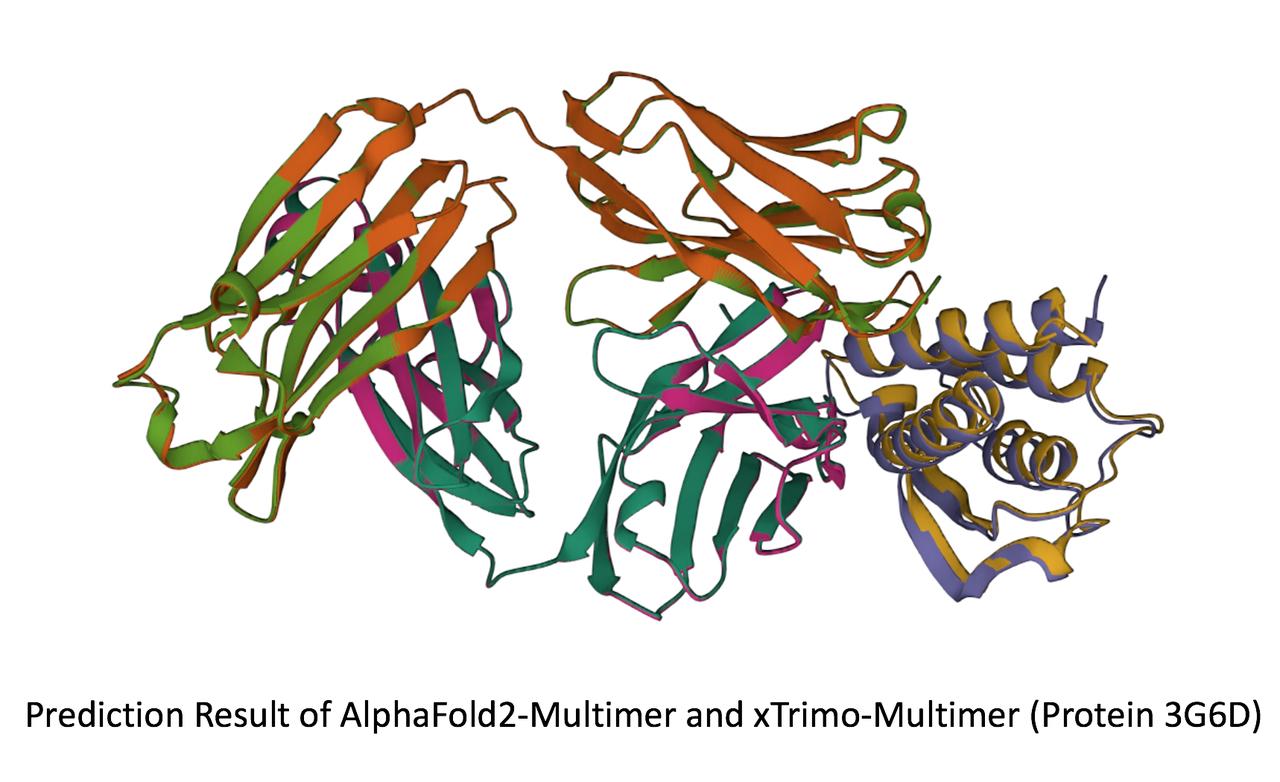

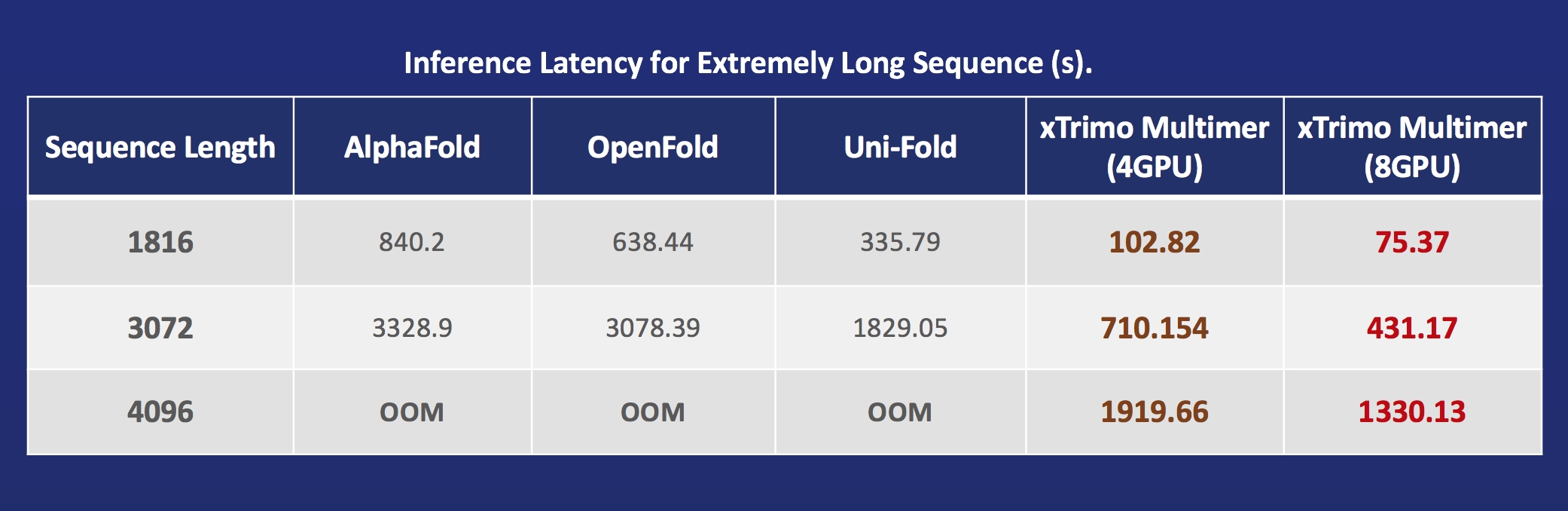

+### Biomedicine

+Acceleration of [AlphaFold Protein Structure](https://alphafold.ebi.ac.uk/)

+

+

+ +

+

+

+- [FastFold](https://github.com/hpcaitech/FastFold): accelerating training and inference on GPU Clusters, faster data processing, inference sequence containing more than 10000 residues.

+

- -

-

-- [xTrimoMultimer](https://github.com/biomap-research/xTrimoMultimer): accelerating structure prediction of protein monomers and multimer by 11x

+- [xTrimoMultimer](https://github.com/biomap-research/xTrimoMultimer): accelerating structure prediction of protein monomers and multimer by 11x.

+

-- [xTrimoMultimer](https://github.com/biomap-research/xTrimoMultimer): accelerating structure prediction of protein monomers and multimer by 11x

+- [xTrimoMultimer](https://github.com/biomap-research/xTrimoMultimer): accelerating structure prediction of protein monomers and multimer by 11x.

+

(back to top)

## Installation

+### Install from PyPI

+

+You can easily install Colossal-AI with the following command. **By defualt, we do not build PyTorch extensions during installation.**

+

+```bash

+pip install colossalai

+```

+

+However, if you want to build the PyTorch extensions during installation, you can set `CUDA_EXT=1`.

+

+```bash

+CUDA_EXT=1 pip install colossalai

+```

+

+**Otherwise, CUDA kernels will be built during runtime when you actually need it.**

+

+We also keep release the nightly version to PyPI on a weekly basis. This allows you to access the unreleased features and bug fixes in the main branch.

+Installation can be made via

+

+```bash

+pip install colossalai-nightly

+```

+

### Download From Official Releases

-You can visit the [Download](https://www.colossalai.org/download) page to download Colossal-AI with pre-built CUDA extensions.

+You can visit the [Download](https://www.colossalai.org/download) page to download Colossal-AI with pre-built PyTorch extensions.

### Download From Source

@@ -228,17 +293,15 @@ You can visit the [Download](https://www.colossalai.org/download) page to downlo

git clone https://github.com/hpcaitech/ColossalAI.git

cd ColossalAI

-# install dependency

-pip install -r requirements/requirements.txt

-

# install colossalai

pip install .

```

-If you don't want to install and enable CUDA kernel fusion (compulsory installation when using fused optimizer):

+By default, we do not compile CUDA/C++ kernels. ColossalAI will build them during runtime.

+If you want to install and enable CUDA kernel fusion (compulsory installation when using fused optimizer):

```shell

-NO_CUDA_EXT=1 pip install .

+CUDA_EXT=1 pip install .

```

(back to top)

@@ -289,32 +352,6 @@ Thanks so much to all of our amazing contributors!

(back to top)

-## Quick View

-

-### Start Distributed Training in Lines

-

-```python

-parallel = dict(

- pipeline=2,

- tensor=dict(mode='2.5d', depth = 1, size=4)

-)

-```

-

-### Start Heterogeneous Training in Lines

-

-```python

-zero = dict(

- model_config=dict(

- tensor_placement_policy='auto',

- shard_strategy=TensorShardStrategy(),

- reuse_fp16_shard=True

- ),

- optimizer_config=dict(initial_scale=2**5, gpu_margin_mem_ratio=0.2)

-)

-

-```

-

-(back to top)

## Cite Us

diff --git a/colossalai/_C/__init__.py b/colossalai/_C/__init__.py

new file mode 100644

index 000000000..e69de29bb

diff --git a/colossalai/__init__.py b/colossalai/__init__.py

index fab03445b..f859161f7 100644

--- a/colossalai/__init__.py

+++ b/colossalai/__init__.py

@@ -7,4 +7,11 @@ from .initialize import (

launch_from_torch,

)

-__version__ = '0.1.11rc1'

+try:

+ # .version will be created by setup.py

+ from .version import __version__

+except ModuleNotFoundError:

+ # this will only happen if the user did not run `pip install`

+ # and directly set PYTHONPATH to use Colossal-AI which is a bad practice

+ __version__ = '0.0.0'

+ print('please install Colossal-AI from https://www.colossalai.org/download or from source')

diff --git a/colossalai/amp/apex_amp/__init__.py b/colossalai/amp/apex_amp/__init__.py

index 6689a157c..51b9b97dc 100644

--- a/colossalai/amp/apex_amp/__init__.py

+++ b/colossalai/amp/apex_amp/__init__.py

@@ -1,7 +1,8 @@

-from .apex_amp import ApexAMPOptimizer

import torch.nn as nn

from torch.optim import Optimizer

+from .apex_amp import ApexAMPOptimizer

+

def convert_to_apex_amp(model: nn.Module, optimizer: Optimizer, amp_config):

r"""A helper function to wrap training components with Apex AMP modules

diff --git a/colossalai/amp/naive_amp/__init__.py b/colossalai/amp/naive_amp/__init__.py

index bb2b8eb26..5b2f71d3c 100644

--- a/colossalai/amp/naive_amp/__init__.py

+++ b/colossalai/amp/naive_amp/__init__.py

@@ -1,10 +1,13 @@

import inspect

+

import torch.nn as nn

from torch.optim import Optimizer

+

from colossalai.utils import is_no_pp_or_last_stage

-from .naive_amp import NaiveAMPOptimizer, NaiveAMPModel

-from .grad_scaler import DynamicGradScaler, ConstantGradScaler

+

from ._fp16_optimizer import FP16Optimizer

+from .grad_scaler import ConstantGradScaler, DynamicGradScaler

+from .naive_amp import NaiveAMPModel, NaiveAMPOptimizer

def convert_to_naive_amp(model: nn.Module, optimizer: Optimizer, amp_config):

diff --git a/colossalai/amp/naive_amp/_fp16_optimizer.py b/colossalai/amp/naive_amp/_fp16_optimizer.py

index 58d9e3df1..e4699f92b 100644

--- a/colossalai/amp/naive_amp/_fp16_optimizer.py

+++ b/colossalai/amp/naive_amp/_fp16_optimizer.py

@@ -3,24 +3,33 @@

import torch

import torch.distributed as dist

+from torch.distributed import ProcessGroup

+from torch.optim import Optimizer

+

+from colossalai.context import ParallelMode

+from colossalai.core import global_context as gpc

+from colossalai.kernel.op_builder import FusedOptimBuilder

+from colossalai.logging import get_dist_logger

+from colossalai.utils import clip_grad_norm_fp32, copy_tensor_parallel_attributes, multi_tensor_applier

+

+from ._utils import has_inf_or_nan, zero_gard_by_list

+from .grad_scaler import BaseGradScaler

try:

- import colossal_C

+ from colossalai._C import fused_optim

except:

- print('Colossalai should be built with cuda extension to use the FP16 optimizer')

-

-from torch.optim import Optimizer

-from colossalai.core import global_context as gpc

-from colossalai.context import ParallelMode

-from colossalai.logging import get_dist_logger

-from colossalai.utils import (copy_tensor_parallel_attributes, clip_grad_norm_fp32, multi_tensor_applier)

-from torch.distributed import ProcessGroup

-from .grad_scaler import BaseGradScaler

-from ._utils import has_inf_or_nan, zero_gard_by_list

+ fused_optim = None

__all__ = ['FP16Optimizer']

+def load_fused_optim():

+ global fused_optim

+

+ if fused_optim is None:

+ fused_optim = FusedOptimBuilder().load()

+

+

def _multi_tensor_copy_this_to_that(this, that, overflow_buf=None):

"""

adapted from Megatron-LM (https://github.com/NVIDIA/Megatron-LM)

@@ -33,7 +42,9 @@ def _multi_tensor_copy_this_to_that(this, that, overflow_buf=None):

if overflow_buf:

overflow_buf.fill_(0)

# Scaling with factor `1.0` is equivalent to copy.

- multi_tensor_applier(colossal_C.multi_tensor_scale, overflow_buf, [this, that], 1.0)

+ global fused_optim

+ load_fused_optim()

+ multi_tensor_applier(fused_optim.multi_tensor_scale, overflow_buf, [this, that], 1.0)

else:

for this_, that_ in zip(this, that):

that_.copy_(this_)

@@ -41,7 +52,7 @@ def _multi_tensor_copy_this_to_that(this, that, overflow_buf=None):

class FP16Optimizer(Optimizer):

"""Float16 optimizer for fp16 and bf16 data types.

-

+

Args:

optimizer (torch.optim.Optimizer): base optimizer such as Adam or SGD

grad_scaler (BaseGradScaler): grad scaler for gradient chose in

@@ -73,8 +84,8 @@ class FP16Optimizer(Optimizer):

# get process group

def _get_process_group(parallel_mode):

- if gpc.is_initialized(ParallelMode.DATA) and gpc.get_world_size(ParallelMode.DATA):

- return gpc.get_group(ParallelMode.DATA)

+ if gpc.is_initialized(parallel_mode) and gpc.get_world_size(parallel_mode):

+ return gpc.get_group(parallel_mode)

else:

return None

@@ -150,6 +161,12 @@ class FP16Optimizer(Optimizer):

f"==========================================",

ranks=[0])

+ @property

+ def max_norm(self):

+ """Returns the maximum norm of gradient clipping.

+ """

+ return self._clip_grad_max_norm

+

@property

def grad_scaler(self):

"""Returns the gradient scaler.

diff --git a/colossalai/amp/naive_amp/_utils.py b/colossalai/amp/naive_amp/_utils.py

index ad2a2ceed..7633705e1 100644

--- a/colossalai/amp/naive_amp/_utils.py

+++ b/colossalai/amp/naive_amp/_utils.py

@@ -1,4 +1,5 @@

from typing import List

+

from torch import Tensor

diff --git a/colossalai/amp/naive_amp/grad_scaler/base_grad_scaler.py b/colossalai/amp/naive_amp/grad_scaler/base_grad_scaler.py

index d27883a8e..0d84384a7 100644

--- a/colossalai/amp/naive_amp/grad_scaler/base_grad_scaler.py

+++ b/colossalai/amp/naive_amp/grad_scaler/base_grad_scaler.py

@@ -1,12 +1,14 @@

#!/usr/bin/env python

# -*- encoding: utf-8 -*-

-import torch

from abc import ABC, abstractmethod

-from colossalai.logging import get_dist_logger

-from torch import Tensor

from typing import Dict

+import torch

+from torch import Tensor

+

+from colossalai.logging import get_dist_logger

+

__all__ = ['BaseGradScaler']

diff --git a/colossalai/amp/naive_amp/grad_scaler/dynamic_grad_scaler.py b/colossalai/amp/naive_amp/grad_scaler/dynamic_grad_scaler.py

index 1ac26ee91..6d6f2f287 100644

--- a/colossalai/amp/naive_amp/grad_scaler/dynamic_grad_scaler.py

+++ b/colossalai/amp/naive_amp/grad_scaler/dynamic_grad_scaler.py

@@ -1,10 +1,12 @@

#!/usr/bin/env python

# -*- encoding: utf-8 -*-

-import torch

-from .base_grad_scaler import BaseGradScaler

from typing import Optional

+import torch

+

+from .base_grad_scaler import BaseGradScaler

+

__all__ = ['DynamicGradScaler']

diff --git a/colossalai/amp/naive_amp/naive_amp.py b/colossalai/amp/naive_amp/naive_amp.py

index 02eae80b9..6a39d518d 100644

--- a/colossalai/amp/naive_amp/naive_amp.py

+++ b/colossalai/amp/naive_amp/naive_amp.py

@@ -1,17 +1,20 @@

#!/usr/bin/env python

# -*- encoding: utf-8 -*-

-import torch

-import torch.nn as nn

-import torch.distributed as dist

-from torch import Tensor

from typing import Any

-from torch.optim import Optimizer

-from torch.distributed import ReduceOp

-from colossalai.core import global_context as gpc

-from colossalai.context import ParallelMode

-from colossalai.nn.optimizer import ColossalaiOptimizer

+

+import torch

+import torch.distributed as dist

+import torch.nn as nn

+from torch import Tensor

from torch._utils import _flatten_dense_tensors, _unflatten_dense_tensors

+from torch.distributed import ReduceOp

+from torch.optim import Optimizer

+

+from colossalai.context import ParallelMode

+from colossalai.core import global_context as gpc

+from colossalai.nn.optimizer import ColossalaiOptimizer

+

from ._fp16_optimizer import FP16Optimizer

@@ -40,7 +43,11 @@ class NaiveAMPOptimizer(ColossalaiOptimizer):

return self.optim.step()

def clip_grad_norm(self, model: nn.Module, max_norm: float):

- pass

+ if self.optim.max_norm == max_norm:

+ return

+ raise RuntimeError("NaiveAMP optimizer has clipped gradients during optimizer.step(). "

+ "If you have supplied clip_grad_norm in the amp_config, "

+ "executing the method clip_grad_norm is not allowed.")

class NaiveAMPModel(nn.Module):

diff --git a/colossalai/amp/torch_amp/__init__.py b/colossalai/amp/torch_amp/__init__.py

index 8943b86d6..893cc890d 100644

--- a/colossalai/amp/torch_amp/__init__.py

+++ b/colossalai/amp/torch_amp/__init__.py

@@ -1,10 +1,13 @@

-import torch.nn as nn

-from torch.optim import Optimizer

-from torch.nn.modules.loss import _Loss

-from colossalai.context import Config

-from .torch_amp import TorchAMPOptimizer, TorchAMPModel, TorchAMPLoss

from typing import Optional

+import torch.nn as nn

+from torch.nn.modules.loss import _Loss

+from torch.optim import Optimizer

+

+from colossalai.context import Config

+

+from .torch_amp import TorchAMPLoss, TorchAMPModel, TorchAMPOptimizer

+

def convert_to_torch_amp(model: nn.Module,

optimizer: Optimizer,

diff --git a/colossalai/amp/torch_amp/_grad_scaler.py b/colossalai/amp/torch_amp/_grad_scaler.py

index de39b3e16..7b78998fb 100644

--- a/colossalai/amp/torch_amp/_grad_scaler.py

+++ b/colossalai/amp/torch_amp/_grad_scaler.py

@@ -3,16 +3,18 @@

# modified from https://github.com/pytorch/pytorch/blob/master/torch/cuda/amp/grad_scaler.py

# to support tensor parallel

-import torch

-from collections import defaultdict, abc

import warnings

+from collections import abc, defaultdict

from enum import Enum

from typing import Any, Dict, List, Optional, Tuple

-from colossalai.context import ParallelMode

+

+import torch

import torch.distributed as dist

-from colossalai.core import global_context as gpc

-from torch._utils import _flatten_dense_tensors, _unflatten_dense_tensors

from packaging import version

+from torch._utils import _flatten_dense_tensors, _unflatten_dense_tensors

+

+from colossalai.context import ParallelMode

+from colossalai.core import global_context as gpc

class _MultiDeviceReplicator(object):

diff --git a/colossalai/amp/torch_amp/torch_amp.py b/colossalai/amp/torch_amp/torch_amp.py

index 5074e9c81..65718d77c 100644

--- a/colossalai/amp/torch_amp/torch_amp.py

+++ b/colossalai/amp/torch_amp/torch_amp.py

@@ -1,17 +1,17 @@

#!/usr/bin/env python

# -*- encoding: utf-8 -*-

-import torch.nn as nn

import torch.cuda.amp as torch_amp

-

+import torch.nn as nn

from torch import Tensor

from torch.nn.modules.loss import _Loss

from torch.optim import Optimizer

-from ._grad_scaler import GradScaler

from colossalai.nn.optimizer import ColossalaiOptimizer

from colossalai.utils import clip_grad_norm_fp32

+from ._grad_scaler import GradScaler

+

class TorchAMPOptimizer(ColossalaiOptimizer):

"""A wrapper class which integrate Pytorch AMP with an optimizer

diff --git a/colossalai/auto_parallel/checkpoint/__init__.py b/colossalai/auto_parallel/checkpoint/__init__.py

index e69de29bb..10ade417a 100644

--- a/colossalai/auto_parallel/checkpoint/__init__.py

+++ b/colossalai/auto_parallel/checkpoint/__init__.py

@@ -0,0 +1,3 @@

+from .ckpt_solver_base import CheckpointSolverBase

+from .ckpt_solver_chen import CheckpointSolverChen

+from .ckpt_solver_rotor import CheckpointSolverRotor

diff --git a/colossalai/auto_parallel/checkpoint/build_c_ext.py b/colossalai/auto_parallel/checkpoint/build_c_ext.py

new file mode 100644

index 000000000..af4349865

--- /dev/null

+++ b/colossalai/auto_parallel/checkpoint/build_c_ext.py

@@ -0,0 +1,16 @@

+import os

+

+from setuptools import Extension, setup

+

+this_dir = os.path.dirname(os.path.abspath(__file__))

+ext_modules = [Extension(

+ 'rotorc',

+ sources=[os.path.join(this_dir, 'ckpt_solver_rotor.c')],

+)]

+

+setup(

+ name='rotor c extension',

+ version='0.1',

+ description='rotor c extension for faster dp computing',

+ ext_modules=ext_modules,

+)

diff --git a/colossalai/auto_parallel/checkpoint/ckpt_solver_base.py b/colossalai/auto_parallel/checkpoint/ckpt_solver_base.py

new file mode 100644

index 000000000..b388d00ac

--- /dev/null

+++ b/colossalai/auto_parallel/checkpoint/ckpt_solver_base.py

@@ -0,0 +1,195 @@

+from abc import ABC, abstractmethod

+from copy import deepcopy

+from typing import Any, List

+

+import torch

+from torch.fx import Graph, Node

+

+from colossalai.auto_parallel.passes.runtime_apply_pass import (

+ runtime_apply,

+ runtime_apply_for_iterable_object,

+ runtime_comm_spec_apply,

+)

+from colossalai.fx.codegen.activation_checkpoint_codegen import ActivationCheckpointCodeGen

+

+__all___ = ['CheckpointSolverBase']

+

+

+def _copy_output(src: Graph, dst: Graph):

+ """Copy the output node from src to dst"""

+ for n_src, n_dst in zip(src.nodes, dst.nodes):

+ if n_src.op == 'output':

+ n_dst.meta = n_src.meta

+

+

+def _get_param_size(module: torch.nn.Module):

+ """Get the size of the parameters in the module"""

+ return sum([p.numel() * torch.tensor([], dtype=p.dtype).element_size() for p in module.parameters()])

+

+

+class CheckpointSolverBase(ABC):

+

+ def __init__(

+ self,

+ graph: Graph,

+ free_memory: float = -1.0,

+ requires_linearize: bool = False,

+ cnode: List[str] = None,

+ optim_multiplier: float = 1.0,

+ ):

+ """``CheckpointSolverBase`` class will integrate information provided by the components

+ and use an existing solver to find a possible optimal strategies combination for target

+ computing graph.

+

+ Existing Solvers:

+ Chen's Greedy solver: https://arxiv.org/abs/1604.06174 (CheckpointSolverChen)

+ Rotor solver: https://hal.inria.fr/hal-02352969 (CheckpointSolverRotor)

+

+ Args:

+ graph (Graph): The computing graph to be optimized.

+ free_memory (float): Memory constraint for the solution.

+ requires_linearize (bool): Whether the graph needs to be linearized.

+ cnode (List[str], optional): Common node List, should be the subset of input. Default to None.

+ optim_multiplier (float, optional): The multiplier of extra weight storage for the

+ ``torch.optim.Optimizer``. Default to 1.0.

+

+ Warnings:

+ Meta information of the graph is required for any ``CheckpointSolver``.

+ """

+ # super-dainiu: this graph is a temporary graph which can refer to

+ # the owning module, but we will return another deepcopy of it after

+ # the solver is executed.

+ self.graph = deepcopy(graph)

+ self.graph.owning_module = graph.owning_module

+ _copy_output(graph, self.graph)

+ self.graph.set_codegen(ActivationCheckpointCodeGen())

+

+ # check if has meta information

+ if any(len(node.meta) == 0 for node in self.graph.nodes):

+ raise RuntimeError(

+ "Nodes meta information hasn't been prepared! Please extract from graph before constructing the solver!"

+ )

+

+ # parameter memory = parameter size + optimizer extra weight storage

+ self.free_memory = free_memory - _get_param_size(self.graph.owning_module) * (optim_multiplier + 1)

+ self.cnode = cnode

+ self.requires_linearize = requires_linearize

+ if self.requires_linearize:

+ self.node_list = self._linearize_graph()

+ else:

+ self.node_list = self.get_node_list()

+

+ @abstractmethod

+ def solve(self):

+ """Solve the checkpointing problem and return the solution.

+ """

+ pass

+

+ def get_node_list(self):

+ """Get the node list.

+ """

+ return [[node] for node in self.graph.nodes]

+

+ def _linearize_graph(self) -> List[List[Node]]:

+ """Linearizing the graph

+

+ Args:

+ graph (Graph): The computing graph to be optimized.

+

+ Returns:

+ List[List[Node]]: List of list, each inside list of Node presents

+ the actual 'node' in linearized manner.

+

+ Remarks:

+ Do merge the inplace ops and shape-consistency ops into the previous node.

+ """

+

+ # Common nodes are type of nodes that could be seen as attributes and remain

+ # unchanged throughout the whole model, it will be used several times by

+ # different blocks of model, so that it is hard for us to linearize the graph

+ # when we encounter those kinds of nodes. We let users to annotate some of the

+ # input as common node, such as attention mask, and the followings are some of

+ # the ops that could actually be seen as common nodes. With our common node prop,

+ # we could find some of the "real" common nodes (e.g. the real attention mask

+ # used in BERT and GPT), the rule is simple, for node who's parents are all common

+ # nodes or it's op belongs to the following operations, we view this node as a

+ # newly born common node.

+ # List of target name that could be seen as common node

+ common_ops = ["getattr", "getitem", "size"]

+

+ def _is_cop(target: Any) -> bool:

+ """Check if an op could be seen as common node

+

+ Args:

+ target (Any): node target

+

+ Returns:

+ bool

+ """

+

+ if isinstance(target, str):

+ return target in common_ops

+ else:

+ return target.__name__ in common_ops

+

+ def _is_sink() -> bool:

+ """Check if we can free all dependencies

+

+ Returns:

+ bool

+ """

+

+ def _is_inplace(n: Node):

+ """Get the inplace argument from ``torch.fx.Node``

+ """

+ inplace = False

+ if n.op == "call_function":

+ inplace = n.kwargs.get("inplace", False)

+ elif n.op == "call_module":

+ inplace = getattr(n.graph.owning_module.get_submodule(n.target), "inplace", False)

+ return inplace

+

+ def _is_shape_consistency(n: Node):

+ """Check if this node is shape-consistency node (i.e. ``runtime_apply`` or ``runtime_apply_for_iterable_object``)

+ """

+ return n.target in [runtime_apply, runtime_apply_for_iterable_object, runtime_comm_spec_apply]

+

+ return not sum([v for _, v in deps.items()]) and not any(map(_is_inplace, n.users)) and not any(

+ map(_is_shape_consistency, n.users))

+

+ # make sure that item in cnode is valid

+ if self.cnode:

+ for name in self.cnode:

+ try:

+ assert next(node for node in self.graph.nodes if node.name == name).op == "placeholder", \

+ f"Common node {name} is not an input of the model."

+ except StopIteration:

+ raise ValueError(f"Common node name {name} not in graph.")

+

+ else:

+ self.cnode = []

+

+ deps = {}

+ node_list = []

+ region = []

+

+ for n in self.graph.nodes:

+ if n.op != "placeholder" and n.op != "output":

+ for n_par in n.all_input_nodes:

+ if n_par.op != "placeholder" and n_par.name not in self.cnode:

+ deps[n_par] -= 1

+ region.append(n)

+

+ # if the node could free all dependencies in graph

+ # we could begin a new node

+ if _is_sink():

+ node_list.append(region)

+ region = []

+

+ # propagate common node attr if possible

+ if len(n.all_input_nodes) == len([node for node in n.all_input_nodes if node.name in self.cnode

+ ]) or _is_cop(n.target):

+ self.cnode.append(n.name)

+ else:

+ deps[n] = len([user for user in n.users if user.op != "output"])

+ return node_list

diff --git a/colossalai/auto_parallel/checkpoint/ckpt_solver_chen.py b/colossalai/auto_parallel/checkpoint/ckpt_solver_chen.py

new file mode 100644

index 000000000..19b2ef598

--- /dev/null

+++ b/colossalai/auto_parallel/checkpoint/ckpt_solver_chen.py

@@ -0,0 +1,87 @@

+import math

+from copy import deepcopy

+from typing import List, Set, Tuple

+

+from torch.fx import Graph, Node

+

+from colossalai.fx.profiler import calculate_fwd_in, calculate_fwd_tmp

+

+from .ckpt_solver_base import CheckpointSolverBase

+

+__all__ = ['CheckpointSolverChen']

+

+

+class CheckpointSolverChen(CheckpointSolverBase):

+

+ def __init__(self, graph: Graph, cnode: List[str] = None, num_grids: int = 6):

+ """

+ This is the simple implementation of Algorithm 3 in https://arxiv.org/abs/1604.06174.

+ Note that this algorithm targets at memory optimization only, using techniques in appendix A.

+

+ Usage:

+ Assume that we have a ``GraphModule``, and we have already done the extractions

+ to the graph to retrieve all information needed, then we could use the following

+ code to find a solution using ``CheckpointSolverChen``:

+ >>> solver = CheckpointSolverChen(gm.graph)

+ >>> chen_graph = solver.solve()

+ >>> gm.graph = chen_graph # set the graph to a new graph

+

+ Args:

+ graph (Graph): The computing graph to be optimized.

+ cnode (List[str], optional): Common node List, should be the subset of input. Defaults to None.

+ num_grids (int, optional): Number of grids to search for b. Defaults to 6.

+ """

+ super().__init__(graph, 0, 0, True, cnode)

+ self.num_grids = num_grids

+

+ def solve(self) -> Graph:

+ """Solve the checkpointing problem using Algorithm 3.

+

+ Returns:

+ graph (Graph): The optimized graph, should be a copy of the original graph.

+ """

+ checkpointable_op = ['call_module', 'call_method', 'call_function', 'get_attr']

+ ckpt = self.grid_search()

+ for i, seg in enumerate(ckpt):

+ for idx in range(*seg):

+ nodes = self.node_list[idx]

+ for n in nodes:

+ if n.op in checkpointable_op:

+ n.meta['activation_checkpoint'] = i

+ return deepcopy(self.graph)

+

+ def run_chen_greedy(self, b: int = 0) -> Tuple[Set, int]:

+ """

+ This is the simple implementation of Algorithm 3 in https://arxiv.org/abs/1604.06174.

+ """

+ ckpt_intv = []

+ temp = 0

+ x = 0

+ y = 0

+ prev_idx = 2

+ for idx, nodes in enumerate(self.node_list):

+ for n in nodes:

+ n: Node

+ temp += calculate_fwd_in(n) + calculate_fwd_tmp(n)

+ y = max(y, temp)

+ if temp > b and idx > prev_idx:

+ x += calculate_fwd_in(nodes[0])

+ temp = 0

+ ckpt_intv.append((prev_idx, idx + 1))

+ prev_idx = idx + 1

+ return ckpt_intv, math.floor(math.sqrt(x * y))

+

+ def grid_search(self) -> Set:

+ """

+ Search ckpt strategy with b = 0, then run the allocation algorithm again with b = √xy.

+ Grid search over [√2/2 b, √2 b] for ``ckpt_opt`` over ``num_grids`` as in appendix A.

+ """

+ _, b_approx = self.run_chen_greedy(0)

+ b_min, b_max = math.floor(b_approx / math.sqrt(2)), math.ceil(b_approx * math.sqrt(2))

+ b_opt = math.inf

+ for b in range(b_min, b_max, (b_max - b_min) // self.num_grids):

+ ckpt_intv, b_approx = self.run_chen_greedy(b)

+ if b_approx < b_opt:

+ b_opt = b_approx

+ ckpt_opt = ckpt_intv

+ return ckpt_opt

diff --git a/colossalai/auto_parallel/checkpoint/ckpt_solver_rotor.c b/colossalai/auto_parallel/checkpoint/ckpt_solver_rotor.c

new file mode 100644

index 000000000..0fdcfd58a

--- /dev/null

+++ b/colossalai/auto_parallel/checkpoint/ckpt_solver_rotor.c

@@ -0,0 +1,197 @@

+#define PY_SSIZE_T_CLEAN

+#include

+

+long* PySequenceToLongArray(PyObject* pylist) {

+ if (!(pylist && PySequence_Check(pylist))) return NULL;

+ Py_ssize_t len = PySequence_Size(pylist);

+ long* result = (long*)calloc(len + 1, sizeof(long));

+ for (Py_ssize_t i = 0; i < len; ++i) {

+ PyObject* item = PySequence_GetItem(pylist, i);

+ result[i] = PyLong_AsLong(item);

+ Py_DECREF(item);

+ }

+ result[len] = 0;

+ return result;

+}

+

+double* PySequenceToDoubleArray(PyObject* pylist) {

+ if (!(pylist && PySequence_Check(pylist))) return NULL;

+ Py_ssize_t len = PySequence_Size(pylist);

+ double* result = (double*)calloc(len + 1, sizeof(double));

+ for (Py_ssize_t i = 0; i < len; ++i) {

+ PyObject* item = PySequence_GetItem(pylist, i);

+ result[i] = PyFloat_AsDouble(item);

+ Py_DECREF(item);

+ }

+ result[len] = 0;

+ return result;

+}

+

+long* getLongArray(PyObject* container, const char* attributeName) {

+ PyObject* sequence = PyObject_GetAttrString(container, attributeName);

+ long* result = PySequenceToLongArray(sequence);

+ Py_DECREF(sequence);

+ return result;

+}

+

+double* getDoubleArray(PyObject* container, const char* attributeName) {

+ PyObject* sequence = PyObject_GetAttrString(container, attributeName);

+ double* result = PySequenceToDoubleArray(sequence);

+ Py_DECREF(sequence);

+ return result;

+}

+

+static PyObject* computeTable(PyObject* self, PyObject* args) {

+ PyObject* chainParam;

+ int mmax;

+

+ if (!PyArg_ParseTuple(args, "Oi", &chainParam, &mmax)) return NULL;

+

+ double* ftime = getDoubleArray(chainParam, "ftime");

+ if (!ftime) return NULL;

+

+ double* btime = getDoubleArray(chainParam, "btime");

+ if (!btime) return NULL;

+

+ long* x = getLongArray(chainParam, "x");

+ if (!x) return NULL;

+

+ long* xbar = getLongArray(chainParam, "xbar");

+ if (!xbar) return NULL;

+

+ long* ftmp = getLongArray(chainParam, "btmp");

+ if (!ftmp) return NULL;

+

+ long* btmp = getLongArray(chainParam, "btmp");

+ if (!btmp) return NULL;

+

+ long chainLength = PyObject_Length(chainParam);

+ if (!chainLength) return NULL;

+

+#define COST_TABLE(m, i, l) \

+ costTable[(m) * (chainLength + 1) * (chainLength + 1) + \

+ (i) * (chainLength + 1) + (l)]

+ double* costTable = (double*)calloc(