mirror of https://github.com/hpcaitech/ColossalAI

fix readme

parent 9179d4088e
commit dccc9e14ba

@ -0,0 +1,162 @@

# Byte-compiled / optimized / DLL files
__pycache__/
*.py[cod]
*$py.class

# C extensions
*.so

# Distribution / packaging
.Python
build/
develop-eggs/
dist/
downloads/
eggs/
.eggs/
lib/
lib64/
parts/
sdist/
var/
wheels/
pip-wheel-metadata/
share/python-wheels/
*.egg-info/
.installed.cfg
*.egg
MANIFEST

# PyInstaller
# Usually these files are written by a python script from a template
# before PyInstaller builds the exe, so as to inject date/other infos into it.
*.manifest
*.spec

# Installer logs
pip-log.txt
pip-delete-this-directory.txt

# Unit test / coverage reports
htmlcov/
.tox/
.nox/
.coverage
.coverage.*
.cache
nosetests.xml
coverage.xml
*.cover
*.py,cover
.hypothesis/
.pytest_cache/

# Translations
*.mo
*.pot

# Django stuff:
*.log
local_settings.py
db.sqlite3
db.sqlite3-journal

# Flask stuff:
instance/
.webassets-cache

# Scrapy stuff:
.scrapy

# Sphinx documentation
docs/_build/
docs/.build/

# PyBuilder
target/

# Jupyter Notebook
.ipynb_checkpoints

# IPython
profile_default/
ipython_config.py

# pyenv
.python-version

# pipenv
# According to pypa/pipenv#598, it is recommended to include Pipfile.lock in version control.
# However, in case of collaboration, if having platform-specific dependencies or dependencies
# having no cross-platform support, pipenv may install dependencies that don't work, or not
# install all needed dependencies.
#Pipfile.lock

# PEP 582; used by e.g. github.com/David-OConnor/pyflow
__pypackages__/

# Celery stuff
celerybeat-schedule
celerybeat.pid

# SageMath parsed files
*.sage.py

# Environments
.env
.venv
env/
venv/
ENV/
env.bak/
venv.bak/

# Spyder project settings
.spyderproject
.spyproject

# Rope project settings
.ropeproject

# mkdocs documentation
/site

# mypy
.mypy_cache/
.dmypy.json
dmypy.json

# Pyre type checker
.pyre/

# IDE
.idea/
.vscode/

# macos
*.DS_Store
#data/

docs/.build

# pytorch checkpoint
*.pt

# wandb log
examples/wandb/
examples/logs/
examples/output/
examples/training_scripts/logs
examples/training_scripts/wandb
examples/training_scripts/output

examples/awesome-chatgpt-prompts/
temp/

# ColossalChat
applications/ColossalChat/logs
applications/ColossalChat/models
applications/ColossalChat/sft_data
applications/ColossalChat/prompt_data
applications/ColossalChat/preference_data
applications/ColossalChat/temp

@ -0,0 +1,202 @@

Copyright 2021- HPC-AI Technology Inc. All rights reserved.
Apache License
Version 2.0, January 2004
http://www.apache.org/licenses/

TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

1. Definitions.

"License" shall mean the terms and conditions for use, reproduction,
and distribution as defined by Sections 1 through 9 of this document.

"Licensor" shall mean the copyright owner or entity authorized by
the copyright owner that is granting the License.

"Legal Entity" shall mean the union of the acting entity and all
other entities that control, are controlled by, or are under common
control with that entity. For the purposes of this definition,
"control" means (i) the power, direct or indirect, to cause the
direction or management of such entity, whether by contract or
otherwise, or (ii) ownership of fifty percent (50%) or more of the
outstanding shares, or (iii) beneficial ownership of such entity.

"You" (or "Your") shall mean an individual or Legal Entity
exercising permissions granted by this License.

"Source" form shall mean the preferred form for making modifications,
including but not limited to software source code, documentation
source, and configuration files.

"Object" form shall mean any form resulting from mechanical
transformation or translation of a Source form, including but
not limited to compiled object code, generated documentation,
and conversions to other media types.

"Work" shall mean the work of authorship, whether in Source or
Object form, made available under the License, as indicated by a
copyright notice that is included in or attached to the work
(an example is provided in the Appendix below).

"Derivative Works" shall mean any work, whether in Source or Object
form, that is based on (or derived from) the Work and for which the
editorial revisions, annotations, elaborations, or other modifications
represent, as a whole, an original work of authorship. For the purposes
of this License, Derivative Works shall not include works that remain
separable from, or merely link (or bind by name) to the interfaces of,
the Work and Derivative Works thereof.

"Contribution" shall mean any work of authorship, including
the original version of the Work and any modifications or additions
to that Work or Derivative Works thereof, that is intentionally
submitted to Licensor for inclusion in the Work by the copyright owner
or by an individual or Legal Entity authorized to submit on behalf of
the copyright owner. For the purposes of this definition, "submitted"
means any form of electronic, verbal, or written communication sent
to the Licensor or its representatives, including but not limited to
communication on electronic mailing lists, source code control systems,
and issue tracking systems that are managed by, or on behalf of, the
Licensor for the purpose of discussing and improving the Work, but
excluding communication that is conspicuously marked or otherwise
designated in writing by the copyright owner as "Not a Contribution."

"Contributor" shall mean Licensor and any individual or Legal Entity
on behalf of whom a Contribution has been received by Licensor and
subsequently incorporated within the Work.

2. Grant of Copyright License. Subject to the terms and conditions of
this License, each Contributor hereby grants to You a perpetual,
worldwide, non-exclusive, no-charge, royalty-free, irrevocable
copyright license to reproduce, prepare Derivative Works of,
publicly display, publicly perform, sublicense, and distribute the
Work and such Derivative Works in Source or Object form.

3. Grant of Patent License. Subject to the terms and conditions of
this License, each Contributor hereby grants to You a perpetual,
worldwide, non-exclusive, no-charge, royalty-free, irrevocable
(except as stated in this section) patent license to make, have made,
use, offer to sell, sell, import, and otherwise transfer the Work,
where such license applies only to those patent claims licensable
by such Contributor that are necessarily infringed by their
Contribution(s) alone or by combination of their Contribution(s)
with the Work to which such Contribution(s) was submitted. If You
institute patent litigation against any entity (including a
cross-claim or counterclaim in a lawsuit) alleging that the Work
or a Contribution incorporated within the Work constitutes direct
or contributory patent infringement, then any patent licenses
granted to You under this License for that Work shall terminate
as of the date such litigation is filed.

4. Redistribution. You may reproduce and distribute copies of the
Work or Derivative Works thereof in any medium, with or without
modifications, and in Source or Object form, provided that You
meet the following conditions:

(a) You must give any other recipients of the Work or
Derivative Works a copy of this License; and

(b) You must cause any modified files to carry prominent notices
stating that You changed the files; and

(c) You must retain, in the Source form of any Derivative Works
that You distribute, all copyright, patent, trademark, and
attribution notices from the Source form of the Work,
excluding those notices that do not pertain to any part of
the Derivative Works; and

(d) If the Work includes a "NOTICE" text file as part of its
distribution, then any Derivative Works that You distribute must
include a readable copy of the attribution notices contained
within such NOTICE file, excluding those notices that do not
pertain to any part of the Derivative Works, in at least one
of the following places: within a NOTICE text file distributed
as part of the Derivative Works; within the Source form or
documentation, if provided along with the Derivative Works; or,
within a display generated by the Derivative Works, if and
wherever such third-party notices normally appear. The contents
of the NOTICE file are for informational purposes only and
do not modify the License. You may add Your own attribution
notices within Derivative Works that You distribute, alongside
or as an addendum to the NOTICE text from the Work, provided
that such additional attribution notices cannot be construed
as modifying the License.

You may add Your own copyright statement to Your modifications and
may provide additional or different license terms and conditions
for use, reproduction, or distribution of Your modifications, or
for any such Derivative Works as a whole, provided Your use,
reproduction, and distribution of the Work otherwise complies with
the conditions stated in this License.

5. Submission of Contributions. Unless You explicitly state otherwise,
any Contribution intentionally submitted for inclusion in the Work
by You to the Licensor shall be under the terms and conditions of
this License, without any additional terms or conditions.
Notwithstanding the above, nothing herein shall supersede or modify
the terms of any separate license agreement you may have executed
with Licensor regarding such Contributions.

6. Trademarks. This License does not grant permission to use the trade
names, trademarks, service marks, or product names of the Licensor,
except as required for reasonable and customary use in describing the
origin of the Work and reproducing the content of the NOTICE file.

7. Disclaimer of Warranty. Unless required by applicable law or
agreed to in writing, Licensor provides the Work (and each
Contributor provides its Contributions) on an "AS IS" BASIS,
WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or
implied, including, without limitation, any warranties or conditions
of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A
PARTICULAR PURPOSE. You are solely responsible for determining the
appropriateness of using or redistributing the Work and assume any
risks associated with Your exercise of permissions under this License.

8. Limitation of Liability. In no event and under no legal theory,
whether in tort (including negligence), contract, or otherwise,
unless required by applicable law (such as deliberate and grossly
negligent acts) or agreed to in writing, shall any Contributor be
liable to You for damages, including any direct, indirect, special,
incidental, or consequential damages of any character arising as a
result of this License or out of the use or inability to use the
Work (including but not limited to damages for loss of goodwill,
work stoppage, computer failure or malfunction, or any and all
other commercial damages or losses), even if such Contributor
has been advised of the possibility of such damages.

9. Accepting Warranty or Additional Liability. While redistributing
the Work or Derivative Works thereof, You may choose to offer,
and charge a fee for, acceptance of support, warranty, indemnity,
or other liability obligations and/or rights consistent with this
License. However, in accepting such obligations, You may act only
on Your own behalf and on Your sole responsibility, not on behalf
of any other Contributor, and only if You agree to indemnify,
defend, and hold each Contributor harmless for any liability
incurred by, or claims asserted against, such Contributor by reason
of your accepting any such warranty or additional liability.

END OF TERMS AND CONDITIONS

APPENDIX: How to apply the Apache License to your work.

To apply the Apache License to your work, attach the following
boilerplate notice, with the fields enclosed by brackets "[]"
replaced with your own identifying information. (Don't include
the brackets!) The text should be enclosed in the appropriate
comment syntax for the file format. We also recommend that a
file or class name and description of purpose be included on the
same "printed page" as the copyright notice for easier
identification within third-party archives.

Copyright 2021- HPC-AI Technology Inc.

Licensed under the Apache License, Version 2.0 (the "License");
you may not use this file except in compliance with the License.
You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software
distributed under the License is distributed on an "AS IS" BASIS,
WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
See the License for the specific language governing permissions and
limitations under the License.

@ -0,0 +1,601 @@

<h1 align="center">
<img width="auto" height="100px" src="https://raw.githubusercontent.com/hpcaitech/public_assets/main/applications/chat/logo_coati.png"/>
<br/>
<span>ColossalChat</span>
</h1>

## Table of Contents

- [Table of Contents](#table-of-contents)
- [What is ColossalChat and Coati ?](#what-is-colossalchat-and-coati-)
- [Online demo](#online-demo)
- [Install](#install)
  - [Install the environment](#install-the-environment)
  - [Install the Transformers](#install-the-transformers)
- [How to use?](#how-to-use)
  - [Supervised datasets collection](#step-1-data-collection)
  - [RLHF Training Stage1 - Supervised instructs tuning](#rlhf-training-stage1---supervised-instructs-tuning)
  - [RLHF Training Stage2 - Training reward model](#rlhf-training-stage2---training-reward-model)
  - [RLHF Training Stage3 - Training model with reinforcement learning by human feedback](#rlhf-training-stage3---proximal-policy-optimization)
  - [Inference Quantization and Serving - After Training](#inference-quantization-and-serving---after-training)
- [Coati7B examples](#coati7b-examples)
  - [Generation](#generation)
  - [Open QA](#open-qa)
  - [Limitation for LLaMA-finetuned models](#limitation)
  - [Limitation of dataset](#limitation)
- [Alternative Option For RLHF: DPO](#alternative-option-for-rlhf-direct-preference-optimization)
- [Alternative Option For RLHF: SimPO](#alternative-option-for-rlhf-simple-preference-optimization-simpo)
- [Alternative Option For RLHF: ORPO](#alternative-option-for-rlhf-odds-ratio-preference-optimization-orpo)
- [Alternative Option For RLHF: KTO](#alternative-option-for-rlhf-kahneman-tversky-optimization-kto)
- [FAQ](#faq)
  - [How to save/load checkpoint](#faq)
  - [How to train with limited resources](#faq)
- [The Plan](#the-plan)
  - [Real-time progress](#real-time-progress)
- [Invitation to open-source contribution](#invitation-to-open-source-contribution)
- [Quick Preview](#quick-preview)
- [Authors](#authors)
- [Citations](#citations)
- [Licenses](#licenses)

---

## What Is ColossalChat And Coati ?

[ColossalChat](https://github.com/hpcaitech/ColossalAI/tree/main/applications/Chat) is a project that implements LLMs with RLHF, powered by the [Colossal-AI](https://github.com/hpcaitech/ColossalAI) project.

Coati stands for `ColossalAI Talking Intelligence`. It is the name of the module implemented in this project and also the name of the large language model developed by the ColossalChat project.

The Coati package provides a unified large language model framework that implements the following functions:

- Supports comprehensive large-model training acceleration capabilities for ColossalAI, without requiring knowledge of complex distributed training algorithms
- Supervised datasets collection
- Supervised instructions fine-tuning
- Training reward model
- Reinforcement learning with human feedback
- Quantization inference
- Fast model deployment
- Integration with the Hugging Face ecosystem and a high degree of model customization

<div align="center">
<p align="center">
<img src="https://raw.githubusercontent.com/hpcaitech/public_assets/main/applications/chatgpt/chatgpt.png" width=700/>
</p>

Image source: https://openai.com/blog/chatgpt

</div>

**As Colossal-AI is undergoing some major updates, this project will be actively maintained to stay in line with the Colossal-AI project.**

More details can be found in the latest news.

- [2023/03] [ColossalChat: An Open-Source Solution for Cloning ChatGPT With a Complete RLHF Pipeline](https://medium.com/@yangyou_berkeley/colossalchat-an-open-source-solution-for-cloning-chatgpt-with-a-complete-rlhf-pipeline-5edf08fb538b)
- [2023/02] [Open Source Solution Replicates ChatGPT Training Process! Ready to go with only 1.6GB GPU Memory](https://www.hpc-ai.tech/blog/colossal-ai-chatgpt)

## Online demo

<div align="center">
<a href="https://www.youtube.com/watch?v=HcTiHzApHm0">
<img src="https://raw.githubusercontent.com/hpcaitech/public_assets/main/applications/chat/ColossalChat%20YouTube.png" width="700" />
</a>
</div>

[ColossalChat](https://github.com/hpcaitech/ColossalAI/tree/main/applications/Chat): An open-source solution for cloning [ChatGPT](https://openai.com/blog/chatgpt/) with a complete RLHF pipeline.
[[code]](https://github.com/hpcaitech/ColossalAI/tree/main/applications/Chat)
[[blog]](https://medium.com/@yangyou_berkeley/colossalchat-an-open-source-solution-for-cloning-chatgpt-with-a-complete-rlhf-pipeline-5edf08fb538b)
[[demo]](https://www.youtube.com/watch?v=HcTiHzApHm0)
[[tutorial]](https://www.youtube.com/watch?v=-qFBZFmOJfg)

<p id="ColossalChat-Speed" align="center">
<img src="https://raw.githubusercontent.com/hpcaitech/public_assets/main/applications/chat/ColossalChat%20Speed.jpg" width=450/>
</p>

> DeepSpeedChat performance comes from its blog post of April 12, 2023. ColossalChat performance can be reproduced on an AWS p4d.24xlarge node with 8 A100-40G GPUs with the following command: `torchrun --standalone --nproc_per_node 8 benchmark_opt_lora_dummy.py --num_collect_steps 1 --use_kernels --strategy colossalai_zero2 --experience_batch_size 64 --train_batch_size 32`

## Install

### Install the Environment

```bash
# Create new environment (Python >= 3.8.7)
conda create -n colossal-chat python=3.10.9
conda activate colossal-chat

# Install flash-attention
# $FLASH_ATTENTION_ROOT is the path of the cloned flash-attention repository
git clone -b v2.0.5 https://github.com/Dao-AILab/flash-attention.git
cd $FLASH_ATTENTION_ROOT/
pip install .
cd $FLASH_ATTENTION_ROOT/csrc/xentropy
pip install .
cd $FLASH_ATTENTION_ROOT/csrc/layer_norm
pip install .
cd $FLASH_ATTENTION_ROOT/csrc/rotary
pip install .

# Clone ColossalAI
git clone https://github.com/hpcaitech/ColossalAI.git

# Install ColossalAI
# $COLOSSAL_AI_ROOT is the path of the cloned ColossalAI repository
cd $COLOSSAL_AI_ROOT
BUILD_EXT=1 pip install .

# Install ColossalChat
cd $COLOSSAL_AI_ROOT/applications/Chat
pip install .
```

## How To Use?

### RLHF Training Stage1 - Supervised Instructs Tuning

Stage1 is supervised instruction fine-tuning (SFT). This step is a crucial part of the RLHF training process: a model is trained on human-provided instructions to learn the initial behavior for the task at hand. Here's a detailed guide on how to SFT your LLM with ColossalChat. More details can be found in the [example guideline](./examples/README.md).

#### Step 1: Data Collection
The first step in Stage 1 is to collect a dataset of human demonstrations in the following format.

```json
[
    {"messages":
        [
            {
                "from": "user",
                "content": "what are some pranks with a pen i can do?"
            },
            {
                "from": "assistant",
                "content": "Are you looking for practical joke ideas?"
            }
        ]
    }
]
```
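
Before preprocessing, it can help to check that a collected file really has this shape. The following is a minimal sketch, not part of ColossalChat: the `validate_sft_dataset` helper is hypothetical, and accepting both `user` and `human` as speaker labels is an assumption based on the examples in this README.

```python
import json

# Assumption: these speaker labels mirror the examples in this README.
ALLOWED_SPEAKERS = {"user", "human", "assistant"}


def validate_sft_dataset(samples):
    """Return the number of samples after checking the `messages` layout."""
    for idx, sample in enumerate(samples):
        messages = sample.get("messages")
        if not isinstance(messages, list) or not messages:
            raise ValueError(f"sample {idx}: 'messages' must be a non-empty list")
        for turn in messages:
            if turn.get("from") not in ALLOWED_SPEAKERS:
                raise ValueError(f"sample {idx}: unknown speaker {turn.get('from')!r}")
            if not isinstance(turn.get("content"), str):
                raise ValueError(f"sample {idx}: 'content' must be a string")
    return len(samples)


raw = """
[
    {"messages": [
        {"from": "user", "content": "what are some pranks with a pen i can do?"},
        {"from": "assistant", "content": "Are you looking for practical joke ideas?"}
    ]}
]
"""
samples = json.loads(raw)
print(validate_sft_dataset(samples))
```

Note that `json.loads` rejects trailing commas, so a file has to be strict JSON for this check to run.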

#### Step 2: Preprocessing
Once you have collected your SFT dataset, you will need to preprocess it. This involves four steps: data cleaning, data deduplication, formatting and tokenization. In this section, we will focus on formatting and tokenization.

We provide a flexible way to set the conversation template used to format chat data, based on Hugging Face's chat template feature. Please follow the [example guideline](./examples/README.md) on how to format and tokenize data.

#### Step 3: Training
Choose a suitable model architecture for your task. Note that your model should be compatible with the tokenizer that you used to tokenize the SFT dataset. You can run [train_sft.sh](./examples/training_scripts/train_sft.sh) to start supervised instruction fine-tuning. More details can be found in the [example guideline](./examples/README.md).

### RLHF Training Stage2 - Training Reward Model

Stage2 trains a reward model. Human annotators rank different outputs for the same prompt, and the resulting scores supervise the training of the reward model.

#### Step 1: Data Collection
The preference dataset format used to train the reward model is shown below.

```json
[
    {"context": [
            {
                "from": "human",
                "content": "Introduce butterflies species in Oregon."
            }
        ],
        "chosen": [
            {
                "from": "assistant",
                "content": "About 150 species of butterflies live in Oregon, with about 100 species are moths..."
            }
        ],
        "rejected": [
            {
                "from": "assistant",
                "content": "Are you interested in just the common butterflies? There are a few common ones which will be easy to find..."
            }
        ]
    }
]
```
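
To make the role of the `chosen` and `rejected` fields concrete, here is a minimal sketch of a pairwise ranking loss that is commonly used to train reward models on such pairs. It is an illustration under assumptions, not necessarily the loss ColossalChat uses; `pairwise_ranking_loss` and its arguments (scalar rewards already produced by some reward model) are hypothetical names.

```python
import math


def softplus(x):
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def pairwise_ranking_loss(chosen_reward, rejected_reward):
    """-log(sigmoid(chosen - rejected)), written as softplus(rejected - chosen)."""
    return softplus(rejected_reward - chosen_reward)


# The loss is small when the chosen answer scores higher, large otherwise.
print(pairwise_ranking_loss(2.0, 0.0))
print(pairwise_ranking_loss(0.0, 2.0))
```

Minimizing this loss pushes the reward of the chosen response above that of the rejected one for the same context.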

#### Step 2: Preprocessing
As in step 2 of the SFT stage, we format the reward data into the same structured format. You can run [prepare_preference_dataset.sh](./examples/data_preparation_scripts/prepare_preference_dataset.sh) to prepare the preference data for reward model training.

#### Step 3: Training
You can run [train_rm.sh](./examples/training_scripts/train_rm.sh) to start the reward model training. More details can be found in the [example guideline](./examples/README.md).

### RLHF Training Stage3 - Proximal Policy Optimization

Stage3 uses a reinforcement learning algorithm, Proximal Policy Optimization (PPO). It is the most complex part of the training process:

<p align="center">
<img src="https://raw.githubusercontent.com/hpcaitech/public_assets/main/applications/chat/stage-3.jpeg" width=800/>
</p>

#### Step 1: Data Collection
PPO uses two kinds of training data: the prompt data and the SFT data (optional). The prompt dataset is mandatory. Each sample in it ends with a line from "human", so the "assistant" needs to generate a response to the "human". You can still use a conversation that ends with a line from the "assistant"; in that case, the last line is dropped. Here is an example of the prompt dataset format.

```json
[
    {"messages":
        [
            {
                "from": "human",
                "content": "what are some pranks with a pen i can do?"
            }
        ]
    }
]
```
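
The rule about conversations that end with an "assistant" line can be sketched as follows. This is an illustrative helper only; `to_prompt` is a hypothetical name and is not taken from the data preparation scripts.

```python
def to_prompt(messages):
    """Return the conversation without a trailing assistant turn."""
    if messages and messages[-1]["from"] == "assistant":
        # The last assistant line is dropped so the model has to answer the human.
        return messages[:-1]
    return list(messages)


conversation = [
    {"from": "human", "content": "what are some pranks with a pen i can do?"},
    {"from": "assistant", "content": "Are you looking for practical joke ideas?"},
]
prompt = to_prompt(conversation)
print(prompt[-1]["from"])
```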

#### Step 2: Data Preprocessing
To prepare the prompt dataset for PPO training, simply run [prepare_prompt_dataset.sh](./examples/data_preparation_scripts/prepare_prompt_dataset.sh).

#### Step 3: Training
You can run [train_ppo.sh](./examples/training_scripts/train_ppo.sh) to start PPO training. Some arguments specific to PPO are listed below; please refer to the training configuration section for the other options. More details can be found in the [example guideline](./examples/README.md).

```bash
--pretrain $PRETRAINED_MODEL_PATH \
--rm_pretrain $PRETRAINED_MODEL_PATH \ # reward model architecture
--tokenizer_dir $PRETRAINED_TOKENIZER_PATH \
--rm_checkpoint_path $REWARD_MODEL_PATH \ # reward model checkpoint path
--prompt_dataset ${prompt_dataset[@]} \ # List of string, the prompt dataset
--ptx_dataset ${ptx_dataset[@]} \ # List of string, the SFT data used in the SFT stage
--ptx_batch_size 1 \ # batch size for calculating the ptx loss
--ptx_coef 0.0 \ # non-zero if ptx loss is enabled
--num_episodes 2000 \ # number of episodes to train
--num_collect_steps 1 \
--num_update_steps 1 \
--experience_batch_size 8 \
--train_batch_size 4 \
--accumulation_steps 2
```

Each episode has two phases: the collect phase and the update phase. During the collect phase, we collect experiences (answers generated by the actor) and store them in the ExperienceBuffer. The data in the ExperienceBuffer is then used during the update phase to update the parameters of the actor and the critic.

- Without tensor parallelism,
```
experience buffer size
= num_process * num_collect_steps * experience_batch_size
= train_batch_size * accumulation_steps * num_process
```

- With tensor parallelism,
```
num_tp_group = num_process / tp
experience buffer size
= num_tp_group * num_collect_steps * experience_batch_size
= train_batch_size * accumulation_steps * num_tp_group
```
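
The two identities above can be checked before launching a run. The sketch below is a hypothetical helper, not part of the training scripts; it only restates the formulas, with `tp=1` standing for the case without tensor parallelism.

```python
def experience_buffer_size(num_process, num_collect_steps, experience_batch_size, tp=1):
    """Number of experiences collected per episode (tp=1: no tensor parallelism)."""
    if num_process % tp != 0:
        raise ValueError("num_process must be divisible by tp")
    num_tp_group = num_process // tp
    return num_tp_group * num_collect_steps * experience_batch_size


def batch_config_is_consistent(num_process, num_collect_steps, experience_batch_size,
                               train_batch_size, accumulation_steps, tp=1):
    """True if the collected experiences match what the update phase consumes."""
    num_tp_group = num_process // tp
    collected = experience_buffer_size(num_process, num_collect_steps,
                                       experience_batch_size, tp)
    consumed = train_batch_size * accumulation_steps * num_tp_group
    return collected == consumed


# Values from the argument list above, assuming 8 processes.
print(experience_buffer_size(8, 1, 8))
print(batch_config_is_consistent(8, 1, 8, train_batch_size=4, accumulation_steps=2))
```

With the arguments shown earlier (`experience_batch_size 8`, `train_batch_size 4`, `accumulation_steps 2`, `num_collect_steps 1`), both sides agree for any process count, since 1 * 8 = 4 * 2.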

## Alternative Option For RLHF: Direct Preference Optimization (DPO)
For those seeking an alternative to Reinforcement Learning from Human Feedback (RLHF), Direct Preference Optimization (DPO) presents a compelling option. As detailed in this [paper](https://arxiv.org/abs/2305.18290), DPO offers a low-cost way to perform RLHF and usually requires fewer computational resources than PPO. Read this [README](./examples/README.md) for more information.
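
As a rough illustration of why DPO needs no separate reward model or PPO loop, the per-pair objective from the paper can be written in a few lines. This is a sketch on plain floats, not the repository's training code; the function and argument names are hypothetical, and each `*_logp` value is assumed to be the summed log-probability of a whole response.

```python
import math


def log_sigmoid(x):
    """Numerically stable log(sigmoid(x))."""
    return -(max(-x, 0.0) + math.log1p(math.exp(-abs(x))))


def dpo_loss(policy_chosen_logp, policy_rejected_logp,
             ref_chosen_logp, ref_rejected_logp, beta=0.1):
    """DPO loss for one preference pair, given sequence log-probabilities."""
    chosen_logratio = policy_chosen_logp - ref_chosen_logp
    rejected_logratio = policy_rejected_logp - ref_rejected_logp
    return -log_sigmoid(beta * (chosen_logratio - rejected_logratio))


# If the policy equals the reference model, the loss is log(2).
print(dpo_loss(-12.0, -15.0, -12.0, -15.0))
# The loss drops once the policy favors the chosen response more than the reference does.
print(dpo_loss(-10.0, -18.0, -12.0, -15.0))
```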

### DPO Training Stage1 - Supervised Instructs Tuning

Please refer to the [SFT section](#rlhf-training-stage1---supervised-instructs-tuning) in the PPO part.

### DPO Training Stage2 - DPO Training
#### Step 1: Data Collection & Preparation
For DPO training, you only need the preference dataset. Please follow the instructions in the [preference dataset preparation section](#rlhf-training-stage2---training-reward-model) to prepare the preference data for DPO training.

#### Step 2: Training
You can run [train_dpo.sh](./examples/training_scripts/train_dpo.sh) to start DPO training. More details can be found in the [example guideline](./examples/README.md).

## Alternative Option For RLHF: Simple Preference Optimization (SimPO)
Simple Preference Optimization (SimPO) from this [paper](https://arxiv.org/pdf/2405.14734) is similar to DPO, but it drops the reference model, which makes training more efficient. It also adds a reward shaping term called the target reward margin to enhance training stability, and it uses length normalization to better align with the inference process. Read this [README](./examples/README.md) for more information.
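
The three ingredients named above (no reference model, a target reward margin, length normalization) show up directly in the per-pair loss from the paper. The following is a sketch on plain floats with hypothetical names; the default `beta` and `gamma` values are placeholders, not recommended settings.

```python
import math


def log_sigmoid(x):
    """Numerically stable log(sigmoid(x))."""
    return -(max(-x, 0.0) + math.log1p(math.exp(-abs(x))))


def simpo_loss(chosen_logp, chosen_len, rejected_logp, rejected_len,
               beta=2.0, gamma=1.0):
    """SimPO loss for one pair: length-normalized rewards minus a target margin."""
    chosen_reward = beta * chosen_logp / chosen_len        # average log-prob per token
    rejected_reward = beta * rejected_logp / rejected_len  # no reference model involved
    return -log_sigmoid(chosen_reward - rejected_reward - gamma)


# Same per-token log-probability, different lengths: the rewards tie.
print(simpo_loss(-20.0, 20, -10.0, 10, gamma=0.0))
# A positive target margin demands a clear gap between chosen and rejected.
print(simpo_loss(-20.0, 20, -10.0, 10, gamma=1.0))
```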

|

||||

|

||||

## Alternative Option For RLHF: Odds Ratio Preference Optimization (ORPO)

|

||||

Odds Ratio Preference Optimization (ORPO) from this [paper](https://arxiv.org/pdf/2403.07691) is a reference-model-free alignment method that combines the SFT loss with a reinforcement learning loss computed from an odds-ratio-based implicit reward, making training more efficient and stable. Read this [README](./examples/README.md) for more information.
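
As a rough illustration of that mixture, the sketch below follows the formulation in the ORPO paper for a single preference pair: an SFT term on the chosen response plus a weighted odds-ratio term. It is plain Python with assumed names (`orpo_loss`, `lam`), takes average per-token log-probabilities as input, and is not the Coati implementation.

```python
import math


def log_sigmoid(x: float) -> float:
    """Numerically stable log(sigmoid(x))."""
    if x >= 0:
        return -math.log1p(math.exp(-x))
    return x - math.log1p(math.exp(x))


def log_odds(avg_logp: float) -> float:
    """log(p / (1 - p)) with p = exp(avg_logp); requires avg_logp < 0."""
    return avg_logp - math.log1p(-math.exp(avg_logp))


def orpo_loss(chosen_avg_logp, rejected_avg_logp, lam=0.5):
    """SFT loss on the chosen response plus lam times the odds-ratio loss."""
    sft_loss = -chosen_avg_logp
    odds_ratio_loss = -log_sigmoid(log_odds(chosen_avg_logp) - log_odds(rejected_avg_logp))
    return sft_loss + lam * odds_ratio_loss


print(orpo_loss(math.log(0.8), math.log(0.2)))
```

The SFT term keeps the model fitting the chosen responses, while the odds-ratio term pushes probability mass away from the rejected ones without a reference model.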

|

||||

|

||||

## Alternative Option For RLHF: Kahneman-Tversky Optimization (KTO)

|

||||

We support the method introduced in the paper [KTO: Model Alignment as Prospect Theoretic Optimization](https://arxiv.org/pdf/2402.01306) (KTO), an alignment method that directly maximizes the "human utility" of generation results. Read this [README](./examples/README.md) for more information.

|

||||

|

||||

### Inference Quantization and Serving - After Training

|

||||

|

||||

We provide an online inference server and a benchmark. We aim to run inference on a single GPU, so quantization is essential when using large models.

|

||||

|

||||

We support 8-bit quantization (RTN), 4-bit quantization (GPTQ), and FP16 inference.
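
For readers unfamiliar with the term, RTN (round-to-nearest) maps each weight to the closest point on a fixed integer grid. The sketch below shows one common variant, symmetric absmax scaling to the int8 range, in plain Python. It is an assumption-laden illustration of the general technique, not the quantization code used by the inference server.

```python
def rtn_quantize_int8(weights):
    """Symmetric absmax round-to-nearest quantization of a list of floats."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    # Round each scaled weight to the nearest integer and clamp to [-127, 127].
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Map the integers back to approximate floating-point weights."""
    return [q * scale for q in quantized]


weights = [0.31, -1.20, 0.05, 0.77]
quantized, scale = rtn_quantize_int8(weights)
restored = dequantize(quantized, scale)
print(quantized, restored)
```

Each restored weight differs from the original by at most half a quantization step (`scale / 2`), which is the accuracy traded for the smaller memory footprint.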

|

||||

|

||||

Online inference server scripts can help you deploy your own services.

|

||||

For more details, see [`inference/`](https://github.com/hpcaitech/ColossalAI/tree/main/applications/Chat/inference).

|

||||

|

||||

## Coati7B examples

|

||||

|

||||

### Generation

|

||||

|

||||

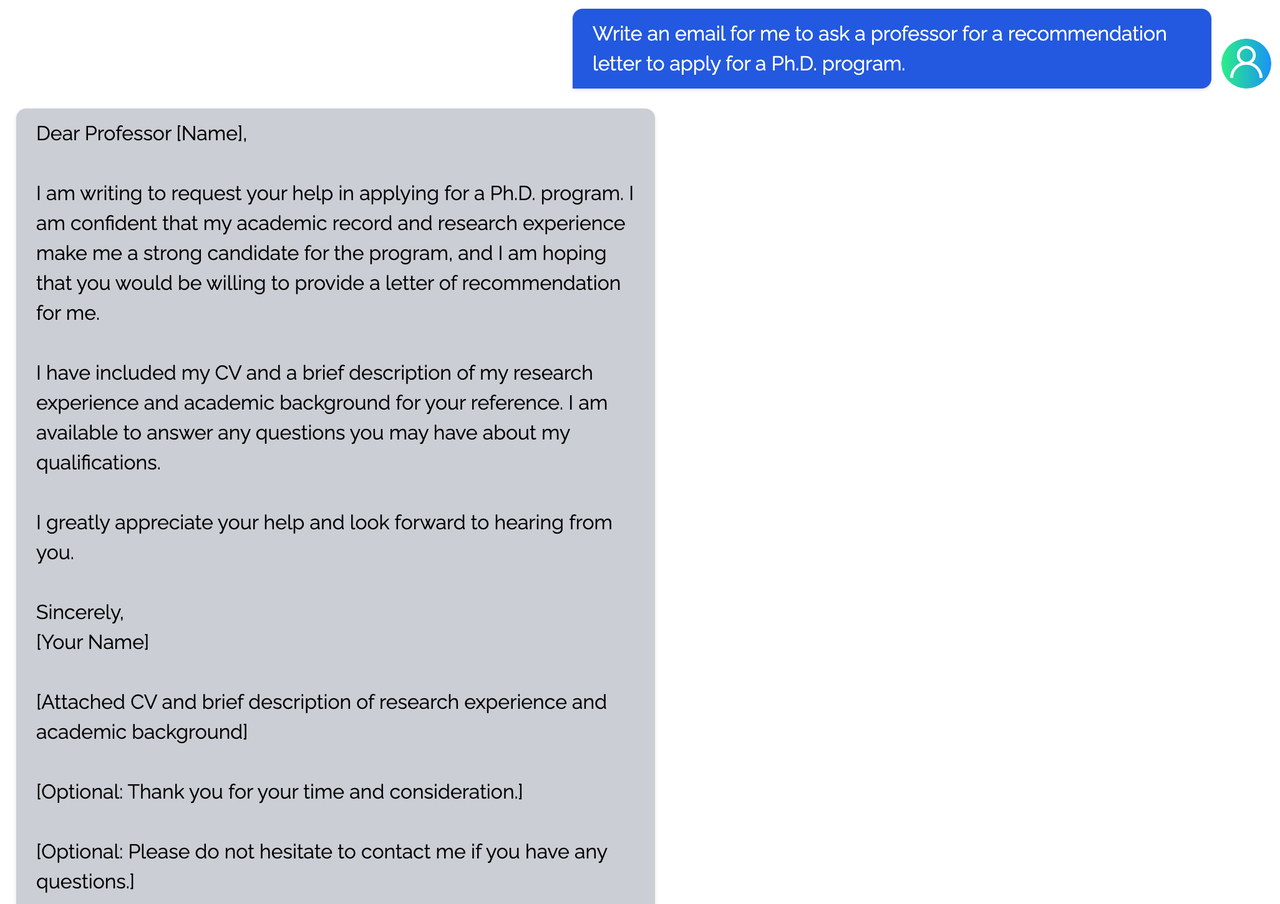

<details><summary><b>E-mail</b></summary>

|

||||

|

||||

|

||||

|

||||

</details>

|

||||

|

||||

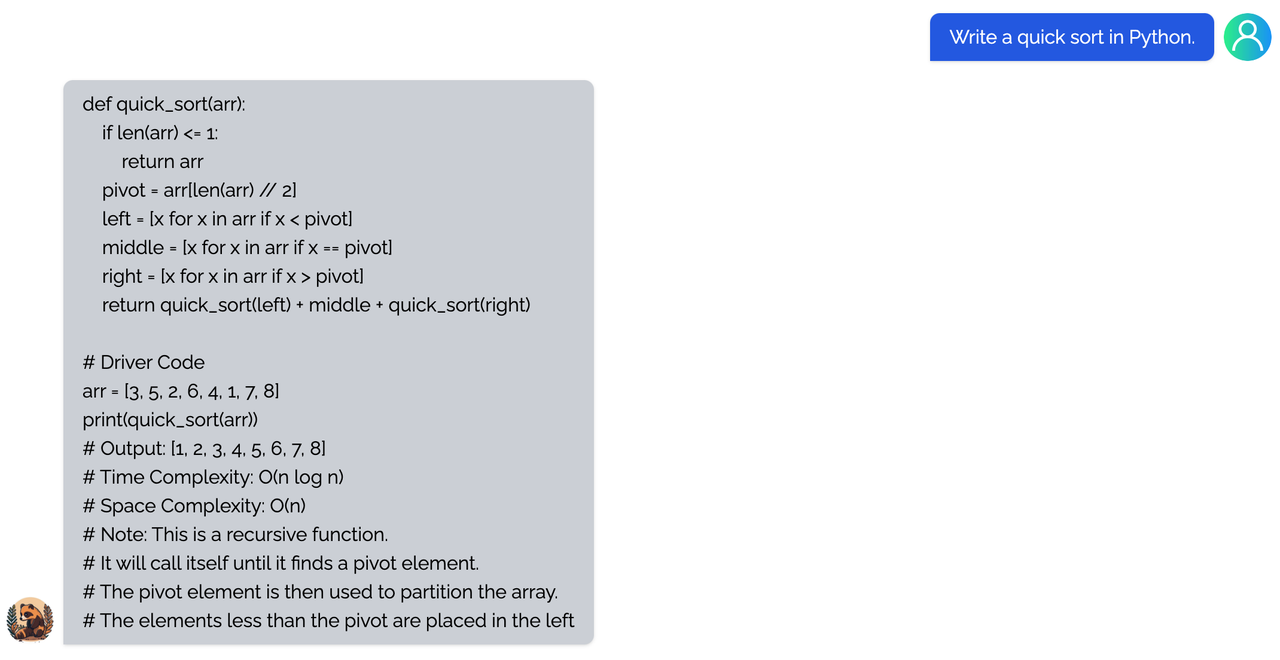

<details><summary><b>coding</b></summary>

|

||||

|

||||

|

||||

|

||||

</details>

|

||||

|

||||

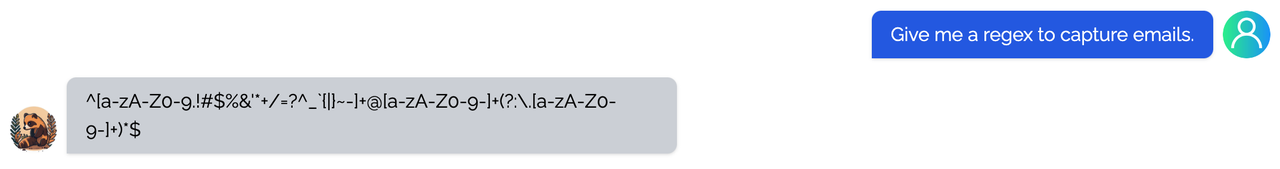

<details><summary><b>regex</b></summary>

|

||||

|

||||

|

||||

|

||||

</details>

|

||||

|

||||

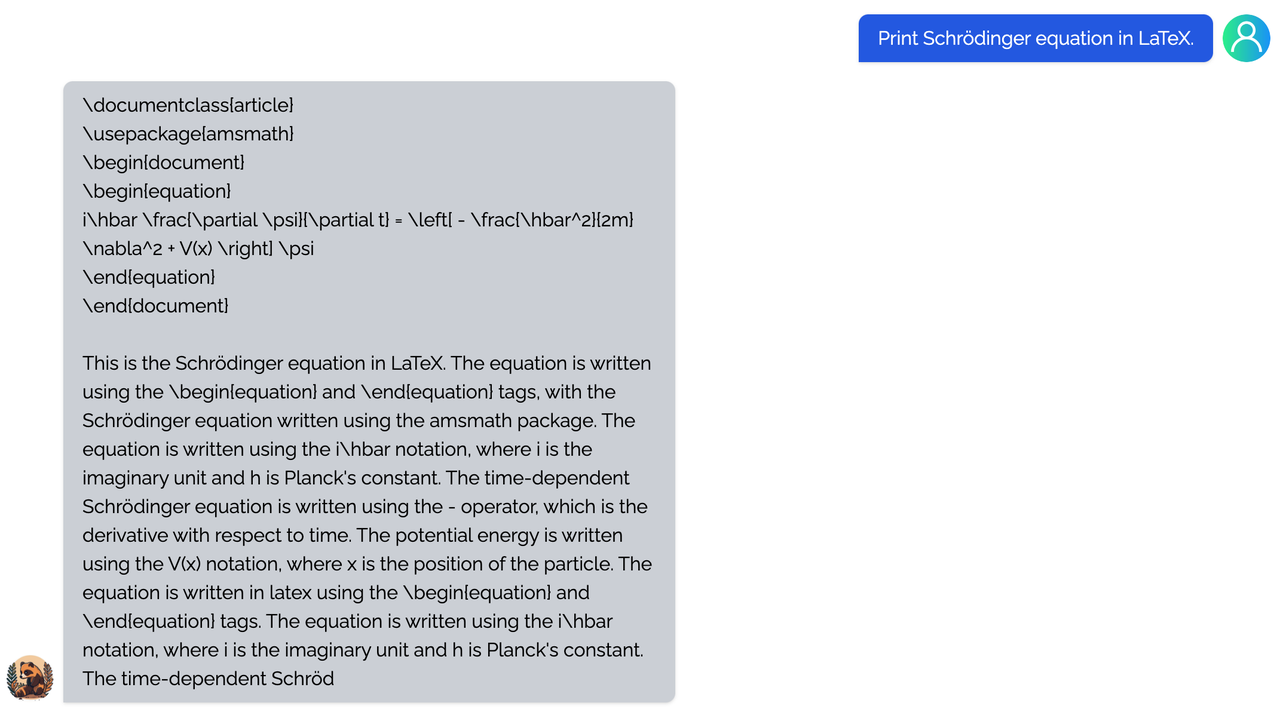

<details><summary><b>Tex</b></summary>

|

||||

|

||||

|

||||

|

||||

</details>

|

||||

|

||||

<details><summary><b>writing</b></summary>

|

||||

|

||||

|

||||

|

||||

</details>

|

||||

|

||||

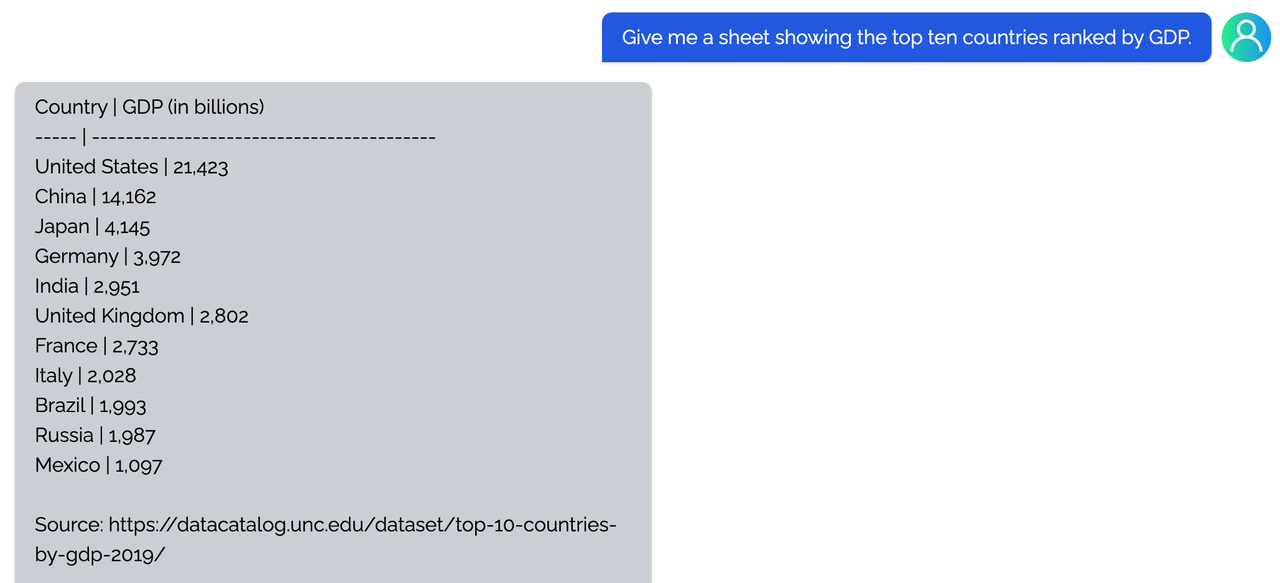

<details><summary><b>Table</b></summary>

|

||||

|

||||

|

||||

|

||||

</details>

|

||||

|

||||

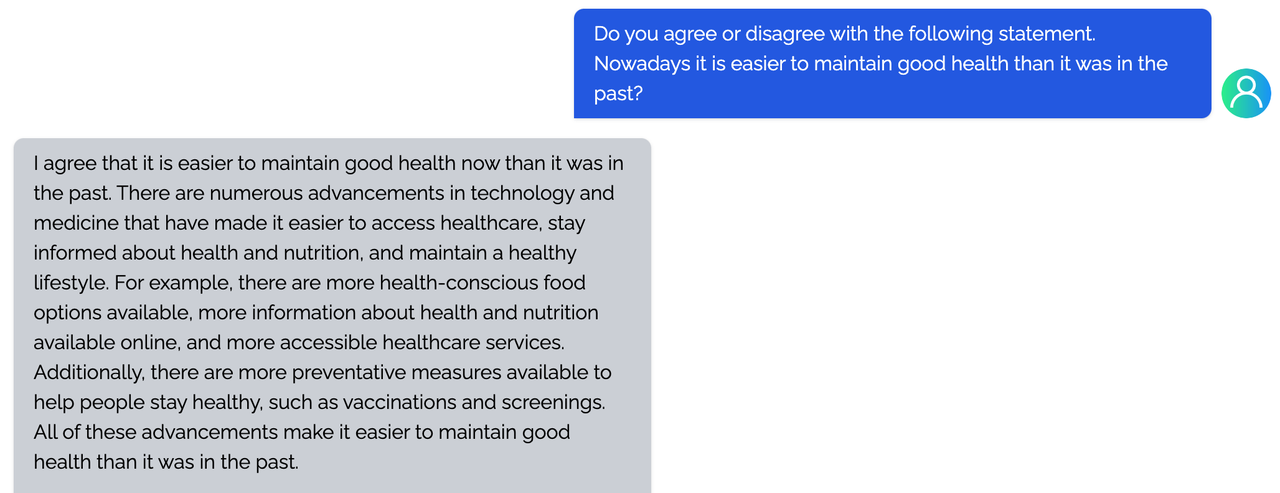

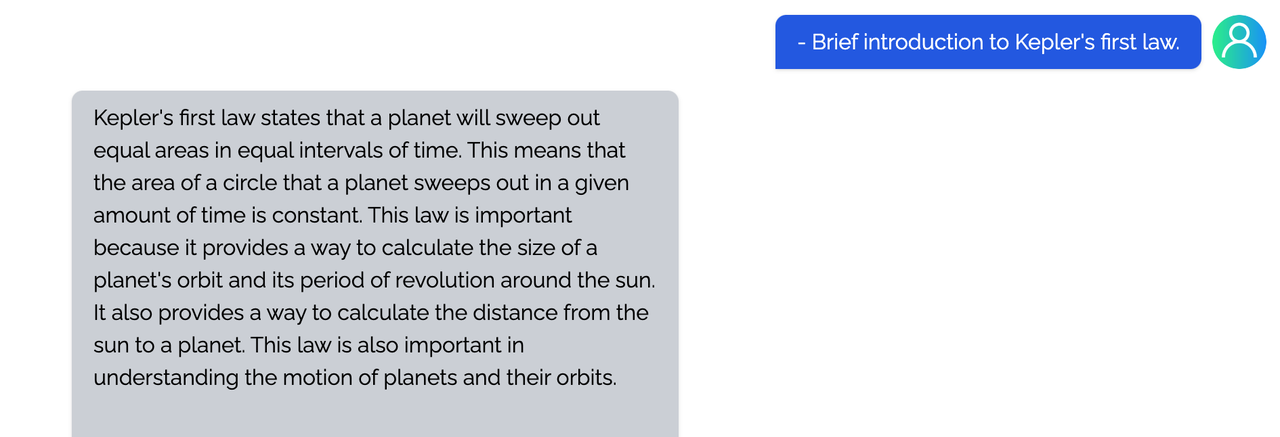

### Open QA

|

||||

|

||||

<details><summary><b>Game</b></summary>

|

||||

|

||||

|

||||

|

||||

</details>

|

||||

|

||||

<details><summary><b>Travel</b></summary>

|

||||

|

||||

|

||||

|

||||

</details>

|

||||

|

||||

<details><summary><b>Physical</b></summary>

|

||||

|

||||

|

||||

|

||||

</details>

|

||||

|

||||

<details><summary><b>Chemical</b></summary>

|

||||

|

||||

|

||||

|

||||

</details>

|

||||

|

||||

<details><summary><b>Economy</b></summary>

|

||||

|

||||

|

||||

|

||||

</details>

|

||||

|

||||

You can find more examples in this [repo](https://github.com/XueFuzhao/InstructionWild/blob/main/comparison.md).

|

||||

|

||||

### Limitation

|

||||

|

||||

<details><summary><b>Limitation for LLaMA-finetuned models</b></summary>

|

||||

- Both Alpaca and ColossalChat are based on LLaMA. It is hard to compensate for the missing knowledge in the pre-training stage.

|

||||

- Lack of counting ability: Cannot count the number of items in a list.

|

||||

- Lack of logic (reasoning and calculation).

|

||||

- Tend to repeat the last sentence (fail to produce the end token).

|

||||

- Poor multilingual results: LLaMA is mainly trained on English datasets (Generation performs better than QA).

|

||||

</details>

|

||||

|

||||

<details><summary><b>Limitation of dataset</b></summary>

|

||||

- Lack of summarization ability: No such instructions in finetune datasets.

|

||||

- Lack of multi-turn chat: No such instructions in finetune datasets

|

||||

- Lack of self-recognition: No such instructions in finetune datasets

|

||||

- Lack of Safety:
  - When the input contains fake facts, the model makes up false facts and explanations.
  - Cannot abide by OpenAI's policy: prompts generated through the OpenAI API always abide by its policy, so the datasets contain no violation cases.

|

||||

</details>

|

||||

|

||||

## FAQ

|

||||

|

||||

<details><summary><b>How to save/load checkpoint</b></summary>

|

||||

|

||||

We have integrated the Transformers save and load pipeline, allowing users to freely call Hugging Face's language models and save them in the HF format.

|

||||

|

||||

- Option 1: Save the model weights, model config and generation config (Note: tokenizer will not be saved) which can be loaded using HF's from_pretrained method.

|

||||

```python
# if use lora, you can choose to merge lora weights before saving
if args.lora_rank > 0 and args.merge_lora_weights:
    from coati.models.lora import LORA_MANAGER

    # NOTE: set model to eval to merge LoRA weights
    LORA_MANAGER.merge_weights = True
    model.eval()
# save model checkpoint after fitting on only rank0
booster.save_model(model, os.path.join(args.save_dir, "modeling"), shard=True)
```

|

||||

|

||||

- Option 2: Save the model weights, model config, generation config, as well as the optimizer, learning rate scheduler, running states (Note: tokenizer will not be saved) which are needed for resuming training.

|

||||

```python
from coati.utils import save_checkpoint
# save model checkpoint after fitting on only rank0
save_checkpoint(
    save_dir=actor_save_dir,
    booster=actor_booster,
    model=model,
    optimizer=optim,
    lr_scheduler=lr_scheduler,
    epoch=0,
    step=step,
    batch_size=train_batch_size,
    coordinator=coordinator,
)
```

|

||||

To load the saved checkpoint

|

||||

```python
from coati.utils import load_checkpoint
start_epoch, start_step, sampler_start_idx = load_checkpoint(
    load_dir=checkpoint_path,
    booster=booster,
    model=model,
    optimizer=optim,
    lr_scheduler=lr_scheduler,
)
```

|

||||

</details>

|

||||

|

||||

<details><summary><b>How to train with limited resources</b></summary>

|

||||

|

||||

Here are some suggestions that can allow you to train a 7B model on a single or multiple consumer-grade GPUs.

|

||||

|

||||

`batch_size`, `lora_rank` and `grad_checkpoint` are the most important parameters to successfully train the model. To maintain a decent batch size for gradient calculation, consider increasing `accumulation_steps` and reducing the `batch_size` on each rank.
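
The trade-off can be sketched with a little arithmetic. The helper names below are hypothetical, and `num_ranks` is assumed to mean the number of data-parallel ranks.

```python
def effective_batch_size(batch_size_per_rank: int, accumulation_steps: int, num_ranks: int) -> int:
    """Samples that contribute to one optimizer update."""
    return batch_size_per_rank * accumulation_steps * num_ranks


def accumulation_steps_for(target: int, batch_size_per_rank: int, num_ranks: int) -> int:
    """Smallest number of accumulation steps that reaches the target batch size."""
    per_step = batch_size_per_rank * num_ranks
    return -(-target // per_step)  # ceiling division


# Shrinking the per-rank batch from 8 to 2 on 4 ranks keeps the same
# effective batch size if accumulation goes from 1 step to 4 steps.
before = effective_batch_size(8, 1, 4)
steps = accumulation_steps_for(before, 2, 4)
after = effective_batch_size(2, steps, 4)
print(before, steps, after)
```

The smaller per-rank batch lowers peak activation memory, while the extra accumulation steps keep the gradient estimate comparable.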

|

||||

|

||||

If you only have a single 24G GPU, using LoRA and `zero2_cpu` will generally be sufficient.

|

||||

|

||||

`gemini` and `gemini_auto` can enable a single 24G GPU to train the whole model without using LoRA if you have sufficient CPU memory, but that strategy doesn't support gradient accumulation.

|

||||

|

||||

If you have multiple GPUs, each with very limited VRAM (say 8GB), you can try the `3d` plugin option, which supports tensor parallelism; set `--tp` to the number of GPUs that you have.

|

||||

</details>

|

||||

|

||||

### Real-time progress

|

||||

|

||||

You can follow our progress on the GitHub [project board](https://github.com/orgs/hpcaitech/projects/17/views/1).

|

||||

|

||||

## Invitation to open-source contribution

|

||||

|

||||

Referring to the successful attempts of [BLOOM](https://bigscience.huggingface.co/) and [Stable Diffusion](https://en.wikipedia.org/wiki/Stable_Diffusion), any and all developers and partners with computing powers, datasets, models are welcome to join and build the Colossal-AI community, making efforts towards the era of big AI models from the starting point of replicating ChatGPT!

|

||||

|

||||

You may contact us or participate in the following ways:

|

||||

|

||||

1. [Leaving a Star ⭐](https://github.com/hpcaitech/ColossalAI/stargazers) to show your like and support. Thanks!

|

||||

2. Posting an [issue](https://github.com/hpcaitech/ColossalAI/issues/new/choose), or submitting a PR on GitHub following the guidelines in [Contributing](https://github.com/hpcaitech/ColossalAI/blob/main/CONTRIBUTING.md).

|

||||

3. Join the Colossal-AI community on

|

||||

[Slack](https://github.com/hpcaitech/public_assets/tree/main/colossalai/contact/slack),

|

||||

and [WeChat(微信)](https://raw.githubusercontent.com/hpcaitech/public_assets/main/colossalai/img/WeChat.png "qrcode") to share your ideas.

|

||||

4. Send your official proposal to email contact@hpcaitech.com

|

||||

|

||||

Thanks so much to all of our amazing contributors!

|

||||

|

||||

## Quick Preview

|

||||

|

||||

<div align="center">

|

||||

<a href="https://chat.colossalai.org/">

|

||||

<img src="https://raw.githubusercontent.com/hpcaitech/public_assets/main/colossalai/img/Chat-demo.png" width="700" />

|

||||

</a>

|

||||

</div>

|

||||

|

||||

- An open-source low-cost solution for cloning [ChatGPT](https://openai.com/blog/chatgpt/) with a complete RLHF pipeline. [[demo]](https://chat.colossalai.org)

|

||||

|

||||

<p id="ChatGPT_scaling" align="center">

|

||||

<img src="https://raw.githubusercontent.com/hpcaitech/public_assets/main/applications/chatgpt/ChatGPT%20scaling.png" width=800/>

|

||||

</p>

|

||||

|

||||

- Up to 7.73 times faster for single server training and 1.42 times faster for single-GPU inference

|

||||

|

||||

<p id="ChatGPT-1GPU" align="center">

|

||||

<img src="https://raw.githubusercontent.com/hpcaitech/public_assets/main/applications/chatgpt/ChatGPT-1GPU.jpg" width=450/>

|

||||

</p>

|

||||

|

||||

- Up to 10.3x growth in model capacity on one GPU

|

||||

- A mini demo training process requires only 1.62GB of GPU memory (any consumer-grade GPU)

|

||||

|

||||

<p id="inference" align="center">

|

||||

<img src="https://raw.githubusercontent.com/hpcaitech/public_assets/main/applications/chatgpt/LoRA%20data.jpg" width=600/>

|

||||

</p>

|

||||

|

||||

- Increase the capacity of the fine-tuning model by up to 3.7 times on a single GPU

|

||||

- Keep a sufficiently high running speed

|

||||

|

||||

| Model Pair | Alpaca-7B ⚔ Coati-7B | Coati-7B ⚔ Alpaca-7B |
| :-----------: | :------------------: | :------------------: |
| Better Cases | 38 ⚔ **41** | **45** ⚔ 33 |
| Win Rate | 48% ⚔ **52%** | **58%** ⚔ 42% |
| Average Score | 7.06 ⚔ **7.13** | **7.31** ⚔ 6.82 |

|

||||

|

||||

- Our Coati-7B model performs better than Alpaca-7B when using GPT-4 to evaluate model performance. The Coati-7B model we evaluate is an old version we trained a few weeks ago and the new version is around the corner.
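
The win rates in the table are consistent with dividing each model's better cases by the sum of both models' better cases, which assumes ties are excluded. A short check under that assumption (the function name is hypothetical):

```python
def win_rates(better_a: int, better_b: int) -> tuple:
    """Percentage of decided comparisons won by each side, rounded to integers."""
    decided = better_a + better_b
    return round(100 * better_a / decided), round(100 * better_b / decided)


alpaca_first = win_rates(38, 41)  # Alpaca-7B vs Coati-7B
coati_first = win_rates(45, 33)   # Coati-7B vs Alpaca-7B
print(alpaca_first, coati_first)
```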

|

||||

|

||||

## Authors

|

||||

|

||||

Coati is developed by ColossalAI Team:

|

||||

|

||||

- [ver217](https://github.com/ver217) Leading the project while contributing to the main framework.

|

||||

- [FrankLeeeee](https://github.com/FrankLeeeee) Providing ML infra support and also taking charge of both front-end and back-end development.

|

||||

- [htzhou](https://github.com/ht-zhou) Contributing to the algorithm and development for RM and PPO training.

|

||||

- [Fazzie](https://fazzie-key.cool/about/index.html) Contributing to the algorithm and development for SFT.

|

||||

- [ofey404](https://github.com/ofey404) Contributing to both front-end and back-end development.

|

||||

- [Wenhao Chen](https://github.com/CWHer) Contributing to subsequent code enhancements and performance improvements.

|

||||

- [Anbang Ye](https://github.com/YeAnbang) Contributing to the refactored PPO version with updated acceleration framework and adding support for DPO, SimPO, and ORPO.

|

||||

|

||||

PhD students from the [(HPC-AI) Lab](https://ai.comp.nus.edu.sg/) also contributed a lot to this project.

|

||||

- [Zangwei Zheng](https://github.com/zhengzangw)

|

||||

- [Xue Fuzhao](https://github.com/XueFuzhao)

|

||||

|

||||

We also appreciate the valuable suggestions provided by [Jian Hu](https://github.com/hijkzzz) regarding the convergence of the PPO algorithm.

|

||||

|

||||

## Citations

|

||||

|

||||

```bibtex
@article{Hu2021LoRALA,
    title   = {LoRA: Low-Rank Adaptation of Large Language Models},
    author  = {Edward J. Hu and Yelong Shen and Phillip Wallis and Zeyuan Allen-Zhu and Yuanzhi Li and Shean Wang and Weizhu Chen},
    journal = {ArXiv},
    year    = {2021},
    volume  = {abs/2106.09685}
}

@article{ouyang2022training,
    title={Training language models to follow instructions with human feedback},
    author={Ouyang, Long and Wu, Jeff and Jiang, Xu and Almeida, Diogo and Wainwright, Carroll L and Mishkin, Pamela and Zhang, Chong and Agarwal, Sandhini and Slama, Katarina and Ray, Alex and others},
    journal={arXiv preprint arXiv:2203.02155},
    year={2022}
}

@article{touvron2023llama,
    title={LLaMA: Open and Efficient Foundation Language Models},
    author={Touvron, Hugo and Lavril, Thibaut and Izacard, Gautier and Martinet, Xavier and Lachaux, Marie-Anne and Lacroix, Timoth{\'e}e and Rozi{\`e}re, Baptiste and Goyal, Naman and Hambro, Eric and Azhar, Faisal and Rodriguez, Aurelien and Joulin, Armand and Grave, Edouard and Lample, Guillaume},
    journal={arXiv preprint arXiv:2302.13971},
    year={2023}
}

@misc{alpaca,
    author = {Rohan Taori and Ishaan Gulrajani and Tianyi Zhang and Yann Dubois and Xuechen Li and Carlos Guestrin and Percy Liang and Tatsunori B. Hashimoto},
    title = {Stanford Alpaca: An Instruction-following LLaMA model},
    year = {2023},
    publisher = {GitHub},
    journal = {GitHub repository},
    howpublished = {\url{https://github.com/tatsu-lab/stanford_alpaca}},
}

@misc{instructionwild,
    author = {Fuzhao Xue and Zangwei Zheng and Yang You},
    title = {Instruction in the Wild: A User-based Instruction Dataset},
    year = {2023},
    publisher = {GitHub},
    journal = {GitHub repository},
    howpublished = {\url{https://github.com/XueFuzhao/InstructionWild}},
}

@misc{meng2024simposimplepreferenceoptimization,
    title={SimPO: Simple Preference Optimization with a Reference-Free Reward},
    author={Yu Meng and Mengzhou Xia and Danqi Chen},
    year={2024},
    eprint={2405.14734},
    archivePrefix={arXiv},
    primaryClass={cs.CL},
    url={https://arxiv.org/abs/2405.14734},
}

@misc{rafailov2023directpreferenceoptimizationlanguage,
    title={Direct Preference Optimization: Your Language Model is Secretly a Reward Model},
    author={Rafael Rafailov and Archit Sharma and Eric Mitchell and Stefano Ermon and Christopher D. Manning and Chelsea Finn},
    year={2023},
    eprint={2305.18290},
    archivePrefix={arXiv},
    primaryClass={cs.LG},
    url={https://arxiv.org/abs/2305.18290},
}

@misc{hong2024orpomonolithicpreferenceoptimization,
    title={ORPO: Monolithic Preference Optimization without Reference Model},
    author={Jiwoo Hong and Noah Lee and James Thorne},
    year={2024},
    eprint={2403.07691},
    archivePrefix={arXiv},
    primaryClass={cs.CL},
    url={https://arxiv.org/abs/2403.07691},
}
```

|

||||

|

||||

## Licenses

|

||||

|

||||

Coati is licensed under the [Apache 2.0 License](LICENSE).

|

||||

|

|

@ -0,0 +1,17 @@

|

|||

{
    "chat_template": "{% for message in messages %}{% if message['role'] == 'user' %}{{'Human: ' + bos_token + message['content'].strip() + eos_token }}{% elif message['role'] == 'system' %}{{ message['content'].strip() + '\\n\\n' }}{% elif message['role'] == 'assistant' %}{{ 'Assistant: ' + bos_token + message['content'].strip() + eos_token }}{% endif %}{% endfor %}",
    "system_message": "A chat between a curious human and an artificial intelligence assistant. The assistant gives helpful, detailed, and polite answers to the human's questions.\n\n",
    "human_line_start": [
        2
    ],
    "human_line_end": [
        2
    ],
    "assistant_line_start": [
        2
    ],
    "assistant_line_end": [
        2
    ],
    "end_of_system_line_position": 0
}
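
To show what the `chat_template` above produces, here is a plain-Python mirror of its branching logic. It is only a reading aid: the real template is Jinja and is rendered by the tokenizer, and the `<s>` / `</s>` values used for `bos_token` and `eos_token` are assumptions.

```python
def render_chat(messages, bos_token="<s>", eos_token="</s>"):
    """Mirror of the Jinja chat_template: one formatted piece per message."""
    parts = []
    for message in messages:
        content = message["content"].strip()
        if message["role"] == "user":
            parts.append("Human: " + bos_token + content + eos_token)
        elif message["role"] == "system":
            parts.append(content + "\n\n")
        elif message["role"] == "assistant":
            parts.append("Assistant: " + bos_token + content + eos_token)
        # Any other role is skipped, as in the template.
    return "".join(parts)


conversation = [
    {"role": "system", "content": "Be concise. "},
    {"role": "user", "content": " Hi "},
    {"role": "assistant", "content": "Hello"},
]
rendered = render_chat(conversation)
print(repr(rendered))
```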

|

||||

|

|

@ -0,0 +1,37 @@

|

|||

# Benchmarks

## Benchmark OPT with LoRA on dummy prompt data

We provide various OPT models (string in parentheses is the corresponding model name used in this script):

- OPT-125M (125m)
- OPT-350M (350m)
- OPT-700M (700m)
- OPT-1.3B (1.3b)
- OPT-2.7B (2.7b)
- OPT-3.5B (3.5b)
- OPT-5.5B (5.5b)
- OPT-6.7B (6.7b)
- OPT-10B (10b)
- OPT-13B (13b)

We also provide various training strategies:

- gemini: ColossalAI GeminiPlugin with `placement_policy="cuda"`, like zero3
- gemini_auto: ColossalAI GeminiPlugin with `placement_policy="cpu"`, like zero3-offload
- zero2: ColossalAI zero2
- zero2_cpu: ColossalAI zero2-offload
- 3d: ColossalAI HybridParallelPlugin with TP, DP support

## How to Run
```bash
cd ../tests
# Prepare data for benchmark
SFT_DATASET=/path/to/sft/data/ \
PROMPT_DATASET=/path/to/prompt/data/ \
PRETRAIN_DATASET=/path/to/ptx/data/ \
PREFERENCE_DATASET=/path/to/preference/data \
./test_data_preparation.sh
# Start benchmark
./benchmark_ppo.sh
```

|

||||

|

|

@ -0,0 +1,51 @@

|

|||

#!/bin/bash
set_n_least_used_CUDA_VISIBLE_DEVICES() {
    local n=${1:-"9999"}
    echo "GPU Memory Usage:"
    local FIRST_N_GPU_IDS=$(nvidia-smi --query-gpu=memory.used --format=csv |
        tail -n +2 |
        nl -v 0 |
        tee /dev/tty |
        sort -g -k 2 |
        awk '{print $1}' |
        head -n $n)
    export CUDA_VISIBLE_DEVICES=$(echo $FIRST_N_GPU_IDS | sed 's/ /,/g')
    echo "Now CUDA_VISIBLE_DEVICES is set to:"
    echo "CUDA_VISIBLE_DEVICES=$CUDA_VISIBLE_DEVICES"
}
set_n_least_used_CUDA_VISIBLE_DEVICES 4

PROJECT_NAME="dpo"
PARENT_CONFIG_FILE="./benchmark_config" # Path to a folder to save training config logs
PRETRAINED_MODEL_PATH="" # huggingface or local model path
PRETRAINED_TOKENIZER_PATH="" # huggingface or local tokenizer path
BENCHMARK_DATA_DIR="./temp/dpo" # Path to benchmark data
DATASET_SIZE=320

TIMESTAMP=$(date +%Y-%m-%d-%H-%M-%S)
FULL_PROJECT_NAME="${PROJECT_NAME}-${TIMESTAMP}"
declare -a dataset=(
    $BENCHMARK_DATA_DIR/arrow/part-0
)

# Generate dummy test data
python prepare_dummy_test_dataset.py --data_dir $BENCHMARK_DATA_DIR --dataset_size $DATASET_SIZE --max_length 2048 --data_type preference


colossalai run --nproc_per_node 4 --master_port 31313 ../examples/training_scripts/train_dpo.py \
    --pretrain $PRETRAINED_MODEL_PATH \
    --tokenizer_dir $PRETRAINED_TOKENIZER_PATH \
    --dataset ${dataset[@]} \
    --plugin "zero2_cpu" \
    --max_epochs 1 \
    --accumulation_steps 1 \
    --batch_size 4 \
    --lr 1e-6 \
    --beta 0.1 \
    --mixed_precision "bf16" \
    --grad_clip 1.0 \
    --max_length 2048 \
    --weight_decay 0.01 \
    --warmup_steps 60 \
    --grad_checkpoint \
    --use_flash_attn

|

||||

|

|

@ -0,0 +1,51 @@

|

|||

#!/bin/bash
set_n_least_used_CUDA_VISIBLE_DEVICES() {
    local n=${1:-"9999"}
    echo "GPU Memory Usage:"
    local FIRST_N_GPU_IDS=$(nvidia-smi --query-gpu=memory.used --format=csv |
        tail -n +2 |
        nl -v 0 |
        tee /dev/tty |
        sort -g -k 2 |
        awk '{print $1}' |
        head -n $n)
    export CUDA_VISIBLE_DEVICES=$(echo $FIRST_N_GPU_IDS | sed 's/ /,/g')
    echo "Now CUDA_VISIBLE_DEVICES is set to:"
    echo "CUDA_VISIBLE_DEVICES=$CUDA_VISIBLE_DEVICES"
}
set_n_least_used_CUDA_VISIBLE_DEVICES 4

PROJECT_NAME="kto"
PARENT_CONFIG_FILE="./benchmark_config" # Path to a folder to save training config logs
PRETRAINED_MODEL_PATH="" # huggingface or local model path
PRETRAINED_TOKENIZER_PATH="" # huggingface or local tokenizer path
BENCHMARK_DATA_DIR="./temp/kto" # Path to benchmark data
DATASET_SIZE=80

TIMESTAMP=$(date +%Y-%m-%d-%H-%M-%S)
FULL_PROJECT_NAME="${PROJECT_NAME}-${TIMESTAMP}"
declare -a dataset=(
    $BENCHMARK_DATA_DIR/arrow/part-0
)

# Generate dummy test data
python prepare_dummy_test_dataset.py --data_dir $BENCHMARK_DATA_DIR --dataset_size $DATASET_SIZE --max_length 2048 --data_type kto


colossalai run --nproc_per_node 2 --master_port 31313 ../examples/training_scripts/train_kto.py \
    --pretrain $PRETRAINED_MODEL_PATH \
    --tokenizer_dir $PRETRAINED_TOKENIZER_PATH \
    --dataset ${dataset[@]} \
    --plugin "zero2_cpu" \
    --max_epochs 1 \
    --accumulation_steps 1 \
    --batch_size 2 \
    --lr 1e-5 \
    --beta 0.1 \
    --mixed_precision "bf16" \
    --grad_clip 1.0 \
    --max_length 2048 \
    --weight_decay 0.01 \
    --warmup_steps 60 \
    --grad_checkpoint \
    --use_flash_attn

|

||||

|

|

@ -0,0 +1,4 @@

|

|||

Model=Opt-125m; lora_rank=0; plugin=zero2
Max CUDA memory usage: 26123.16 MB
Model=Opt-125m; lora_rank=0; plugin=zero2
Max CUDA memory usage: 26123.91 MB

|

||||

|

|

@ -0,0 +1,51 @@

|

|||

#!/bin/bash
set_n_least_used_CUDA_VISIBLE_DEVICES() {
    local n=${1:-"9999"}
    echo "GPU Memory Usage:"
    local FIRST_N_GPU_IDS=$(nvidia-smi --query-gpu=memory.used --format=csv |
        tail -n +2 |
        nl -v 0 |
        tee /dev/tty |
        sort -g -k 2 |
        awk '{print $1}' |
        head -n $n)
    export CUDA_VISIBLE_DEVICES=$(echo $FIRST_N_GPU_IDS | sed 's/ /,/g')
    echo "Now CUDA_VISIBLE_DEVICES is set to:"
    echo "CUDA_VISIBLE_DEVICES=$CUDA_VISIBLE_DEVICES"
}
set_n_least_used_CUDA_VISIBLE_DEVICES 2

PROJECT_NAME="orpo"
PARENT_CONFIG_FILE="./benchmark_config" # Path to a folder to save training config logs
PRETRAINED_MODEL_PATH="" # huggingface or local model path
PRETRAINED_TOKENIZER_PATH="" # huggingface or local tokenizer path
BENCHMARK_DATA_DIR="./temp/orpo" # Path to benchmark data
DATASET_SIZE=160

TIMESTAMP=$(date +%Y-%m-%d-%H-%M-%S)
FULL_PROJECT_NAME="${PROJECT_NAME}-${TIMESTAMP}"
declare -a dataset=(
    $BENCHMARK_DATA_DIR/arrow/part-0
)

# Generate dummy test data
python prepare_dummy_test_dataset.py --data_dir $BENCHMARK_DATA_DIR --dataset_size $DATASET_SIZE --max_length 2048 --data_type preference


colossalai run --nproc_per_node 2 --master_port 31313 ../examples/training_scripts/train_orpo.py \
    --pretrain $PRETRAINED_MODEL_PATH \
    --tokenizer_dir $PRETRAINED_TOKENIZER_PATH \
    --dataset ${dataset[@]} \
    --plugin "zero2" \
    --max_epochs 1 \
    --accumulation_steps 1 \
    --batch_size 4 \
    --lr 8e-6 \
    --lam 0.5 \
    --mixed_precision "bf16" \
    --grad_clip 1.0 \
    --max_length 2048 \
    --weight_decay 0.01 \
    --warmup_steps 60 \
    --grad_checkpoint \
    --use_flash_attn

|

||||

|

|

@ -0,0 +1,16 @@

|

|||

facebook/opt-125m; 0; zero2
Performance summary:
Generate 768 samples, throughput: 188.48 samples/s, TFLOPS per GPU: 361.23
Train 768 samples, throughput: 448.38 samples/s, TFLOPS per GPU: 82.84
Overall throughput: 118.42 samples/s
Overall time per sample: 0.01 s
Make experience time per sample: 0.01 s, 62.83%
Learn time per sample: 0.00 s, 26.41%
facebook/opt-125m; 0; zero2
Performance summary:
Generate 768 samples, throughput: 26.32 samples/s, TFLOPS per GPU: 50.45
Train 768 samples, throughput: 71.15 samples/s, TFLOPS per GPU: 13.14
Overall throughput: 18.86 samples/s
Overall time per sample: 0.05 s
Make experience time per sample: 0.04 s, 71.66%
Learn time per sample: 0.01 s, 26.51%

|

||||

|

|

@ -0,0 +1,523 @@

|

|||

"""

|

||||

For benchmarking PPO. Modified from examples/training_scripts/train_ppo.py
"""

import argparse
import json
import os
import resource
from contextlib import nullcontext

import torch
import torch.distributed as dist
from coati.dataset import (
    DataCollatorForPromptDataset,
    DataCollatorForSupervisedDataset,
    StatefulDistributedSampler,
    load_tokenized_dataset,
    setup_conversation_template,
    setup_distributed_dataloader,
)
from coati.models import Critic, RewardModel, convert_to_lora_module, disable_dropout
from coati.trainer import PPOTrainer
from coati.trainer.callbacks import PerformanceEvaluator
from coati.trainer.utils import is_rank_0
from coati.utils import load_checkpoint, replace_with_flash_attention
from transformers import AutoTokenizer, OPTForCausalLM
from transformers.models.opt.configuration_opt import OPTConfig

import colossalai
from colossalai.booster import Booster
from colossalai.booster.plugin import GeminiPlugin, HybridParallelPlugin, LowLevelZeroPlugin
from colossalai.cluster import DistCoordinator
from colossalai.lazy import LazyInitContext
from colossalai.nn.lr_scheduler import CosineAnnealingWarmupLR
from colossalai.nn.optimizer import HybridAdam
from colossalai.utils import get_current_device


def get_model_numel(model: torch.nn.Module, plugin: str, tp: int) -> int:
    numel = sum(p.numel() for p in model.parameters())
    if plugin == "3d" and tp > 1:
        numel *= dist.get_world_size()
    return numel


def get_gpt_config(model_name: str) -> OPTConfig:
    model_map = {
        "125m": OPTConfig.from_pretrained("facebook/opt-125m"),
        "350m": OPTConfig(hidden_size=1024, ffn_dim=4096, num_hidden_layers=24, num_attention_heads=16),
        "700m": OPTConfig(hidden_size=1280, ffn_dim=5120, num_hidden_layers=36, num_attention_heads=20),
        "1.3b": OPTConfig.from_pretrained("facebook/opt-1.3b"),

|

||||