From ce8a3eae5be301d1e3f536ede32a2b0c65f7c51f Mon Sep 17 00:00:00 2001
From: Sze-qq <68757353+Sze-qq@users.noreply.github.com>
Date: Mon, 4 Apr 2022 13:47:43 +0800
Subject: [PATCH] update GPT-2 experiment result (#666)

---
 README-zh-Hans.md | 5 +++--
 README.md         | 5 +++--
 2 files changed, 6 insertions(+), 4 deletions(-)

diff --git a/README-zh-Hans.md b/README-zh-Hans.md
index 7ccdeaa19..5e15cd5af 100644
--- a/README-zh-Hans.md
+++ b/README-zh-Hans.md
@@ -86,9 +86,10 @@ Colossal-AI provides a collection of parallel training components. Our goal is to let you
 - 11x lower GPU memory consumption, or superlinear scaling (Tensor Parallelism)
 
-
-- Can train a model nearly 11x larger (ZeRO)
+GPT-2.png)
+- Train a 24x larger model on the same hardware
+- Over 3x throughput
 
 ### BERT

diff --git a/README.md b/README.md

index 400116d1d..1378d8f24 100644

--- a/README.md

+++ b/README.md

@@ -87,9 +87,10 @@ distributed training in a few lines.

- 11x lower GPU memory consumption, and superlinear scaling efficiency with Tensor Parallelism

- +

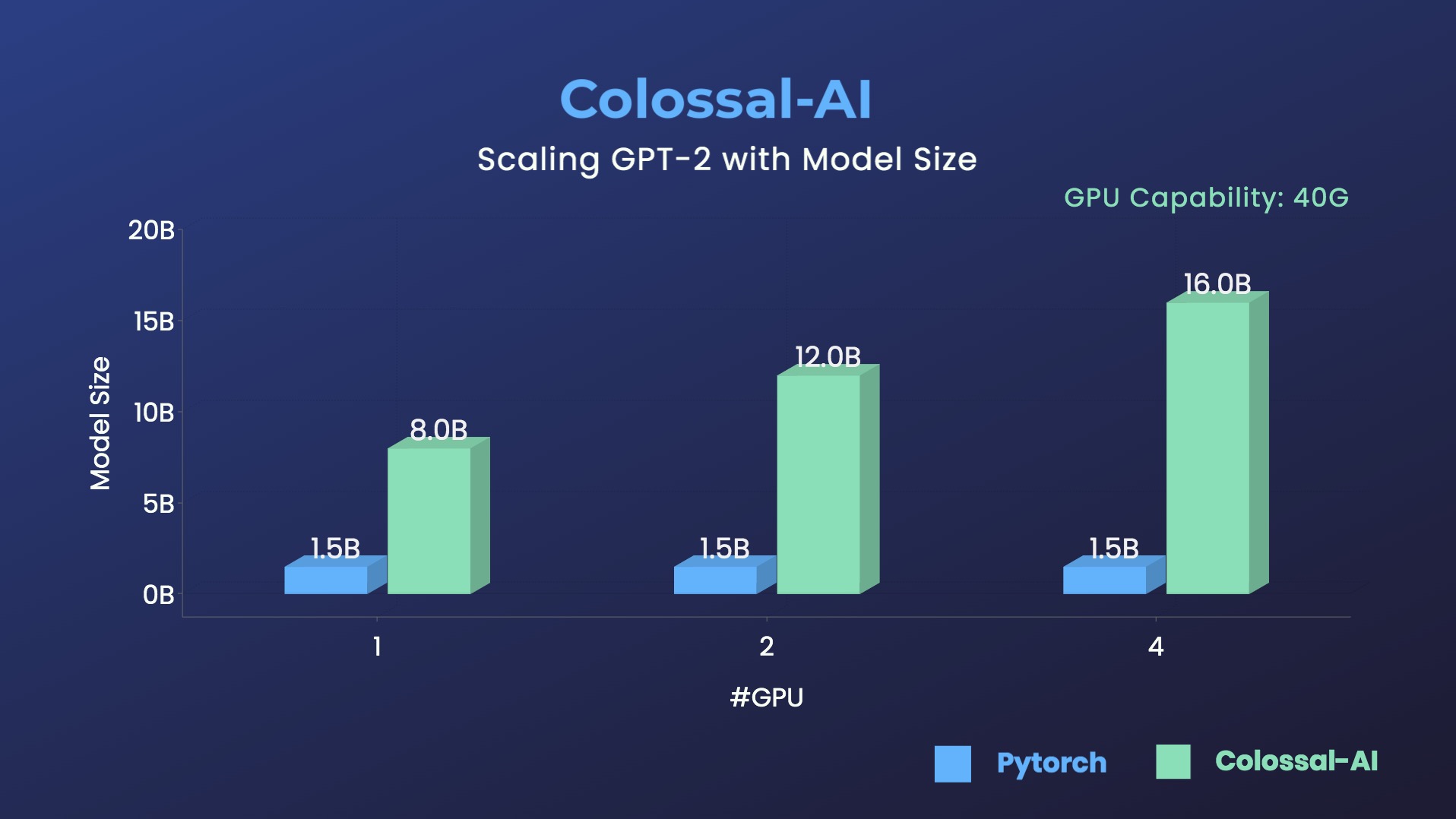

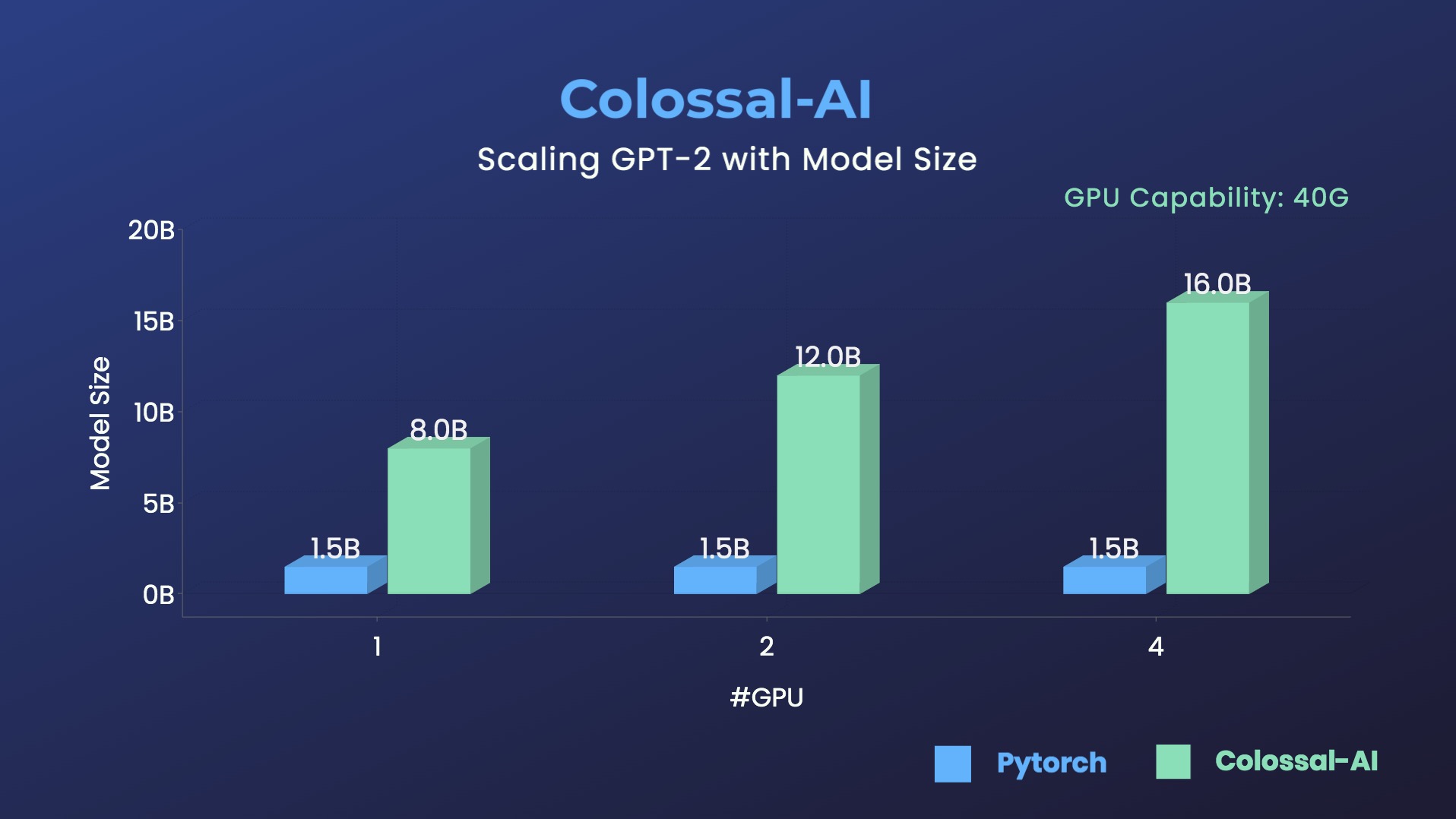

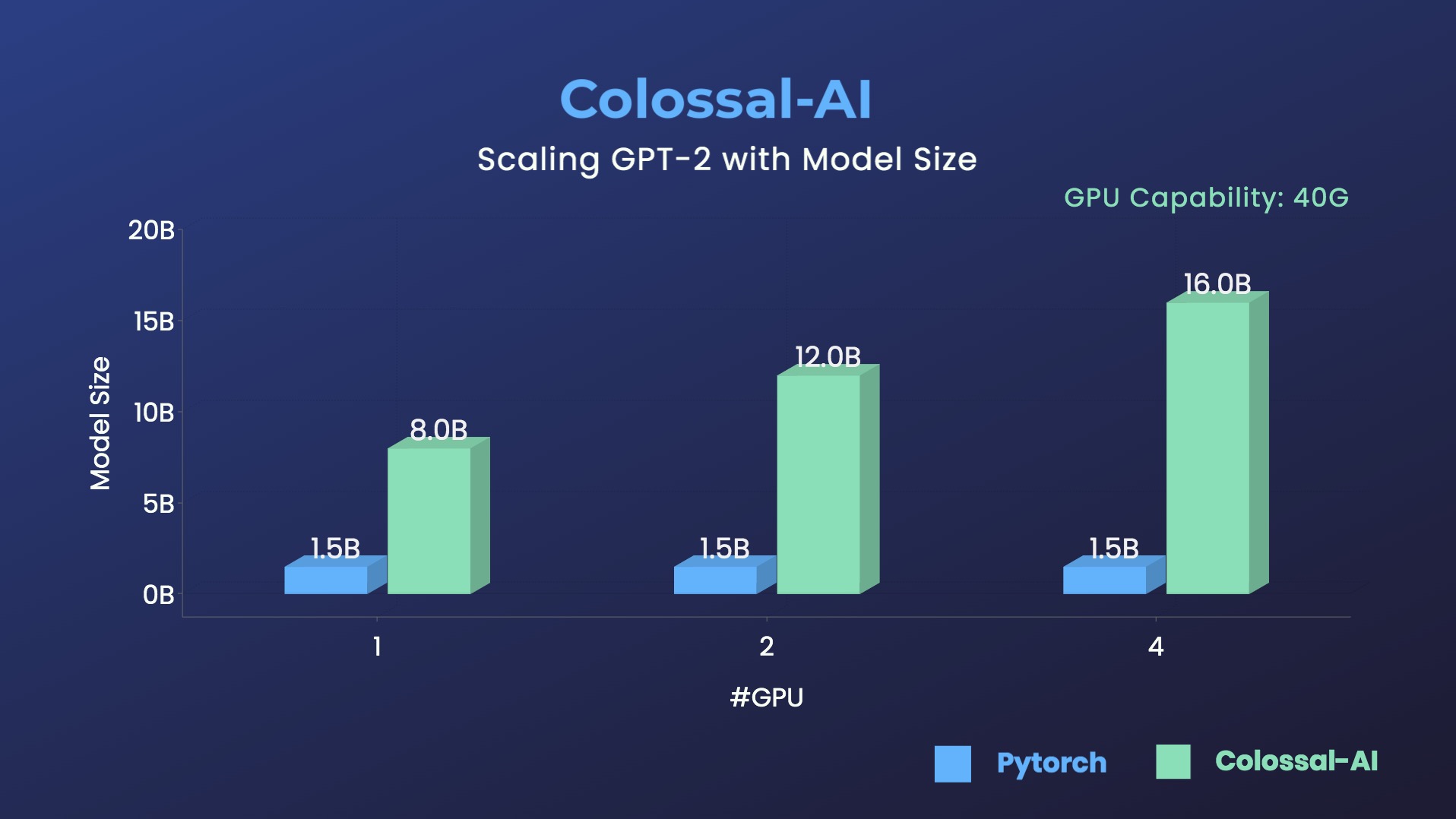

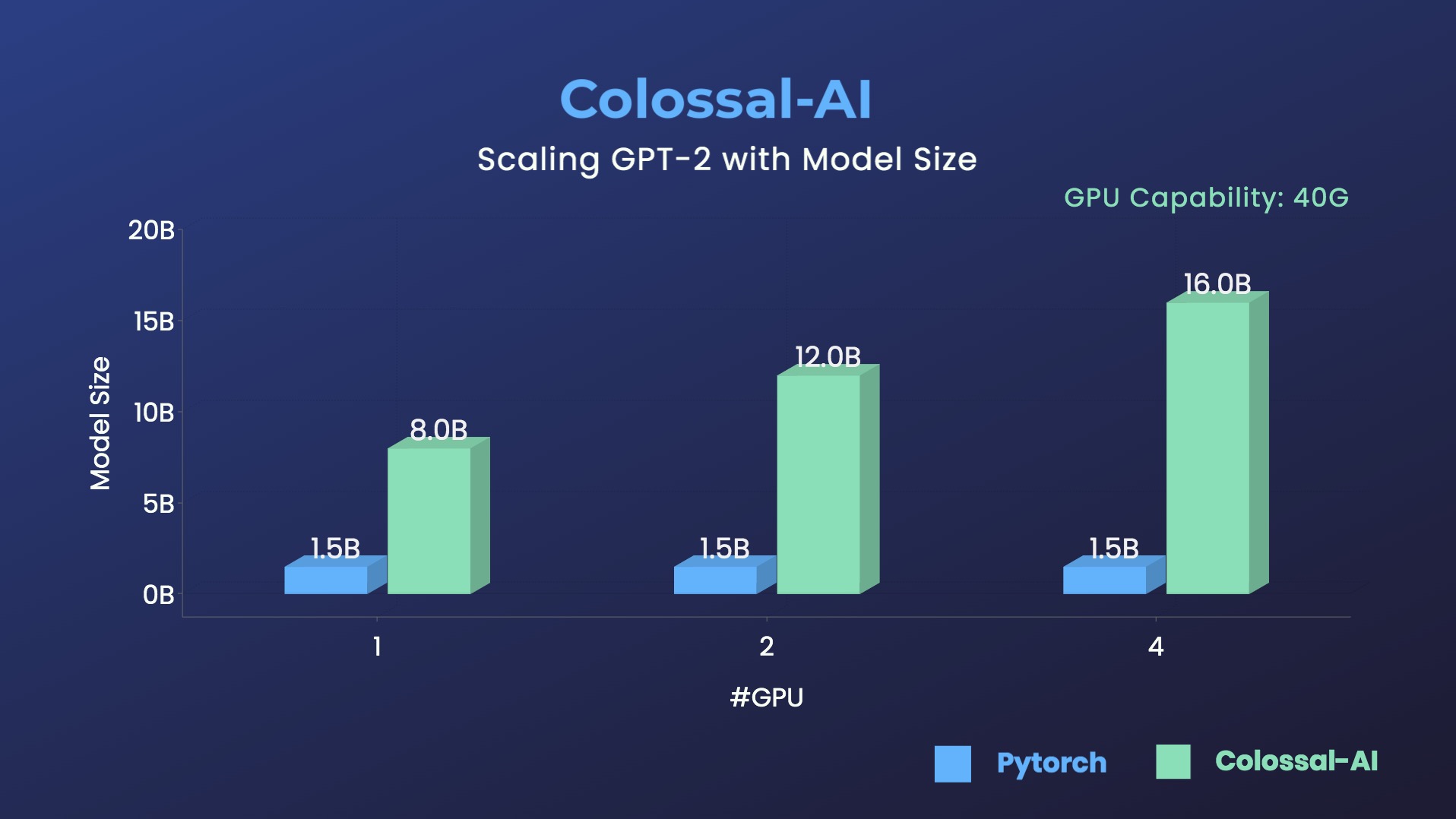

+GPT-2.png) -- 10.7x larger model size on the same hardware

+- 24x larger model size on the same hardware

+- over 3x acceleration

### BERT

-- 10.7x larger model size on the same hardware

+- 24x larger model size on the same hardware

+- over 3x acceleration

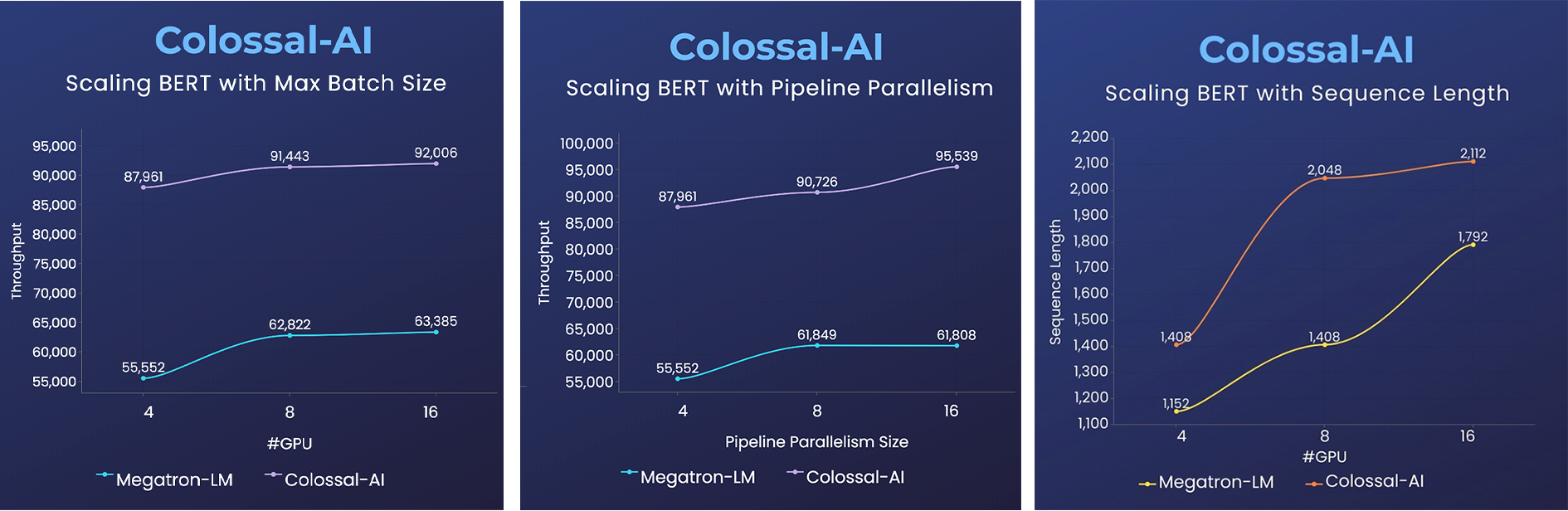

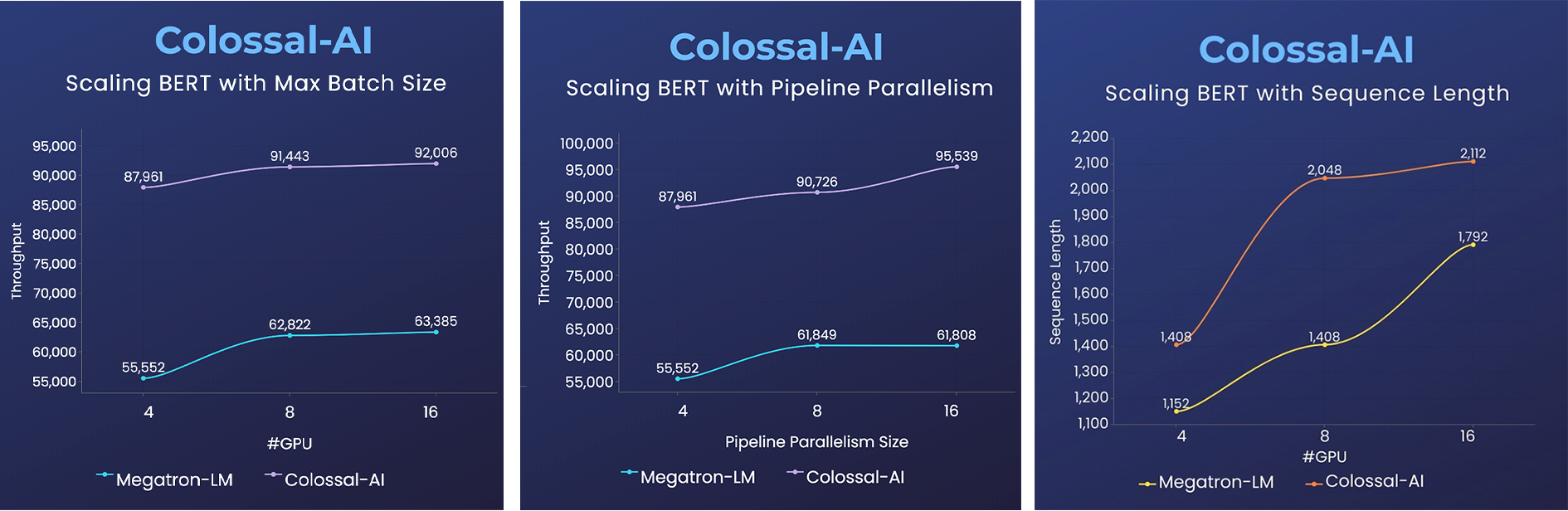

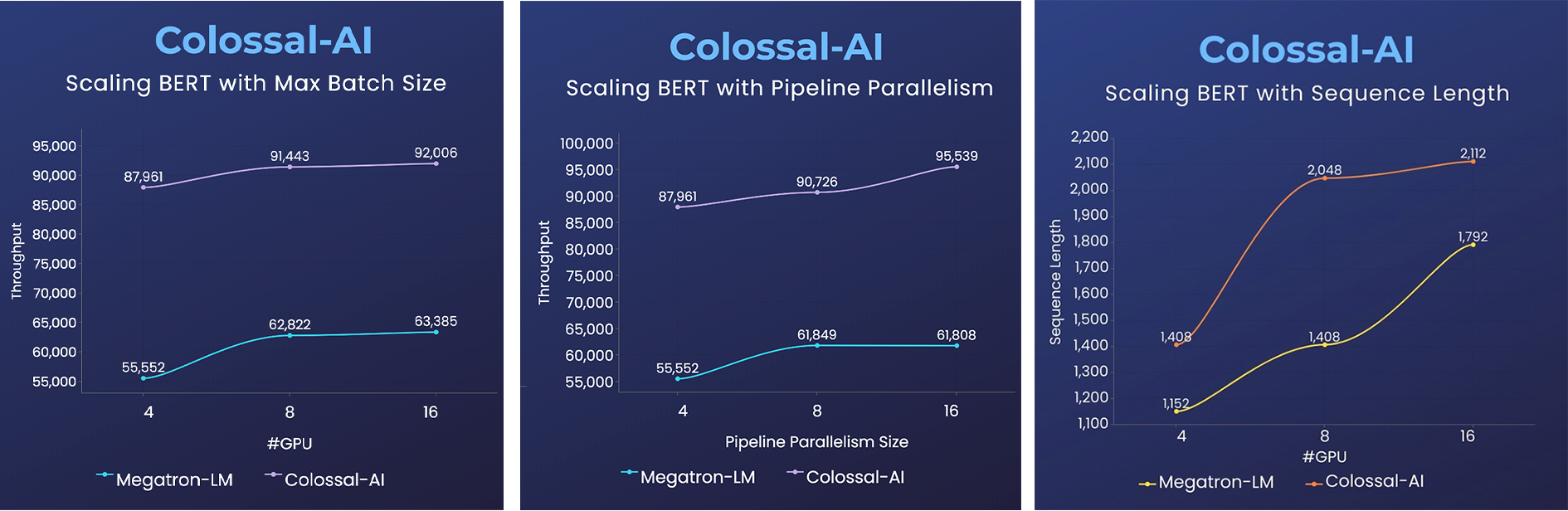

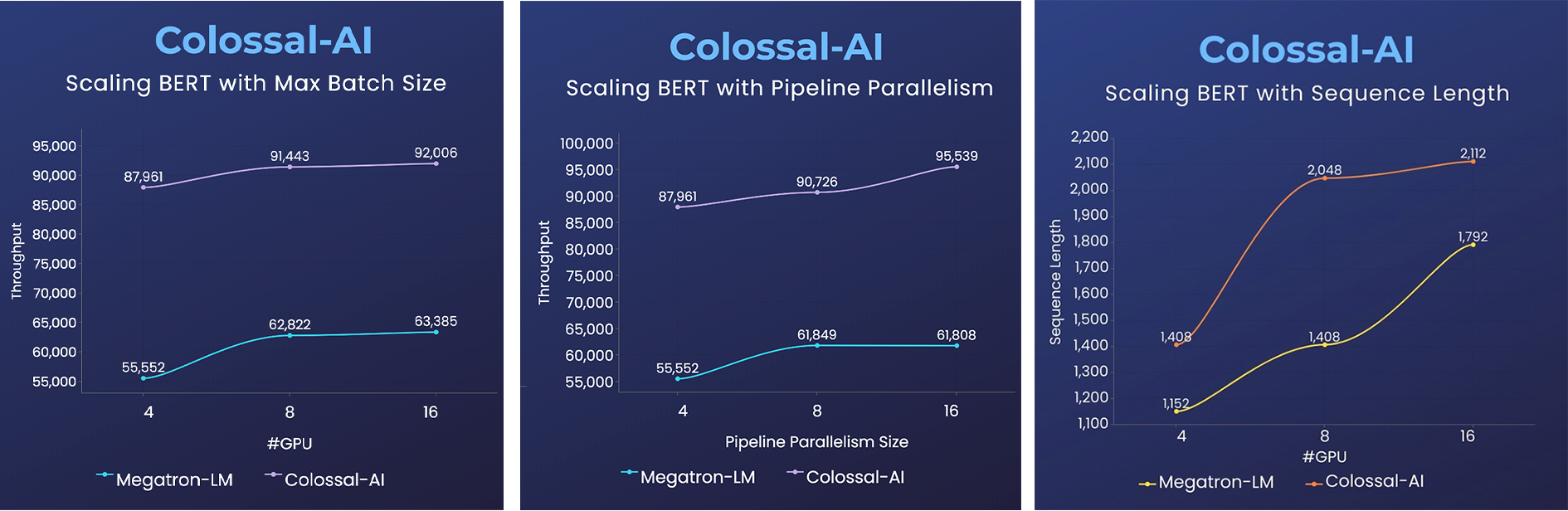

### BERT

+

+GPT-2.png) -- 能训练接近11倍大小的模型(ZeRO)

+- 用相同的硬件条件训练24倍大的模型

+- 超3倍的吞吐量

### BERT

-- 能训练接近11倍大小的模型(ZeRO)

+- 用相同的硬件条件训练24倍大的模型

+- 超3倍的吞吐量

### BERT

diff --git a/README.md b/README.md

index 400116d1d..1378d8f24 100644

--- a/README.md

+++ b/README.md

@@ -87,9 +87,10 @@ distributed training in a few lines.

- 11x lower GPU memory consumption, and superlinear scaling efficiency with Tensor Parallelism

-

diff --git a/README.md b/README.md

index 400116d1d..1378d8f24 100644

--- a/README.md

+++ b/README.md

@@ -87,9 +87,10 @@ distributed training in a few lines.

- 11x lower GPU memory consumption, and superlinear scaling efficiency with Tensor Parallelism

- +

+GPT-2.png) -- 10.7x larger model size on the same hardware

+- 24x larger model size on the same hardware

+- over 3x acceleration

### BERT

-- 10.7x larger model size on the same hardware

+- 24x larger model size on the same hardware

+- over 3x acceleration

### BERT