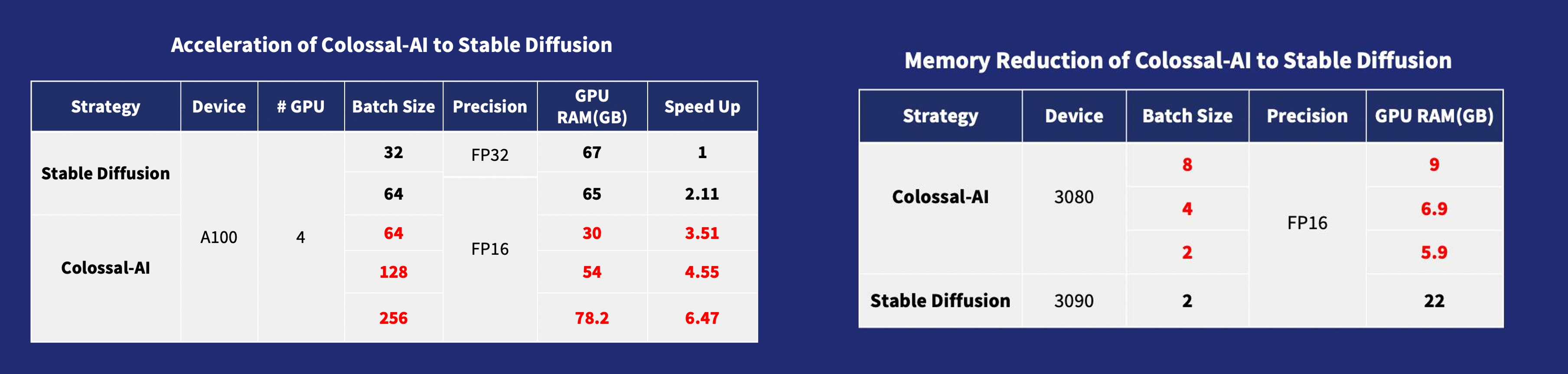

- AIGC: 加速 Stable Diffusion

- 生物医药: 加速AlphaFold蛋白质结构预测

@@ -131,7 +131,7 @@ Colossal-AI 为您提供了一系列并行组件。我们的目标是让您的

GPT-2.png) - 用相同的硬件训练24倍大的模型

-- 超3倍的吞吐量

+- 超3倍的吞吐量

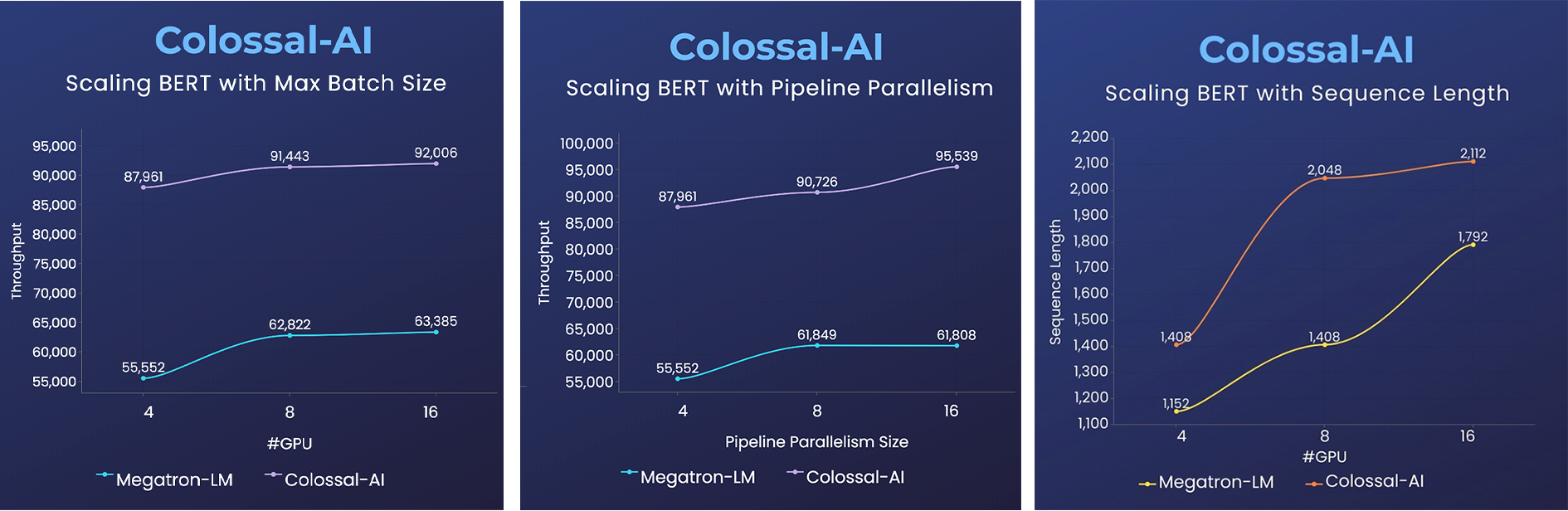

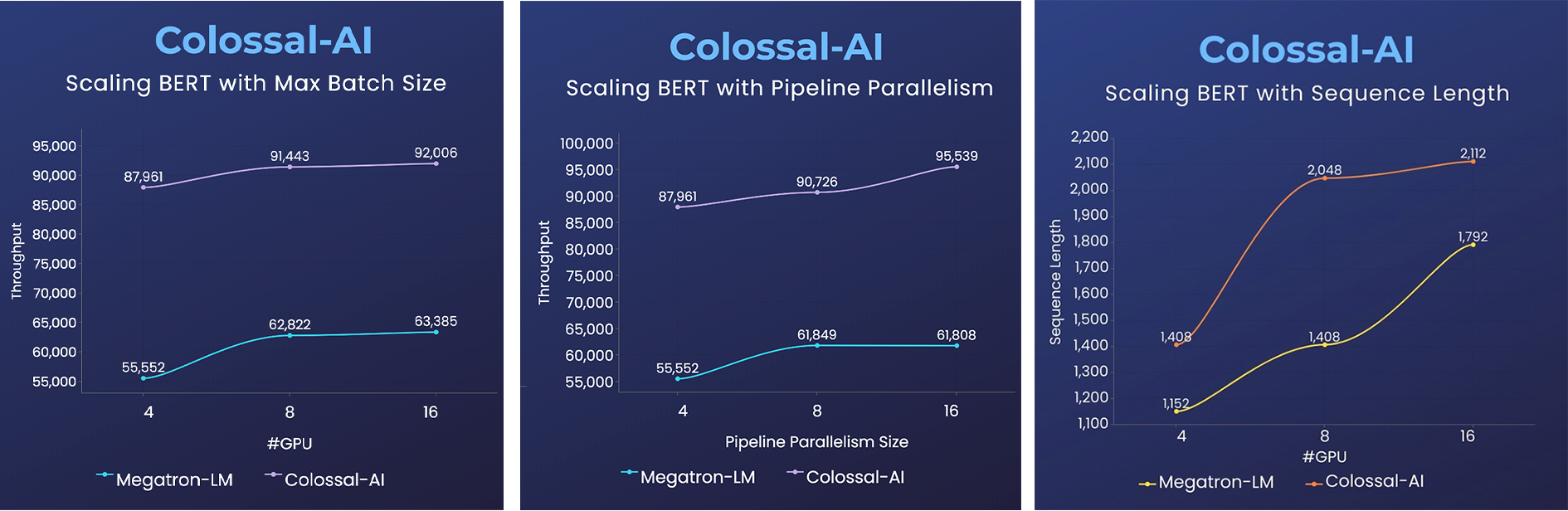

### BERT

- 用相同的硬件训练24倍大的模型

-- 超3倍的吞吐量

+- 超3倍的吞吐量

### BERT

@@ -145,7 +145,7 @@ Colossal-AI 为您提供了一系列并行组件。我们的目标是让您的

@@ -145,7 +145,7 @@ Colossal-AI 为您提供了一系列并行组件。我们的目标是让您的

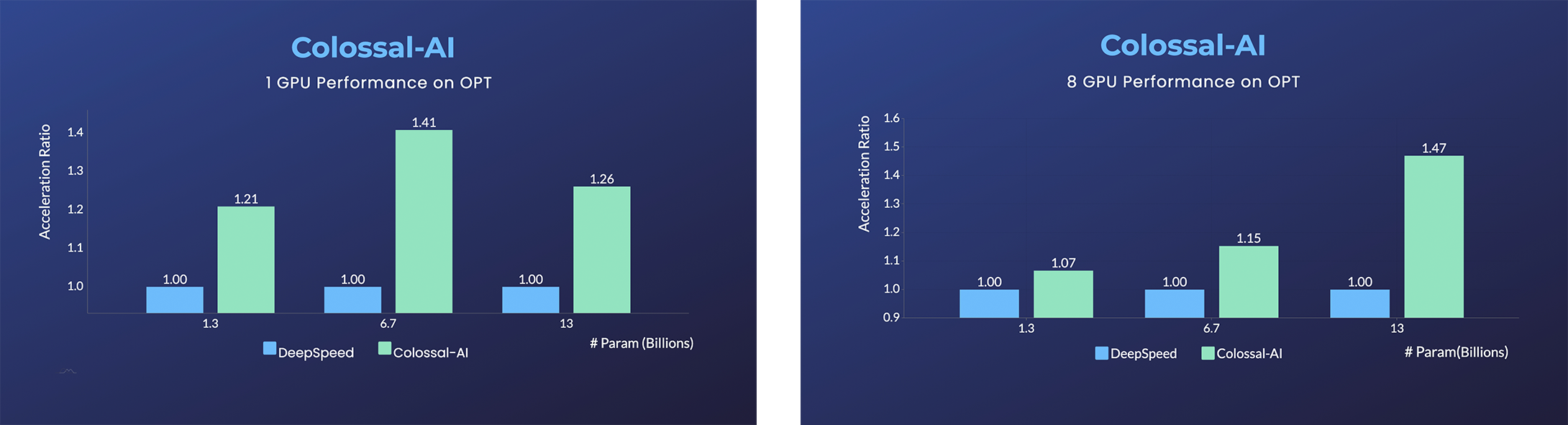

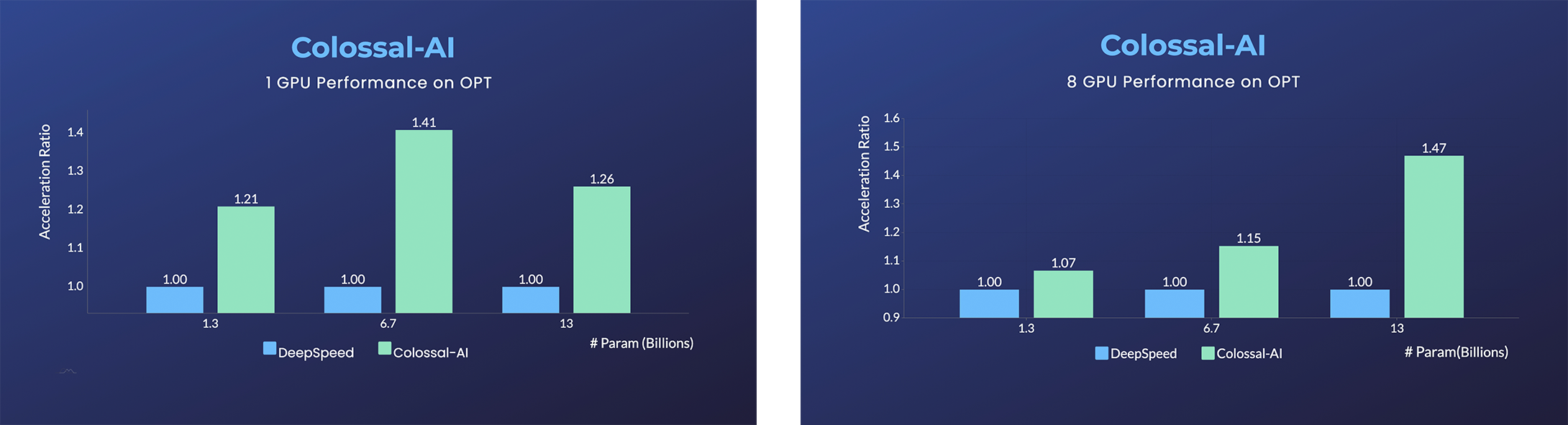

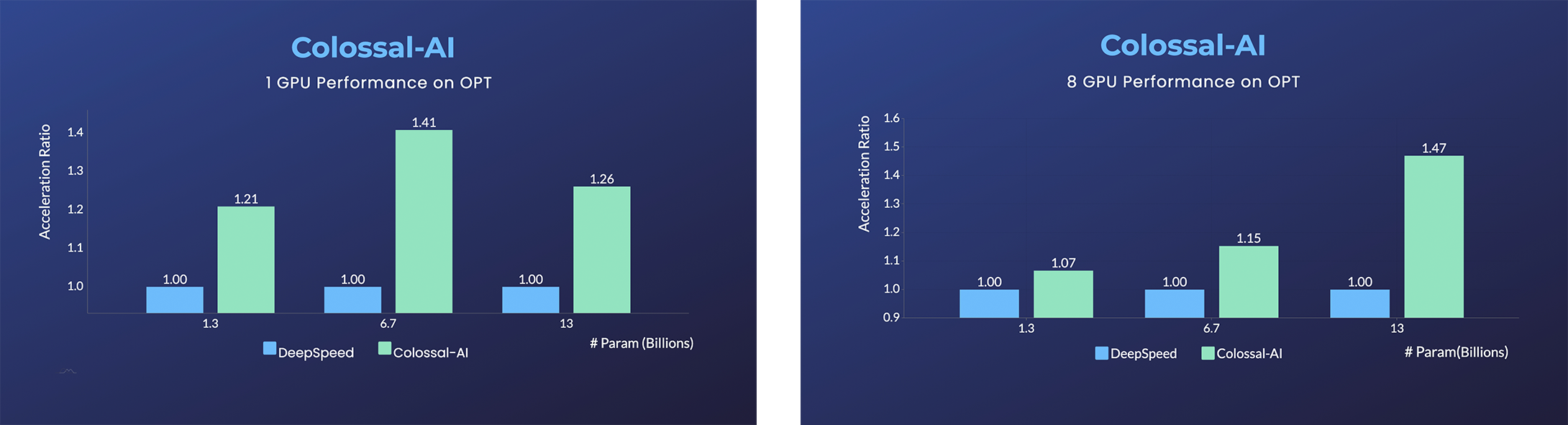

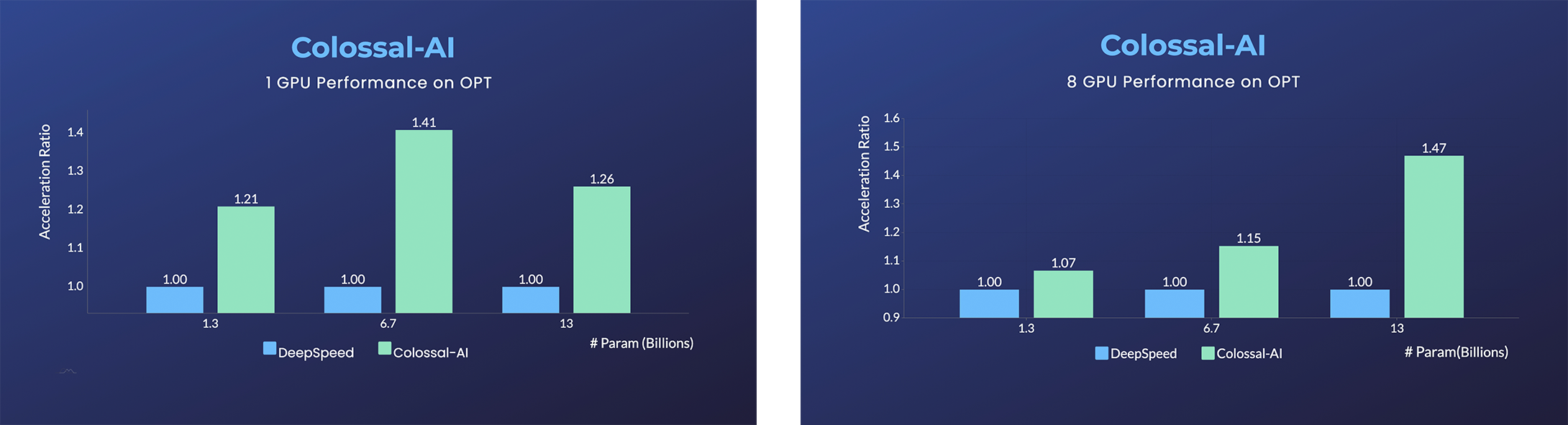

- [Open Pretrained Transformer (OPT)](https://github.com/facebookresearch/metaseq), 由Meta发布的1750亿语言模型,由于完全公开了预训练参数权重,因此促进了下游任务和应用部署的发展。

-- 加速45%,仅用几行代码以低成本微调OPT。[[样例]](https://github.com/hpcaitech/ColossalAI-Examples/tree/main/language/opt) [[在线推理]](https://service.colossalai.org/opt)

+- 加速45%,仅用几行代码以低成本微调OPT。[[样例]](https://github.com/hpcaitech/ColossalAI-Examples/tree/main/language/opt) [[在线推理]](https://github.com/hpcaitech/ColossalAI-Documentation/blob/main/i18n/zh-Hans/docusaurus-plugin-content-docs/current/advanced_tutorials/opt_service.md)

请访问我们的 [文档](https://www.colossalai.org/) 和 [例程](https://github.com/hpcaitech/ColossalAI-Examples) 以了解详情。

@@ -199,7 +199,7 @@ Colossal-AI 为您提供了一系列并行组件。我们的目标是让您的

- [Open Pretrained Transformer (OPT)](https://github.com/facebookresearch/metaseq), 由Meta发布的1750亿语言模型,由于完全公开了预训练参数权重,因此促进了下游任务和应用部署的发展。

-- 加速45%,仅用几行代码以低成本微调OPT。[[样例]](https://github.com/hpcaitech/ColossalAI-Examples/tree/main/language/opt) [[在线推理]](https://service.colossalai.org/opt)

+- 加速45%,仅用几行代码以低成本微调OPT。[[样例]](https://github.com/hpcaitech/ColossalAI-Examples/tree/main/language/opt) [[在线推理]](https://github.com/hpcaitech/ColossalAI-Documentation/blob/main/i18n/zh-Hans/docusaurus-plugin-content-docs/current/advanced_tutorials/opt_service.md)

请访问我们的 [文档](https://www.colossalai.org/) 和 [例程](https://github.com/hpcaitech/ColossalAI-Examples) 以了解详情。

@@ -199,7 +199,7 @@ Colossal-AI 为您提供了一系列并行组件。我们的目标是让您的

-- [OPT推理服务](https://service.colossalai.org/opt): 无需注册,免费体验1750亿参数OPT在线推理服务

+- [OPT推理服务](https://github.com/hpcaitech/ColossalAI-Documentation/blob/main/i18n/zh-Hans/docusaurus-plugin-content-docs/current/advanced_tutorials/opt_service.md): 无需注册,免费体验1750亿参数OPT在线推理服务

-- [OPT推理服务](https://service.colossalai.org/opt): 无需注册,免费体验1750亿参数OPT在线推理服务

+- [OPT推理服务](https://github.com/hpcaitech/ColossalAI-Documentation/blob/main/i18n/zh-Hans/docusaurus-plugin-content-docs/current/advanced_tutorials/opt_service.md): 无需注册,免费体验1750亿参数OPT在线推理服务

@@ -255,6 +255,28 @@ Colossal-AI 为您提供了一系列并行组件。我们的目标是让您的

## 安装

+### 从PyPI安装

+

+您可以用下面的命令直接从PyPI上下载并安装Colossal-AI。我们默认不会安装PyTorch扩展包

+

+```bash

+pip install colossalai

+```

+

+但是,如果你想在安装时就直接构建PyTorch扩展,您可以设置环境变量`CUDA_EXT=1`.

+

+```bash

+CUDA_EXT=1 pip install colossalai

+```

+

+**否则,PyTorch扩展只会在你实际需要使用他们时在运行时里被构建。**

+

+与此同时,我们也每周定时发布Nightly版本,这能让你提前体验到新的feature和bug fix。你可以通过以下命令安装Nightly版本。

+

+```bash

+pip install colossalai-nightly

+```

+

### 从官方安装

您可以访问我们[下载](https://www.colossalai.org/download)页面来安装Colossal-AI,在这个页面上发布的版本都预编译了CUDA扩展。

@@ -274,10 +296,10 @@ pip install -r requirements/requirements.txt

pip install .

```

-如果您不想安装和启用 CUDA 内核融合(使用融合优化器时强制安装):

+我们默认在`pip install`时不安装PyTorch扩展,而是在运行时临时编译,如果你想要提前安装这些扩展的话(在使用融合优化器时会用到),可以使用一下命令。

```shell

-NO_CUDA_EXT=1 pip install .

+CUDA_EXT=1 pip install .

```

@@ -255,6 +255,28 @@ Colossal-AI 为您提供了一系列并行组件。我们的目标是让您的

## 安装

+### 从PyPI安装

+

+您可以用下面的命令直接从PyPI上下载并安装Colossal-AI。我们默认不会安装PyTorch扩展包

+

+```bash

+pip install colossalai

+```

+

+但是,如果你想在安装时就直接构建PyTorch扩展,您可以设置环境变量`CUDA_EXT=1`.

+

+```bash

+CUDA_EXT=1 pip install colossalai

+```

+

+**否则,PyTorch扩展只会在你实际需要使用他们时在运行时里被构建。**

+

+与此同时,我们也每周定时发布Nightly版本,这能让你提前体验到新的feature和bug fix。你可以通过以下命令安装Nightly版本。

+

+```bash

+pip install colossalai-nightly

+```

+

### 从官方安装

您可以访问我们[下载](https://www.colossalai.org/download)页面来安装Colossal-AI,在这个页面上发布的版本都预编译了CUDA扩展。

@@ -274,10 +296,10 @@ pip install -r requirements/requirements.txt

pip install .

```

-如果您不想安装和启用 CUDA 内核融合(使用融合优化器时强制安装):

+我们默认在`pip install`时不安装PyTorch扩展,而是在运行时临时编译,如果你想要提前安装这些扩展的话(在使用融合优化器时会用到),可以使用一下命令。

```shell

-NO_CUDA_EXT=1 pip install .

+CUDA_EXT=1 pip install .

```

(返回顶端)

@@ -327,6 +349,11 @@ docker run -ti --gpus all --rm --ipc=host colossalai bash

(返回顶端)

+## CI/CD

+

+我们使用[GitHub Actions](https://github.com/features/actions)来自动化大部分开发以及部署流程。如果想了解这些工作流是如何运行的,请查看这个[文档](.github/workflows/README.md).

+

+

## 引用我们

```

@@ -338,4 +365,6 @@ docker run -ti --gpus all --rm --ipc=host colossalai bash

}

```

+Colossal-AI 已被 [SC](https://sc22.supercomputing.org/), [AAAI](https://aaai.org/Conferences/AAAI-23/), [PPoPP](https://ppopp23.sigplan.org/) 等顶级会议录取为官方教程。

+

(返回顶端)

diff --git a/README.md b/README.md

index 1b0ca7e97..01e7b0ec5 100644

--- a/README.md

+++ b/README.md

@@ -149,7 +149,7 @@ distributed training and inference in a few lines.

- [Open Pretrained Transformer (OPT)](https://github.com/facebookresearch/metaseq), a 175-Billion parameter AI language model released by Meta, which stimulates AI programmers to perform various downstream tasks and application deployments because public pretrained model weights.

-- 45% speedup fine-tuning OPT at low cost in lines. [[Example]](https://github.com/hpcaitech/ColossalAI-Examples/tree/main/language/opt) [[Online Serving]](https://service.colossalai.org/opt)

+- 45% speedup fine-tuning OPT at low cost in lines. [[Example]](https://github.com/hpcaitech/ColossalAI-Examples/tree/main/language/opt) [[Online Serving]](https://github.com/hpcaitech/ColossalAI-Documentation/blob/main/i18n/en/docusaurus-plugin-content-docs/current/advanced_tutorials/opt_service.md)

Please visit our [documentation](https://www.colossalai.org/) and [examples](https://github.com/hpcaitech/ColossalAI-Examples) for more details.

@@ -202,7 +202,7 @@ Please visit our [documentation](https://www.colossalai.org/) and [examples](htt

- [Open Pretrained Transformer (OPT)](https://github.com/facebookresearch/metaseq), a 175-Billion parameter AI language model released by Meta, which stimulates AI programmers to perform various downstream tasks and application deployments because public pretrained model weights.

-- 45% speedup fine-tuning OPT at low cost in lines. [[Example]](https://github.com/hpcaitech/ColossalAI-Examples/tree/main/language/opt) [[Online Serving]](https://service.colossalai.org/opt)

+- 45% speedup fine-tuning OPT at low cost in lines. [[Example]](https://github.com/hpcaitech/ColossalAI-Examples/tree/main/language/opt) [[Online Serving]](https://github.com/hpcaitech/ColossalAI-Documentation/blob/main/i18n/en/docusaurus-plugin-content-docs/current/advanced_tutorials/opt_service.md)

Please visit our [documentation](https://www.colossalai.org/) and [examples](https://github.com/hpcaitech/ColossalAI-Examples) for more details.

@@ -202,7 +202,7 @@ Please visit our [documentation](https://www.colossalai.org/) and [examples](htt

-- [OPT Serving](https://service.colossalai.org/opt): Try 175-billion-parameter OPT online services for free, without any registration whatsoever.

+- [OPT Serving](https://github.com/hpcaitech/ColossalAI-Documentation/blob/main/i18n/en/docusaurus-plugin-content-docs/current/advanced_tutorials/opt_service.md): Try 175-billion-parameter OPT online services for free, without any registration whatsoever.

-- [OPT Serving](https://service.colossalai.org/opt): Try 175-billion-parameter OPT online services for free, without any registration whatsoever.

+- [OPT Serving](https://github.com/hpcaitech/ColossalAI-Documentation/blob/main/i18n/en/docusaurus-plugin-content-docs/current/advanced_tutorials/opt_service.md): Try 175-billion-parameter OPT online services for free, without any registration whatsoever.

@@ -257,9 +257,32 @@ Acceleration of [AlphaFold Protein Structure](https://alphafold.ebi.ac.uk/)

## Installation

+### Install from PyPI

+

+You can easily install Colossal-AI with the following command. **By defualt, we do not build PyTorch extensions during installation.**

+

+```bash

+pip install colossalai

+```

+

+However, if you want to build the PyTorch extensions during installation, you can set `CUDA_EXT=1`.

+

+```bash

+CUDA_EXT=1 pip install colossalai

+```

+

+**Otherwise, CUDA kernels will be built during runtime when you actually need it.**

+

+We also keep release the nightly version to PyPI on a weekly basis. This allows you to access the unreleased features and bug fixes in the main branch.

+Installation can be made via

+

+```bash

+pip install colossalai-nightly

+```

+

### Download From Official Releases

-You can visit the [Download](https://www.colossalai.org/download) page to download Colossal-AI with pre-built CUDA extensions.

+You can visit the [Download](https://www.colossalai.org/download) page to download Colossal-AI with pre-built PyTorch extensions.

### Download From Source

@@ -270,9 +293,6 @@ You can visit the [Download](https://www.colossalai.org/download) page to downlo

git clone https://github.com/hpcaitech/ColossalAI.git

cd ColossalAI

-# install dependency

-pip install -r requirements/requirements.txt

-

# install colossalai

pip install .

```

@@ -333,6 +353,11 @@ Thanks so much to all of our amazing contributors!

@@ -257,9 +257,32 @@ Acceleration of [AlphaFold Protein Structure](https://alphafold.ebi.ac.uk/)

## Installation

+### Install from PyPI

+

+You can easily install Colossal-AI with the following command. **By defualt, we do not build PyTorch extensions during installation.**

+

+```bash

+pip install colossalai

+```

+

+However, if you want to build the PyTorch extensions during installation, you can set `CUDA_EXT=1`.

+

+```bash

+CUDA_EXT=1 pip install colossalai

+```

+

+**Otherwise, CUDA kernels will be built during runtime when you actually need it.**

+

+We also keep release the nightly version to PyPI on a weekly basis. This allows you to access the unreleased features and bug fixes in the main branch.

+Installation can be made via

+

+```bash

+pip install colossalai-nightly

+```

+

### Download From Official Releases

-You can visit the [Download](https://www.colossalai.org/download) page to download Colossal-AI with pre-built CUDA extensions.

+You can visit the [Download](https://www.colossalai.org/download) page to download Colossal-AI with pre-built PyTorch extensions.

### Download From Source

@@ -270,9 +293,6 @@ You can visit the [Download](https://www.colossalai.org/download) page to downlo

git clone https://github.com/hpcaitech/ColossalAI.git

cd ColossalAI

-# install dependency

-pip install -r requirements/requirements.txt

-

# install colossalai

pip install .

```

@@ -333,6 +353,11 @@ Thanks so much to all of our amazing contributors!

(back to top)

+## CI/CD

+

+We leverage the power of [GitHub Actions](https://github.com/features/actions) to automate our development, release and deployment workflows. Please check out this [documentation](.github/workflows/README.md) on how the automated workflows are operated.

+

+

## Cite Us

```

@@ -344,4 +369,6 @@ Thanks so much to all of our amazing contributors!

}

```

+Colossal-AI has been accepted as official tutorials by top conference [SC](https://sc22.supercomputing.org/), [AAAI](https://aaai.org/Conferences/AAAI-23/), [PPoPP](https://ppopp23.sigplan.org/), etc.

+

(back to top)

diff --git a/colossalai/auto_parallel/passes/runtime_apply_pass.py b/colossalai/auto_parallel/passes/runtime_apply_pass.py

index 7f2aac42b..9d83f1057 100644

--- a/colossalai/auto_parallel/passes/runtime_apply_pass.py

+++ b/colossalai/auto_parallel/passes/runtime_apply_pass.py

@@ -128,6 +128,8 @@ def _shape_consistency_apply(gm: torch.fx.GraphModule):

runtime_apply,

args=(node, origin_dict_node, input_dict_node,

node_to_index_dict[node], user_node_index))

+ if 'activation_checkpoint' in user_node.meta:

+ shape_consistency_node.meta['activation_checkpoint'] = user_node.meta['activation_checkpoint']

new_args = list(user_node.args)

new_kwargs = dict(user_node.kwargs)

@@ -208,6 +210,37 @@ def _comm_spec_apply(gm: torch.fx.GraphModule):

# substitute the origin node with comm_spec_apply_node

new_kwargs[str(node)] = comm_spec_apply_node

user.kwargs = new_kwargs

+

+ if 'activation_checkpoint' in node.meta:

+ comm_spec_apply_node.meta['activation_checkpoint'] = node.meta['activation_checkpoint']

+

+ return gm

+

+

+def _act_annotataion_pass(gm: torch.fx.GraphModule):

+ """

+ This pass is used to add the act annotation to the new inserted nodes.

+ """

+ mod_graph = gm.graph

+ nodes = tuple(mod_graph.nodes)

+

+ for node in nodes:

+ if not hasattr(node.meta, 'activation_checkpoint'):

+ from .runtime_preparation_pass import size_processing

+

+ user_act_annotation = -1

+ input_act_annotation = -1

+ for user_node in node.users.keys():

+ if 'activation_checkpoint' in user_node.meta:

+ user_act_annotation = user_node.meta['activation_checkpoint']

+ break

+ for input_node in node._input_nodes.keys():

+ if 'activation_checkpoint' in input_node.meta:

+ input_act_annotation = input_node.meta['activation_checkpoint']

+ break

+ if user_act_annotation == input_act_annotation and user_act_annotation != -1:

+ node.meta['activation_checkpoint'] = user_act_annotation

+

return gm

diff --git a/colossalai/auto_parallel/passes/runtime_preparation_pass.py b/colossalai/auto_parallel/passes/runtime_preparation_pass.py

index f9b890263..1c25e4c94 100644

--- a/colossalai/auto_parallel/passes/runtime_preparation_pass.py

+++ b/colossalai/auto_parallel/passes/runtime_preparation_pass.py

@@ -179,6 +179,8 @@ def _size_value_converting(gm: torch.fx.GraphModule, device_mesh: DeviceMesh):

# It will be used to replace the original node with processing node in slice object

node_pairs[node] = size_processing_node

size_processing_node._meta_data = node._meta_data

+ if 'activation_checkpoint' in node.meta:

+ size_processing_node.meta['activation_checkpoint'] = node.meta['activation_checkpoint']

user_list = list(node.users.keys())

for user in user_list:

diff --git a/colossalai/auto_parallel/tensor_shard/initialize.py b/colossalai/auto_parallel/tensor_shard/initialize.py

index 0dce2564c..387a682a1 100644

--- a/colossalai/auto_parallel/tensor_shard/initialize.py

+++ b/colossalai/auto_parallel/tensor_shard/initialize.py

@@ -18,6 +18,7 @@ from colossalai.auto_parallel.tensor_shard.solver import (

)

from colossalai.device.alpha_beta_profiler import AlphaBetaProfiler

from colossalai.device.device_mesh import DeviceMesh

+from colossalai.fx.graph_module import ColoGraphModule

from colossalai.fx.tracer import ColoTracer

from colossalai.tensor.sharding_spec import ShardingSpec

@@ -28,7 +29,7 @@ class ModuleWrapper(nn.Module):

into the forward function.

'''

- def __init__(self, module: GraphModule, sharding_spec_dict: Dict[int, List[ShardingSpec]],

+ def __init__(self, module: ColoGraphModule, sharding_spec_dict: Dict[int, List[ShardingSpec]],

origin_spec_dict: Dict[int, ShardingSpec], comm_actions_dict: Dict[int, Dict[str, CommAction]]):

'''

Args:

@@ -59,18 +60,6 @@ def extract_meta_args_from_dataloader(data_loader: torch.utils.data.DataLoader,

pass

-def search_best_logical_mesh_shape(world_size: int, alpha_beta_dict: Dict[Tuple[int], Tuple[float]]):

- '''

- This method is used to search the best logical mesh shape for the given world size

- based on the alpha_beta_dict.

-

- For example:

- if the world_size is 8, and the possible logical shape will be (1, 8), (2, 4), (4, 2), (8, 1).

- '''

- # TODO: implement this function

- return (world_size, 1)

-

-

def extract_alpha_beta_for_device_mesh(alpha_beta_dict: Dict[Tuple[int], Tuple[float]], logical_mesh_shape: Tuple[int]):

'''

This method is used to extract the mesh_alpha and mesh_beta for the given logical_mesh_shape

@@ -93,7 +82,7 @@ def build_strategy_constructor(graph: Graph, device_mesh: DeviceMesh):

return strategies_constructor

-def solve_solution(gm: GraphModule, strategy_constructor: StrategiesConstructor, memory_budget: float = -1.0):

+def solve_solution(gm: ColoGraphModule, strategy_constructor: StrategiesConstructor, memory_budget: float = -1.0):

'''

This method is used to solve the best solution for the given graph.

The solution is a list of integers, each integer represents the best strategy index of the corresponding node.

@@ -109,7 +98,7 @@ def solve_solution(gm: GraphModule, strategy_constructor: StrategiesConstructor,

return solution

-def transform_to_sharded_model(gm: GraphModule, solution: List[int], device_mesh: DeviceMesh,

+def transform_to_sharded_model(gm: ColoGraphModule, solution: List[int], device_mesh: DeviceMesh,

strategies_constructor: StrategiesConstructor):

'''

This method is used to transform the original graph to the sharded graph.

@@ -127,39 +116,56 @@ def transform_to_sharded_model(gm: GraphModule, solution: List[int], device_mesh

def initialize_device_mesh(world_size: int = -1,

+ physical_devices: List[int] = None,

alpha_beta_dict: Dict[Tuple[int], Tuple[float]] = None,

- logical_mesh_shape: Tuple[int] = None):

+ logical_mesh_shape: Tuple[int] = None,

+ logical_mesh_id: torch.Tensor = None):

'''

This method is used to initialize the device mesh.

Args:

- world_size(optional): the size of device mesh. If the world_size is -1,

+ world_size: the size of device mesh. If the world_size is -1,

the world size will be set to the number of GPUs in the current machine.

+ physical_devices: the physical devices used to initialize the device mesh.

alpha_beta_dict(optional): the alpha_beta_dict contains the alpha and beta values

for each devices. if the alpha_beta_dict is None, the alpha_beta_dict will be

generated by profile_alpha_beta function.

logical_mesh_shape(optional): the logical_mesh_shape is used to specify the logical

- mesh shape. If the logical_mesh_shape is None, the logical_mesh_shape will be

- generated by search_best_logical_mesh_shape function.

+ mesh shape.

+ logical_mesh_id(optional): the logical_mesh_id is used to specify the logical mesh id.

'''

# if world_size is not set, use the world size from torch.distributed

if world_size == -1:

world_size = dist.get_world_size()

- device1d = [i for i in range(world_size)]

+

+ if physical_devices is None:

+ physical_devices = [i for i in range(world_size)]

+ physical_mesh = torch.tensor(physical_devices)

if alpha_beta_dict is None:

# if alpha_beta_dict is not given, use a series of executions to profile alpha and beta values for each device

- alpha_beta_dict = profile_alpha_beta(device1d)

+ ab_profiler = AlphaBetaProfiler(physical_devices)

+ alpha_beta_dict = ab_profiler.alpha_beta_dict

+ else:

+ ab_profiler = AlphaBetaProfiler(physical_devices, alpha_beta_dict=alpha_beta_dict)

- if logical_mesh_shape is None:

+ if logical_mesh_shape is None and logical_mesh_id is None:

# search for the best logical mesh shape

- logical_mesh_shape = search_best_logical_mesh_shape(world_size, alpha_beta_dict)

+ logical_mesh_id = ab_profiler.search_best_logical_mesh()

+ logical_mesh_id = torch.Tensor(logical_mesh_id).to(torch.int)

+ logical_mesh_shape = logical_mesh_id.shape

+

+ # extract alpha and beta values for the chosen logical mesh shape

+ mesh_alpha, mesh_beta = ab_profiler.extract_alpha_beta_for_device_mesh()

+

+ elif logical_mesh_shape is not None and logical_mesh_id is None:

+ logical_mesh_id = physical_mesh.reshape(logical_mesh_shape)

+

+ # extract alpha and beta values for the chosen logical mesh shape

+ mesh_alpha, mesh_beta = extract_alpha_beta_for_device_mesh(alpha_beta_dict, logical_mesh_id)

- # extract alpha and beta values for the chosen logical mesh shape

- mesh_alpha, mesh_beta = extract_alpha_beta_for_device_mesh(alpha_beta_dict, logical_mesh_shape)

- physical_mesh = torch.tensor(device1d)

device_mesh = DeviceMesh(physical_mesh_id=physical_mesh,

- mesh_shape=logical_mesh_shape,

+ logical_mesh_id=logical_mesh_id,

mesh_alpha=mesh_alpha,

mesh_beta=mesh_beta,

init_process_group=True)

@@ -192,10 +198,10 @@ def initialize_model(model: nn.Module,

solution will be used to debug or help to analyze the sharding result. Therefore, we will not just

return a series of integers, but return the best strategies.

'''

- tracer = ColoTracer()

+ tracer = ColoTracer(trace_act_ckpt=True)

graph = tracer.trace(root=model, meta_args=meta_args)

- gm = GraphModule(model, graph, model.__class__.__name__)

+ gm = ColoGraphModule(model, graph, model.__class__.__name__)

gm.recompile()

strategies_constructor = build_strategy_constructor(graph, device_mesh)

if load_solver_solution:

@@ -224,6 +230,7 @@ def autoparallelize(model: nn.Module,

data_process_func: callable = None,

alpha_beta_dict: Dict[Tuple[int], Tuple[float]] = None,

logical_mesh_shape: Tuple[int] = None,

+ logical_mesh_id: torch.Tensor = None,

save_solver_solution: bool = False,

load_solver_solution: bool = False,

solver_solution_path: str = None,

@@ -245,6 +252,7 @@ def autoparallelize(model: nn.Module,

logical_mesh_shape(optional): the logical_mesh_shape is used to specify the logical

mesh shape. If the logical_mesh_shape is None, the logical_mesh_shape will be

generated by search_best_logical_mesh_shape function.

+ logical_mesh_id(optional): the logical_mesh_id is used to specify the logical mesh id.

save_solver_solution(optional): if the save_solver_solution is True, the solution will be saved

to the solution_path.

load_solver_solution(optional): if the load_solver_solution is True, the solution will be loaded

@@ -254,7 +262,9 @@ def autoparallelize(model: nn.Module,

memory_budget(optional): the max cuda memory could be used. If the memory budget is -1.0,

the memory budget will be infinity.

'''

- device_mesh = initialize_device_mesh(alpha_beta_dict=alpha_beta_dict, logical_mesh_shape=logical_mesh_shape)

+ device_mesh = initialize_device_mesh(alpha_beta_dict=alpha_beta_dict,

+ logical_mesh_shape=logical_mesh_shape,

+ logical_mesh_id=logical_mesh_id)

if meta_args is None:

meta_args = extract_meta_args_from_dataloader(data_loader, data_process_func)

@@ -263,7 +273,7 @@ def autoparallelize(model: nn.Module,

device_mesh,

save_solver_solution=save_solver_solution,

load_solver_solution=load_solver_solution,

- solver_solution_path=solver_solution_path,

+ solution_path=solver_solution_path,

return_solution=return_solution,

memory_budget=memory_budget)

diff --git a/colossalai/auto_parallel/tensor_shard/node_handler/__init__.py b/colossalai/auto_parallel/tensor_shard/node_handler/__init__.py

index a5e3f649a..87bd8966b 100644

--- a/colossalai/auto_parallel/tensor_shard/node_handler/__init__.py

+++ b/colossalai/auto_parallel/tensor_shard/node_handler/__init__.py

@@ -11,6 +11,7 @@ from .layer_norm_handler import LayerNormModuleHandler

from .linear_handler import LinearFunctionHandler, LinearModuleHandler

from .matmul_handler import MatMulHandler

from .normal_pooling_handler import NormPoolingHandler

+from .option import ShardOption

from .output_handler import OutputHandler

from .placeholder_handler import PlaceholderHandler

from .registry import operator_registry

@@ -27,5 +28,5 @@ __all__ = [

'UnaryElementwiseHandler', 'ReshapeHandler', 'PlaceholderHandler', 'OutputHandler', 'WhereHandler',

'NormPoolingHandler', 'BinaryElementwiseHandler', 'MatMulHandler', 'operator_registry', 'ADDMMFunctionHandler',

'GetItemHandler', 'GetattrHandler', 'ViewHandler', 'PermuteHandler', 'TensorConstructorHandler',

- 'EmbeddingModuleHandler', 'EmbeddingFunctionHandler', 'SumHandler', 'SoftmaxHandler'

+ 'EmbeddingModuleHandler', 'EmbeddingFunctionHandler', 'SumHandler', 'SoftmaxHandler', 'ShardOption'

]

diff --git a/colossalai/auto_parallel/tensor_shard/node_handler/binary_elementwise_handler.py b/colossalai/auto_parallel/tensor_shard/node_handler/binary_elementwise_handler.py

index f510f7477..db8f0b54d 100644

--- a/colossalai/auto_parallel/tensor_shard/node_handler/binary_elementwise_handler.py

+++ b/colossalai/auto_parallel/tensor_shard/node_handler/binary_elementwise_handler.py

@@ -32,20 +32,32 @@ class BinaryElementwiseHandler(MetaInfoNodeHandler):

return OperationDataType.ARG

def _get_arg_value(idx):

+ non_tensor = False

if isinstance(self.node.args[idx], Node):

meta_data = self.node.args[idx]._meta_data

+ # The meta_data of node type argument could also possibly be a non-tensor object.

+ if not isinstance(meta_data, torch.Tensor):

+ assert isinstance(meta_data, (int, float))

+ meta_data = torch.Tensor([meta_data]).to('meta')

+ non_tensor = True

+

else:

# this is in fact a real data like int 1

# but we can deem it as meta data

# as it won't affect the strategy generation

assert isinstance(self.node.args[idx], (int, float))

meta_data = torch.Tensor([self.node.args[idx]]).to('meta')

- return meta_data

+ non_tensor = True

- input_meta_data = _get_arg_value(0)

- other_meta_data = _get_arg_value(1)

+ return meta_data, non_tensor

+

+ input_meta_data, non_tensor_input = _get_arg_value(0)

+ other_meta_data, non_tensor_other = _get_arg_value(1)

output_meta_data = self.node._meta_data

-

+ # we need record op_data with non-tensor data in this list,

+ # and filter the non-tensor op_data in post_process.

+ self.non_tensor_list = []

+ # assert False

input_op_data = OperationData(name=str(self.node.args[0]),

type=_get_op_data_type(input_meta_data),

data=input_meta_data,

@@ -58,6 +70,10 @@ class BinaryElementwiseHandler(MetaInfoNodeHandler):

type=OperationDataType.OUTPUT,

data=output_meta_data,

logical_shape=bcast_shape)

+ if non_tensor_input:

+ self.non_tensor_list.append(input_op_data)

+ if non_tensor_other:

+ self.non_tensor_list.append(other_op_data)

mapping = {'input': input_op_data, 'other': other_op_data, 'output': output_op_data}

return mapping

@@ -73,9 +89,10 @@ class BinaryElementwiseHandler(MetaInfoNodeHandler):

op_data_mapping = self.get_operation_data_mapping()

for op_name, op_data in op_data_mapping.items():

- if not isinstance(op_data.data, torch.Tensor):

+ if op_data in self.non_tensor_list:

# remove the sharding spec if the op_data is not a tensor, e.g. torch.pow(tensor, 2)

strategy.sharding_specs.pop(op_data)

+

else:

# convert the logical sharding spec to physical sharding spec if broadcast

# e.g. torch.rand(4, 4) + torch.rand(4)

diff --git a/colossalai/auto_parallel/tensor_shard/node_handler/node_handler.py b/colossalai/auto_parallel/tensor_shard/node_handler/node_handler.py

index 78dc58c90..fbab2b61e 100644

--- a/colossalai/auto_parallel/tensor_shard/node_handler/node_handler.py

+++ b/colossalai/auto_parallel/tensor_shard/node_handler/node_handler.py

@@ -5,6 +5,7 @@ import torch

from torch.fx.node import Node

from colossalai.auto_parallel.meta_profiler.metainfo import MetaInfo, meta_register

+from colossalai.auto_parallel.tensor_shard.node_handler.option import ShardOption

from colossalai.auto_parallel.tensor_shard.sharding_strategy import (

OperationData,

OperationDataType,

@@ -35,12 +36,14 @@ class NodeHandler(ABC):

node: Node,

device_mesh: DeviceMesh,

strategies_vector: StrategiesVector,

+ shard_option: ShardOption = ShardOption.STANDARD,

) -> None:

self.node = node

self.predecessor_node = list(node._input_nodes.keys())

self.successor_node = list(node.users.keys())

self.device_mesh = device_mesh

self.strategies_vector = strategies_vector

+ self.shard_option = shard_option

def update_resharding_cost(self, strategy: ShardingStrategy) -> None:

"""

@@ -181,6 +184,21 @@ class NodeHandler(ABC):

if op_data.data is not None and isinstance(op_data.data, torch.Tensor):

check_sharding_spec_validity(sharding_spec, op_data.data)

+ remove_strategy_list = []

+ for strategy in self.strategies_vector:

+ shard_level = 0

+ for op_data, sharding_spec in strategy.sharding_specs.items():

+ if op_data.data is not None and isinstance(op_data.data, torch.Tensor):

+ for dim, shard_axis in sharding_spec.dim_partition_dict.items():

+ shard_level += len(shard_axis)

+ if self.shard_option == ShardOption.SHARD and shard_level == 0:

+ remove_strategy_list.append(strategy)

+ if self.shard_option == ShardOption.FULL_SHARD and shard_level <= 1:

+ remove_strategy_list.append(strategy)

+

+ for strategy in remove_strategy_list:

+ self.strategies_vector.remove(strategy)

+

return self.strategies_vector

def post_process(self, strategy: ShardingStrategy) -> Union[ShardingStrategy, List[ShardingStrategy]]:

diff --git a/colossalai/auto_parallel/tensor_shard/node_handler/option.py b/colossalai/auto_parallel/tensor_shard/node_handler/option.py

new file mode 100644

index 000000000..dffb0386d

--- /dev/null

+++ b/colossalai/auto_parallel/tensor_shard/node_handler/option.py

@@ -0,0 +1,17 @@

+from enum import Enum

+

+__all__ = ['ShardOption']

+

+

+class ShardOption(Enum):

+ """

+ This enum class is to define the shard level required in node strategies.

+

+ Notes:

+ STANDARD: We do not add any extra shard requirements.

+ SHARD: We require the node to be shard using at least one device mesh axis.

+ FULL_SHARD: We require the node to be shard using all device mesh axes.

+ """

+ STANDARD = 0

+ SHARD = 1

+ FULL_SHARD = 2

diff --git a/colossalai/autochunk/autochunk_codegen.py b/colossalai/autochunk/autochunk_codegen.py

new file mode 100644

index 000000000..8c3155a60

--- /dev/null

+++ b/colossalai/autochunk/autochunk_codegen.py

@@ -0,0 +1,523 @@

+from typing import Any, Dict, Iterable, List, Tuple

+

+import torch

+

+import colossalai

+from colossalai.fx.codegen.activation_checkpoint_codegen import CODEGEN_AVAILABLE

+

+if CODEGEN_AVAILABLE:

+ from torch.fx.graph import (

+ CodeGen,

+ PythonCode,

+ _custom_builtins,

+ _CustomBuiltin,

+ _format_target,

+ _is_from_torch,

+ _Namespace,

+ _origin_type_map,

+ inplace_methods,

+ magic_methods,

+ )

+

+from torch.fx.node import Argument, Node, _get_qualified_name, _type_repr, map_arg

+

+from .search_chunk import SearchChunk

+from .utils import delete_free_var_from_last_use, find_idx_by_name, get_logger, get_node_shape

+

+

+def _gen_chunk_slice_dim(chunk_dim: int, chunk_indice_name: str, shape: List) -> str:

+ """

+ Generate chunk slice string, eg. [:, :, chunk_idx_name:chunk_idx_name + chunk_size, :]

+

+ Args:

+ chunk_dim (int)

+ chunk_indice_name (str): chunk indice name

+ shape (List): node shape

+

+ Returns:

+ new_shape (str): return slice

+ """

+ new_shape = "["

+ for idx, _ in enumerate(shape):

+ if idx == chunk_dim:

+ new_shape += "%s:%s + chunk_size" % (chunk_indice_name, chunk_indice_name)

+ else:

+ new_shape += ":"

+ new_shape += ", "

+ new_shape = new_shape[:-2] + "]"

+ return new_shape

+

+

+def _gen_loop_start(chunk_input: List[Node], chunk_output: Node, chunk_ouput_dim: int, chunk_size=2) -> str:

+ """

+ Generate chunk loop start

+

+ eg. chunk_result = torch.empty([100, 100], dtype=input_node.dtype, device=input_node.device)

+ chunk_size = 32

+ for chunk_idx in range(0, 100, 32):

+ ......

+

+ Args:

+ chunk_input (List[Node]): chunk input node

+ chunk_output (Node): chunk output node

+ chunk_ouput_dim (int): chunk output node chunk dim

+ chunk_size (int): chunk size. Defaults to 2.

+

+ Returns:

+ context (str): generated str

+ """

+ input_node = chunk_input[0]

+ out_shape = get_node_shape(chunk_output)

+ out_str = str(list(out_shape))

+ context = (

+ "chunk_result = torch.empty(%s, dtype=%s.dtype, device=%s.device); chunk_size = %d\nfor chunk_idx in range" %

+ (out_str, input_node.name, input_node.name, chunk_size))

+ context += "(0, %d, chunk_size):\n" % (out_shape[chunk_ouput_dim])

+ return context

+

+

+def _gen_loop_end(

+ chunk_inputs: List[Node],

+ chunk_non_compute_inputs: List[Node],

+ chunk_outputs: Node,

+ chunk_outputs_dim: int,

+ node_list: List[Node],

+) -> str:

+ """

+ Generate chunk loop end

+

+ eg. chunk_result[chunk_idx:chunk_idx + chunk_size] = output_node

+ output_node = chunk_result; xx = None; xx = None

+

+ Args:

+ chunk_inputs (List[Node]): chunk input node

+ chunk_non_compute_inputs (List[Node]): input node without chunk

+ chunk_outputs (Node): chunk output node

+ chunk_outputs_dim (int): chunk output node chunk dim

+ node_list (List)

+

+ Returns:

+ context (str): generated str

+ """

+ chunk_outputs_name = chunk_outputs.name

+ chunk_outputs_idx = find_idx_by_name(chunk_outputs_name, node_list)

+ chunk_output_shape = chunk_outputs.meta["tensor_meta"].shape

+ chunk_slice = _gen_chunk_slice_dim(chunk_outputs_dim, "chunk_idx", chunk_output_shape)

+ context = " chunk_result%s = %s; %s = None\n" % (

+ chunk_slice,

+ chunk_outputs_name,

+ chunk_outputs_name,

+ )

+ context += (chunk_outputs_name + " = chunk_result; chunk_result = None; chunk_size = None")

+

+ # determine if its the last use for chunk input

+ for chunk_input in chunk_inputs + chunk_non_compute_inputs:

+ if all([find_idx_by_name(user.name, node_list) <= chunk_outputs_idx for user in chunk_input.users.keys()]):

+ context += "; %s = None" % chunk_input.name

+

+ context += "\n"

+ return context

+

+

+def _replace_name(context: str, name_from: str, name_to: str) -> str:

+ """

+ replace node name

+ """

+ patterns = [(" ", " "), (" ", "."), (" ", ","), ("(", ")"), ("(", ","), (" ", ")"), (" ", ""), ("", " ")]

+ for p in patterns:

+ source = p[0] + name_from + p[1]

+ target = p[0] + name_to + p[1]

+ if source in context:

+ context = context.replace(source, target)

+ break

+ return context

+

+

+def _replace_reshape_size(context: str, node_name: str, reshape_size_dict: Dict) -> str:

+ """

+ replace reshape size, some may have changed due to chunk

+ """

+ if node_name not in reshape_size_dict:

+ return context

+ context = context.replace(reshape_size_dict[node_name][0], reshape_size_dict[node_name][1])

+ return context

+

+

+def _replace_ones_like(

+ search_chunk: SearchChunk,

+ chunk_infos: List[Dict],

+ region_idx: int,

+ node_idx: int,

+ node: Node,

+ body: List[str],

+) -> List[str]:

+ """

+ add chunk slice for new tensor op such as ones like

+ """

+ if "ones_like" in node.name:

+ meta_node = search_chunk.trace_indice.node_list[node_idx]

+ chunk_dim = chunk_infos[region_idx]["node_chunk_dim"][meta_node]["chunk_dim"]

+ if get_node_shape(meta_node)[chunk_dim] != 1:

+ source_node = meta_node.args[0].args[0]

+ if (source_node not in chunk_infos[region_idx]["node_chunk_dim"]

+ or chunk_infos[region_idx]["node_chunk_dim"][source_node]["chunk_dim"] is None):

+ chunk_slice = _gen_chunk_slice_dim(chunk_dim, "chunk_idx", get_node_shape(node))

+ body[-1] = _replace_name(body[-1], node.args[0].name, node.args[0].name + chunk_slice)

+ return body

+

+

+def _replace_input_node(

+ chunk_inputs: List[Node],

+ region_idx: int,

+ chunk_inputs_dim: Dict,

+ node_idx: int,

+ body: List[str],

+) -> List[str]:

+ """

+ add chunk slice for input nodes

+ """

+ for input_node_idx, input_node in enumerate(chunk_inputs[region_idx]):

+ for idx, dim in chunk_inputs_dim[region_idx][input_node_idx].items():

+ if idx == node_idx:

+ chunk_slice = _gen_chunk_slice_dim(dim[0], "chunk_idx", get_node_shape(input_node))

+ body[-1] = _replace_name(body[-1], input_node.name, input_node.name + chunk_slice)

+ return body

+

+

+def emit_code_with_chunk(

+ body: List[str],

+ nodes: Iterable[Node],

+ emit_node_func,

+ delete_unused_value_func,

+ search_chunk: SearchChunk,

+ chunk_infos: List,

+):

+ """

+ Emit code with chunk according to chunk_infos.

+

+ It will generate a for loop in chunk regions, and

+ replace inputs and outputs of regions with chunked variables.

+

+ Args:

+ body: forward code

+ nodes: graph.nodes

+ emit_node_func: function to emit node

+ delete_unused_value_func: function to remove the unused value

+ search_chunk: the class to search all chunks

+ chunk_infos: store all information about all chunks.

+ """

+ node_list = list(nodes)

+

+ # chunk region

+ chunk_starts = [i["region"][0] for i in chunk_infos]

+ chunk_ends = [i["region"][1] for i in chunk_infos]

+

+ # chunk inputs

+ chunk_inputs = [i["inputs"] for i in chunk_infos] # input with chunk

+ chunk_inputs_non_chunk = [i["inputs_non_chunk"] for i in chunk_infos] # input without chunk

+ chunk_inputs_dim = [i["inputs_dim"] for i in chunk_infos] # input chunk dim

+ chunk_inputs_names = [j.name for i in chunk_inputs for j in i] + [j.name for i in chunk_inputs_non_chunk for j in i]

+

+ # chunk outputs

+ chunk_outputs = [i["outputs"][0] for i in chunk_infos]

+ chunk_outputs_dim = [i["outputs_dim"] for i in chunk_infos]

+

+ node_list = search_chunk.reorder_graph.reorder_node_list(node_list)

+ node_idx = 0

+ region_idx = 0

+ within_chunk_region = False

+

+ while node_idx < len(node_list):

+ node = node_list[node_idx]

+

+ # if is chunk start, generate for loop start

+ if node_idx in chunk_starts:

+ within_chunk_region = True

+ region_idx = chunk_starts.index(node_idx)

+ body.append(

+ _gen_loop_start(

+ chunk_inputs[region_idx],

+ chunk_outputs[region_idx],

+ chunk_outputs_dim[region_idx],

+ chunk_infos[region_idx]["chunk_size"],

+ ))

+

+ if within_chunk_region:

+ emit_node_func(node, body)

+ # replace input var with chunk var

+ body = _replace_input_node(chunk_inputs, region_idx, chunk_inputs_dim, node_idx, body)

+ # ones like

+ body = _replace_ones_like(search_chunk, chunk_infos, region_idx, node_idx, node, body)

+ # reassgin reshape size

+ body[-1] = _replace_reshape_size(body[-1], node.name, chunk_infos[region_idx]["reshape_size"])

+ body[-1] = " " + body[-1]

+ delete_unused_value_func(node, body, chunk_inputs_names)

+ else:

+ emit_node_func(node, body)

+ if node_idx not in chunk_inputs:

+ delete_unused_value_func(node, body, chunk_inputs_names)

+

+ # generate chunk region end

+ if node_idx in chunk_ends:

+ body.append(

+ _gen_loop_end(

+ chunk_inputs[region_idx],

+ chunk_inputs_non_chunk[region_idx],

+ chunk_outputs[region_idx],

+ chunk_outputs_dim[region_idx],

+ node_list,

+ ))

+ within_chunk_region = False

+

+ node_idx += 1

+

+

+if CODEGEN_AVAILABLE:

+

+ class AutoChunkCodeGen(CodeGen):

+

+ def __init__(self,

+ meta_graph,

+ max_memory: int = None,

+ print_mem: bool = False,

+ print_progress: bool = False) -> None:

+ super().__init__()

+ # find the chunk regions

+ self.search_chunk = SearchChunk(meta_graph, max_memory, print_mem, print_progress)

+ self.chunk_infos = self.search_chunk.search_region()

+ if print_progress:

+ get_logger().info("AutoChunk start codegen")

+

+ def _gen_python_code(self, nodes, root_module: str, namespace: _Namespace) -> PythonCode:

+ free_vars: List[str] = []

+ body: List[str] = []

+ globals_: Dict[str, Any] = {}

+ wrapped_fns: Dict[str, None] = {}

+

+ # Wrap string in list to pass by reference

+ maybe_return_annotation: List[str] = [""]

+

+ def add_global(name_hint: str, obj: Any):

+ """Add an obj to be tracked as a global.

+

+ We call this for names that reference objects external to the

+ Graph, like functions or types.

+

+ Returns: the global name that should be used to reference 'obj' in generated source.

+ """

+ if (_is_from_torch(obj) and obj != torch.device): # to support registering torch.device

+ # HACK: workaround for how torch custom ops are registered. We

+ # can't import them like normal modules so they must retain their

+ # fully qualified name.

+ return _get_qualified_name(obj)

+

+ # normalize the name hint to get a proper identifier

+ global_name = namespace.create_name(name_hint, obj)

+

+ if global_name in globals_:

+ assert globals_[global_name] is obj

+ return global_name

+ globals_[global_name] = obj

+ return global_name

+

+ # set _custom_builtins here so that we needn't import colossalai in forward

+ _custom_builtins["colossalai"] = _CustomBuiltin("import colossalai", colossalai)

+

+ # Pre-fill the globals table with registered builtins.

+ for name, (_, obj) in _custom_builtins.items():

+ add_global(name, obj)

+

+ def type_repr(o: Any):

+ if o == ():

+ # Empty tuple is used for empty tuple type annotation Tuple[()]

+ return "()"

+

+ typename = _type_repr(o)

+

+ if hasattr(o, "__origin__"):

+ # This is a generic type, e.g. typing.List[torch.Tensor]

+ origin_type = _origin_type_map.get(o.__origin__, o.__origin__)

+ origin_typename = add_global(_type_repr(origin_type), origin_type)

+

+ if hasattr(o, "__args__"):

+ # Assign global names for each of the inner type variables.

+ args = [type_repr(arg) for arg in o.__args__]

+

+ if len(args) == 0:

+ # Bare type, such as `typing.Tuple` with no subscript

+ # This code-path used in Python < 3.9

+ return origin_typename

+

+ return f'{origin_typename}[{",".join(args)}]'

+ else:

+ # Bare type, such as `typing.Tuple` with no subscript

+ # This code-path used in Python 3.9+

+ return origin_typename

+

+ # Common case: this is a regular module name like 'foo.bar.baz'

+ return add_global(typename, o)

+

+ def _format_args(args: Tuple[Argument, ...], kwargs: Dict[str, Argument]) -> str:

+

+ def _get_repr(arg):

+ # Handle NamedTuples (if it has `_fields`) via add_global.

+ if isinstance(arg, tuple) and hasattr(arg, "_fields"):

+ qualified_name = _get_qualified_name(type(arg))

+ global_name = add_global(qualified_name, type(arg))

+ return f"{global_name}{repr(tuple(arg))}"

+ return repr(arg)

+

+ args_s = ", ".join(_get_repr(a) for a in args)

+ kwargs_s = ", ".join(f"{k} = {_get_repr(v)}" for k, v in kwargs.items())

+ if args_s and kwargs_s:

+ return f"{args_s}, {kwargs_s}"

+ return args_s or kwargs_s

+

+ # Run through reverse nodes and record the first instance of a use

+ # of a given node. This represents the *last* use of the node in the

+ # execution order of the program, which we will use to free unused

+ # values

+ node_to_last_use: Dict[Node, Node] = {}

+ user_to_last_uses: Dict[Node, List[Node]] = {}

+

+ def register_last_uses(n: Node, user: Node):

+ if n not in node_to_last_use:

+ node_to_last_use[n] = user

+ user_to_last_uses.setdefault(user, []).append(n)

+

+ for node in reversed(nodes):

+ map_arg(node.args, lambda n: register_last_uses(n, node))

+ map_arg(node.kwargs, lambda n: register_last_uses(n, node))

+

+ delete_free_var_from_last_use(user_to_last_uses)

+

+ # NOTE: we add a variable to distinguish body and ckpt_func

+ def delete_unused_values(user: Node, body, to_keep=[]):

+ """

+ Delete values after their last use. This ensures that values that are

+ not used in the remainder of the code are freed and the memory usage

+ of the code is optimal.

+ """

+ if user.op == "placeholder":

+ return

+ if user.op == "output":

+ body.append("\n")

+ return

+ nodes_to_delete = user_to_last_uses.get(user, [])

+ nodes_to_delete = [i for i in nodes_to_delete if i.name not in to_keep]

+ if len(nodes_to_delete):

+ to_delete_str = " = ".join([repr(n) for n in nodes_to_delete] + ["None"])

+ body.append(f"; {to_delete_str}\n")

+ else:

+ body.append("\n")

+

+ # NOTE: we add a variable to distinguish body and ckpt_func

+ def emit_node(node: Node, body):

+ maybe_type_annotation = ("" if node.type is None else f" : {type_repr(node.type)}")

+ if node.op == "placeholder":

+ assert isinstance(node.target, str)

+ maybe_default_arg = ("" if not node.args else f" = {repr(node.args[0])}")

+ free_vars.append(f"{node.target}{maybe_type_annotation}{maybe_default_arg}")

+ raw_name = node.target.replace("*", "")

+ if raw_name != repr(node):

+ body.append(f"{repr(node)} = {raw_name}\n")

+ return

+ elif node.op == "call_method":

+ assert isinstance(node.target, str)

+ body.append(

+ f"{repr(node)}{maybe_type_annotation} = {_format_target(repr(node.args[0]), node.target)}"

+ f"({_format_args(node.args[1:], node.kwargs)})")

+ return

+ elif node.op == "call_function":

+ assert callable(node.target)

+ # pretty print operators

+ if (node.target.__module__ == "_operator" and node.target.__name__ in magic_methods):

+ assert isinstance(node.args, tuple)

+ body.append(f"{repr(node)}{maybe_type_annotation} = "

+ f"{magic_methods[node.target.__name__].format(*(repr(a) for a in node.args))}")

+ return

+

+ # pretty print inplace operators; required for jit.script to work properly

+ # not currently supported in normal FX graphs, but generated by torchdynamo

+ if (node.target.__module__ == "_operator" and node.target.__name__ in inplace_methods):

+ body.append(f"{inplace_methods[node.target.__name__].format(*(repr(a) for a in node.args))}; "

+ f"{repr(node)}{maybe_type_annotation} = {repr(node.args[0])}")

+ return

+

+ qualified_name = _get_qualified_name(node.target)

+ global_name = add_global(qualified_name, node.target)

+ # special case for getattr: node.args could be 2-argument or 3-argument

+ # 2-argument: attribute access; 3-argument: fall through to attrib function call with default value

+ if (global_name == "getattr" and isinstance(node.args, tuple) and isinstance(node.args[1], str)

+ and node.args[1].isidentifier() and len(node.args) == 2):

+ body.append(

+ f"{repr(node)}{maybe_type_annotation} = {_format_target(repr(node.args[0]), node.args[1])}")

+ return

+ body.append(

+ f"{repr(node)}{maybe_type_annotation} = {global_name}({_format_args(node.args, node.kwargs)})")

+ if node.meta.get("is_wrapped", False):

+ wrapped_fns.setdefault(global_name)

+ return

+ elif node.op == "call_module":

+ assert isinstance(node.target, str)

+ body.append(f"{repr(node)}{maybe_type_annotation} = "

+ f"{_format_target(root_module, node.target)}({_format_args(node.args, node.kwargs)})")

+ return

+ elif node.op == "get_attr":

+ assert isinstance(node.target, str)

+ body.append(f"{repr(node)}{maybe_type_annotation} = {_format_target(root_module, node.target)}")

+ return

+ elif node.op == "output":

+ if node.type is not None:

+ maybe_return_annotation[0] = f" -> {type_repr(node.type)}"

+ body.append(self.generate_output(node.args[0]))

+ return

+ raise NotImplementedError(f"node: {node.op} {node.target}")

+

+ # Modified for activation checkpointing

+ ckpt_func = []

+

+ # if any node has a list of labels for activation_checkpoint, we

+ # will use nested type of activation checkpoint codegen

+ emit_code_with_chunk(

+ body,

+ nodes,

+ emit_node,

+ delete_unused_values,

+ self.search_chunk,

+ self.chunk_infos,

+ )

+

+ if len(body) == 0:

+ # If the Graph has no non-placeholder nodes, no lines for the body

+ # have been emitted. To continue to have valid Python code, emit a

+ # single pass statement

+ body.append("pass\n")

+

+ if len(wrapped_fns) > 0:

+ wrap_name = add_global("wrap", torch.fx.wrap)

+ wrap_stmts = "\n".join([f'{wrap_name}("{name}")' for name in wrapped_fns])

+ else:

+ wrap_stmts = ""

+

+ if self._body_transformer:

+ body = self._body_transformer(body)

+

+ for name, value in self.additional_globals():

+ add_global(name, value)

+

+ # as we need colossalai.utils.checkpoint, we need to import colossalai

+ # in forward function

+ prologue = self.gen_fn_def(free_vars, maybe_return_annotation[0])

+ prologue = "".join(ckpt_func) + prologue

+ prologue = prologue

+

+ code = "".join(body)

+ code = "\n".join(" " + line for line in code.split("\n"))

+ fn_code = f"""

+{wrap_stmts}

+

+{prologue}

+{code}"""

+ # print(fn_code)

+ return PythonCode(fn_code, globals_)

diff --git a/colossalai/autochunk/estimate_memory.py b/colossalai/autochunk/estimate_memory.py

new file mode 100644

index 000000000..a03a5413b

--- /dev/null

+++ b/colossalai/autochunk/estimate_memory.py

@@ -0,0 +1,323 @@

+import copy

+from typing import Any, Callable, Dict, Iterable, List, Tuple

+

+import torch

+from torch.fx.node import Node, map_arg

+

+from colossalai.fx.profiler import activation_size, parameter_size

+

+from .utils import delete_free_var_from_last_use, find_idx_by_name, get_node_shape, is_non_memory_node

+

+

+class EstimateMemory(object):

+ """

+ Estimate memory with chunk

+ """

+

+ def __init__(self) -> None:

+ pass

+

+ def _get_meta_node_size(self, x):

+ x = x.meta["tensor_meta"]

+ x = x.numel * torch.tensor([], dtype=x.dtype).element_size()

+ return x

+

+ def _get_output_node(self, n):

+ out_size = activation_size(n.meta["fwd_out"])

+ out_node = [n.name] if out_size > 0 else []

+ return out_size, out_node

+

+ def _get_output_node_size(self, n):

+ return self._get_output_node(n)[0]

+

+ def _add_active_node(self, n, active_list):

+ new_active = self._get_output_node(n)[1]

+ if n.op == "placeholder" and get_node_shape(n) is not None:

+ new_active.append(n.name)

+ for i in new_active:

+ if i not in active_list and get_node_shape(n) is not None:

+ active_list.append(i)

+

+ def _get_delete_node(self, user, user_to_last_uses, to_keep=None):

+ delete_size = 0

+ delete_node = []

+ if user.op not in ("output",):

+ nodes_to_delete = user_to_last_uses.get(user, [])

+ if len(user.users) == 0:

+ nodes_to_delete.append(user)

+ if to_keep is not None:

+ keep_list = []

+ for n in nodes_to_delete:

+ if n.name in to_keep:

+ keep_list.append(n)

+ for n in keep_list:

+ if n in nodes_to_delete:

+ nodes_to_delete.remove(n)

+ if len(nodes_to_delete):

+ out_node = [self._get_output_node(i) for i in nodes_to_delete]

+ delete_size = sum([i[0] for i in out_node])

+ for i in range(len(out_node)):

+ if out_node[i][0] > 0:

+ delete_node.append(out_node[i][1][0])

+ elif nodes_to_delete[i].op == "placeholder":

+ delete_node.append(nodes_to_delete[i].name)

+ # elif any(j in nodes_to_delete[i].name for j in ['transpose', 'permute', 'view']):

+ # delete_node.append(nodes_to_delete[i].name)

+ return delete_size, delete_node

+

+ def _get_delete_node_size(self, user, user_to_last_uses, to_keep):

+ return self._get_delete_node(user, user_to_last_uses, to_keep)[0]

+

+ def _remove_deactive_node(self, user, user_to_last_uses, active_list):

+ delete_node = self._get_delete_node(user, user_to_last_uses)[1]

+ for i in delete_node:

+ if i in active_list:

+ active_list.remove(i)

+

+ def _get_chunk_inputs_size(self, chunk_inputs, chunk_inputs_non_chunk, node_list, chunk_end_idx):

+ nodes_to_delete = []

+ for chunk_input in chunk_inputs + chunk_inputs_non_chunk:

+ chunk_input_users = chunk_input.users.keys()

+ chunk_input_users_idx = [find_idx_by_name(i.name, node_list) for i in chunk_input_users]

+ if all(i <= chunk_end_idx for i in chunk_input_users_idx):

+ if chunk_input not in nodes_to_delete:

+ nodes_to_delete.append(chunk_input)

+ out_node = [self._get_output_node(i) for i in nodes_to_delete]

+ delete_size = sum([i[0] for i in out_node])

+ return delete_size

+

+ def _get_last_usr(self, nodes):

+ node_to_last_use: Dict[Node, Node] = {}

+ user_to_last_uses: Dict[Node, List[Node]] = {}

+

+ def register_last_uses(n: Node, user: Node):

+ if n not in node_to_last_use:

+ node_to_last_use[n] = user

+ user_to_last_uses.setdefault(user, []).append(n)

+

+ for node in reversed(nodes):

+ map_arg(node.args, lambda n: register_last_uses(n, node))

+ map_arg(node.kwargs, lambda n: register_last_uses(n, node))

+ return user_to_last_uses

+

+ def _get_contiguous_memory(self, node, not_contiguous_list, delete=False):

+ mem = 0

+ not_contiguous_ops = ["permute"]

+ inherit_contiguous_ops = ["transpose", "view"]

+

+ if node.op == "call_function" and any(n in node.name for n in ["matmul", "reshape"]):

+ for n in node.args:

+ if n in not_contiguous_list:

+ # matmul won't change origin tensor, but create a tmp copy

+ mem += self._get_output_node_size(n)

+ elif node.op == "call_module":

+ for n in node.args:

+ if n in not_contiguous_list:

+ # module will just make origin tensor to contiguous

+ if delete:

+ not_contiguous_list.remove(n)

+ elif node.op == "call_method" and any(i in node.name for i in not_contiguous_ops):

+ if node not in not_contiguous_list:

+ not_contiguous_list.append(node)

+ return mem

+

+ def _get_chunk_ratio(self, node, chunk_node_dim, chunk_size):

+ if node not in chunk_node_dim:

+ return 1.0

+ node_shape = get_node_shape(node)

+ chunk_dim = chunk_node_dim[node]["chunk_dim"]

+ if chunk_dim is None:

+ return 1.0

+ else:

+ return float(chunk_size) / node_shape[chunk_dim]

+

+ def _get_chunk_delete_node_size(self, user, user_to_last_uses, chunk_ratio, chunk_inputs_names):

+ # if any(j in user.name for j in ['transpose', 'permute', 'view']):

+ # return 0

+ if user.op in ("placeholder", "output"):

+ return 0

+ nodes_to_delete = user_to_last_uses.get(user, [])

+ if len(user.users) == 0:

+ nodes_to_delete.append(user)

+ delete_size = 0

+ for n in nodes_to_delete:

+ if n.name in chunk_inputs_names:

+ continue

+ delete_size += self._get_output_node_size(n) * chunk_ratio

+ return delete_size

+

+ def _print_mem_log(self, log, nodes, title=None):

+ if title:

+ print(title)

+ for idx, (l, n) in enumerate(zip(log, nodes)):

+ print("%s:%.2f \t" % (n.name, l), end="")

+ if (idx + 1) % 3 == 0:

+ print("")

+ print("\n")

+

+ def _print_compute_op_mem_log(self, log, nodes, title=None):

+ if title:

+ print(title)

+ for idx, (l, n) in enumerate(zip(log, nodes)):

+ if n.op in ["placeholder", "get_attr", "output"]:

+ continue

+ if any(i in n.name for i in ["getitem", "getattr"]):

+ continue

+ print("%s:%.2f \t" % (n.name, l), end="")

+ if (idx + 1) % 3 == 0:

+ print("")

+ print("\n")

+

+ def estimate_chunk_inference_mem(

+ self,

+ node_list: List,

+ chunk_infos=None,

+ print_mem=False,

+ ):

+ """

+ Estimate inference memory with chunk

+

+ Args:

+ node_list (List): _description_

+ chunk_infos (Dict): Chunk information. Defaults to None.

+ print_mem (bool): Wether to print peak memory of every node. Defaults to False.

+

+ Returns:

+ act_memory_peak_log (List): peak memory of every node

+ act_memory_after_node_log (List): memory after excuting every node

+ active_node_list_log (List): active nodes of every node. active nodes refer to

+ nodes generated but not deleted.

+ """

+ act_memory = 0.0

+ act_memory_peak_log = []

+ act_memory_after_node_log = []

+ active_node_list = []

+ active_node_list_log = []

+ not_contiguous_list = []

+ user_to_last_uses = self._get_last_usr(node_list)

+ user_to_last_uses_no_free_var = self._get_last_usr(node_list)

+ delete_free_var_from_last_use(user_to_last_uses_no_free_var)

+

+ use_chunk = True if chunk_infos is not None else False

+ chunk_within = False

+ chunk_region_idx = None

+ chunk_ratio = 1 # use it to estimate chunk mem

+ chunk_inputs_names = []

+

+ if use_chunk:

+ chunk_regions = [i["region"] for i in chunk_infos]

+ chunk_starts = [i[0] for i in chunk_regions]

+ chunk_ends = [i[1] for i in chunk_regions]

+ chunk_inputs = [i["inputs"] for i in chunk_infos]

+ chunk_inputs_non_chunk = [i["inputs_non_chunk"] for i in chunk_infos]

+ chunk_inputs_names = [j.name for i in chunk_inputs for j in i

+ ] + [j.name for i in chunk_inputs_non_chunk for j in i]

+ chunk_outputs = [i["outputs"][0] for i in chunk_infos]

+ chunk_node_dim = [i["node_chunk_dim"] for i in chunk_infos]

+ chunk_sizes = [i["chunk_size"] if "chunk_size" in i else 1 for i in chunk_infos]

+

+ for idx, node in enumerate(node_list):

+ # if node in chunk start nodes, change chunk ratio and add chunk_tensor

+ if use_chunk and idx in chunk_starts:

+ chunk_within = True

+ chunk_region_idx = chunk_starts.index(idx)

+ act_memory += self._get_output_node_size(chunk_outputs[chunk_region_idx]) / (1024**2)

+

+ # determine chunk ratio for current node

+ if chunk_within:

+ chunk_ratio = self._get_chunk_ratio(

+ node,

+ chunk_node_dim[chunk_region_idx],

+ chunk_sizes[chunk_region_idx],

+ )

+

+ # if node is placeholder, just add the size of the node

+ if node.op == "placeholder":

+ act_memory += self._get_meta_node_size(node) * chunk_ratio / (1024**2)

+ act_memory_peak_log.append(act_memory)

+ # skip output

+ elif node.op == "output":

+ continue

+ # no change for non compute node

+ elif is_non_memory_node(node):

+ act_memory_peak_log.append(act_memory)

+ # node is a compute op

+ # calculate tmp, output node and delete node memory

+ else:

+ # forward memory

+ # TODO: contiguous_memory still not accurate for matmul, view, reshape and transpose

+ act_memory += (self._get_contiguous_memory(node, not_contiguous_list) * chunk_ratio / (1024**2))

+ act_memory += (self._get_output_node_size(node) * chunk_ratio / (1024**2))

+ # record max act memory

+ act_memory_peak_log.append(act_memory)

+ # delete useless memory

+ act_memory -= (self._get_contiguous_memory(node, not_contiguous_list, delete=True) * chunk_ratio /

+ (1024**2))

+ # delete unused vars not in chunk_input_list

+ # we can't delete input nodes until chunk ends

+ if chunk_within:

+ act_memory -= self._get_chunk_delete_node_size(

+ node,

+ user_to_last_uses_no_free_var,

+ chunk_ratio,

+ chunk_inputs_names,

+ ) / (1024**2)

+ else:

+ act_memory -= self._get_delete_node_size(node, user_to_last_uses_no_free_var,

+ chunk_inputs_names) / (1024**2)

+

+ # log active node, only effective without chunk

+ self._add_active_node(node, active_node_list)

+ self._remove_deactive_node(node, user_to_last_uses, active_node_list)

+

+ # if node in chunk end nodes, restore chunk settings

+ if use_chunk and idx in chunk_ends:

+ act_memory -= (self._get_output_node_size(node) * chunk_ratio / (1024**2))

+ act_memory -= self._get_chunk_inputs_size(

+ chunk_inputs[chunk_region_idx],

+ chunk_inputs_non_chunk[chunk_region_idx],

+ node_list,

+ chunk_regions[chunk_region_idx][1],

+ ) / (1024**2)

+ chunk_within = False

+ chunk_ratio = 1

+ chunk_region_idx = None

+

+ act_memory_after_node_log.append(act_memory)

+ active_node_list_log.append(copy.deepcopy(active_node_list))

+

+ if print_mem:

+ print("with chunk" if use_chunk else "without chunk")

+ # self._print_mem_log(act_memory_peak_log, node_list, "peak")

+ # self._print_mem_log(act_memory_after_node_log, node_list, "after")

+ self._print_compute_op_mem_log(act_memory_peak_log, node_list, "peak")

+ # self._print_compute_op_mem_log(

+ # act_memory_after_node_log, node_list, "after"

+ # )

+

+ # param_memory = parameter_size(gm)

+ # all_memory = act_memory + param_memory

+ return act_memory_peak_log, act_memory_after_node_log, active_node_list_log

+

+ def get_active_nodes(self, node_list: List) -> List:

+ """

+ Get active nodes for every node

+

+ Args:

+ node_list (List): _description_

+

+ Returns:

+ active_node_list_log (List): active nodes of every node. active nodes refer to

+ nodes generated but not deleted.

+ """

+ active_node_list = []

+ active_node_list_log = []

+ user_to_last_uses = self._get_last_usr(node_list)

+ user_to_last_uses_no_free_var = self._get_last_usr(node_list)

+ delete_free_var_from_last_use(user_to_last_uses_no_free_var)

+ for _, node in enumerate(node_list):

+ # log active node, only effective without chunk

+ self._add_active_node(node, active_node_list)

+ self._remove_deactive_node(node, user_to_last_uses, active_node_list)

+ active_node_list_log.append(copy.deepcopy(active_node_list))

+ return active_node_list_log

diff --git a/colossalai/autochunk/reorder_graph.py b/colossalai/autochunk/reorder_graph.py

new file mode 100644

index 000000000..0343e52ee

--- /dev/null

+++ b/colossalai/autochunk/reorder_graph.py

@@ -0,0 +1,117 @@

+from .trace_indice import TraceIndice

+from .utils import find_idx_by_name

+

+

+class ReorderGraph(object):

+ """

+ Reorder node list and indice trace list

+ """

+

+ def __init__(self, trace_indice: TraceIndice) -> None:

+ self.trace_indice = trace_indice

+ self.all_reorder_map = {

+ i: i for i in range(len(self.trace_indice.indice_trace_list))

+ }

+

+ def _get_reorder_map(self, chunk_info):

+ reorder_map = {i: i for i in range(len(self.trace_indice.node_list))}

+

+ chunk_region_start = chunk_info["region"][0]

+ chunk_region_end = chunk_info["region"][1]

+ chunk_prepose_nodes = chunk_info["args"]["prepose_nodes"]

+ chunk_prepose_nodes_idx = [

+ find_idx_by_name(i.name, self.trace_indice.node_list)

+ for i in chunk_prepose_nodes

+ ]

+ # put prepose nodes ahead

+ for idx, n in enumerate(chunk_prepose_nodes):

+ n_idx = chunk_prepose_nodes_idx[idx]

+ reorder_map[n_idx] = chunk_region_start + idx

+ # put other nodes after prepose nodes

+ for n in self.trace_indice.node_list[chunk_region_start : chunk_region_end + 1]:

+ if n in chunk_prepose_nodes:

+ continue

+ n_idx = find_idx_by_name(n.name, self.trace_indice.node_list)

+ pos = sum([n_idx < i for i in chunk_prepose_nodes_idx])

+ reorder_map[n_idx] = n_idx + pos

+

+ return reorder_map

+

+ def _reorder_chunk_info(self, chunk_info, reorder_map):

+ # update chunk info

+ chunk_info["region"] = (

+ chunk_info["region"][0] + len(chunk_info["args"]["prepose_nodes"]),

+ chunk_info["region"][1],

+ )

+ new_inputs_dim = []

+ for idx, input_dim in enumerate(chunk_info["inputs_dim"]):

+ new_input_dim = {}

+ for k, v in input_dim.items():

+ new_input_dim[reorder_map[k]] = v

+ new_inputs_dim.append(new_input_dim)

+ chunk_info["inputs_dim"] = new_inputs_dim

+ return chunk_info

+

+ def _update_all_reorder_map(self, reorder_map):

+ for origin_idx, map_idx in self.all_reorder_map.items():

+ self.all_reorder_map[origin_idx] = reorder_map[map_idx]

+

+ def _reorder_self_node_list(self, reorder_map):

+ new_node_list = [None for _ in range(len(self.trace_indice.node_list))]

+ for old_idx, new_idx in reorder_map.items():

+ new_node_list[new_idx] = self.trace_indice.node_list[old_idx]

+ self.trace_indice.node_list = new_node_list

+

+ def _reorder_idx_trace(self, reorder_map):

+ # reorder list

+ new_idx_trace_list = [

+ None for _ in range(len(self.trace_indice.indice_trace_list))

+ ]

+ for old_idx, new_idx in reorder_map.items():

+ new_idx_trace_list[new_idx] = self.trace_indice.indice_trace_list[old_idx]

+ self.trace_indice.indice_trace_list = new_idx_trace_list

+ # update compute

+ for idx_trace in self.trace_indice.indice_trace_list:

+ compute = idx_trace["compute"]

+ for dim_compute in compute:

+ for idx, i in enumerate(dim_compute):

+ dim_compute[idx] = reorder_map[i]

+ # update source

+ for idx_trace in self.trace_indice.indice_trace_list:

+ source = idx_trace["source"]

+ for dim_idx, dim_source in enumerate(source):

+ new_dim_source = {}

+ for k, v in dim_source.items():

+ new_dim_source[reorder_map[k]] = v

+ source[dim_idx] = new_dim_source

+

+ def reorder_all(self, chunk_info):

+ if chunk_info is None:

+ return chunk_info

+ if len(chunk_info["args"]["prepose_nodes"]) == 0:

+ return chunk_info

+ reorder_map = self._get_reorder_map(chunk_info)

+ self._update_all_reorder_map(reorder_map)

+ self._reorder_idx_trace(reorder_map)

+ self._reorder_self_node_list(reorder_map)

+ chunk_info = self._reorder_chunk_info(chunk_info, reorder_map)

+ return chunk_info

+

+ def reorder_node_list(self, node_list):

+ new_node_list = [None for _ in range(len(node_list))]

+ for old_idx, new_idx in self.all_reorder_map.items():

+ new_node_list[new_idx] = node_list[old_idx]

+ return new_node_list

+

+ def tmp_reorder(self, node_list, chunk_info):

+ if len(chunk_info["args"]["prepose_nodes"]) == 0:

+ return node_list, chunk_info

+ reorder_map = self._get_reorder_map(chunk_info)

+

+ # new tmp node list

+ new_node_list = [None for _ in range(len(node_list))]

+ for old_idx, new_idx in reorder_map.items():

+ new_node_list[new_idx] = node_list[old_idx]

+

+ chunk_info = self._reorder_chunk_info(chunk_info, reorder_map)

+ return new_node_list, chunk_info

diff --git a/colossalai/autochunk/search_chunk.py b/colossalai/autochunk/search_chunk.py

new file mode 100644

index 000000000..a86196712

--- /dev/null

+++ b/colossalai/autochunk/search_chunk.py

@@ -0,0 +1,319 @@

+import copy

+from typing import Dict, List, Tuple

+

+from torch.fx.node import Node

+

+from .estimate_memory import EstimateMemory

+from .reorder_graph import ReorderGraph

+from .select_chunk import SelectChunk

+from .trace_flow import TraceFlow

+from .trace_indice import TraceIndice

+from .utils import get_logger, get_node_shape, is_non_compute_node, is_non_compute_node_except_placeholder

+

+

+class SearchChunk(object):

+ """

+ This is the core class for AutoChunk.

+

+ It defines the framework of the strategy of AutoChunk.

+ Chunks will be selected one by one utill search stops.

+

+ The chunk search is as follows:

+ 1. find the peak memory node

+ 2. find the max chunk region according to the peak memory node

+ 3. find all possible chunk regions in the max chunk region

+ 4. find the best chunk region for current status

+ 5. goto 1

+

+ Attributes:

+ gm: graph model

+ print_mem (bool): print estimated memory

+ trace_index: trace the flow of every dim of every node to find all free dims

+ trace_flow: determine the region chunk strategy

+ reorder_graph: reorder nodes to improve chunk efficiency

+ estimate_memory: estimate memory with chunk

+ select_chunk: select the best chunk region

+

+ Args:

+ gm: graph model

+ max_memory (int): max memory in MB

+ print_mem (bool): print estimated memory

+ """

+

+ def __init__(self, gm, max_memory=None, print_mem=False, print_progress=False) -> None:

+ self.print_mem = print_mem

+ self.print_progress = print_progress

+ self.trace_indice = TraceIndice(list(gm.graph.nodes))

+ self.estimate_memory = EstimateMemory()

+ self._init_trace()

+ self.trace_flow = TraceFlow(self.trace_indice)

+ self.reorder_graph = ReorderGraph(self.trace_indice)

+ self.select_chunk = SelectChunk(

+ self.trace_indice,

+ self.estimate_memory,

+ self.reorder_graph,

+ max_memory=max_memory,

+ )

+

+ def _init_trace(self) -> None:

+ """

+ find the max trace range for every node

+ reduce the computation complexity of trace_indice

+ """

+ # find all max ranges

+ active_nodes = self.estimate_memory.get_active_nodes(self.trace_indice.node_list)

+ cur_node_idx = len(self._get_free_var_idx())

+ max_chunk_region_list = []

+ while True:

+ max_chunk_region = self._search_max_chunk_region(active_nodes, cur_node_idx)

+ cur_node_idx = max_chunk_region[1]

+ if cur_node_idx == len(active_nodes) - 1:

+ break

+ max_chunk_region_list.append(max_chunk_region)

+

+ # nothing to limit for the first range

+ max_chunk_region_list = max_chunk_region_list[1:]

+ max_chunk_region_list[0] = (0, max_chunk_region_list[0][1])

+

+ # set trace range and do the trace

+ if self.print_progress:

+ get_logger().info("AutoChunk start tracing indice")

+ self.trace_indice.set_trace_range(max_chunk_region_list, active_nodes)

+ self.trace_indice.trace_indice()

+

+ def _find_peak_node(self, mem_peak: List) -> int:

+ max_value = max(mem_peak)

+ max_idx = mem_peak.index(max_value)

+ return max_idx

+

+ def _get_free_var_idx(self) -> List:

+ """

+ Get free var index

+

+ Returns:

+ free_var_idx (List): all indexs of free vars

+ """

+ free_var_idx = []

+ for idx, n in enumerate(self.trace_indice.node_list):

+ if n.op == "placeholder" and get_node_shape(n) is not None:

+ free_var_idx.append(idx)

+ return free_var_idx

+

+ def _search_max_chunk_region(self, active_node: List, peak_node_idx: int, chunk_regions: List = None) -> Tuple:

+ """

+ Search max chunk region according to peak memory node

+

+ Chunk region starts extending from the peak node, stops where free var num is min

+

+ Args:

+ active_node (List): active node status for every node

+ peak_node_idx (int): peak memory node idx

+ chunk_regions (List): chunk region infos

+

+ Returns:

+ chunk_region_start (int)

+ chunk_region_end (int)

+ """

+ free_vars = self._get_free_var_idx()

+ free_var_num = len(free_vars)

+ active_node_num = [len(i) for i in active_node]

+ min_active_node_num = min(active_node_num[free_var_num:])

+ threshold = max(free_var_num, min_active_node_num)

+

+ # from peak_node to free_var

+ inside_flag = False

+ chunk_region_start = free_var_num

+ for i in range(peak_node_idx, -1, -1):

+ if active_node_num[i] <= threshold:

+ inside_flag = True

+ if inside_flag and active_node_num[i] > threshold:

+ chunk_region_start = i + 1

+ break

+

+ # from peak_node to len-2

+ inside_flag = False

+ chunk_region_end = len(active_node) - 1

+ for i in range(peak_node_idx, len(active_node)):

+ if active_node_num[i] <= threshold:

+ inside_flag = True

+ if inside_flag and active_node_num[i] > threshold:

+ chunk_region_end = i

+ break

+

+ # avoid chunk regions overlap

+ if chunk_regions is not None:

+ for i in chunk_regions:

+ region = i["region"]

+ if chunk_region_start >= region[0] and chunk_region_end <= region[1]:

+ return None

+ elif (region[0] <= chunk_region_start <= region[1] and chunk_region_end > region[1]):

+ chunk_region_start = region[1] + 1

+ elif (region[0] <= chunk_region_end <= region[1] and chunk_region_start < region[0]):

+ chunk_region_end = region[0] - 1

+ return chunk_region_start, chunk_region_end

+

+ def _find_chunk_info(self, input_trace, output_trace, start_idx, end_idx) -> List:

+ """

+ Find chunk info for a region.

+

+ We are given the region start and region end, and need to find out all chunk info for it.

+ We first loop every dim of start node and end node, to see if we can find dim pair,

+ which is linked in a flow and not computed.

+ If found, we then search flow in the whole region to find out all chunk infos.

+

+ Args:

+ input_trace (List): node's input trace in region

+ output_trace (List): node's output trace in region

+ start_idx (int): region start node index

+ end_idx (int): region end node index

+

+ Returns:

+ chunk_infos: possible regions found

+ """

+ start_traces = input_trace[start_idx]

+ end_trace = output_trace[end_idx]

+ end_node = self.trace_indice.node_list[end_idx]

+ chunk_infos = []

+ for end_dim, _ in enumerate(end_trace["indice"]):

+ if len(start_traces) > 1:

+ continue

+ for start_node, start_trace in start_traces.items():

+ for start_dim, _ in enumerate(start_trace["indice"]):

+ # dim size cannot be 1

+ if (get_node_shape(end_node)[end_dim] == 1 or get_node_shape(start_node)[start_dim] == 1):

+ continue

+ # must have users

+ if len(end_node.users) == 0:

+ continue

+ # check index source align

+ if not self.trace_flow.check_index_source(start_dim, start_node, start_idx, end_dim, end_node):

+ continue

+ # check index copmute

+ if not self.trace_flow.check_index_compute(start_idx, end_dim, end_node, end_idx):

+ continue

+ # flow search

+ chunk_info = self.trace_flow.flow_search(start_idx, start_dim, end_idx, end_dim)