mirror of https://github.com/hpcaitech/ColossalAI

[Inference] First PR for rebuild colossal-infer (#5143)

* add engine and scheduler * add dirs --------- Co-authored-by: CjhHa1 <cjh18671720497outlook.com>pull/5258/head

parent

c174c4fc5f

commit

4cf4682e70

|

|

@ -1,229 +0,0 @@

|

|||

# 🚀 Colossal-Inference

|

||||

|

||||

|

||||

## Table of Contents

|

||||

|

||||

- [💡 Introduction](#introduction)

|

||||

- [🔗 Design](#design)

|

||||

- [🔨 Usage](#usage)

|

||||

- [Quick start](#quick-start)

|

||||

- [Example](#example)

|

||||

- [📊 Performance](#performance)

|

||||

|

||||

## Introduction

|

||||

|

||||

`Colossal Inference` is a module that contains colossal-ai designed inference framework, featuring high performance, steady and easy usability. `Colossal Inference` incorporated the advantages of the latest open-source inference systems, including LightLLM, TGI, vLLM, FasterTransformer and flash attention. while combining the design of Colossal AI, especially Shardformer, to reduce the learning curve for users.

|

||||

|

||||

## Design

|

||||

|

||||

Colossal Inference is composed of three main components:

|

||||

|

||||

1. High performance kernels and ops: which are inspired from existing libraries and modified correspondingly.

|

||||

2. Efficient memory management mechanism:which includes the key-value cache manager, allowing for zero memory waste during inference.

|

||||

1. `cache manager`: serves as a memory manager to help manage the key-value cache, it integrates functions such as memory allocation, indexing and release.

|

||||

2. `batch_infer_info`: holds all essential elements of a batch inference, which is updated every batch.

|

||||

3. High-level inference engine combined with `Shardformer`: it allows our inference framework to easily invoke and utilize various parallel methods.

|

||||

1. `HybridEngine`: it is a high level interface that integrates with shardformer, especially for multi-card (tensor parallel, pipline parallel) inference:

|

||||

2. `modeling.llama.LlamaInferenceForwards`: contains the `forward` methods for llama inference. (in this case : llama)

|

||||

3. `policies.llama.LlamaModelInferPolicy` : contains the policies for `llama` models, which is used to call `shardformer` and segmentate the model forward in tensor parallelism way.

|

||||

|

||||

|

||||

## Architecture of inference:

|

||||

|

||||

In this section we discuss how the colossal inference works and integrates with the `Shardformer` . The details can be found in our codes.

|

||||

|

||||

<img src="https://raw.githubusercontent.com/hpcaitech/public_assets/main/colossalai/img/inference/inference-arch.png" alt="Colossal-Inference" style="zoom: 33%;"/>

|

||||

|

||||

## Roadmap of our implementation

|

||||

|

||||

- [x] Design cache manager and batch infer state

|

||||

- [x] Design TpInference engine to integrates with `Shardformer`

|

||||

- [x] Register corresponding high-performance `kernel` and `ops`

|

||||

- [x] Design policies and forwards (e.g. `Llama` and `Bloom`)

|

||||

- [x] policy

|

||||

- [x] context forward

|

||||

- [x] token forward

|

||||

- [x] support flash-decoding

|

||||

- [x] Support all models

|

||||

- [x] Llama

|

||||

- [x] Llama-2

|

||||

- [x] Bloom

|

||||

- [x] Chatglm2

|

||||

- [x] Quantization

|

||||

- [x] GPTQ

|

||||

- [x] SmoothQuant

|

||||

- [ ] Benchmarking for all models

|

||||

|

||||

## Get started

|

||||

|

||||

### Installation

|

||||

|

||||

```bash

|

||||

pip install -e .

|

||||

```

|

||||

|

||||

### Requirements

|

||||

|

||||

Install dependencies.

|

||||

|

||||

```bash

|

||||

pip install -r requirements/requirements-infer.txt

|

||||

|

||||

# if you want use smoothquant quantization, please install torch-int

|

||||

git clone --recurse-submodules https://github.com/Guangxuan-Xiao/torch-int.git

|

||||

cd torch-int

|

||||

git checkout 65266db1eadba5ca78941b789803929e6e6c6856

|

||||

pip install -r requirements.txt

|

||||

source environment.sh

|

||||

bash build_cutlass.sh

|

||||

python setup.py install

|

||||

```

|

||||

|

||||

### Docker

|

||||

|

||||

You can use docker run to use docker container to set-up environment

|

||||

|

||||

```

|

||||

# env: python==3.8, cuda 11.6, pytorch == 1.13.1 triton==2.0.0.dev20221202, vllm kernels support, flash-attention-2 kernels support

|

||||

docker pull hpcaitech/colossalai-inference:v2

|

||||

docker run -it --gpus all --name ANY_NAME -v $PWD:/workspace -w /workspace hpcaitech/colossalai-inference:v2 /bin/bash

|

||||

|

||||

# enter into docker container

|

||||

cd /path/to/CollossalAI

|

||||

pip install -e .

|

||||

|

||||

```

|

||||

|

||||

## Usage

|

||||

### Quick start

|

||||

|

||||

example files are in

|

||||

|

||||

```bash

|

||||

cd ColossalAI/examples

|

||||

python hybrid_llama.py --path /path/to/model --tp_size 2 --pp_size 2 --batch_size 4 --max_input_size 32 --max_out_len 16 --micro_batch_size 2

|

||||

```

|

||||

|

||||

|

||||

|

||||

### Example

|

||||

```python

|

||||

# import module

|

||||

from colossalai.inference import CaiInferEngine

|

||||

import colossalai

|

||||

from transformers import LlamaForCausalLM, LlamaTokenizer

|

||||

|

||||

#launch distributed environment

|

||||

colossalai.launch_from_torch(config={})

|

||||

|

||||

# load original model and tokenizer

|

||||

model = LlamaForCausalLM.from_pretrained("/path/to/model")

|

||||

tokenizer = LlamaTokenizer.from_pretrained("/path/to/model")

|

||||

|

||||

# generate token ids

|

||||

input = ["Introduce a landmark in London","Introduce a landmark in Singapore"]

|

||||

data = tokenizer(input, return_tensors='pt')

|

||||

|

||||

# set parallel parameters

|

||||

tp_size=2

|

||||

pp_size=2

|

||||

max_output_len=32

|

||||

micro_batch_size=1

|

||||

|

||||

# initial inference engine

|

||||

engine = CaiInferEngine(

|

||||

tp_size=tp_size,

|

||||

pp_size=pp_size,

|

||||

model=model,

|

||||

max_output_len=max_output_len,

|

||||

micro_batch_size=micro_batch_size,

|

||||

)

|

||||

|

||||

# inference

|

||||

output = engine.generate(data)

|

||||

|

||||

# get results

|

||||

if dist.get_rank() == 0:

|

||||

assert len(output[0]) == max_output_len, f"{len(output)}, {max_output_len}"

|

||||

|

||||

```

|

||||

|

||||

## Performance

|

||||

|

||||

### environment:

|

||||

|

||||

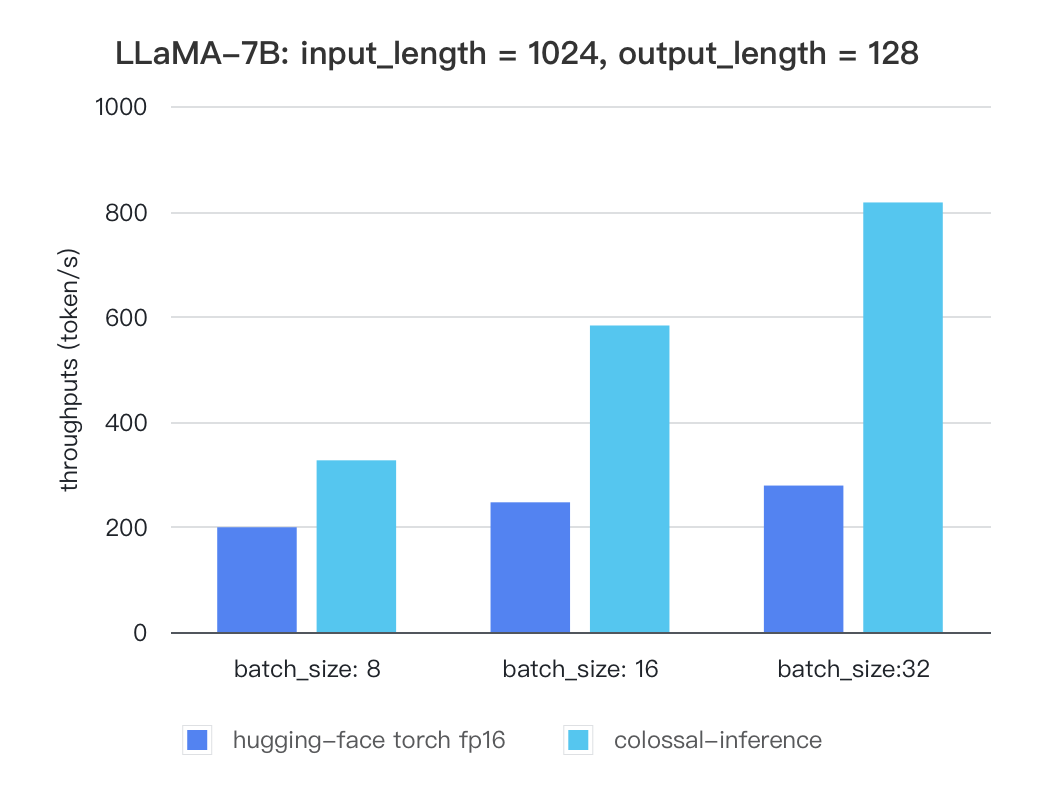

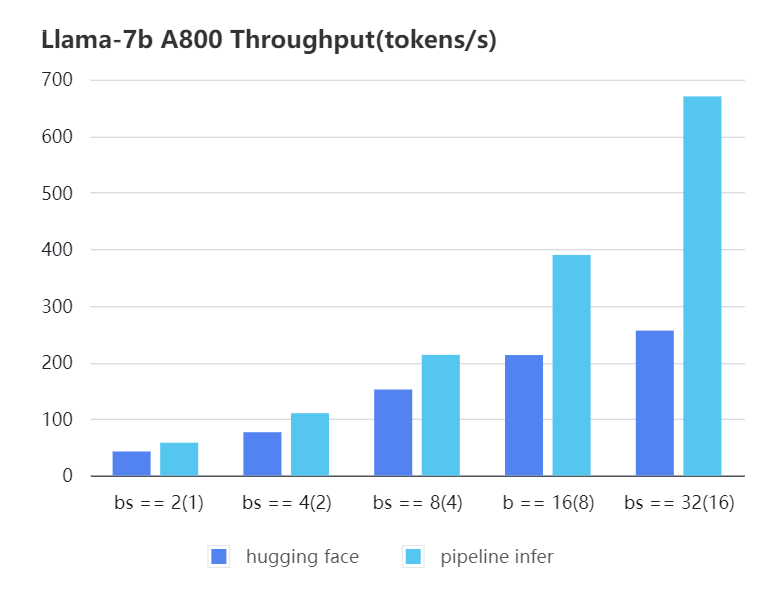

We conducted multiple benchmark tests to evaluate the performance. We compared the inference `latency` and `throughputs` between `colossal-inference` and original `hugging-face torch fp16`.

|

||||

|

||||

For various models, experiments were conducted using multiple batch sizes under the consistent model configuration of `7 billion(7b)` parameters, `1024` input length, and 128 output length. The obtained results are as follows (due to time constraints, the evaluation has currently been performed solely on the `A100` single GPU performance; multi-GPU performance will be addressed in the future):

|

||||

|

||||

### Single GPU Performance:

|

||||

|

||||

Currently the stats below are calculated based on A100 (single GPU), and we calculate token latency based on average values of context-forward and decoding forward process, which means we combine both of processes to calculate token generation times. We are actively developing new features and methods to further optimize the performance of LLM models. Please stay tuned.

|

||||

|

||||

### Tensor Parallelism Inference

|

||||

|

||||

##### Llama

|

||||

|

||||

| batch_size | 8 | 16 | 32 |

|

||||

| :---------------------: | :----: | :----: | :----: |

|

||||

| hugging-face torch fp16 | 199.12 | 246.56 | 278.4 |

|

||||

| colossal-inference | 326.4 | 582.72 | 816.64 |

|

||||

|

||||

|

||||

|

||||

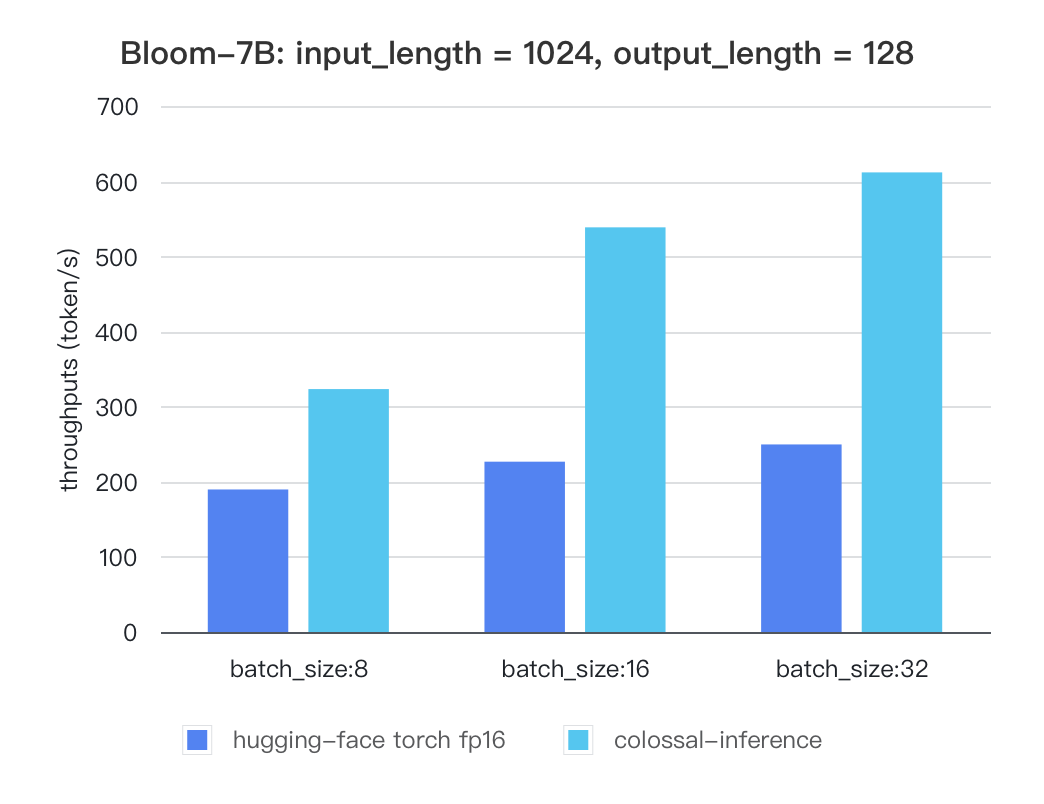

#### Bloom

|

||||

|

||||

| batch_size | 8 | 16 | 32 |

|

||||

| :---------------------: | :----: | :----: | :----: |

|

||||

| hugging-face torch fp16 | 189.68 | 226.66 | 249.61 |

|

||||

| colossal-inference | 323.28 | 538.52 | 611.64 |

|

||||

|

||||

|

||||

|

||||

|

||||

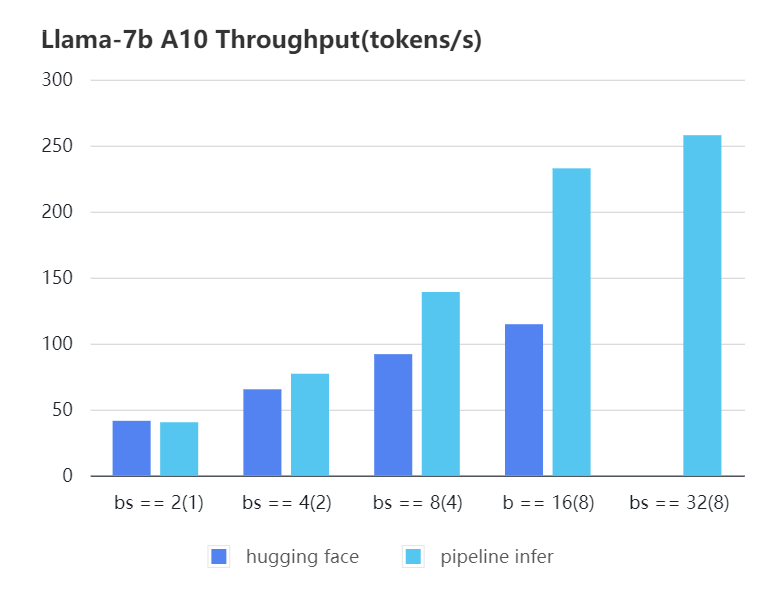

### Pipline Parallelism Inference

|

||||

We conducted multiple benchmark tests to evaluate the performance. We compared the inference `latency` and `throughputs` between `Pipeline Inference` and `hugging face` pipeline. The test environment is 2 * A10, 20G / 2 * A800, 80G. We set input length=1024, output length=128.

|

||||

|

||||

|

||||

#### A10 7b, fp16

|

||||

|

||||

| batch_size(micro_batch size)| 2(1) | 4(2) | 8(4) | 16(8) | 32(8) | 32(16)|

|

||||

| :-------------------------: | :---: | :---:| :---: | :---: | :---: | :---: |

|

||||

| Pipeline Inference | 40.35 | 77.10| 139.03| 232.70| 257.81| OOM |

|

||||

| Hugging Face | 41.43 | 65.30| 91.93 | 114.62| OOM | OOM |

|

||||

|

||||

|

||||

|

||||

|

||||

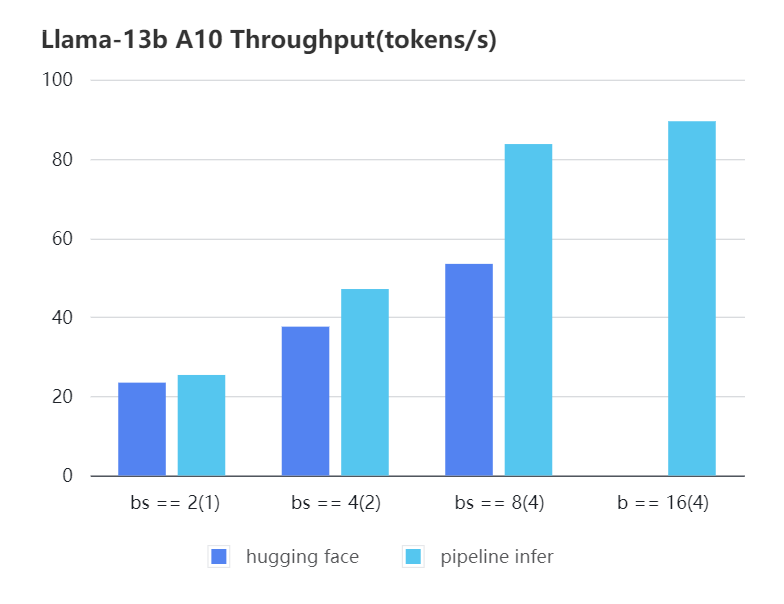

#### A10 13b, fp16

|

||||

|

||||

| batch_size(micro_batch size)| 2(1) | 4(2) | 8(4) | 16(4) |

|

||||

| :---: | :---: | :---: | :---: | :---: |

|

||||

| Pipeline Inference | 25.39 | 47.09 | 83.7 | 89.46 |

|

||||

| Hugging Face | 23.48 | 37.59 | 53.44 | OOM |

|

||||

|

||||

|

||||

|

||||

|

||||

#### A800 7b, fp16

|

||||

|

||||

| batch_size(micro_batch size) | 2(1) | 4(2) | 8(4) | 16(8) | 32(16) |

|

||||

| :---: | :---: | :---: | :---: | :---: | :---: |

|

||||

| Pipeline Inference| 57.97 | 110.13 | 213.33 | 389.86 | 670.12 |

|

||||

| Hugging Face | 42.44 | 76.5 | 151.97 | 212.88 | 256.13 |

|

||||

|

||||

|

||||

|

||||

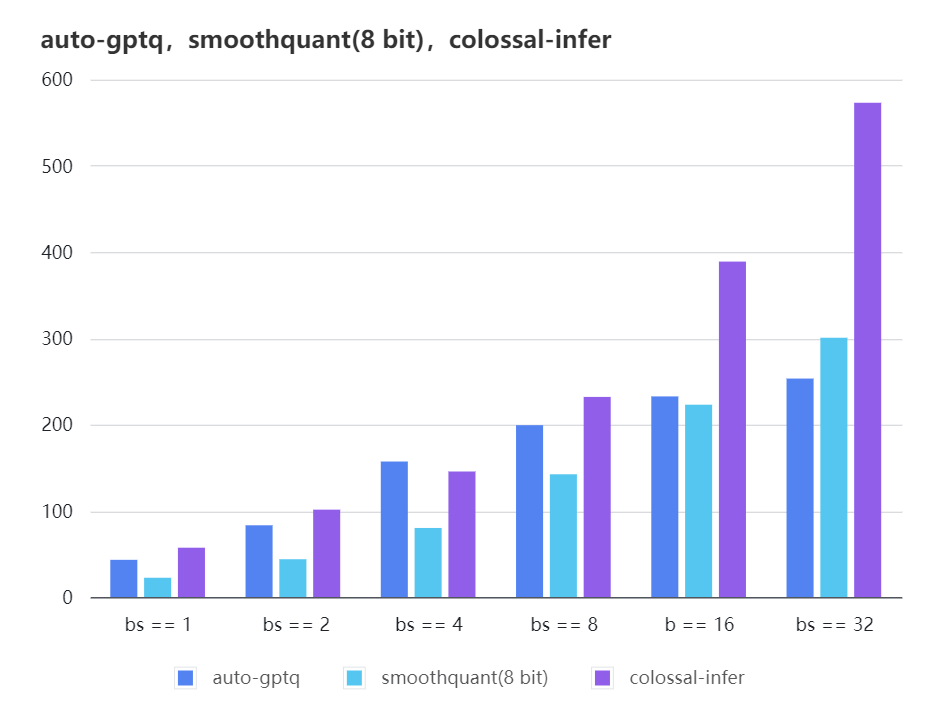

### Quantization LLama

|

||||

|

||||

| batch_size | 8 | 16 | 32 |

|

||||

| :---------------------: | :----: | :----: | :----: |

|

||||

| auto-gptq | 199.20 | 232.56 | 253.26 |

|

||||

| smooth-quant | 142.28 | 222.96 | 300.59 |

|

||||

| colossal-gptq | 231.98 | 388.87 | 573.03 |

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

The results of more models are coming soon!

|

||||

|

|

@ -1,4 +0,0 @@

|

|||

from .engine import InferenceEngine

|

||||

from .engine.policies import BloomModelInferPolicy, ChatGLM2InferPolicy, LlamaModelInferPolicy

|

||||

|

||||

__all__ = ["InferenceEngine", "LlamaModelInferPolicy", "BloomModelInferPolicy", "ChatGLM2InferPolicy"]

|

||||

|

|

@ -0,0 +1,73 @@

|

|||

from logging import Logger

|

||||

from typing import Optional

|

||||

|

||||

from .request_handler import RequestHandler

|

||||

|

||||

|

||||

class InferEngine:

|

||||

"""

|

||||

InferEngine is the core component for Inference.

|

||||

|

||||

It is responsible for launch the inference process, including:

|

||||

- Initialize model and distributed training environment(if needed)

|

||||

- Launch request_handler and corresponding kv cache manager

|

||||

- Receive requests and generate texts.

|

||||

- Log the generation process

|

||||

|

||||

Args:

|

||||

colossal_config: We provide a unified config api for that wrapped all the configs. You can use it to replace the below configs.

|

||||

model_config : The configuration for the model.

|

||||

parallel_config: The configuration for parallelize model.

|

||||

cache_config : Configuration for initialize and manage kv cache.

|

||||

tokenizer (Tokenizer): The tokenizer to be used for inference.

|

||||

use_logger (bool): Determine whether or not to log the generation process.

|

||||

"""

|

||||

|

||||

def __init__(

|

||||

self,

|

||||

model_config,

|

||||

cache_config,

|

||||

parallel_config,

|

||||

tokenizer,

|

||||

use_logger: bool = False,

|

||||

colossal_config: Optional["ColossalInferConfig"] = None,

|

||||

) -> None:

|

||||

assert colossal_config or (

|

||||

model_config and cache_config and parallel_config

|

||||

), "Please provide colossal_config or model_config, cache_config, parallel_config"

|

||||

if colossal_config:

|

||||

model_config, cache_config, parallel_config = colossal_config

|

||||

|

||||

self.model_config = model_config

|

||||

self.cache_config = cache_config

|

||||

self.parallel_config = parallel_config

|

||||

self._verify_config()

|

||||

|

||||

self._init_model()

|

||||

self.request_handler = RequestHandler(cache_config)

|

||||

if use_logger:

|

||||

self.logger = Logger()

|

||||

|

||||

def _init_model(self):

|

||||

"""

|

||||

Initialize model and distributed training environment(if needed).

|

||||

May need to provide two different initialization methods:

|

||||

1. 用户自定义(from local path)

|

||||

2. 从checkpoint加载(hugging face)

|

||||

"""

|

||||

|

||||

def _verify_config(self):

|

||||

"""

|

||||

Verify the configuration to avoid potential bugs.

|

||||

"""

|

||||

|

||||

def generate(self):

|

||||

pass

|

||||

|

||||

def step(self):

|

||||

"""

|

||||

In each step, do the follows:

|

||||

1. Run request_handler to update the kv cache and running input_ids

|

||||

2. Run model to generate the next token

|

||||

3. Check whether there is finied request and decode

|

||||

"""

|

||||

|

|

@ -0,0 +1,10 @@

|

|||

class RequestHandler:

|

||||

def __init__(self, cache_config) -> None:

|

||||

self.cache_config = cache_config

|

||||

self._init_cache()

|

||||

|

||||

def _init_cache(self):

|

||||

pass

|

||||

|

||||

def schedule(self, request):

|

||||

pass

|

||||

|

|

@ -1,3 +0,0 @@

|

|||

from .engine import InferenceEngine

|

||||

|

||||

__all__ = ["InferenceEngine"]

|

||||

|

|

@ -1,195 +0,0 @@

|

|||

from typing import Union

|

||||

|

||||

import torch

|

||||

import torch.distributed as dist

|

||||

import torch.nn as nn

|

||||

from transformers.utils import logging

|

||||

|

||||

from colossalai.cluster import ProcessGroupMesh

|

||||

from colossalai.pipeline.schedule.generate import GenerateSchedule

|

||||

from colossalai.pipeline.stage_manager import PipelineStageManager

|

||||

from colossalai.shardformer import ShardConfig, ShardFormer

|

||||

from colossalai.shardformer.policies.base_policy import Policy

|

||||

|

||||

from ..kv_cache import MemoryManager

|

||||

from .microbatch_manager import MicroBatchManager

|

||||

from .policies import model_policy_map

|

||||

|

||||

PP_AXIS, TP_AXIS = 0, 1

|

||||

|

||||

_supported_models = [

|

||||

"LlamaForCausalLM",

|

||||

"BloomForCausalLM",

|

||||

"LlamaGPTQForCausalLM",

|

||||

"SmoothLlamaForCausalLM",

|

||||

"ChatGLMForConditionalGeneration",

|

||||

]

|

||||

|

||||

|

||||

class InferenceEngine:

|

||||

"""

|

||||

InferenceEngine is a class that handles the pipeline parallel inference.

|

||||

|

||||

Args:

|

||||

tp_size (int): the size of tensor parallelism.

|

||||

pp_size (int): the size of pipeline parallelism.

|

||||

dtype (str): the data type of the model, should be one of 'fp16', 'fp32', 'bf16'.

|

||||

model (`nn.Module`): the model not in pipeline style, and will be modified with `ShardFormer`.

|

||||

model_policy (`colossalai.shardformer.policies.base_policy.Policy`): the policy to shardformer model. It will be determined by the model type if not provided.

|

||||

micro_batch_size (int): the micro batch size. Only useful when `pp_size` > 1.

|

||||

micro_batch_buffer_size (int): the buffer size for micro batch. Normally, it should be the same as the number of pipeline stages.

|

||||

max_batch_size (int): the maximum batch size.

|

||||

max_input_len (int): the maximum input length.

|

||||

max_output_len (int): the maximum output length.

|

||||

quant (str): the quantization method, should be one of 'smoothquant', 'gptq', None.

|

||||

verbose (bool): whether to return the time cost of each step.

|

||||

|

||||

"""

|

||||

|

||||

def __init__(

|

||||

self,

|

||||

tp_size: int = 1,

|

||||

pp_size: int = 1,

|

||||

dtype: str = "fp16",

|

||||

model: nn.Module = None,

|

||||

model_policy: Policy = None,

|

||||

micro_batch_size: int = 1,

|

||||

micro_batch_buffer_size: int = None,

|

||||

max_batch_size: int = 4,

|

||||

max_input_len: int = 32,

|

||||

max_output_len: int = 32,

|

||||

quant: str = None,

|

||||

verbose: bool = False,

|

||||

# TODO: implement early_stopping, and various gerneration options

|

||||

early_stopping: bool = False,

|

||||

do_sample: bool = False,

|

||||

num_beams: int = 1,

|

||||

) -> None:

|

||||

if quant == "gptq":

|

||||

from ..quant.gptq import GPTQManager

|

||||

|

||||

self.gptq_manager = GPTQManager(model.quantize_config, max_input_len=max_input_len)

|

||||

model = model.model

|

||||

elif quant == "smoothquant":

|

||||

model = model.model

|

||||

|

||||

assert model.__class__.__name__ in _supported_models, f"Model {model.__class__.__name__} is not supported."

|

||||

assert (

|

||||

tp_size * pp_size == dist.get_world_size()

|

||||

), f"TP size({tp_size}) * PP size({pp_size}) should be equal to the global world size ({dist.get_world_size()})"

|

||||

assert model, "Model should be provided."

|

||||

assert dtype in ["fp16", "fp32", "bf16"], "dtype should be one of 'fp16', 'fp32', 'bf16'"

|

||||

|

||||

assert max_batch_size <= 64, "Max batch size exceeds the constraint"

|

||||

assert max_input_len + max_output_len <= 4096, "Max length exceeds the constraint"

|

||||

assert quant in ["smoothquant", "gptq", None], "quant should be one of 'smoothquant', 'gptq'"

|

||||

self.pp_size = pp_size

|

||||

self.tp_size = tp_size

|

||||

self.quant = quant

|

||||

|

||||

logger = logging.get_logger(__name__)

|

||||

if quant == "smoothquant" and dtype != "fp32":

|

||||

dtype = "fp32"

|

||||

logger.warning_once("Warning: smoothquant only support fp32 and int8 mix precision. set dtype to fp32")

|

||||

|

||||

if dtype == "fp16":

|

||||

self.dtype = torch.float16

|

||||

model.half()

|

||||

elif dtype == "bf16":

|

||||

self.dtype = torch.bfloat16

|

||||

model.to(torch.bfloat16)

|

||||

else:

|

||||

self.dtype = torch.float32

|

||||

|

||||

if model_policy is None:

|

||||

model_policy = model_policy_map[model.config.model_type]()

|

||||

|

||||

# Init pg mesh

|

||||

pg_mesh = ProcessGroupMesh(pp_size, tp_size)

|

||||

|

||||

stage_manager = PipelineStageManager(pg_mesh, PP_AXIS, True if pp_size * tp_size > 1 else False)

|

||||

self.cache_manager_list = [

|

||||

self._init_manager(model, max_batch_size, max_input_len, max_output_len)

|

||||

for _ in range(micro_batch_buffer_size or pp_size)

|

||||

]

|

||||

self.mb_manager = MicroBatchManager(

|

||||

stage_manager.stage,

|

||||

micro_batch_size,

|

||||

micro_batch_buffer_size or pp_size,

|

||||

max_input_len,

|

||||

max_output_len,

|

||||

self.cache_manager_list,

|

||||

)

|

||||

self.verbose = verbose

|

||||

self.schedule = GenerateSchedule(stage_manager, self.mb_manager, verbose)

|

||||

|

||||

self.model = self._shardformer(

|

||||

model, model_policy, stage_manager, pg_mesh.get_group_along_axis(TP_AXIS) if pp_size * tp_size > 1 else None

|

||||

)

|

||||

if quant == "gptq":

|

||||

self.gptq_manager.post_init_gptq_buffer(self.model)

|

||||

|

||||

def generate(self, input_list: Union[list, dict]):

|

||||

"""

|

||||

Args:

|

||||

input_list (list): a list of input data, each element is a `BatchEncoding` or `dict`.

|

||||

|

||||

Returns:

|

||||

out (list): a list of output data, each element is a list of token.

|

||||

timestamp (float): the time cost of the inference, only return when verbose is `True`.

|

||||

"""

|

||||

|

||||

out, timestamp = self.schedule.generate_step(self.model, iter([input_list]))

|

||||

if self.verbose:

|

||||

return out, timestamp

|

||||

else:

|

||||

return out

|

||||

|

||||

def _shardformer(self, model, model_policy, stage_manager, tp_group):

|

||||

shardconfig = ShardConfig(

|

||||

tensor_parallel_process_group=tp_group,

|

||||

pipeline_stage_manager=stage_manager,

|

||||

enable_tensor_parallelism=(self.tp_size > 1),

|

||||

enable_fused_normalization=False,

|

||||

enable_all_optimization=False,

|

||||

enable_flash_attention=False,

|

||||

enable_jit_fused=False,

|

||||

enable_sequence_parallelism=False,

|

||||

extra_kwargs={"quant": self.quant},

|

||||

)

|

||||

shardformer = ShardFormer(shard_config=shardconfig)

|

||||

shard_model, _ = shardformer.optimize(model, model_policy)

|

||||

return shard_model.cuda()

|

||||

|

||||

def _init_manager(self, model, max_batch_size: int, max_input_len: int, max_output_len: int) -> None:

|

||||

max_total_token_num = max_batch_size * (max_input_len + max_output_len)

|

||||

if model.config.model_type == "llama":

|

||||

head_dim = model.config.hidden_size // model.config.num_attention_heads

|

||||

head_num = model.config.num_key_value_heads // self.tp_size

|

||||

num_hidden_layers = (

|

||||

model.config.num_hidden_layers

|

||||

if hasattr(model.config, "num_hidden_layers")

|

||||

else model.config.num_layers

|

||||

)

|

||||

layer_num = num_hidden_layers // self.pp_size

|

||||

elif model.config.model_type == "bloom":

|

||||

head_dim = model.config.hidden_size // model.config.n_head

|

||||

head_num = model.config.n_head // self.tp_size

|

||||

num_hidden_layers = model.config.n_layer

|

||||

layer_num = num_hidden_layers // self.pp_size

|

||||

elif model.config.model_type == "chatglm":

|

||||

head_dim = model.config.hidden_size // model.config.num_attention_heads

|

||||

if model.config.multi_query_attention:

|

||||

head_num = model.config.multi_query_group_num // self.tp_size

|

||||

else:

|

||||

head_num = model.config.num_attention_heads // self.tp_size

|

||||

num_hidden_layers = model.config.num_layers

|

||||

layer_num = num_hidden_layers // self.pp_size

|

||||

else:

|

||||

raise NotImplementedError("Only support llama, bloom and chatglm model.")

|

||||

|

||||

if self.quant == "smoothquant":

|

||||

cache_manager = MemoryManager(max_total_token_num, torch.int8, head_num, head_dim, layer_num)

|

||||

else:

|

||||

cache_manager = MemoryManager(max_total_token_num, self.dtype, head_num, head_dim, layer_num)

|

||||

return cache_manager

|

||||

|

|

@ -1,248 +0,0 @@

|

|||

from enum import Enum

|

||||

from typing import Dict

|

||||

|

||||

import torch

|

||||

|

||||

from ..kv_cache import BatchInferState, MemoryManager

|

||||

|

||||

__all__ = "MicroBatchManager"

|

||||

|

||||

|

||||

class Status(Enum):

|

||||

PREFILL = 1

|

||||

GENERATE = 2

|

||||

DONE = 3

|

||||

COOLDOWN = 4

|

||||

|

||||

|

||||

class MicroBatchDescription:

|

||||

"""

|

||||

This is the class to record the infomation of each microbatch, and also do some update operation.

|

||||

This clase is the base class of `HeadMicroBatchDescription` and `BodyMicroBatchDescription`, for more

|

||||

details, please refer to the doc of these two classes blow.

|

||||

|

||||

Args:

|

||||

inputs_dict (Dict[str, torch.Tensor]): the inputs of current stage. The key should have `input_ids` and `attention_mask`.

|

||||

output_dict (Dict[str, torch.Tensor]): the outputs of previous stage. The key should have `hidden_states` and `past_key_values`.

|

||||

"""

|

||||

|

||||

def __init__(

|

||||

self,

|

||||

inputs_dict: Dict[str, torch.Tensor],

|

||||

max_input_len: int,

|

||||

max_output_len: int,

|

||||

cache_manager: MemoryManager,

|

||||

) -> None:

|

||||

self.mb_length = inputs_dict["input_ids"].shape[-1]

|

||||

self.target_length = self.mb_length + max_output_len

|

||||

self.infer_state = BatchInferState.init_from_batch(

|

||||

batch=inputs_dict, max_input_len=max_input_len, max_output_len=max_output_len, cache_manager=cache_manager

|

||||

)

|

||||

# print(f"[init] {inputs_dict}, {max_input_len}, {max_output_len}, {cache_manager}, {self.infer_state}")

|

||||

|

||||

def update(self, *args, **kwargs):

|

||||

pass

|

||||

|

||||

@property

|

||||

def state(self):

|

||||

"""

|

||||

Return the state of current micro batch, when current length is equal to target length,

|

||||

the state is DONE, otherwise GENERATE

|

||||

|

||||

"""

|

||||

# TODO: add the condition for early stopping

|

||||

if self.cur_length == self.target_length:

|

||||

return Status.DONE

|

||||

elif self.cur_length == self.target_length - 1:

|

||||

return Status.COOLDOWN

|

||||

else:

|

||||

return Status.GENERATE

|

||||

|

||||

@property

|

||||

def cur_length(self):

|

||||

"""

|

||||

Return the current sequnence length of micro batch

|

||||

|

||||

"""

|

||||

|

||||

|

||||

class HeadMicroBatchDescription(MicroBatchDescription):

|

||||

"""

|

||||

This class is used to record the infomation of the first stage of pipeline, the first stage should have attributes `input_ids` and `attention_mask`

|

||||

and `new_tokens`, and the `new_tokens` is the tokens generated by the first stage. Also due to the schdule of pipeline, the operation to update the

|

||||

information and the condition to determine the state is different from other stages.

|

||||

|

||||

Args:

|

||||

inputs_dict (Dict[str, torch.Tensor]): the inputs of current stage. The key should have `input_ids` and `attention_mask`.

|

||||

output_dict (Dict[str, torch.Tensor]): the outputs of previous stage. The key should have `hidden_states` and `past_key_values`.

|

||||

|

||||

"""

|

||||

|

||||

def __init__(

|

||||

self,

|

||||

inputs_dict: Dict[str, torch.Tensor],

|

||||

max_input_len: int,

|

||||

max_output_len: int,

|

||||

cache_manager: MemoryManager,

|

||||

) -> None:

|

||||

super().__init__(inputs_dict, max_input_len, max_output_len, cache_manager)

|

||||

assert inputs_dict is not None

|

||||

assert inputs_dict.get("input_ids") is not None and inputs_dict.get("attention_mask") is not None

|

||||

self.input_ids = inputs_dict["input_ids"]

|

||||

self.attn_mask = inputs_dict["attention_mask"]

|

||||

self.new_tokens = None

|

||||

|

||||

def update(self, new_token: torch.Tensor = None):

|

||||

if new_token is not None:

|

||||

self._update_newtokens(new_token)

|

||||

if self.state is not Status.DONE and new_token is not None:

|

||||

self._update_attnmask()

|

||||

|

||||

def _update_newtokens(self, new_token: torch.Tensor):

|

||||

if self.new_tokens is None:

|

||||

self.new_tokens = new_token

|

||||

else:

|

||||

self.new_tokens = torch.cat([self.new_tokens, new_token], dim=-1)

|

||||

|

||||

def _update_attnmask(self):

|

||||

self.attn_mask = torch.cat(

|

||||

(self.attn_mask, torch.ones((self.attn_mask.shape[0], 1), dtype=torch.int64, device="cuda")), dim=-1

|

||||

)

|

||||

|

||||

@property

|

||||

def cur_length(self):

|

||||

"""

|

||||

When there is no new_token, the length is mb_length, otherwise the sequence length is `mb_length` plus the length of new_token

|

||||

|

||||

"""

|

||||

if self.new_tokens is None:

|

||||

return self.mb_length

|

||||

else:

|

||||

return self.mb_length + len(self.new_tokens[0])

|

||||

|

||||

|

||||

class BodyMicroBatchDescription(MicroBatchDescription):

|

||||

"""

|

||||

This class is used to record the infomation of the stages except the first stage of pipeline, the stages should have attributes `hidden_states` and `past_key_values`,

|

||||

|

||||

Args:

|

||||

inputs_dict (Dict[str, torch.Tensor]): will always be `None`. Other stages only receive hiddenstates from previous stage.

|

||||

"""

|

||||

|

||||

def __init__(

|

||||

self,

|

||||

inputs_dict: Dict[str, torch.Tensor],

|

||||

max_input_len: int,

|

||||

max_output_len: int,

|

||||

cache_manager: MemoryManager,

|

||||

) -> None:

|

||||

super().__init__(inputs_dict, max_input_len, max_output_len, cache_manager)

|

||||

|

||||

@property

|

||||

def cur_length(self):

|

||||

"""

|

||||

When there is no kv_cache, the length is mb_length, otherwise the sequence length is `kv_cache[0][0].shape[-2]` plus 1

|

||||

|

||||

"""

|

||||

return self.infer_state.seq_len.max().item()

|

||||

|

||||

|

||||

class MicroBatchManager:

|

||||

"""

|

||||

MicroBatchManager is a class that manages the micro batch.

|

||||

|

||||

Args:

|

||||

stage (int): stage id of current stage.

|

||||

micro_batch_size (int): the micro batch size.

|

||||

micro_batch_buffer_size (int): the buffer size for micro batch. Normally, it should be the same as the number of pipeline stages.

|

||||

|

||||

"""

|

||||

|

||||

def __init__(

|

||||

self,

|

||||

stage: int,

|

||||

micro_batch_size: int,

|

||||

micro_batch_buffer_size: int,

|

||||

max_input_len: int,

|

||||

max_output_len: int,

|

||||

cache_manager_list: MemoryManager,

|

||||

):

|

||||

self.stage = stage

|

||||

self.micro_batch_size = micro_batch_size

|

||||

self.buffer_size = micro_batch_buffer_size

|

||||

self.max_input_len = max_input_len

|

||||

self.max_output_len = max_output_len

|

||||

self.cache_manager_list = cache_manager_list

|

||||

self.mb_descrption_buffer = {}

|

||||

self.new_tokens_buffer = {}

|

||||

self.idx = 0

|

||||

|

||||

def add_descrption(self, inputs_dict: Dict[str, torch.Tensor]):

|

||||

if self.stage == 0:

|

||||

self.mb_descrption_buffer[self.idx] = HeadMicroBatchDescription(

|

||||

inputs_dict, self.max_input_len, self.max_output_len, self.cache_manager_list[self.idx]

|

||||

)

|

||||

else:

|

||||

self.mb_descrption_buffer[self.idx] = BodyMicroBatchDescription(

|

||||

inputs_dict, self.max_input_len, self.max_output_len, self.cache_manager_list[self.idx]

|

||||

)

|

||||

|

||||

def step(self, new_token: torch.Tensor = None):

|

||||

"""

|

||||

Update the state if microbatch manager, 2 conditions.

|

||||

1. For first stage in PREFILL, receive inputs and outputs, `_add_descrption` will save its inputs.

|

||||

2. For other conditon, only receive the output of previous stage, and update the descrption.

|

||||

|

||||

Args:

|

||||

inputs_dict (Dict[str, torch.Tensor]): the inputs of current stage. The key should have `input_ids` and `attention_mask`.

|

||||

output_dict (Dict[str, torch.Tensor]): the outputs of previous stage. The key should have `hidden_states` and `past_key_values`.

|

||||

new_token (torch.Tensor): the new token generated by current stage.

|

||||

"""

|

||||

# Add descrption first if the descrption is None

|

||||

self.cur_descrption.update(new_token)

|

||||

return self.cur_state

|

||||

|

||||

def export_new_tokens(self):

|

||||

new_tokens_list = []

|

||||

for i in self.mb_descrption_buffer.values():

|

||||

new_tokens_list.extend(i.new_tokens.tolist())

|

||||

return new_tokens_list

|

||||

|

||||

def is_micro_batch_done(self):

|

||||

if len(self.mb_descrption_buffer) == 0:

|

||||

return False

|

||||

for mb in self.mb_descrption_buffer.values():

|

||||

if mb.state != Status.DONE:

|

||||

return False

|

||||

return True

|

||||

|

||||

def clear(self):

|

||||

self.mb_descrption_buffer.clear()

|

||||

for cache in self.cache_manager_list:

|

||||

cache.free_all()

|

||||

|

||||

def next(self):

|

||||

self.idx = (self.idx + 1) % self.buffer_size

|

||||

|

||||

def _remove_descrption(self):

|

||||

self.mb_descrption_buffer.pop(self.idx)

|

||||

|

||||

@property

|

||||

def cur_descrption(self) -> MicroBatchDescription:

|

||||

return self.mb_descrption_buffer.get(self.idx)

|

||||

|

||||

@property

|

||||

def cur_infer_state(self):

|

||||

if self.cur_descrption is None:

|

||||

return None

|

||||

return self.cur_descrption.infer_state

|

||||

|

||||

@property

|

||||

def cur_state(self):

|

||||

"""

|

||||

Return the state of current micro batch, when current descrption is None, the state is PREFILL

|

||||

|

||||

"""

|

||||

if self.cur_descrption is None:

|

||||

return Status.PREFILL

|

||||

return self.cur_descrption.state

|

||||

|

|

@ -1,5 +0,0 @@

|

|||

from .bloom import BloomInferenceForwards

|

||||

from .chatglm2 import ChatGLM2InferenceForwards

|

||||

from .llama import LlamaInferenceForwards

|

||||

|

||||

__all__ = ["LlamaInferenceForwards", "BloomInferenceForwards", "ChatGLM2InferenceForwards"]

|

||||

|

|

@ -1,67 +0,0 @@

|

|||

"""

|

||||

Utils for model inference

|

||||

"""

|

||||

import os

|

||||

|

||||

import torch

|

||||

|

||||

from colossalai.kernel.triton.copy_kv_cache_dest import copy_kv_cache_to_dest

|

||||

|

||||

|

||||

def copy_kv_to_mem_cache(layer_id, key_buffer, value_buffer, context_mem_index, mem_manager):

|

||||

"""

|

||||

This function copies the key and value cache to the memory cache

|

||||

Args:

|

||||

layer_id : id of current layer

|

||||

key_buffer : key cache

|

||||

value_buffer : value cache

|

||||

context_mem_index : index of memory cache in kv cache manager

|

||||

mem_manager : cache manager

|

||||

"""

|

||||

copy_kv_cache_to_dest(key_buffer, context_mem_index, mem_manager.key_buffer[layer_id])

|

||||

copy_kv_cache_to_dest(value_buffer, context_mem_index, mem_manager.value_buffer[layer_id])

|

||||

|

||||

|

||||

def init_to_get_rotary(self, base=10000, use_elem=False):

|

||||

"""

|

||||

This function initializes the rotary positional embedding, it is compatible for all models and is called in ShardFormer

|

||||

Args:

|

||||

self : Model that holds the rotary positional embedding

|

||||

base : calculation arg

|

||||

use_elem : activated when using chatglm-based models

|

||||

"""

|

||||

self.config.head_dim_ = self.config.hidden_size // self.config.num_attention_heads

|

||||

if not hasattr(self.config, "rope_scaling"):

|

||||

rope_scaling_factor = 1.0

|

||||

else:

|

||||

rope_scaling_factor = self.config.rope_scaling.factor if self.config.rope_scaling is not None else 1.0

|

||||

|

||||

if hasattr(self.config, "max_sequence_length"):

|

||||

max_seq_len = self.config.max_sequence_length

|

||||

elif hasattr(self.config, "max_position_embeddings"):

|

||||

max_seq_len = self.config.max_position_embeddings * rope_scaling_factor

|

||||

else:

|

||||

max_seq_len = 2048 * rope_scaling_factor

|

||||

base = float(base)

|

||||

|

||||

# NTK ref: https://www.reddit.com/r/LocalLLaMA/comments/14lz7j5/ntkaware_scaled_rope_allows_llama_models_to_have/

|

||||

ntk_alpha = os.environ.get("INFER_NTK_ALPHA", None)

|

||||

|

||||

if ntk_alpha is not None:

|

||||

ntk_alpha = float(ntk_alpha)

|

||||

assert ntk_alpha >= 1, "NTK alpha must be greater than or equal to 1"

|

||||

if ntk_alpha > 1:

|

||||

print(f"Note: NTK enabled, alpha set to {ntk_alpha}")

|

||||

max_seq_len *= ntk_alpha

|

||||

base = base * (ntk_alpha ** (self.head_dim_ / (self.head_dim_ - 2))) # Base change formula

|

||||

|

||||

n_elem = self.config.head_dim_

|

||||

if use_elem:

|

||||

n_elem //= 2

|

||||

|

||||

inv_freq = 1.0 / (base ** (torch.arange(0, n_elem, 2, device="cpu", dtype=torch.float32) / n_elem))

|

||||

t = torch.arange(max_seq_len + 1024 * 64, device="cpu", dtype=torch.float32) / rope_scaling_factor

|

||||

freqs = torch.outer(t, inv_freq)

|

||||

|

||||

self._cos_cached = torch.cos(freqs).to(torch.float16).cuda()

|

||||

self._sin_cached = torch.sin(freqs).to(torch.float16).cuda()

|

||||

|

|

@ -1,452 +0,0 @@

|

|||

import math

|

||||

import warnings

|

||||

from typing import List, Optional, Tuple, Union

|

||||

|

||||

import torch

|

||||

import torch.distributed as dist

|

||||

from torch.nn import functional as F

|

||||

from transformers.models.bloom.modeling_bloom import (

|

||||

BaseModelOutputWithPastAndCrossAttentions,

|

||||

BloomAttention,

|

||||

BloomBlock,

|

||||

BloomForCausalLM,

|

||||

BloomModel,

|

||||

)

|

||||

from transformers.utils import logging

|

||||

|

||||

from colossalai.inference.kv_cache.batch_infer_state import BatchInferState

|

||||

from colossalai.kernel.triton import bloom_context_attn_fwd, copy_kv_cache_to_dest, token_attention_fwd

|

||||

from colossalai.pipeline.stage_manager import PipelineStageManager

|

||||

|

||||

try:

|

||||

from lightllm.models.bloom.triton_kernel.context_flashattention_nopad import (

|

||||

context_attention_fwd as lightllm_bloom_context_attention_fwd,

|

||||

)

|

||||

|

||||

HAS_LIGHTLLM_KERNEL = True

|

||||

except:

|

||||

HAS_LIGHTLLM_KERNEL = False

|

||||

|

||||

|

||||

def generate_alibi(n_head, dtype=torch.float16):

|

||||

"""

|

||||

This method is adapted from `_generate_alibi` function

|

||||

in `lightllm/models/bloom/layer_weights/transformer_layer_weight.py`

|

||||

of the ModelTC/lightllm GitHub repository.

|

||||

This method is originally the `build_alibi_tensor` function

|

||||

in `transformers/models/bloom/modeling_bloom.py`

|

||||

of the huggingface/transformers GitHub repository.

|

||||

"""

|

||||

|

||||

def get_slopes_power_of_2(n):

|

||||

start = 2 ** (-(2 ** -(math.log2(n) - 3)))

|

||||

return [start * start**i for i in range(n)]

|

||||

|

||||

def get_slopes(n):

|

||||

if math.log2(n).is_integer():

|

||||

return get_slopes_power_of_2(n)

|

||||

else:

|

||||

closest_power_of_2 = 2 ** math.floor(math.log2(n))

|

||||

slopes_power_of_2 = get_slopes_power_of_2(closest_power_of_2)

|

||||

slopes_double = get_slopes(2 * closest_power_of_2)

|

||||

slopes_combined = slopes_power_of_2 + slopes_double[0::2][: n - closest_power_of_2]

|

||||

return slopes_combined

|

||||

|

||||

slopes = get_slopes(n_head)

|

||||

return torch.tensor(slopes, dtype=dtype)

|

||||

|

||||

|

||||

class BloomInferenceForwards:

|

||||

"""

|

||||

This class serves a micro library for bloom inference forwards.

|

||||

We intend to replace the forward methods for BloomForCausalLM, BloomModel, BloomBlock, and BloomAttention,

|

||||

as well as prepare_inputs_for_generation method for BloomForCausalLM.

|

||||

For future improvement, we might want to skip replacing methods for BloomForCausalLM,

|

||||

and call BloomModel.forward iteratively in TpInferEngine

|

||||

"""

|

||||

|

||||

@staticmethod

|

||||

def bloom_for_causal_lm_forward(

|

||||

self: BloomForCausalLM,

|

||||

input_ids: Optional[torch.LongTensor] = None,

|

||||

past_key_values: Optional[Tuple[Tuple[torch.Tensor, torch.Tensor], ...]] = None,

|

||||

attention_mask: Optional[torch.Tensor] = None,

|

||||

head_mask: Optional[torch.Tensor] = None,

|

||||

inputs_embeds: Optional[torch.Tensor] = None,

|

||||

labels: Optional[torch.Tensor] = None,

|

||||

use_cache: Optional[bool] = False,

|

||||

output_attentions: Optional[bool] = False,

|

||||

output_hidden_states: Optional[bool] = False,

|

||||

return_dict: Optional[bool] = False,

|

||||

infer_state: BatchInferState = None,

|

||||

stage_manager: Optional[PipelineStageManager] = None,

|

||||

hidden_states: Optional[torch.FloatTensor] = None,

|

||||

stage_index: Optional[List[int]] = None,

|

||||

tp_group: Optional[dist.ProcessGroup] = None,

|

||||

**deprecated_arguments,

|

||||

):

|

||||

r"""

|

||||

labels (`torch.LongTensor` of shape `(batch_size, sequence_length)`, *optional*):

|

||||

Labels for language modeling. Note that the labels **are shifted** inside the model, i.e. you can set

|

||||

`labels = input_ids` Indices are selected in `[-100, 0, ..., config.vocab_size]` All labels set to `-100`

|

||||

are ignored (masked), the loss is only computed for labels in `[0, ..., config.vocab_size]`

|

||||

"""

|

||||

logger = logging.get_logger(__name__)

|

||||

|

||||

if deprecated_arguments.pop("position_ids", False) is not False:

|

||||

# `position_ids` could have been `torch.Tensor` or `None` so defaulting pop to `False` allows to detect if users were passing explicitly `None`

|

||||

warnings.warn(

|

||||

"`position_ids` have no functionality in BLOOM and will be removed in v5.0.0. You can safely ignore"

|

||||

" passing `position_ids`.",

|

||||

FutureWarning,

|

||||

)

|

||||

|

||||

# TODO(jianghai): left the recording kv-value tensors as () or None type, this feature may be added in the future.

|

||||

if output_attentions:

|

||||

logger.warning_once("output_attentions=True is not supported for pipeline models at the moment.")

|

||||

output_attentions = False

|

||||

if output_hidden_states:

|

||||

logger.warning_once("output_hidden_states=True is not supported for pipeline models at the moment.")

|

||||

output_hidden_states = False

|

||||

|

||||

# If is first stage and hidden_states is not None, go throught lm_head first

|

||||

if stage_manager.is_first_stage() and hidden_states is not None:

|

||||

lm_logits = self.lm_head(hidden_states)

|

||||

return {"logits": lm_logits}

|

||||

|

||||

outputs = BloomInferenceForwards.bloom_model_forward(

|

||||

self.transformer,

|

||||

input_ids,

|

||||

past_key_values=past_key_values,

|

||||

attention_mask=attention_mask,

|

||||

head_mask=head_mask,

|

||||

inputs_embeds=inputs_embeds,

|

||||

use_cache=use_cache,

|

||||

output_attentions=output_attentions,

|

||||

output_hidden_states=output_hidden_states,

|

||||

return_dict=return_dict,

|

||||

infer_state=infer_state,

|

||||

stage_manager=stage_manager,

|

||||

hidden_states=hidden_states,

|

||||

stage_index=stage_index,

|

||||

tp_group=tp_group,

|

||||

)

|

||||

|

||||

return outputs

|

||||

|

||||

@staticmethod

|

||||

def bloom_model_forward(

|

||||

self: BloomModel,

|

||||

input_ids: Optional[torch.LongTensor] = None,

|

||||

past_key_values: Optional[Tuple[Tuple[torch.Tensor, torch.Tensor], ...]] = None,

|

||||

attention_mask: Optional[torch.Tensor] = None,

|

||||

head_mask: Optional[torch.LongTensor] = None,

|

||||

inputs_embeds: Optional[torch.LongTensor] = None,

|

||||

use_cache: Optional[bool] = None,

|

||||

output_attentions: Optional[bool] = None,

|

||||

output_hidden_states: Optional[bool] = False,

|

||||

return_dict: Optional[bool] = None,

|

||||

infer_state: BatchInferState = None,

|

||||

stage_manager: Optional[PipelineStageManager] = None,

|

||||

hidden_states: Optional[torch.FloatTensor] = None,

|

||||

stage_index: Optional[List[int]] = None,

|

||||

tp_group: Optional[dist.ProcessGroup] = None,

|

||||

**deprecated_arguments,

|

||||

) -> Union[Tuple[torch.Tensor, ...], BaseModelOutputWithPastAndCrossAttentions]:

|

||||

logger = logging.get_logger(__name__)

|

||||

|

||||

# add warnings here

|

||||

if output_attentions:

|

||||

logger.warning_once("output_attentions=True is not supported for pipeline models at the moment.")

|

||||

output_attentions = False

|

||||

if output_hidden_states:

|

||||

logger.warning_once("output_hidden_states=True is not supported for pipeline models at the moment.")

|

||||

output_hidden_states = False

|

||||

if use_cache:

|

||||

logger.warning_once("use_cache=True is not supported for pipeline models at the moment.")

|

||||

use_cache = False

|

||||

|

||||

if deprecated_arguments.pop("position_ids", False) is not False:

|

||||

# `position_ids` could have been `torch.Tensor` or `None` so defaulting pop to `False` allows to detect if users were passing explicitly `None`

|

||||

warnings.warn(

|

||||

"`position_ids` have no functionality in BLOOM and will be removed in v5.0.0. You can safely ignore"

|

||||

" passing `position_ids`.",

|

||||

FutureWarning,

|

||||

)

|

||||

if len(deprecated_arguments) > 0:

|

||||

raise ValueError(f"Got unexpected arguments: {deprecated_arguments}")

|

||||

|

||||

# Prepare head mask if needed

|

||||

# 1.0 in head_mask indicate we keep the head

|

||||

# attention_probs has shape batch_size x num_heads x N x N

|

||||

# head_mask has shape n_layer x batch x num_heads x N x N

|

||||

head_mask = self.get_head_mask(head_mask, self.config.n_layer)

|

||||

|

||||

# first stage

|

||||

if stage_manager.is_first_stage():

|

||||

# check inputs and inputs embeds

|

||||

if input_ids is not None and inputs_embeds is not None:

|

||||

raise ValueError("You cannot specify both input_ids and inputs_embeds at the same time")

|

||||

elif input_ids is not None:

|

||||

batch_size, seq_length = input_ids.shape

|

||||

elif inputs_embeds is not None:

|

||||

batch_size, seq_length, _ = inputs_embeds.shape

|

||||

else:

|

||||

raise ValueError("You have to specify either input_ids or inputs_embeds")

|

||||

|

||||

if inputs_embeds is None:

|

||||

inputs_embeds = self.word_embeddings(input_ids)

|

||||

|

||||

hidden_states = self.word_embeddings_layernorm(inputs_embeds)

|

||||

# other stage

|

||||

else:

|

||||

input_shape = hidden_states.shape[:-1]

|

||||

batch_size, seq_length = input_shape

|

||||

|

||||

if infer_state.is_context_stage:

|

||||

past_key_values_length = 0

|

||||

else:

|

||||

past_key_values_length = infer_state.max_len_in_batch - 1

|

||||

|

||||

if seq_length != 1:

|

||||

# prefill stage

|

||||

infer_state.is_context_stage = True # set prefill stage, notify attention layer

|

||||

infer_state.context_mem_index = infer_state.cache_manager.alloc(infer_state.total_token_num)

|

||||

BatchInferState.init_block_loc(

|

||||

infer_state.block_loc, infer_state.seq_len, seq_length, infer_state.context_mem_index

|

||||

)

|

||||

else:

|

||||

infer_state.is_context_stage = False

|

||||

alloc_mem = infer_state.cache_manager.alloc_contiguous(batch_size)

|

||||

if alloc_mem is not None:

|

||||

infer_state.decode_is_contiguous = True

|

||||

infer_state.decode_mem_index = alloc_mem[0]

|

||||

infer_state.decode_mem_start = alloc_mem[1]

|

||||

infer_state.decode_mem_end = alloc_mem[2]

|

||||

infer_state.block_loc[:, infer_state.max_len_in_batch - 1] = infer_state.decode_mem_index

|

||||

else:

|

||||

print(f" *** Encountered allocation non-contiguous")

|

||||

print(f" infer_state.max_len_in_batch : {infer_state.max_len_in_batch}")

|

||||

infer_state.decode_is_contiguous = False

|

||||

alloc_mem = infer_state.cache_manager.alloc(batch_size)

|

||||

infer_state.decode_mem_index = alloc_mem

|

||||

infer_state.block_loc[:, infer_state.max_len_in_batch - 1] = infer_state.decode_mem_index

|

||||

|

||||

if attention_mask is None:

|

||||

attention_mask = torch.ones((batch_size, infer_state.max_len_in_batch), device=hidden_states.device)

|

||||

else:

|

||||

attention_mask = attention_mask.to(hidden_states.device)

|

||||

|

||||

# NOTE revise: we might want to store a single 1D alibi(length is #heads) in model,

|

||||

# or store to BatchInferState to prevent re-calculating

|

||||

# When we have multiple process group (e.g. dp together with tp), we need to pass the pg to here

|

||||

tp_size = dist.get_world_size(tp_group) if tp_group is not None else 1

|

||||

curr_tp_rank = dist.get_rank(tp_group) if tp_group is not None else 0

|

||||

alibi = (

|

||||

generate_alibi(self.num_heads * tp_size)

|

||||

.contiguous()[curr_tp_rank * self.num_heads : (curr_tp_rank + 1) * self.num_heads]

|

||||

.cuda()

|

||||

)

|

||||

causal_mask = self._prepare_attn_mask(

|

||||

attention_mask,

|

||||

input_shape=(batch_size, seq_length),

|

||||

past_key_values_length=past_key_values_length,

|

||||

)

|

||||

|

||||

infer_state.decode_layer_id = 0

|

||||

|

||||

start_idx, end_idx = stage_index[0], stage_index[1]

|

||||

if past_key_values is None:

|

||||

past_key_values = tuple([None] * (end_idx - start_idx + 1))

|

||||

|

||||

for idx, past_key_value in zip(range(start_idx, end_idx), past_key_values):

|

||||

block = self.h[idx]

|

||||

outputs = block(

|

||||

hidden_states,

|

||||

layer_past=past_key_value,

|

||||

attention_mask=causal_mask,

|

||||

head_mask=head_mask[idx],

|

||||

use_cache=use_cache,

|

||||

output_attentions=output_attentions,

|

||||

alibi=alibi,

|

||||

infer_state=infer_state,

|

||||

)

|

||||

|

||||

infer_state.decode_layer_id += 1

|

||||

hidden_states = outputs[0]

|

||||

|

||||

if stage_manager.is_last_stage() or stage_manager.num_stages == 1:

|

||||

hidden_states = self.ln_f(hidden_states)

|

||||

|

||||

# update indices

|

||||

infer_state.start_loc = infer_state.start_loc + torch.arange(0, batch_size, dtype=torch.int32, device="cuda")

|

||||

infer_state.seq_len += 1

|

||||

infer_state.max_len_in_batch += 1

|

||||

|

||||

# always return dict for imediate stage

|

||||

return {"hidden_states": hidden_states}

|

||||

|

||||

@staticmethod

|

||||

def bloom_block_forward(

|

||||

self: BloomBlock,

|

||||

hidden_states: torch.Tensor,

|

||||

alibi: torch.Tensor,

|

||||

attention_mask: torch.Tensor,

|

||||

layer_past: Optional[Tuple[torch.Tensor, torch.Tensor]] = None,

|

||||

head_mask: Optional[torch.Tensor] = None,

|

||||

use_cache: bool = False,

|

||||

output_attentions: bool = False,

|

||||

infer_state: Optional[BatchInferState] = None,

|

||||

):

|

||||

# hidden_states: [batch_size, seq_length, hidden_size]

|

||||

|

||||

# Layer norm at the beginning of the transformer layer.

|

||||

layernorm_output = self.input_layernorm(hidden_states)

|

||||

|

||||

# Layer norm post the self attention.

|

||||

if self.apply_residual_connection_post_layernorm:

|

||||

residual = layernorm_output

|

||||

else:

|

||||

residual = hidden_states

|

||||

|

||||

# Self attention.

|

||||

attn_outputs = self.self_attention(

|

||||

layernorm_output,

|

||||

residual,

|

||||

layer_past=layer_past,

|

||||

attention_mask=attention_mask,

|

||||

alibi=alibi,

|

||||

head_mask=head_mask,

|

||||

use_cache=use_cache,

|

||||

output_attentions=output_attentions,

|

||||

infer_state=infer_state,

|

||||

)

|

||||

|

||||

attention_output = attn_outputs[0]

|

||||

|

||||

outputs = attn_outputs[1:]

|

||||

|

||||

layernorm_output = self.post_attention_layernorm(attention_output)

|

||||

|

||||

# Get residual

|

||||

if self.apply_residual_connection_post_layernorm:

|

||||

residual = layernorm_output

|

||||

else:

|

||||

residual = attention_output

|

||||

|

||||

# MLP.

|

||||

output = self.mlp(layernorm_output, residual)

|

||||

|

||||

if use_cache:

|

||||

outputs = (output,) + outputs

|

||||

else:

|

||||

outputs = (output,) + outputs[1:]

|

||||

|

||||

return outputs # hidden_states, present, attentions

|

||||

|

||||

@staticmethod

|

||||

def bloom_attention_forward(

|

||||

self: BloomAttention,

|

||||

hidden_states: torch.Tensor,

|

||||

residual: torch.Tensor,

|

||||

alibi: torch.Tensor,

|

||||

attention_mask: torch.Tensor,

|

||||

layer_past: Optional[Tuple[torch.Tensor, torch.Tensor]] = None,

|

||||

head_mask: Optional[torch.Tensor] = None,

|

||||

use_cache: bool = False,

|

||||

output_attentions: bool = False,

|

||||

infer_state: Optional[BatchInferState] = None,

|

||||

):

|

||||

fused_qkv = self.query_key_value(hidden_states) # [batch_size, seq_length, 3 x hidden_size]

|

||||

|

||||

# 3 x [batch_size, seq_length, num_heads, head_dim]

|

||||

(query_layer, key_layer, value_layer) = self._split_heads(fused_qkv)

|

||||

batch_size, q_length, H, D_HEAD = query_layer.shape

|

||||

k = key_layer.reshape(-1, H, D_HEAD) # batch_size * q_length, H, D_HEAD, q_lenth == 1

|

||||

v = value_layer.reshape(-1, H, D_HEAD) # batch_size * q_length, H, D_HEAD, q_lenth == 1

|

||||

|

||||

mem_manager = infer_state.cache_manager

|

||||

layer_id = infer_state.decode_layer_id

|

||||

|

||||

if infer_state.is_context_stage:

|

||||

# context process

|

||||

max_input_len = q_length

|

||||

b_start_loc = infer_state.start_loc

|

||||

b_seq_len = infer_state.seq_len[:batch_size]

|

||||

q = query_layer.reshape(-1, H, D_HEAD)

|

||||

|

||||

copy_kv_cache_to_dest(k, infer_state.context_mem_index, mem_manager.key_buffer[layer_id])

|

||||

copy_kv_cache_to_dest(v, infer_state.context_mem_index, mem_manager.value_buffer[layer_id])

|

||||

|

||||

# output = self.output[:batch_size*q_length, :, :]

|

||||

output = torch.empty_like(q)

|

||||

|

||||

if HAS_LIGHTLLM_KERNEL:

|

||||

lightllm_bloom_context_attention_fwd(q, k, v, output, alibi, b_start_loc, b_seq_len, max_input_len)

|

||||

else:

|

||||

bloom_context_attn_fwd(q, k, v, output, b_start_loc, b_seq_len, max_input_len, alibi)

|

||||

|

||||

context_layer = output.view(batch_size, q_length, H * D_HEAD)

|

||||

else:

|

||||

# query_layer = query_layer.transpose(1, 2).reshape(batch_size * self.num_heads, q_length, self.head_dim)

|

||||

# need shape: batch_size, H, D_HEAD (q_length == 1), input q shape : (batch_size, q_length(1), H, D_HEAD)

|

||||

assert q_length == 1, "for non-context process, we only support q_length == 1"

|

||||

q = query_layer.reshape(-1, H, D_HEAD)

|

||||

|

||||

if infer_state.decode_is_contiguous:

|

||||

# if decode is contiguous, then we copy to key cache and value cache in cache manager directly

|

||||

cache_k = infer_state.cache_manager.key_buffer[layer_id][

|

||||

infer_state.decode_mem_start : infer_state.decode_mem_end, :, :

|

||||

]

|

||||

cache_v = infer_state.cache_manager.value_buffer[layer_id][

|

||||

infer_state.decode_mem_start : infer_state.decode_mem_end, :, :

|

||||

]

|

||||

cache_k.copy_(k)

|

||||

cache_v.copy_(v)

|

||||

else:

|

||||

# if decode is not contiguous, use triton kernel to copy key and value cache

|

||||

# k, v shape: [batch_size, num_heads, head_dim/embed_size_per_head]

|

||||

copy_kv_cache_to_dest(k, infer_state.decode_mem_index, mem_manager.key_buffer[layer_id])

|

||||

copy_kv_cache_to_dest(v, infer_state.decode_mem_index, mem_manager.value_buffer[layer_id])

|

||||

|

||||

b_start_loc = infer_state.start_loc

|

||||

b_loc = infer_state.block_loc

|

||||

b_seq_len = infer_state.seq_len

|

||||

output = torch.empty_like(q)

|

||||

token_attention_fwd(

|

||||

q,

|

||||

mem_manager.key_buffer[layer_id],

|

||||

mem_manager.value_buffer[layer_id],

|

||||

output,

|

||||

b_loc,

|

||||

b_start_loc,

|

||||

b_seq_len,

|

||||

infer_state.max_len_in_batch,

|

||||

alibi,

|

||||

)

|

||||

|

||||

context_layer = output.view(batch_size, q_length, H * D_HEAD)

|

||||

|

||||

# NOTE: always set present as none for now, instead of returning past key value to the next decoding,

|

||||

# we create the past key value pair from the cache manager

|

||||

present = None

|

||||

|

||||

# aggregate results across tp ranks. See here: https://github.com/pytorch/pytorch/issues/76232

|

||||

if self.pretraining_tp > 1 and self.slow_but_exact:

|

||||

slices = self.hidden_size / self.pretraining_tp

|

||||

output_tensor = torch.zeros_like(context_layer)

|

||||

for i in range(self.pretraining_tp):

|

||||

output_tensor = output_tensor + F.linear(

|

||||

context_layer[:, :, int(i * slices) : int((i + 1) * slices)],

|

||||

self.dense.weight[:, int(i * slices) : int((i + 1) * slices)],

|

||||

)

|

||||

else:

|

||||

output_tensor = self.dense(context_layer)

|

||||

|

||||

# dropout is not required here during inference

|

||||

output_tensor = residual + output_tensor

|

||||

|

||||

outputs = (output_tensor, present)

|

||||

assert output_attentions is False, "we do not support output_attentions at this time"

|

||||

|

||||

return outputs

|

||||

|

|

@ -1,492 +0,0 @@

|

|||

from typing import List, Optional, Tuple

|

||||

|

||||

import torch

|

||||

from transformers.utils import logging

|

||||

|

||||

from colossalai.inference.kv_cache import BatchInferState

|

||||

from colossalai.kernel.triton.token_attention_kernel import Llama2TokenAttentionForwards

|

||||

from colossalai.pipeline.stage_manager import PipelineStageManager

|

||||

from colossalai.shardformer import ShardConfig

|

||||

from colossalai.shardformer.modeling.chatglm2_6b.modeling_chatglm import (

|

||||

ChatGLMForConditionalGeneration,

|

||||

ChatGLMModel,

|

||||

GLMBlock,

|

||||

GLMTransformer,

|

||||

SelfAttention,

|

||||

split_tensor_along_last_dim,

|

||||

)

|

||||

|

||||

from ._utils import copy_kv_to_mem_cache

|

||||

|

||||

try:

|

||||

from lightllm.models.chatglm2.triton_kernel.rotary_emb import rotary_emb_fwd as chatglm2_rotary_emb_fwd

|

||||

from lightllm.models.llama2.triton_kernel.context_flashattention_nopad import (

|

||||

context_attention_fwd as lightllm_llama2_context_attention_fwd,

|

||||

)

|

||||

|

||||

HAS_LIGHTLLM_KERNEL = True

|

||||

except:

|

||||

print("please install lightllm from source to run inference: https://github.com/ModelTC/lightllm")

|

||||

HAS_LIGHTLLM_KERNEL = False

|

||||

|

||||

|

||||

def get_masks(self, input_ids, past_length, padding_mask=None):

|

||||

batch_size, seq_length = input_ids.shape

|

||||

full_attention_mask = torch.ones(batch_size, seq_length, seq_length, device=input_ids.device)

|

||||

full_attention_mask.tril_()

|

||||

if past_length:

|

||||

full_attention_mask = torch.cat(

|

||||

(

|

||||

torch.ones(batch_size, seq_length, past_length, device=input_ids.device),

|

||||

full_attention_mask,

|

||||

),

|

||||

dim=-1,

|

||||

)

|

||||

|

||||

if padding_mask is not None:

|

||||

full_attention_mask = full_attention_mask * padding_mask.unsqueeze(1)

|

||||

if not past_length and padding_mask is not None:

|

||||